Methodology Overview (less than 1 page)

Our team's approach to measuring and mitigating bias was informed by commonly used mathematical and statistical methods, our experience developing machine-learning algorithms, and our experience managing, measuring, and transforming data to the FHIR standard for 40M patients at 1upHealth.

To measure bias, we considered the principles of equal opportunity, equalized odds, and differential validity. This approach allowed us to measure retrospective and latent bias present in the output of a given model. If our tool is used to measure a model’s output over time as the model is updated with newer data, it can also measure the change in bias over time.
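For reference, the first two principles are commonly formalized as shown below. The notation is an illustrative assumption rather than something taken from the tool: $\hat{Y}$ is the model's binary prediction, $Y$ the observed outcome, and $A$ the group attribute, with $a$ and $b$ any two groups.

```latex
% Equal opportunity: equal true positive rates across groups
P(\hat{Y}=1 \mid Y=1, A=a) = P(\hat{Y}=1 \mid Y=1, A=b)

% Equalized odds: equal true positive AND false positive rates across groups
P(\hat{Y}=1 \mid Y=y, A=a) = P(\hat{Y}=1 \mid Y=y, A=b), \quad y \in \{0, 1\}
```

Differential validity is usually assessed differently, by comparing a measure of predictive performance across groups rather than by a single equality of this kind.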

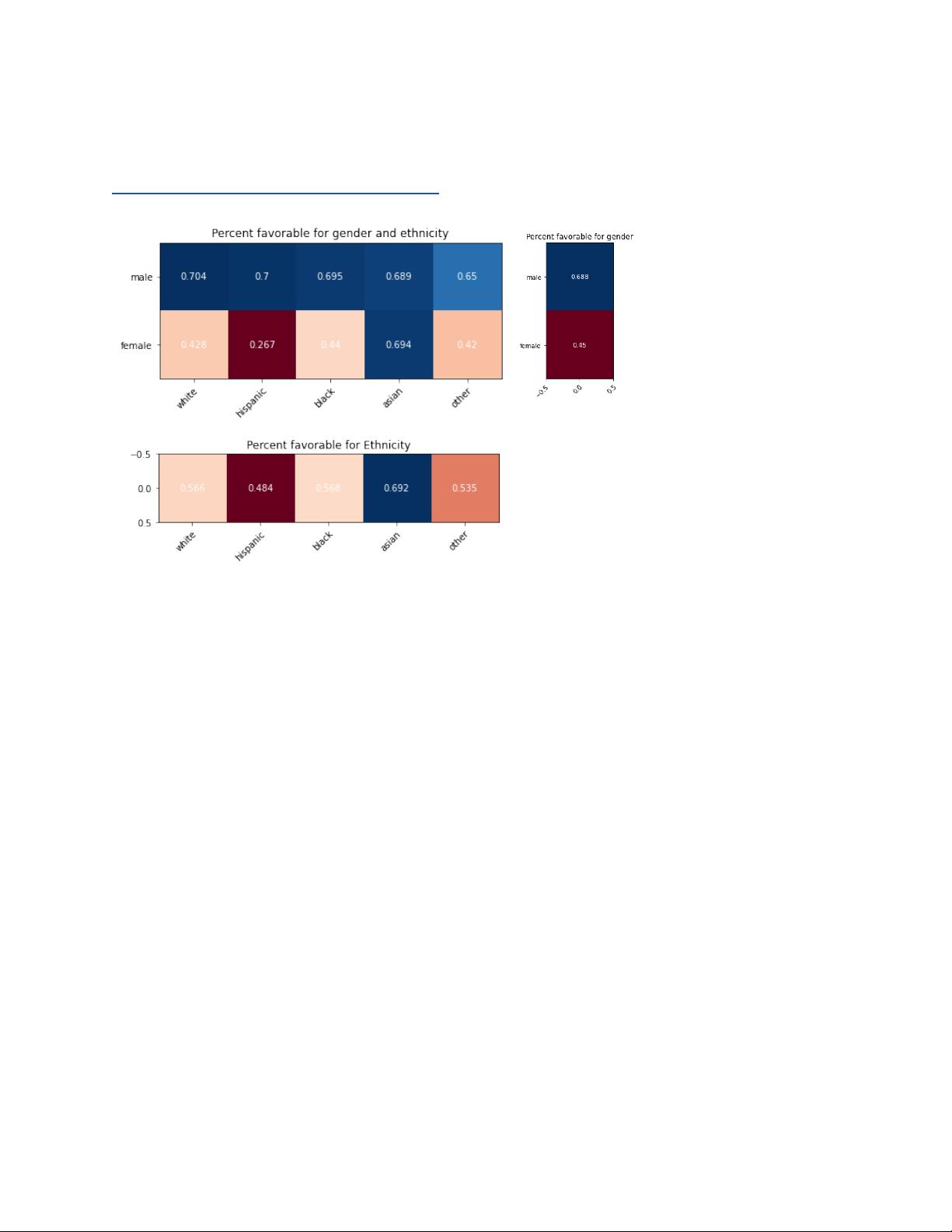

We utilized demographic data in conjunction with the provided binary outcome data to measure bias across the intersection of two group categories: sex/gender and race. While we used binary outcome data to produce these plots, continuous outcome data could also be used.

We calculated the following metrics:

● Equalized odds difference
● Equalized odds ratio
● Demographic parity difference
● Demographic parity ratio
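As a rough illustration of how these four metrics can be computed over intersectional groups, here is a minimal pure-Python sketch. It follows the common worst-case definitions (difference = largest minus smallest group rate, ratio = smallest divided by largest group rate), which we assume match the ones used by the tool. The function names, the toy data, and the group labels are hypothetical.

```python
from collections import defaultdict


def group_rates(y_true, y_pred, groups):
    """Return {group: (selection_rate, tpr, fpr)} for binary labels and predictions.

    Assumes every group contains at least one positive and one negative example.
    """
    counts = defaultdict(lambda: {"n": 0, "sel": 0, "pos": 0, "tp": 0, "neg": 0, "fp": 0})
    for yt, yp, g in zip(y_true, y_pred, groups):
        c = counts[g]
        c["n"] += 1
        c["sel"] += yp
        if yt == 1:
            c["pos"] += 1
            c["tp"] += yp
        else:
            c["neg"] += 1
            c["fp"] += yp
    return {
        g: (c["sel"] / c["n"], c["tp"] / c["pos"], c["fp"] / c["neg"])
        for g, c in counts.items()
    }


def _difference(values):
    return max(values) - min(values)


def _ratio(values):
    largest = max(values)
    # Choice made for this sketch: if every group rate is zero, treat groups as equal.
    return min(values) / largest if largest > 0 else 1.0


def fairness_metrics(y_true, y_pred, groups):
    """Compute the four metrics listed above across all groups."""
    rates = list(group_rates(y_true, y_pred, groups).values())
    selection = [r[0] for r in rates]
    tpr = [r[1] for r in rates]
    fpr = [r[2] for r in rates]
    return {
        "demographic_parity_difference": _difference(selection),
        "demographic_parity_ratio": _ratio(selection),
        # Equalized odds takes the worse of the TPR and FPR disparities.
        "equalized_odds_difference": max(_difference(tpr), _difference(fpr)),
        "equalized_odds_ratio": min(_ratio(tpr), _ratio(fpr)),
    }


# Toy data: intersectional groups are (sex, race) pairs; labels are placeholders.
sex = ["F", "F", "F", "F", "M", "M", "M", "M"]
race = ["A", "A", "A", "A", "B", "B", "B", "B"]
groups = list(zip(sex, race))
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 0, 0, 0, 1, 1, 1, 0]

metrics = fairness_metrics(y_true, y_pred, groups)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

A difference of 0 and a ratio of 1 indicate no measured disparity between groups; larger differences and smaller ratios indicate more.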

Our solution supports output from all classification models, including classical linear models, SVMs, tree-based and XGBoost-type models, neural networks, and sequence-to-sequence generative AI models. We tested our bias measurement tool on output from multiple models, including biased, poorly performing, well-performing, and random-guess models. Through these tests, and by using our own generative AI model, we were able to develop and test the bias measurement criteria above.

To mitigate bias, we created a classifier using XGBoost in combination with a threshold optimizer. The classifier is trained to predict a binary outcome given by a column specified in the input dataset. The model also requires arguments that define the protected classes and the reference classes.

The model also uses the threshold optimizer from Fairlearn to adjust group-specific decision thresholds so as to minimize the equalized odds difference between the protected and reference classes. In other words, it tries to match true positive and false positive prediction rates across all classes defined in the input.
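To make the idea of group-specific thresholds concrete, here is a simplified pure-Python sketch. It is not Fairlearn's threshold optimizer: it only matches true positive rates, by choosing one deterministic threshold per group, whereas the Fairlearn optimizer also matches false positive rates and may randomize between thresholds. The scores stand in for classifier probabilities, and all names and data are hypothetical.

```python
def tpr_at(threshold, scores, labels):
    """True positive rate when predicting positive for scores >= threshold."""
    positives = [s for s, y in zip(scores, labels) if y == 1]
    return sum(s >= threshold for s in positives) / len(positives)


def pick_group_thresholds(scores, labels, groups, target_tpr):
    """For each group, pick the highest threshold whose TPR reaches target_tpr.

    Assumes every group contains at least one positive example.
    """
    thresholds = {}
    for g in sorted(set(groups)):
        g_scores = [s for s, gg in zip(scores, groups) if gg == g]
        g_labels = [y for y, gg in zip(labels, groups) if gg == g]
        # TPR can only grow as the threshold drops, so scan from high to low.
        candidates = sorted(set(g_scores), reverse=True)
        chosen = candidates[-1]  # lowest score always gives TPR = 1
        for t in candidates:
            if tpr_at(t, g_scores, g_labels) >= target_tpr:
                chosen = t
                break
        thresholds[g] = chosen
    return thresholds


def predict(scores, groups, thresholds):
    """Apply each record's group-specific threshold."""
    return [int(s >= thresholds[g]) for s, g in zip(scores, groups)]


# Toy scores: the protected group is scored systematically lower.
scores = [0.7, 0.45, 0.4, 0.2, 0.9, 0.8, 0.3, 0.1]
labels = [1, 1, 0, 0, 1, 1, 0, 0]
groups = ["protected"] * 4 + ["reference"] * 4

thresholds = pick_group_thresholds(scores, labels, groups, target_tpr=1.0)
preds = predict(scores, groups, thresholds)
print(thresholds)
print(preds)
```

With a single shared threshold of 0.5, this toy data would give the protected group a true positive rate of 0.5 and the reference group 1.0. Per-group thresholds remove that gap here, which is the intuition behind the post-processing step described above.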