SNLI (Bowman et al., 2015)

Premise: Dark-haired man wearing a watch and oven mitt about to cook some meat in the kitchen.

Hypothesis: A man is cooking something to eat.

Label: entailment

e-SNLI (Camburu et al., 2018): Meat is cooked in a kitchen, and is a food that you eat. Using an oven mitt implies you’re about to cook with hot utensils.

GPT-3: Cooking is usually done to prepare food to eat.

CommonsenseQA (Talmor et al., 2019)

Question: What is the result of applying for job?

Answer Choices: anxiety and fear, increased workload, praise, less sleep, or being employed

Correct Choice: being employed

CoS-E (Rajani et al., 2019): being employed applying for job

ECQA (Aggarwal et al., 2021): Applying for a job is followed by attending interview which results in being employed. Applying for a job may not result in the other options.

GPT-3: Applying for a job can result in being employed, which is a positive outcome.

Table 1: Task-specific instances, along with their crowdsourced explanations from the respective datasets, shown alongside explanations generated greedily by GPT-3. In our experiments, the SNLI GPT-3 explanation was preferred over its corresponding e-SNLI explanation by 2/3 annotators. For CommonsenseQA, 3/3 preferred the GPT-3 explanation to the CoS-E explanation, and 2/3 to the ECQA one.

which we expect the model to generate an explanation.³ We use a total of 115 randomly sampled train instances to create our prompts; each prompt consists of 8-24 randomly selected examples from this set. For each instance, we generate a single explanation with greedy decoding. More details about prompt construction are in Appendix A; example prompts are given in Tables 2 and 11.
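The sampling step described above can be sketched as follows. This is only an illustration: the text gives the pool size (115) and the range of examples per prompt (8-24), but how the number of examples is chosen is not stated here, so drawing it uniformly at random is an assumption, as are the function name, the fixed seed, and the placeholder pool contents.

```python
import random

POOL_SIZE = 115               # training instances available for prompts (from the text)
MIN_SHOTS, MAX_SHOTS = 8, 24  # examples per prompt (from the text)


def sample_prompt_examples(pool, rng):
    """Pick between MIN_SHOTS and MAX_SHOTS distinct examples from the pool.

    Assumption: the number of examples is drawn uniformly from the range;
    the paper only states the range itself.
    """
    k = rng.randint(MIN_SHOTS, MAX_SHOTS)  # inclusive on both ends
    return rng.sample(pool, k)             # sampling without replacement


# Placeholder identifiers standing in for the real training instances.
pool = [f"train-{i}" for i in range(POOL_SIZE)]
rng = random.Random(0)  # fixed seed, purely for reproducibility of the sketch
shots = sample_prompt_examples(pool, rng)
```

Each call yields a fresh set of in-context examples, so different test instances can be paired with different prompts.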

Crowdsourcing evaluation. Given that existing automatic metrics often do not correlate well with human judgements of explanation quality (Clinciu et al., 2021; Kayser et al., 2021), we conduct human

³ We condition on the gold label as a methodological control to ensure reliable human evaluation. In pilot studies, we found it hard to avoid bias against explanations when we

Let’s explain classification decisions.

A young boy wearing a tank-top is climbing a tree.

question: A boy was showing off for a girl.

true, false, or neither? neither

why? A boy might climb a tree to show off for a girl, but he also might do it for fun or for other reasons.

###

A person on a horse jumps over a broken down airplane.

question: A person is outdoors, on a horse.

true, false, or neither? true

why? Horse riding is an activity almost always done outdoors. Additionally, a plane is a large object and is most likely to be found outdoors.

###

There is a red truck behind the horses.

question: The horses are becoming suspicious of my apples.

true, false, or neither? false

why? The presence of a red truck does not imply there are apples, nor does it imply the horses are suspicious.

###

A dog carries an object in the snow.

question: A dog is asleep in its dog house.

true, false, or neither? false

why?

Table 2: Example of a prompt with 3 training examples for SNLI: presented are the premise/hypothesis pairs, the gold labels, and the explanations (written by us) that act as input to GPT-3 (in practice, we use 8-24 examples per prompt). The text generated by the model acts as the free-text explanation. In this case, the model greedily auto-completes (given 12 examples): “A dog cannot carry something while asleep”.
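The prompt layout in Table 2 can be assembled programmatically. The sketch below is a minimal illustration that follows the field order and separator visible in the table; the function names are hypothetical, and the exact whitespace between lines is an assumption, since the original layout is not recoverable from the table alone.

```python
HEADER = "Let’s explain classification decisions."
SEPARATOR = "###"


def format_example(premise, hypothesis, label, explanation=None):
    """Render one SNLI instance; leave 'why?' open when no explanation is given."""
    lines = [
        premise,
        f"question: {hypothesis}",
        f"true, false, or neither? {label}",
        "why?" if explanation is None else f"why? {explanation}",
    ]
    return "\n".join(lines)


def build_prompt(shots, query):
    """shots: (premise, hypothesis, label, explanation) tuples.
    query: (premise, hypothesis, gold_label) for the instance to be explained."""
    blocks = [format_example(*shot) for shot in shots]
    blocks.append(format_example(*query))  # ends with an open "why?"
    return HEADER + "\n" + f"\n{SEPARATOR}\n".join(blocks)


# One in-context example and the query instance, both taken from Table 2.
shots = [
    (
        "There is a red truck behind the horses.",
        "The horses are becoming suspicious of my apples.",
        "false",
        "The presence of a red truck does not imply there are apples, "
        "nor does it imply the horses are suspicious.",
    ),
]
query = (
    "A dog carries an object in the snow.",
    "A dog is asleep in its dog house.",
    "false",
)
prompt = build_prompt(shots, query)
```

Because the gold label is part of the query block, the model's continuation after the final "why?" is read as the explanation for that label.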

studies for evaluation.⁴ We ensure each experiment has a substantial number of distinct crowdworkers to mitigate individual annotator bias (Table 17). We present workers with a dataset instance, its gold label, and two explanations for the instance generated under different conditions (“head-to-head”). We then ask them to make a preferential selection, collecting 3 annotations per data point.
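As an illustration of how three head-to-head annotations per data point might be summarized, the sketch below counts votes and reports the majority choice. How the authors aggregate preferences is not described in this passage, so the majority rule, the tie handling, and the function name are assumptions made for the example.

```python
from collections import Counter


def majority_preference(votes):
    """Return the option chosen by most annotators, or None when the top two tie."""
    ranked = Counter(votes).most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return None  # no single option has the most votes
    return ranked[0][0]


# The SNLI instance in Table 1: 2 of 3 annotators preferred the GPT-3 explanation.
votes = ["GPT-3", "GPT-3", "e-SNLI"]
winner = majority_preference(votes)
share = votes.count("GPT-3") / len(votes)  # fraction of annotators preferring GPT-3
```

With an odd number of annotators and two options, a tie can only arise if the interface also allows a "no preference" choice.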

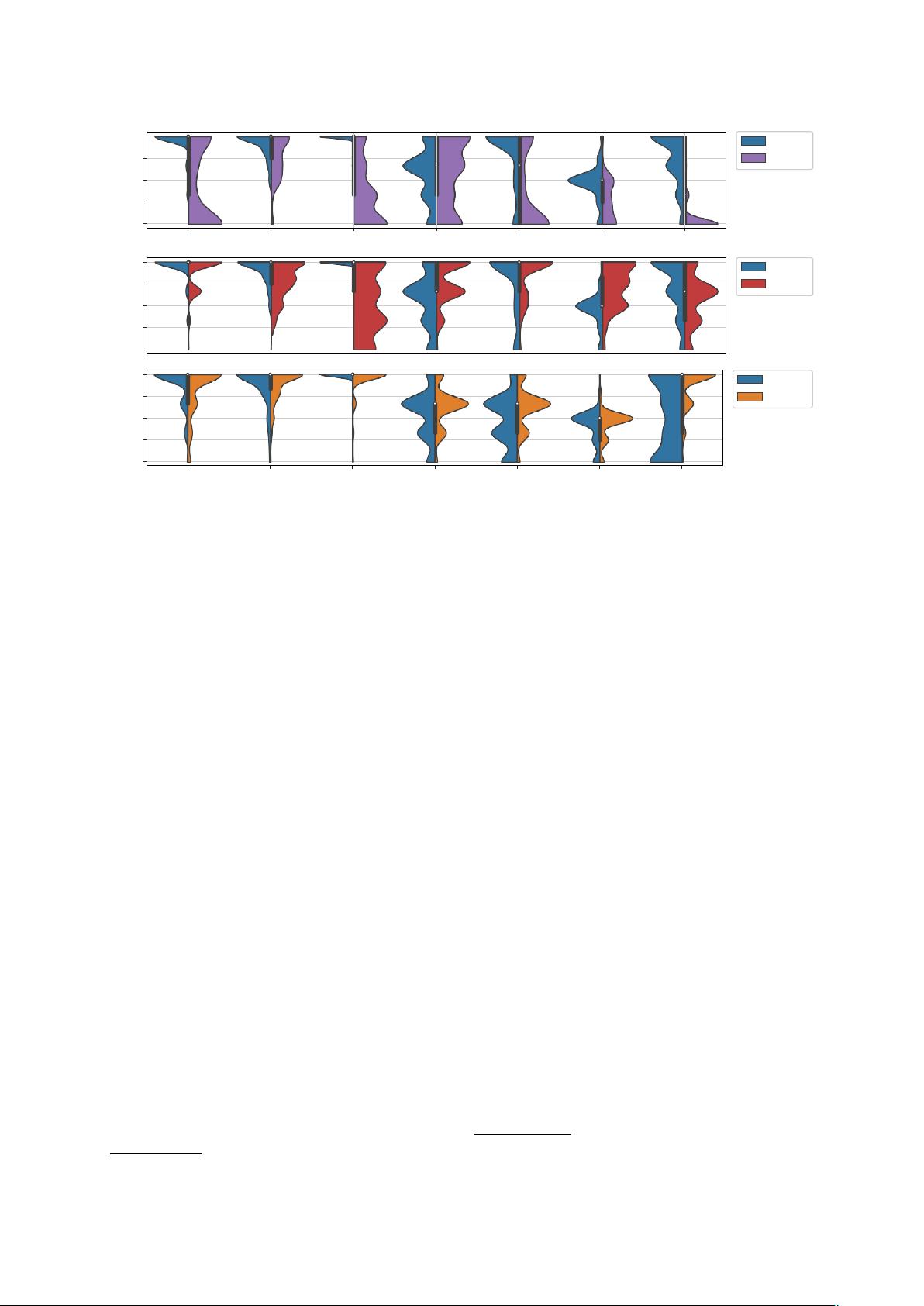

We report inter-annotator agreement (IAA) using Krippendorff’s α (Krippendorff, 2011). We find low-to-moderate agreement across studies, indicating the subjective nature of the task; see Tables 3, 4, and 5. Appendix B contains further details on quality control.
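For readers unfamiliar with the statistic, Krippendorff's α for nominal labels can be computed from a coincidence matrix, as in the sketch below. The passage does not say which distance metric (nominal, ordinal, or other) was used in the studies, so the nominal variant here is an assumption, and the function name and toy data are illustrative only.

```python
from collections import Counter
from itertools import permutations


def krippendorff_alpha_nominal(units):
    """Krippendorff's alpha for nominal data.

    units: one list of labels per annotated item (missing ratings simply omitted).
    """
    coincidences = Counter()
    for labels in units:
        m = len(labels)
        if m < 2:
            continue  # a single rating carries no agreement information
        # Every ordered pair of ratings from different annotators, weighted 1/(m-1).
        for a, b in permutations(labels, 2):
            coincidences[(a, b)] += 1.0 / (m - 1)

    totals = Counter()
    for (a, _), weight in coincidences.items():
        totals[a] += weight
    n = sum(totals.values())

    observed = sum(w for (a, b), w in coincidences.items() if a != b)
    expected = sum(
        totals[a] * totals[b] for a in totals for b in totals if a != b
    ) / (n - 1)
    if expected == 0:
        return float("nan")  # undefined when at most one category is ever used
    return 1.0 - observed / expected


# Toy data: four items, two annotators each, disagreeing on one item.
alpha = krippendorff_alpha_nominal([["a", "a"], ["a", "b"], ["b", "b"], ["b", "b"]])
```

A value of 1 indicates perfect agreement and 0 indicates agreement at chance level, which is why low-to-moderate values are read as a sign of task subjectivity.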

disagreed with the predicted label. Prior work (Kayser et al., 2021; Marasović et al., 2021) has removed this confounder by only considering explanations for correctly-predicted instances, which may overestimate explanation quality. Our method allows us to report results on a truly representative sample. We experiment with incorrect vs. correct predictions in Appendix C, finding that GPT-3 can competitively explain gold labels for instances it predicted incorrectly.

⁴ Our studies were conducted on the Amazon Mechanical Turk platform, at the Allen Institute for AI. We selected workers located in Australia, Canada, New Zealand, the UK, or the US, with a past HIT approval rate of >98% and >5000 HITs approved, and compensated them at a rate of $15/hour. Each worker completed qualifying exams on explanation evaluation and the NLI task.
