MoCoGAN- Decomposing Motion and Content for Video Generation.pdf

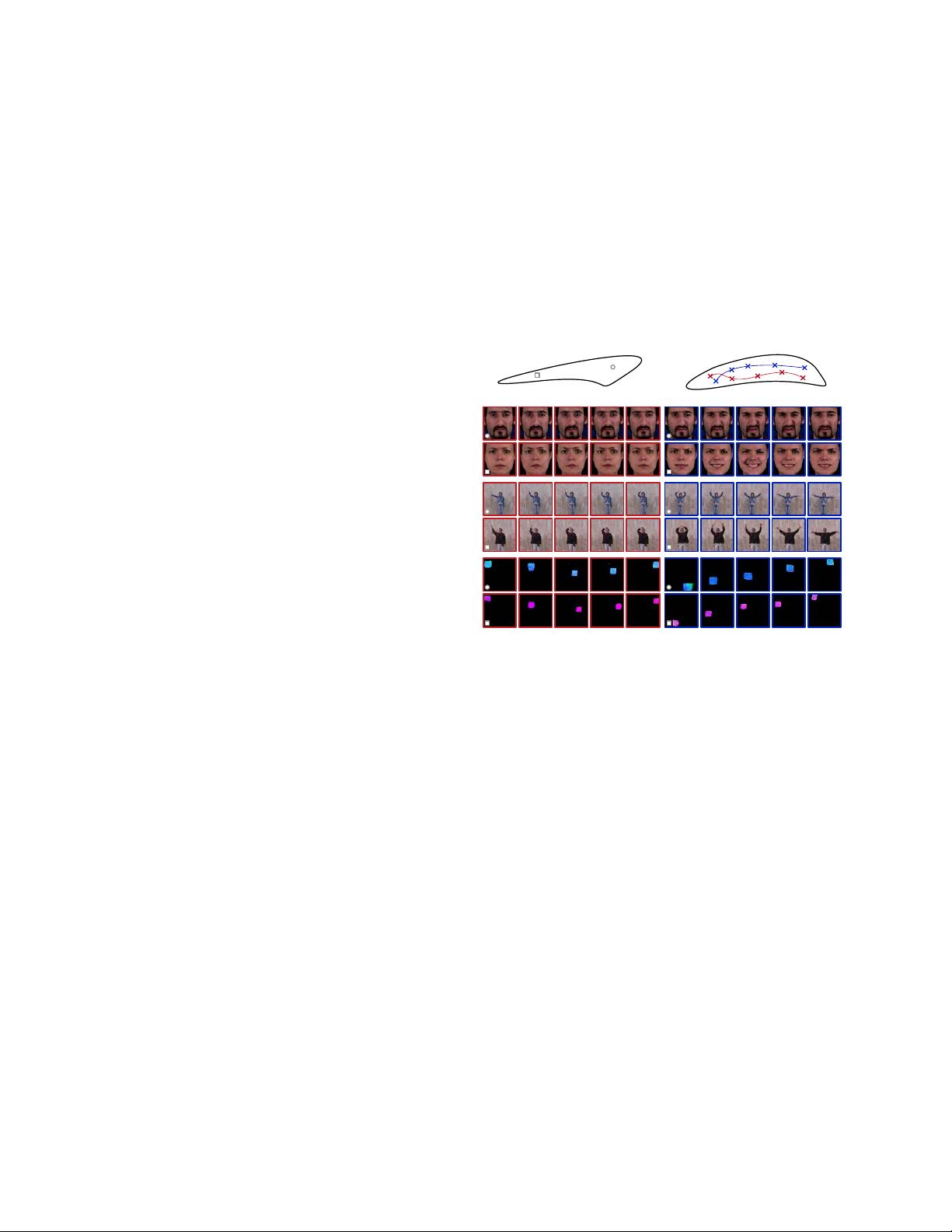

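### MoCoGAN: Decomposing Motion and Content for Video Generation

#### Overview

"MoCoGAN: Decomposing Motion and Content for Video Generation" is a paper on video generation by Sergey Tulyakov (Snap Research) and Ming-Yu Liu, Xiaodong Yang, and Jan Kautz (NVIDIA). Its main contribution is a generative adversarial network framework that separates the "content" of a video from its "motion" and uses that separation to generate video. The work is relevant to researchers in computer vision and machine learning, and to practitioners working on video processing and action recognition.

#### Intended Audience and Use Cases

**Intended audience**:

1. **Researchers in computer vision and machine learning**: MoCoGAN offers a different way to structure the latent space for video generation.
2. **Engineers and developers interested in generative adversarial networks (GANs)**: the paper examines how GANs apply to video generation, which helps in understanding how they work and how they are trained.
3. **Data scientists focused on video processing and action recognition**: MoCoGAN can help them understand and simulate video data.
4. **Students and educators in artificial intelligence**: MoCoGAN can serve as a teaching case for the basic concepts and implementation of video generation.

**Use cases**:

1. **Research and development**: the model can be used to explore new approaches to video generation, in particular to improve the quality and efficiency of video synthesis and prediction.
2. **Education**: as teaching material, it helps students understand how GANs are applied to video and how generative models are evaluated.
3. **Industry**: in entertainment, virtual reality, and game development, MoCoGAN can be used to generate video content.
4. **Data analysis**: data scientists can use MoCoGAN to simulate video data, either to extend an existing dataset or as data augmentation when training machine learning models.
5. **Technical evaluation**: researchers and developers can compare the quality of videos generated by different models with metrics commonly used for generative models, such as the Inception Score (IS) and the Fréchet Inception Distance (FID).

#### Key Technical Points of MoCoGAN

1. **Motion and Content Decomposition**: the core idea is to separate the "content" of a video from its "motion". Content specifies which objects appear in the video; motion describes how those objects change over time.
   - The content part is held fixed, which keeps the objects consistent across all frames of a video.
   - The motion part is modeled as a stochastic process, so generated videos show different dynamics.
2. **Unsupervised Learning Scheme**: to learn the motion and content decomposition without supervision, MoCoGAN introduces an adversarial learning scheme that combines an image discriminator with a video discriminator. Together they push the generated video to look realistic frame by frame and to follow plausible content and motion patterns.
3. **Qualitative and Quantitative Evaluation**: extensive experiments on several challenging datasets support the effectiveness of MoCoGAN. They include qualitative comparisons with existing state-of-the-art methods and quantitative metrics such as the Inception Score (IS).
4. **Flexible Generation Capabilities**: MoCoGAN can generate videos with the same content but different motion, as well as videos with different content but the same motion.

MoCoGAN contributes both a method for video generation and evidence of its flexibility, and it can serve as a reference point for later work on video generation.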
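The decomposition and the flexible generation described in the key technical points can be illustrated with a small sketch of how the latent codes for one video might be assembled. This is not the authors' implementation: the function names and dimensions are hypothetical, and a decayed random walk stands in for the learned motion process. It only shows the structural idea that one content vector is shared by every frame while the motion vector changes per frame.

```python
import random


def sample_content(dim, rng):
    """Draw one content vector; it is reused for every frame of a video."""
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


def sample_motion(num_frames, dim, rng, decay=0.9):
    """Draw a motion trajectory with one vector per frame.

    Assumption: a decayed random walk replaces the learned motion model,
    so that consecutive frames stay correlated.
    """
    state = [0.0] * dim
    trajectory = []
    for _ in range(num_frames):
        state = [decay * s + rng.gauss(0.0, 1.0) for s in state]
        trajectory.append(list(state))
    return trajectory


def build_latents(content, trajectory):
    """Concatenate the fixed content vector with each per-frame motion vector."""
    return [content + motion for motion in trajectory]


rng = random.Random(0)
content_a = sample_content(4, rng)
content_b = sample_content(4, rng)
motion_1 = sample_motion(8, 2, rng)
motion_2 = sample_motion(8, 2, rng)

# Same content, different motion.
same_content_video_1 = build_latents(content_a, motion_1)
same_content_video_2 = build_latents(content_a, motion_2)

# Different content, same motion.
same_motion_video = build_latents(content_b, motion_1)
```

In a full model, each per-frame latent code would be passed to an image generator, and the resulting frames would be judged by the image and video discriminators. Those networks are omitted here.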
