IEEE TRANSACTIONS ON PARALLEL AND DISTRIBUTED SYSTEMS, VOL. X, NO. Y, JANUARY 2016 2

usually deployed and many more KV items can be

held in memory for faster access. As most gets can

be absorbed by large-capacity multi-layer caches, and

only a few scattered gets fall in the LSM-tree, many

data center applications are actually put-dominated.

Thus it is unnecessary to discreetly push KV items

top-down with component-by-component mechanism,

which incurs excessive write amplification and then

sacrifices the system throughput.

In this paper, we propose Skip-tree, to aggressively

push the KV items to the non-adjacent larger com-

ponents via skipping some components. By reduc-

ing the number of steps during the flowing process

from memory-resident component to the disk-resident

largest component, Skip-tree reduces the read and

write I/Os, decreases the write amplification. Besides,

the bloom filter [31] is used in the Skip-tree to further

reduce the read I/Os caused by the version constraint.

We develop adaptive and reliable KV item move-

ments among components. As a consequence, Skip-

tree improves the throughput of key value stores.

We design and implement SkipStore based on Skip-

tree. The experiments demonstrates that SkipStore

outperforms RocksDB by 66.5%. Since SkipStore uses

buffer to cache some KV items in newly added buffer

component, we also design and implement reliability

mechanism, which is based on write-ahead log, for

SkipStore to prevent data loss. Benefitting from better

put and scan performance, SkipStore can be used as

the back-end storage engine of both cloud storage

systems and other data analysis processing systems,

such as PNUTS [12], Walnut [38] and Hadoop [39].

The rest of this paper is organized as follows. Sec-

tion 2 describes the background and motivation. Sec-

tion 3 presents the overview of our solution. Section

4 describes the Skip-tree data structure, and Section 5

presents the design and implement issues of SkipStore

based on Skip-tree. Section 6 presents and discusses

the evaluation results. Section 7 presents the related

work. Finally, we conclude this paper in Section 8 by

summarizing the main contributions of this paper.

2 BACKGROUND AND MOTIVATIONS

2.1 Multi-layer cached Data Center

Nowdays, most data centers are using multi-layer

cache to reduce the average read latency and the

read request counts to the backend system. We take

Facebook’s photo-serving stack [25] as an example to

illustrate the architecture of multi-layer cached data

center. There are three layers of caches in Facebook’s

photo-serving stack, which are browser cache, edge

cache and origin cache. The first cache layer is in

the client’s browser. It caches the most read request,

which is 65.5%. The Facebook Edge is comprised of

a set of Edge Caches that each run inside points of

presence (POPs) close to end users. As the second

cache layer, edge cache caches 20% of read requests.

data

block

data

block

data

block

data

block

data

block

……

Index block

SSTable

T

24

SSTable

T

25

SSTable

T

26

C

0

C

1

Memory

Disk

Immutable

MemTable

dump

R

SSTable T

01

Index

SSTable T

11

Index

SSTable T

12

Index

SSTable T

21

Index

SSTable T

22

Index

SSTable T

23

Index

SSTable T

02

Index

C

2

MemTable

Put/Get/Del KV

SSTable

T

34

SSTable

T

35

SSTable

T

36

C

0

C

1

Memory

Disk

SSTable T

11

Index

SSTable T

21

Index

SSTable T

22

Index

SSTable T

31

Index

SSTable T

32

Index

SSTable T

33

Index

SSTable T

12

Index

C

2

C

3

Immutable

MemTable

MemTable

Put/Get/Delete

Key-Value Items

dump

Compaction

C

0

C

1

Memory

Disk

SST T

11

Index

SST T

21

Index

SST T

22

Index

SST T

31

Index

SST T

32

Index

SST T

33

Index

SST T

12

Index

C

2

C

3

Immutable

MemTable

MemTable

Put/Get/Delete

Key-Value Items

dump

Compaction

SST T

34

Index

SST T

35

Index

SST T

36

Index

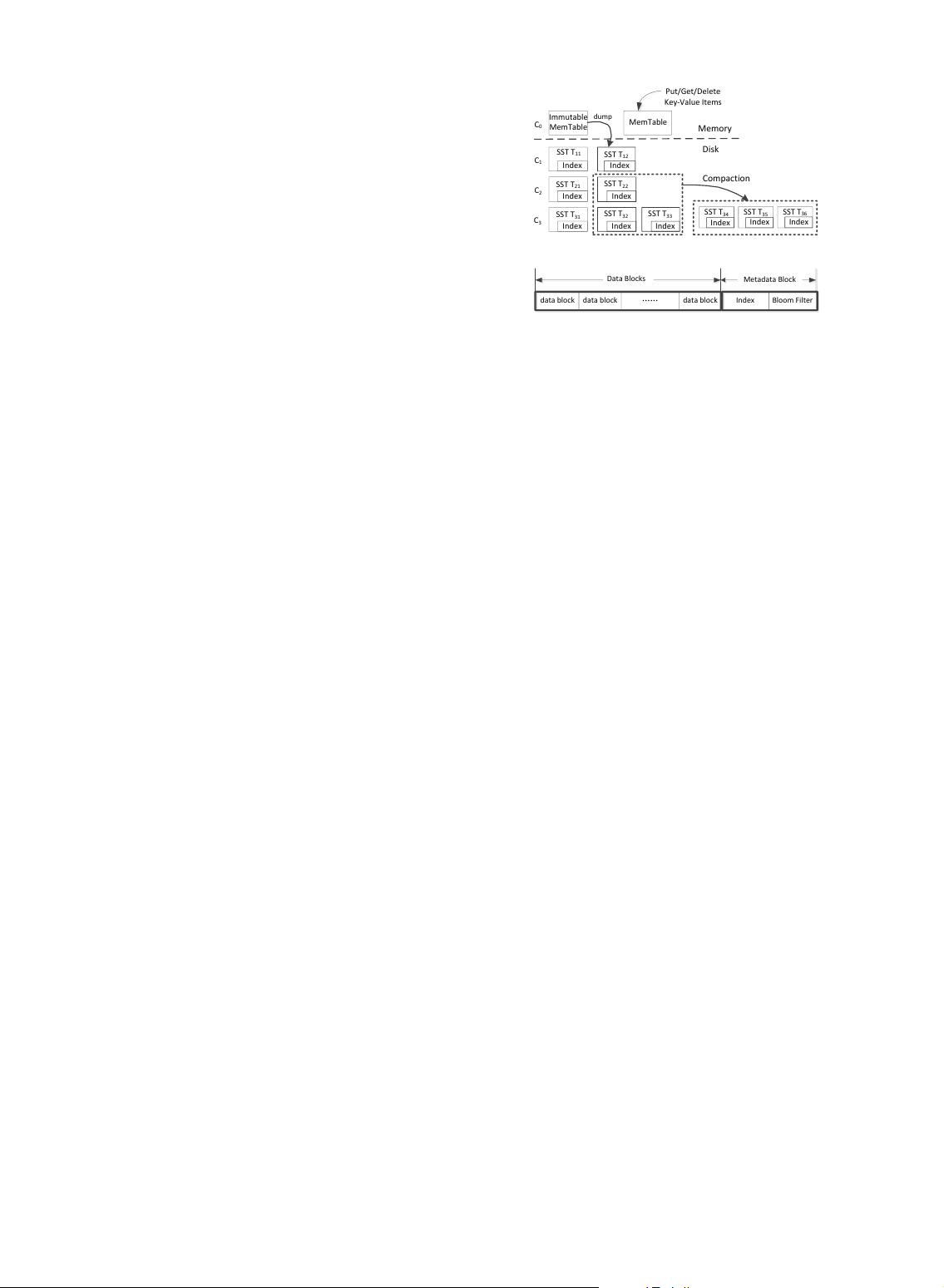

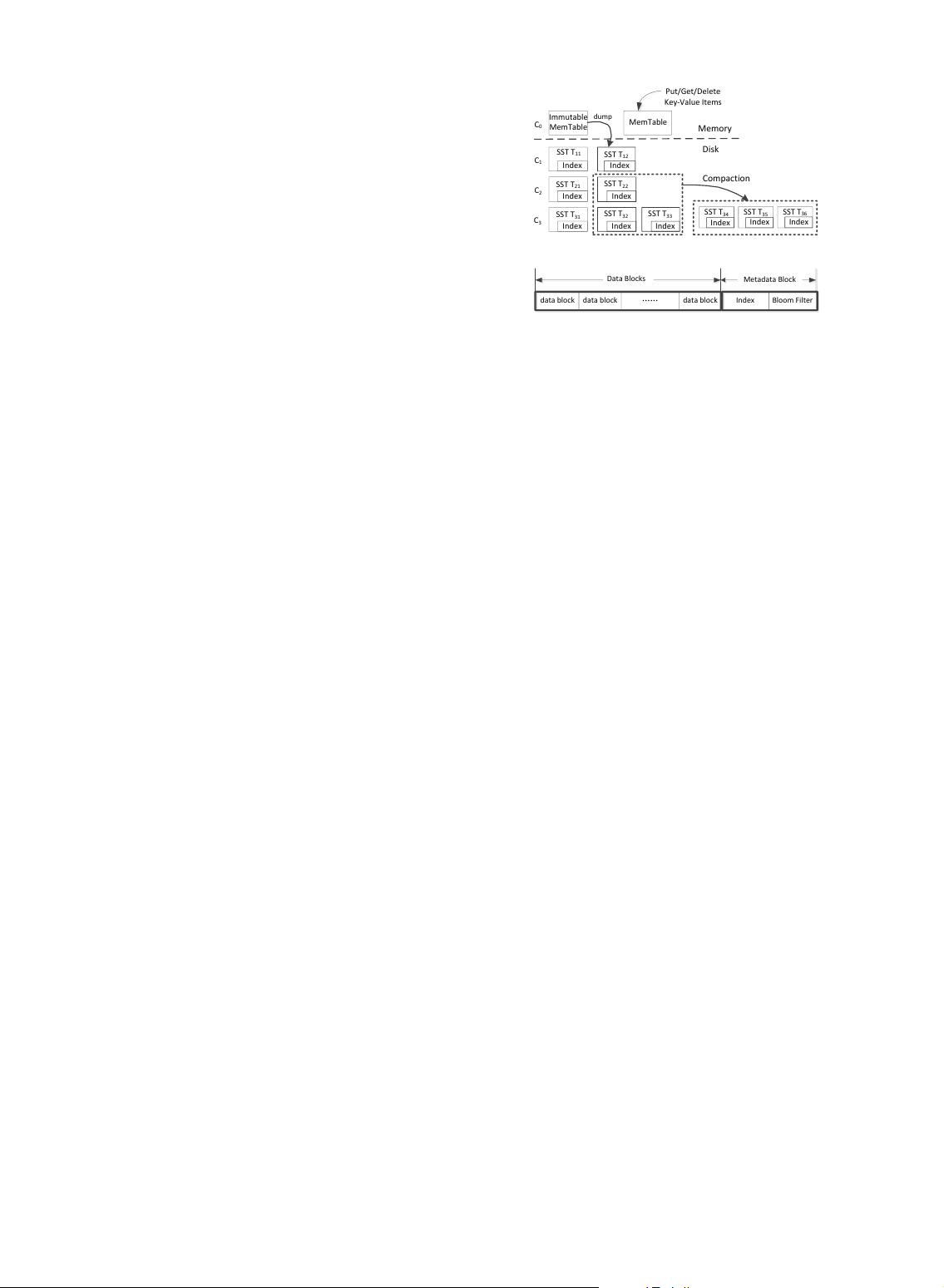

(a) LSM-tree data structure

data block data block data block

……

SSTable

T

24

SSTable

T

25

SSTable

T

26

C

0

C

1

Memory

Disk

Immutable

MemTable

dump

R

SSTable T

01

Index

SSTable T

11

Index

SSTable T

12

Index

SSTable T

21

Index

SSTable T

22

Index

SSTable T

23

Index

SSTable T

02

Index

C

2

MemTable

Put/Get/Del KV

SSTable

T

34

SSTable

T

35

SSTable

T

36

C

0

C

1

Memory

Disk

SSTable T

11

Index

SSTable T

21

Index

SSTable T

22

Index

SSTable T

31

Index

SSTable T

32

Index

SSTable T

33

Index

SSTable T

12

Index

C

2

C

3

Immutable

MemTable

MemTable

Put/Get/Delete

Key-Value Items

dump

Compaction

C

0

C

1

Memory

Disk

SST T

11

Index

SST T

21

Index

SST T

22

Index

SST T

31

Index

SST T

32

Index

SST T

33

Index

SST T

12

Index

C

2

C

3

Immutable

MemTable

MemTable

Put/Get/Delete

Key-Value Items

dump

Compaction

SST T

34

Index

SST T

35

Index

SST T

36

Index

Data Blocks

Metadata Block

Bloom FilterIndex

(b) SSTable layout

Fig. 1. The basic LSM-tree data structure, SSTable layout

and compaction procedure

The last cache layer, origin cache, is located with

backend storage system. It caches 4.6% read requests

and leaves 9.9% to the backend storage system, which

is Haystack in Facebook.

Although there exist some temporary KV items in

specific scenarios, it is unnecessary to persist them to

the disk-based storage system. However, massive KV

items in most scenarios are needed to be persistent to

the disk-based storage systems. In this paper, we focus

on solving the performance bottleneck of the LSM-

tree based storage systems. Although KV items can be

written to the memstore with extremely low latency,

these should eventually be dumped into the disk-based

persistent storage and involved in the compaction

procedure to flow down to the larger components

in most cases. Reducing write amplification and then

improving write throughput of the back-end storage

engine are challenging problems.

2.2 Basic KV organization of LSM-tree

LSM-tree organizes KV items in multiple tree-like

components, generally including one memory resi-

dent component and multiple disk resident compo-

nents, as shown in Figure 1(a). Each component size

is limited to a predefined threshold, which grows ex-

ponentially. We use a representative design, RocksDB

at Facebook, as an example to present the design and

implementation of LSM-tree. RocksDB first uses an

in-memory buffer, called MemTable, to receive the

incoming KV items and keep them sorted. When

an MemTable is filled up, it will be dumped to the

hard disk to be an immutable SSTable, such as T

12

in Figure 1(a). KV items are key-sorted and placed in

fix-sized blocks. The key of the first KV item in each

block is recorded as index to facilitate the KV item

locating. Each disk component consists of multiple

SSTables, whose key ranges do not overlap with each

other except those in C

1

. Figure 1(b) presents the

layout of the SSTable. Each SSTable contains multiple

data blocks and one metadata block. The data blocks

contain the sorted KV items, while the metadata block

This is the author's version of an article that has been published in this journal. Changes were made to this version by the publisher prior to publication.

The final version of record is available at http://dx.doi.org/10.1109/TPDS.2016.2609912

Copyright (c) 2016 IEEE. Personal use is permitted. For any other purposes, permission must be obtained from the IEEE by emailing pubs-permissions@ieee.org.

我的内容管理

展开

我的内容管理

展开

我的资源

快来上传第一个资源

我的资源

快来上传第一个资源

我的收益 登录查看自己的收益

我的收益 登录查看自己的收益 我的积分

登录查看自己的积分

我的积分

登录查看自己的积分

我的C币

登录后查看C币余额

我的C币

登录后查看C币余额

我的收藏

我的收藏  我的下载

我的下载  下载帮助

下载帮助

前往需求广场,查看用户热搜

前往需求广场,查看用户热搜

信息提交成功

信息提交成功