Coupling Semi-supervised Learning and Example Selection for Tracking 3

In this paper, we propose an online object tracking algorithm which explicitly couples the objectives of semi-supervised learning and example selection.

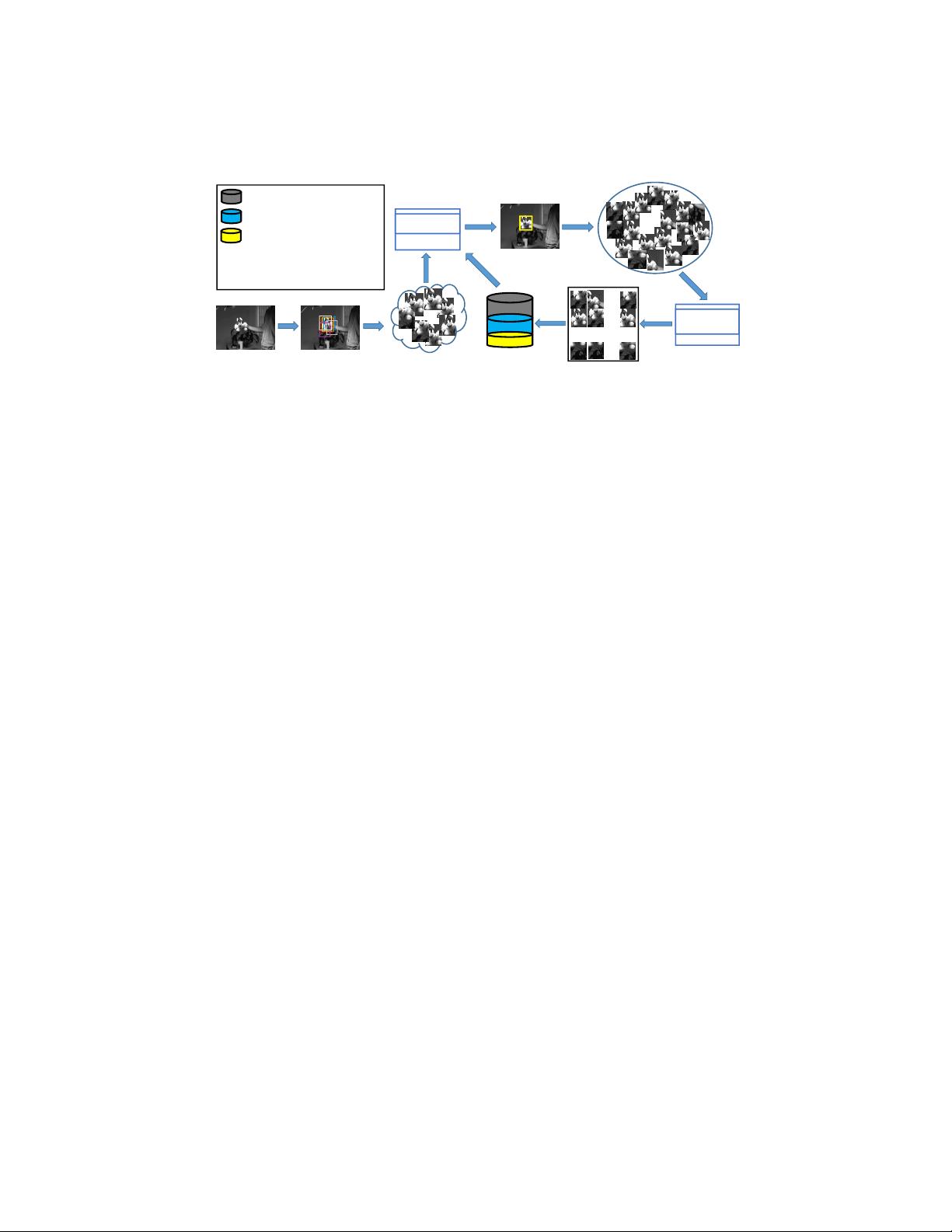

The overview of our tracker is shown in Fig. 1. We use a manifold regularized semi-supervised learning method, i.e., Laplacian Regularized Least Squares (LapRLS) [21], to learn a robust classifier for object tracking. We show that it is crucial to exploit the abundant unlabeled data, which can be easily collected during tracking, to improve the classifier and alleviate the drift problem caused by label noise. To avoid the ambiguity introduced by an independent example collection strategy, an active example selection stage is introduced between sampling and labeling to select the examples that are useful for LapRLS. The active example selection approach is designed to maximize the classification confidence of the classifier using the formalism of active learning [22, 23], thus guaranteeing the consistency between classifier learning and example selection in a principled manner. Our experiments suggest that coupling semi-supervised learning and example selection leads to a significant improvement in tracking performance. To make the classifier more adaptive to appearance changes, the selected examples that satisfy strict constraints are labeled, and the rest are treated as unlabeled data. According to the stability-plasticity dilemma [24], the additional labels provide reliable supervisory information to guide semi-supervised learning during tracking, and hence increase the plasticity of the tracker, which is validated in our experiments.

Semi-supervised tracking: Semi-supervised approaches have been previously used in tracking. Grabner et al. [14] proposed an online semi-supervised boosting tracker that avoids self-learning, as only the examples in the first frame are considered as labeled. Saffari et al. [16] proposed a multi-view boosting algorithm which considers the given priors as a regularization component over the unlabeled data, and validated its robustness for object tracking. Kalal et al. [19] presented a P-N learning algorithm to bootstrap a prior classifier by iteratively labeling unlabeled examples via structural constraints. Gao et al. [18] employed the cluster assumption to exploit unlabeled data to encode most of the discriminant information of their tensor representation, and showed great improvement in tracking performance.

The methods mentioned above essentially determine a “pseudo-label” for the unlabeled data, and do not discover the intrinsic geometrical structure of the feature space. In contrast, the LapRLS algorithm employed in our tracker learns a classifier that predicts similar labels for similar data points by constructing a data adjacency graph. We show that it is crucial to consider the similarity in terms of label prediction during tracking. Bai and Tang [17] introduced a similar algorithm, i.e., Laplacian ranking SVM, for object tracking. However, they adopt a handcrafted example collection strategy to obtain the labeled and unlabeled data, which limits the performance of their tracking method.
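To make the role of the data adjacency graph concrete, the following is a minimal sketch of kernel LapRLS in its standard closed form, not the implementation used in our tracker. The function names (`rbf_kernel`, `knn_graph_laplacian`, `fit_laprls`, `predict`), the Gaussian kernel, the k-nearest-neighbor graph, and all parameter values (`gamma_a`, `gamma_i`, `k`, `sigma`) are assumptions made for illustration only.

```python
import numpy as np


def rbf_kernel(A, B, sigma=1.0):
    """Gaussian kernel matrix between the rows of A and the rows of B."""
    sq_dists = (
        np.sum(A ** 2, axis=1)[:, None]
        + np.sum(B ** 2, axis=1)[None, :]
        - 2.0 * A @ B.T
    )
    return np.exp(-np.maximum(sq_dists, 0.0) / (2.0 * sigma ** 2))


def knn_graph_laplacian(X, k=5, sigma=1.0):
    """Unnormalized Laplacian L = D - W of a symmetrized k-NN graph (assumed graph type)."""
    n = X.shape[0]
    K = rbf_kernel(X, X, sigma)
    W = np.zeros((n, n))
    for i in range(n):
        sims = K[i].copy()
        sims[i] = -np.inf  # exclude the point itself from its neighbors
        neighbors = np.argsort(-sims)[:k]
        W[i, neighbors] = K[i, neighbors]
    W = np.maximum(W, W.T)  # make the adjacency matrix symmetric
    return np.diag(W.sum(axis=1)) - W


def fit_laprls(X_lab, y_lab, X_unl, gamma_a=1e-2, gamma_i=1e-1, k=5, sigma=1.0):
    """Solve the LapRLS linear system for the expansion coefficients alpha.

    Labeled examples come first; unlabeled examples get a zero target and are
    masked out of the squared loss by the diagonal matrix J.
    """
    X = np.vstack([X_lab, X_unl])
    l, n = X_lab.shape[0], X.shape[0]
    K = rbf_kernel(X, X, sigma)
    L = knn_graph_laplacian(X, k, sigma)
    J = np.diag(np.r_[np.ones(l), np.zeros(n - l)])
    y = np.r_[y_lab, np.zeros(n - l)]
    # (J K + gamma_a * l * I + gamma_i * l / n^2 * L K) alpha = y
    A = J @ K + gamma_a * l * np.eye(n) + (gamma_i * l / n ** 2) * (L @ K)
    alpha = np.linalg.solve(A, y)
    return X, alpha


def predict(X_train, alpha, X_new, sigma=1.0):
    """Real-valued classifier score f(x) = sum_j alpha_j k(x, x_j)."""
    return rbf_kernel(X_new, X_train, sigma) @ alpha
```

The term weighted by `gamma_i` penalizes differences between the predictions of neighboring points in the graph, which is how the unlabeled examples influence the classifier; setting `gamma_i = 0` reduces the sketch to ordinary regularized least squares on the labeled examples alone.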

Active learning: Active learning, also referred to as experimental design in statistics, aims to determine which unlabeled examples would be the most informative (i.e., improve the classifier the most if they were labeled and used as training data) [22, 23], and has been successfully applied in text categorization [25]
