Chapter 6: Probability and Naïve Bayes

Naïve Bayes

Let us return yet again to our women athlete example. Suppose I ask you what sport Brittney Griner participates in (gymnastics, marathon running, or basketball) and I tell you she is 6 foot 8 inches and weighs 207 pounds. I imagine you would say basketball, and if I ask you how confident you feel about your decision, I imagine you would say something along the lines of “pretty darn confident.”

Now I ask you what sport Heather Zurich (pictured on the right) plays. She is 6 foot 1 and weighs 176 pounds. Here I am less certain how you will answer. You might say ‘basketball,’ and I ask you how confident you are about your prediction. You are probably less confident than you were about your prediction for Brittney Griner. She could be a tall marathon runner.

Finally, I ask you what sport Yumiko Hara participates in; she is 5 foot 4 inches tall and weighs 95 pounds. Let's say you say ‘gymnastics’ and I ask how confident you feel about your decision. You will probably say something along the lines of “not too confident.” A number of marathon runners have similar heights and weights.

With the nearest neighbor algorithms, it is difficult to quantify confidence about a classification. With classification methods based on probability (Bayesian methods), we can not only make a classification but also make probabilistic classifications: this athlete is 80% likely to be a basketball player, this patient has a 40% chance of getting diabetes in the next five years, the probability of rain in Las Cruces in the next 24 hours is 10%.

Nearest neighbor approaches are called lazy learners. They are called this because when we give them a set of training data, they basically just save (or remember) the set. Each time a lazy learner classifies an instance, it goes through the entire training dataset. If we have 100,000 music tracks in our training data, it goes through all 100,000 tracks each time it classifies an instance.

Bayesian methods are called eager learners. When given a training set, eager learners immediately analyze the data and build a model. When an eager learner classifies an instance, it uses this internal model. Eager learners tend to classify instances faster than lazy learners.

The ability to make probabilistic classifications and the fact that they are eager learners are two advantages of Bayesian methods.
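The lazy/eager distinction can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the class names, the four training rows, and the eager model (plain class frequencies, not a full Naïve Bayes classifier) are all made up for the example and do not come from the book's code.

```python
from collections import Counter

# Hypothetical training rows: (height in inches, weight in pounds, sport).
rows = [
    (78, 200, "basketball"),
    (75, 180, "basketball"),
    (60, 92, "gymnastics"),
    (65, 105, "marathon"),
]


class LazyNearestNeighbor:
    """Lazy learner: training only stores the data."""

    def fit(self, rows):
        self.rows = list(rows)

    def classify(self, height, weight):
        # Every call scans the entire stored training set.
        def squared_distance(row):
            return (row[0] - height) ** 2 + (row[1] - weight) ** 2

        return min(self.rows, key=squared_distance)[2]


class EagerLabelCounter:
    """Eager learner: training builds a small model and drops the rows."""

    def fit(self, rows):
        counts = Counter(label for _, _, label in rows)
        total = sum(counts.values())
        # The model is just the prior P(label) for each label.
        self.prior = {label: n / total for label, n in counts.items()}

    def classify(self):
        # Classification consults only the model, never the training rows.
        label = max(self.prior, key=self.prior.get)
        return label, self.prior[label]


lazy = LazyNearestNeighbor()
lazy.fit(rows)
eager = EagerLabelCounter()
eager.fit(rows)

print(lazy.classify(80, 207))  # basketball
print(eager.classify())        # ('basketball', 0.5)
```

Note that the eager sketch also returns a probability alongside the label, which the nearest neighbor sketch has no natural way to do.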


Probability

I am assuming you have some basic knowledge of probability. I flip a coin; what is the probability of it being 'heads'? I roll a fair six-sided die; what is the probability that I roll a '1'? That sort of thing. I tell you I picked a random 19-year-old and ask you for the probability of that person being female, and without doing any research you say 50%. These are examples of what is called prior probability, which is denoted P(h): the probability of hypothesis h.

Suppose I give you some additional information about that 19-year-old: the person is a student at the Frank Lloyd Wright School of Architecture in Arizona. You do a quick Google search, see that the student body is 86% female, and revise your estimate of the likelihood of the person being female to 86%.

This we denote as P(h|D), the probability of the hypothesis h given some data D.

To put the examples so far in this notation, for a coin:

P(heads) = 0.5

For a six-sided die, the probability of rolling a ‘1’:

P(1) = 1/6

If I have an equal number of 19-year-old males and females:

P(female) = 0.5

And once we know which school the person attends:

P(female | attends Frank Lloyd Wright School) = 0.86

which we could read as “The probability the person is female given that the person attends the Frank Lloyd Wright School is 0.86.”


The formula is

P(A | B) = P(A ∩ B) / P(B)

An example.

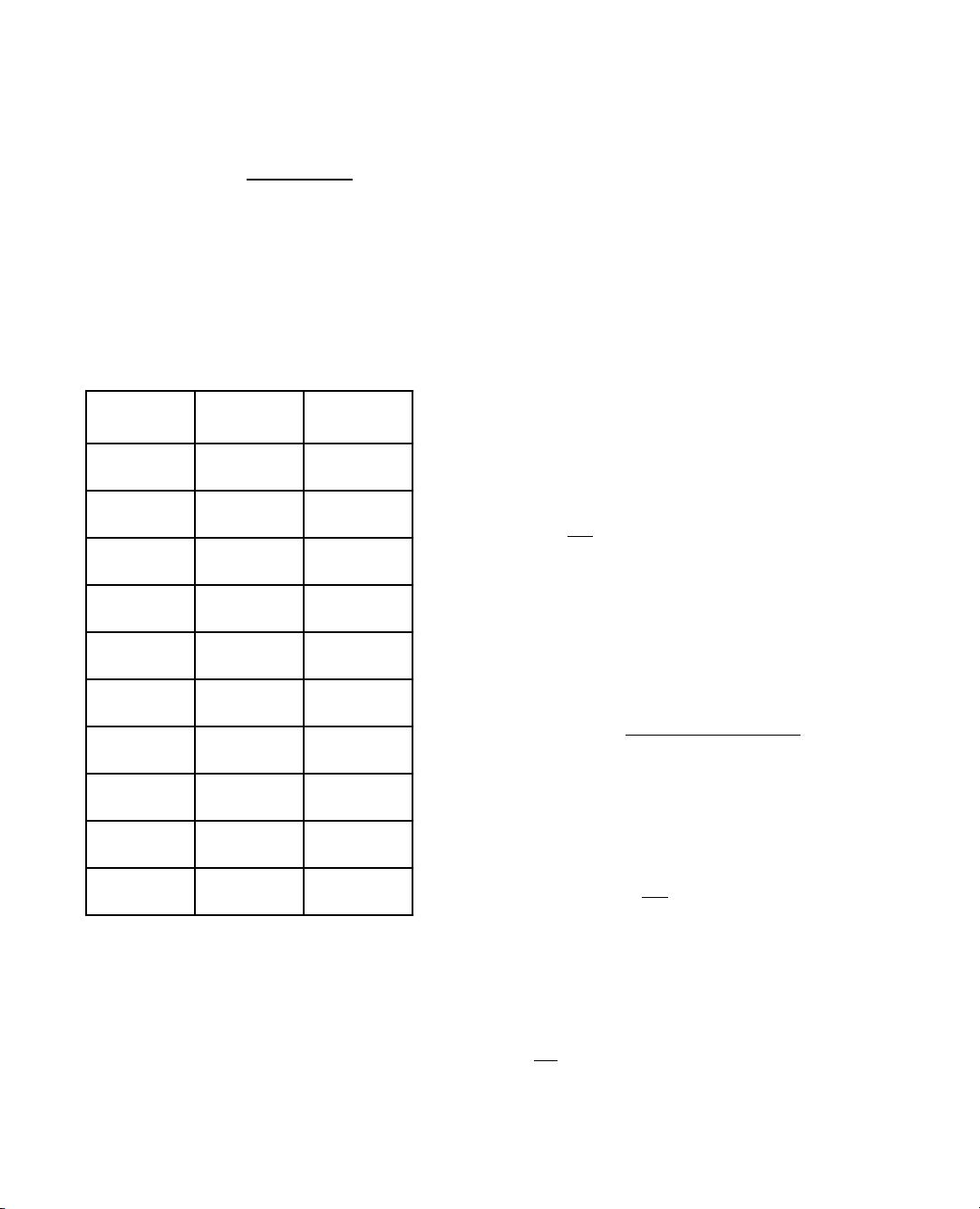

In the following table I list some people and the types of laptops and phones they have:


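| name | laptop | phone |
| --- | --- | --- |
| Kate | PC | Android |
| Tom | PC | Android |
| Harry | PC | Android |
| Annika | Mac | iPhone |
| Naomi | Mac | Android |
| Joe | Mac | iPhone |
| Chakotay | Mac | iPhone |
| Neelix | Mac | Android |
| Kes | PC | iPhone |
| B’Elanna | Mac | iPhone |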

What is the probability that a randomly selected person uses an iPhone?

There are 5 iPhone users out of 10 total users, so

P(iPhone) = 5/10 = 0.5

What is the probability that a randomly selected person uses an iPhone given that person uses a Mac laptop?

P(iPhone | mac) = P(mac ∩ iPhone) / P(mac)

First, there are 4 people who use both a Mac and an iPhone:

P(mac ∩ iPhone) = 4/10 = 0.4

and the probability of a random person using a Mac is

P(mac) = 6/10 = 0.6

So the probability that a person uses an iPhone given that the person uses a Mac is

P(iPhone | mac) = 0.4 / 0.6 = 0.667
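The calculation above can be checked with a short Python sketch. The `people` list is copied from the table; the helper name `probability` and the use of `fractions.Fraction` for exact arithmetic are choices made for this illustration, not something the text prescribes.

```python
from fractions import Fraction

# (name, laptop, phone) rows copied from the table above.
people = [
    ("Kate", "PC", "Android"),
    ("Tom", "PC", "Android"),
    ("Harry", "PC", "Android"),
    ("Annika", "Mac", "iPhone"),
    ("Naomi", "Mac", "Android"),
    ("Joe", "Mac", "iPhone"),
    ("Chakotay", "Mac", "iPhone"),
    ("Neelix", "Mac", "Android"),
    ("Kes", "PC", "iPhone"),
    ("B'Elanna", "Mac", "iPhone"),
]


def probability(event):
    """P(event): fraction of people for whom event(laptop, phone) is true."""
    matches = sum(1 for _, laptop, phone in people if event(laptop, phone))
    return Fraction(matches, len(people))


p_iphone = probability(lambda laptop, phone: phone == "iPhone")
p_mac = probability(lambda laptop, phone: laptop == "Mac")
p_mac_and_iphone = probability(
    lambda laptop, phone: laptop == "Mac" and phone == "iPhone"
)

# Definition of conditional probability: P(A | B) = P(A ∩ B) / P(B)
p_iphone_given_mac = p_mac_and_iphone / p_mac

print(p_iphone, p_mac_and_iphone, p_mac)    # 1/2 2/5 3/5
print(round(float(p_iphone_given_mac), 3))  # 0.667
```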

That is the formal definition of posterior probability. Sometimes when we implement this we just use raw counts:

P(iPhone | mac) = (number of people who use a mac and an iPhone) / (number of people who use a mac)

P(iPhone | mac) = 4/6 = 0.667

Sharpen your pencil

What’s the probability of a person owning a mac given that they own an iPhone, i.e., P(mac | iPhone)?
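The raw-count shortcut can be sketched as a small Python function. The function and predicate names (`conditional_from_counts`, `uses_mac`, `uses_iphone`) are hypothetical, chosen for this example; the `devices` list repeats the laptop and phone columns of the table in order.

```python
def conditional_from_counts(rows, target, given):
    """Estimate P(target | given) as count(given and target) / count(given)."""
    given_rows = [row for row in rows if given(row)]
    if not given_rows:
        raise ValueError("no rows satisfy the condition")
    both = sum(1 for row in given_rows if target(row))
    return both / len(given_rows)


# (laptop, phone) pairs in the same order as the table.
devices = [
    ("PC", "Android"), ("PC", "Android"), ("PC", "Android"),
    ("Mac", "iPhone"), ("Mac", "Android"), ("Mac", "iPhone"),
    ("Mac", "iPhone"), ("Mac", "Android"), ("PC", "iPhone"),
    ("Mac", "iPhone"),
]


def uses_mac(row):
    return row[0] == "Mac"


def uses_iphone(row):
    return row[1] == "iPhone"


p = conditional_from_counts(devices, uses_iphone, uses_mac)
print(round(p, 3))  # 0.667
```

Because the total number of people cancels out of the fraction, the counts alone are enough. Swapping the two predicate arguments is one way to check your answer to the exercise.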

Tip

If you feel you need practice with basic probabilities, please see the links to tutorials at guidetodatamining.com.
