1644 IEEE TRANSACTIONS ON IMAGE PROCESSING, VOL. 22, NO. 4, APRIL 2013

Circular Reranking for Visual Search

Ting Yao, Chong-Wah Ngo, Member, IEEE,andTaoMei,Senior Member, IEEE

Abstract—Search reranking is regarded as a common way

to boost retrieval precision. The problem nevertheless is not

trivial especially when there are multiple features or modalities

to be considered for search, which often happens in image and

video retrieval. This paper proposes a new reranking algorithm,

named circular reranking, that reinforces the mutual exchange

of information across multiple modalities for improving search

performance, following the philosophy that strong performing

modality could learn from weaker ones, while weak modality

does benefit from interacting with stronger ones. Technically,

circular reranking conducts multiple runs of random walks

through exchanging the ranking scores among different features

in a cyclic manner. Unlike the existing techniques, the reranking

procedure encourages interaction among modalities to seek a

consensus that are useful for reranking. In this paper, we study

several properties of circular reranking, including how and which

order of information propagation should be configured to fully

exploit the potential of modalities for reranking. Encouraging

results are reported for both image and video retrieval on

Microsoft Research Asia Multimedia image dataset and TREC

Video Retrieval Evaluation 2007-2008 datasets, respectively.

Index Terms—Circular reranking, multimodality fusion, visual

search.

I. INTRODUCTION

T

HE rapid development of Web 2.0 technologies has

led to the surge of research activities in visual search.

While visual documents are rich in audio-visual content and

user-supplied texts, commercial visual search engines to date

mostly perform retrieval by keyword matching. A common

practice to improve search performance is to rerank the visual

documents returned from a search engine using a larger and

richer set of features. The ultimate goal is to seek con-

sensus from various features for reordering the documents

and boosting the retrieval precision. There are two general

approaches along this direction: visual pattern mining [8] and

multi-modality fusion [1], [2]. The former mines the recurrent

patterns, either explicitly or implicitly, from initial search

results and then moves up the ranks of visually similar docu-

ments. Random walk [9], for instance, performs self-reranking

Manuscript received January 12, 2012; revised October 14, 2012; accepted

December 4, 2012. Date of publication December 24, 2012; date of cur-

rent version February 12, 2013. This work was supported in part by

the National Natural Science Foundation of China under Grant 61272290

and Grant 61228205 and a grant from Microsoft Research Asia Windows

Phone Academic Program FY12-RESOPP-107. The associate editor coordi-

nating the review of this manuscript and approving it for publication was

Prof. Ton Kalker.

T. Yao and C.-W. Ngo are with the Department of Computer

Science, City University of Hong Kong, Hong Kong (e-mail:

tingyao2@student.cityu.edu.hk; cscwngo@cityu.edu.hk).

T. Mei is with Microsoft Research Asia, Beijing 100190, China (e-mail:

tmei@microsoft.com).

Color versions of one or more of the figures in this paper are available

online at http://ieeexplore.ieee.org.

Digital Object Identifier 10.1109/TIP.2012.2236341

through identifying documents with similar patterns based on

inter-image similarity and initial rank scores. This category of

approaches, nevertheless, seldom explores the joint utilization

of multiple modalities. Instead, different modalities are treated

independently. Furthermore, the utilization of a modality is

often application dependent, making it difficult to generalize

the mining for general-purpose search. Multi-modality fusion,

in contrast, predicts the importance of modalities, for instance,

through fusion weight learning, and linearly combines them

for reordering documents. The fusion, however, is done at

the decision stage. More specifically, the estimation of fusion

weights is mainly derived from the ranking scores in different

ranked lists. There is no mechanism, however, where the

interaction among multiple modalities could be exploited for

reranking in a principle way.

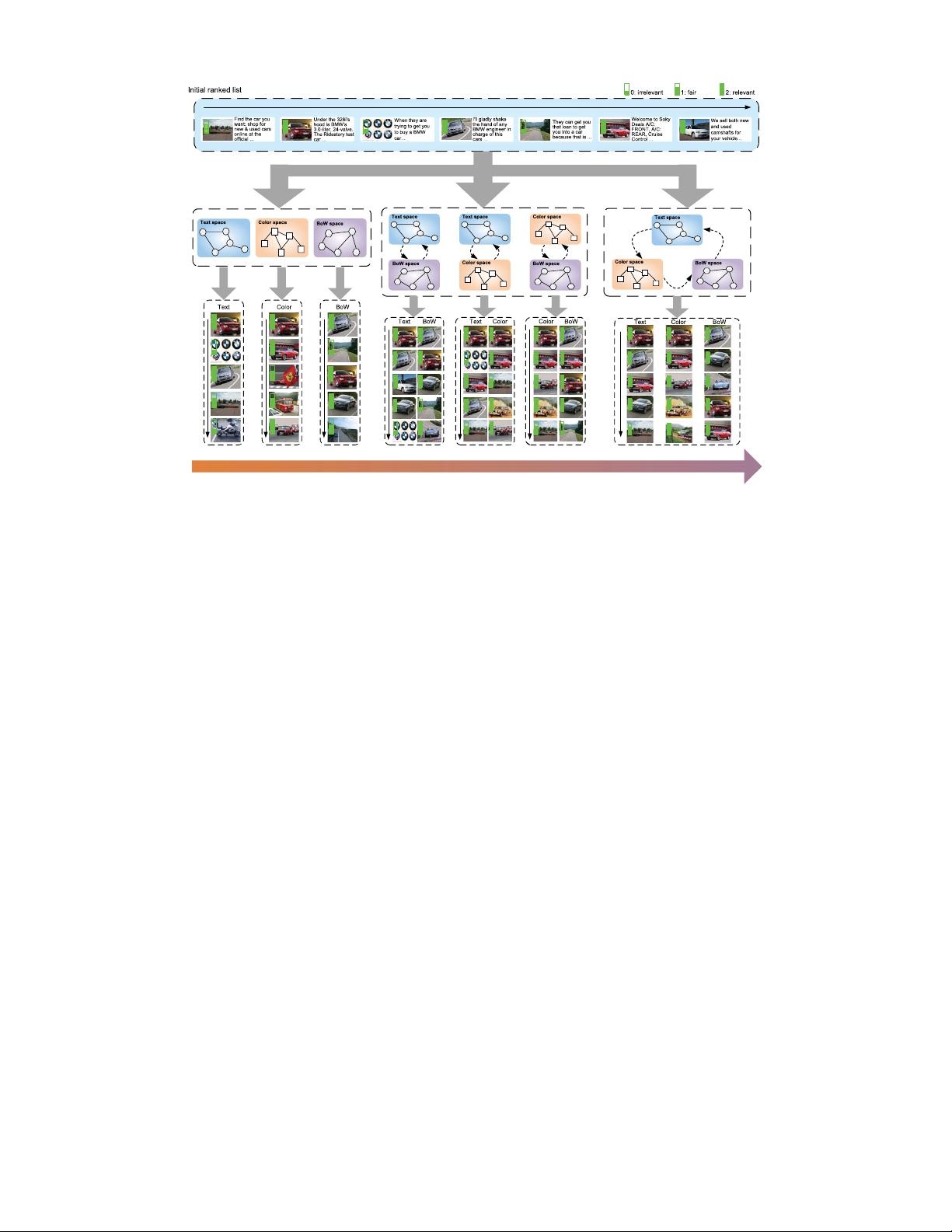

This paper proposes a novel algorithm, named circular

reranking, that takes advantages of both pattern mining and

multi-modality fusion for visual search. More importantly,

modality interaction is taken into account, on one hand to

implicitly mine recurrent patterns, and on the other, to leverage

the modalities of different strength for maximizing search per-

formance. Figure 1 shows an overview of our proposed work

compared with the existing methods. Given a ranked list of

visual documents returned from a search engine, conventional

methods use to perform random walk to rerank the results as

shown in Figure 1(a). There are variants of approaches arisen

from this methodology, for instance, conducting random walk

on the original text space [31] or a new space built upon

visual features [8], [10]. Typically each space is viewed as

a graph that specifies the document proximity. More sophis-

ticated approaches include lately fusing the reranked results

from random walks in different feature spaces, or conversely,

performing random walk on a unified graph that is fused

from multiple features [9], [21]. Regardless of these different

versions, a common issue not fully explored and studied is

how the modalities should interact in view that their abilities in

answering different queries could vary largely. We address this

issue, as shown in Figure 1(b), from the viewpoint of mutual

reinforcement. Specifically, different modalities interact by

exchanging their feature spaces while preserving the original

document scores for random walk. The exchange results in

the following outcome: the ranks for documents which remain

sharing similar local view of proximity in a different space

tend to be upgraded. Take the text and bag-of-visual-words

(BoW) feature spaces in Figure 1(b) as an example, the

second and fourth images in the initial ranked list are similar

in both text and BoW feature spaces. After reinforcement

as in Figure 1(b), these group of images remains close in

proximity and thus their ranks are likely to be moved up

after random walk. Meanwhile, the second and third images

1057–7149/$31.00 © 2012 IEEE

我的内容管理

展开

我的内容管理

展开

我的资源

快来上传第一个资源

我的资源

快来上传第一个资源

我的收益 登录查看自己的收益

我的收益 登录查看自己的收益 我的积分

登录查看自己的积分

我的积分

登录查看自己的积分

我的C币

登录后查看C币余额

我的C币

登录后查看C币余额

我的收藏

我的收藏  我的下载

我的下载  下载帮助

下载帮助

前往需求广场,查看用户热搜

前往需求广场,查看用户热搜

信息提交成功

信息提交成功