没有合适的资源?快使用搜索试试~ 我知道了~

Cost-sensitive three-way class-specific attribute reduction

0 下载量 189 浏览量

2021-02-07

18:32:26

上传

评论

收藏 701KB PDF 举报

温馨提示

The theory of rough sets provides a method to construct three types of classification rules, leading to three-way decisions. From such a point of view, we introduce the concept of cost-sensitive three-way class-specific attribute reducts. Based on the semantics of the three-way decisions, we introduce a monotonic result cost in decision-theoretic rough set model, called the result cost of three-way decisions. We provide a critical analysis of classification-based attribute reducts from result-co

资源推荐

资源详情

资源评论

International Journal of Approximate Reasoning 105 (2019) 153–174

Contents lists available at ScienceDirect

International Journal of Approximate Reasoning

www.elsevier.com/locate/ijar

Cost-sensitive three-way class-specific attribute reduction

Xi-Ao Ma

a,∗

, Xue Rong Zhao

b

a

School of Computer and Information Engineering, Zhejiang Gongshang University, Hangzhou, Zhejiang 310018, China

b

School of Mathematics and Statistics, South-Central University for Nationalities, Wuhan 430074, China

a r t i c l e i n f o a b s t r a c t

Article history:

Received

6 April 2018

Received

in revised form 20 November 2018

Accepted

21 November 2018

Available

online 27 November 2018

Keywords:

Cost-sensitive

three-way decision

Attribute

reduction

Decision-theoretic

rough set

The theory of rough sets provides a method to construct three types of classification

rules, leading to three-way decisions. From such a point of view, we introduce the

concept of cost-sensitive three-way class-specific attribute reducts. Based on the semantics

of the three-way decisions, we introduce a monotonic result cost in decision-theoretic

rough set model, called the result cost of three-way decisions. We provide a critical

analysis of classification-based attribute reducts from result-cost-sensitive and test-cost-

sensitive

perspectives. On this basis, we propose class-specific cost-sensitive attribute

reduction approaches. More specifically, we define a class-specific minimum cost reduct.

The objective of attribute reduction is to minimize result cost and test cost with respect

to a particular decision class. We design two algorithms for constructing a family of class-

specific

minimum cost reducts based on addition–deletion strategy and deletion strategy,

respectively. The experimental results indicate that the result cost of three-way decisions

is monotonic with respect to the set inclusion of attributes and the class-specific minimum

cost reducts can make a better trade-off between misclassification cost and test cost with

respect to a particular decision class.

© 2018 Published by Elsevier Inc.

1. Introduction

Three-way decisions [45], proposed by Yao, provide a practical way to human daily decision-making. It can be interpreted

within a trisecting-and-acting framework [48]. The universe is first divided into three pair-wise disjoint parts according

to trisecting. The acting then constructs and applies the most effective strategies to process the three parts. Three-way

decisions have been widely used in many fields and disciplines, such as clustering analysis [55], face recognition [11],

granular computing [29,44,49], linguistic assessment [15], pattern recognition [33,41,42], recommender systems [7,56], social

networks [30,54], software defect prediction [14], and fuzzy decisions [59–61]. In the theory of rough sets, proposed by

Pawlak [26–28], one divides the universe into three pair-wise disjoint parts, namely, the positive, boundary, and negative

regions. We can use the notion of three-way decisions to interpret rules. Specifically, one can construct acceptance rules from

the positive region and rejection rules from the negative region to make acceptance and rejection decisions, respectively.

For the boundary region, we make neither acceptance nor rejection decisions, but make non-commitment decisions. Pawlak

rough set model requires that both the acceptance and rejection decisions must be fully correct or certain, which does not

allow any tolerance of errors. Probabilistic rough set models [4,17,32,34,45,50,57,63]were proposed to make acceptance or

rejection decisions for more objects by allowing certain acceptable levels of errors.

*

Corresponding author.

E-mail

addresses: maxiao73559@163.com (X.-A. Ma), xrzhao@whu.edu.cn (X.R. Zhao).

https://doi.org/10.1016/j.ijar.2018.11.014

0888-613X/

© 2018 Published by Elsevier Inc.

154 X.-A. Ma, X.R. Zhao / International Journal of Approximate Reasoning 105 (2019) 153–174

The notion of attribute reducts plays a key role in attribute reduction [6,9,13,19,21,25,31,37,39,40,58]. An attribute reduct

is a minimal subset of attributes serving the same purpose as the entire set of attributes [47]. There are two types of at-

tribute

reducts, namely, classification-based attribute reducts [27] and class-specific attribute reducts [1,2,18,20,35,36,51].

A classification-based attribute reduct usually chooses the same set of attribute reducts for all decision classes. A class-

specific

attribute reduct allows for different sets of attribute reducts for different decision classes. Since different attributes

may have different capabilities in discriminating different decision classes, a class-specific attribute reduct enables us to

select an attribute reduct that is most suitable for a particular decision class. In terms of the two types of attribute reducts,

three-way attribute reducts have received much attention in recent years. Zhang and Miao [58]investigated a framework of

three-way classification-based attribute reducts. Yao and Zhang [51]introduced the notion of class-specific attribute reducts

by considering only the positive region of a particular decision class. From three-way decision perspectives, Ma and Yao [20]

examined

three types of class-specific attribute reducts that respectively focus on the positive region, the negative region,

and both the positive and negative regions of a particular decision class. However, these studies do not consider the cost. In

this paper, we study and introduce the concept of cost-sensitive three-way class-specific attribute reducts.

There

are two types of important costs, namely, result cost and test cost, in cost-sensitive three-way decision model.

The result cost is the cost of the decision result, that is, quality of the decision result in terms of costs caused by incorrect

decisions. Decision-theoretic rough set model can be regarded as a kind of cost-sensitive three-way decision model [11,12].

Jia et al. [8]proposed the concept of decision cost in decision-theoretic rough set model. The decision cost can be viewed

as a kind of result cost. The test cost is the cost needed for obtaining new information. For example, in medical practice, a

patient needs to take an X-ray test to diagnose the pneumonia, which will incur certain extra costs. In recent years, result

cost and test cost have attracted a great deal of interests and are widely studied in cost-sensitive attribute reduction.

According

to the result cost and test cost, we can classify cost-sensitive attribute reduction into two categories: result-

cost-sensitive

attribute reduction and test-cost-sensitive attribute reduction. For result-cost-sensitive attribute reduction, an

attribute reduct is defined by minimizing the result cost. Yao and Zhao [52] discussed cost criterion for attribute reduction

in decision-theoretic rough set model. Jia et al. [8] constructed an optimization problem with the objective of minimizing

the decision cost and defined a minimum cost reduct in decision-theoretic rough set model. Song et al. [35]proposed the

notion of minimal decision cost reducts in fuzzy decision-theoretic rough set model to deal with numerical data. For test-

cost-sensitive

attribute reduction, the main objective of attribute reduction is to minimize the test cost under the condition

of preserving sufficient information for classification. Min et al. [22]proposed a minimal test cost reduct and developed

the effective reduction algorithm. In a follow-up study, they [23]described attribute reduction problem concerning the test

cost constraint as a constraint satisfaction problem. Yang et al. [43] established test-cost-sensitive multigranulation rough

set and presented a backtracking algorithm and a heuristic algorithm for deriving a reduct with minimal test cost. Ju et al.

[10]proposed cost-sensitive rough set model by incorporating test cost into the indiscernibility relation.

In

this paper, we make a critical analysis of classification-based attribute reducts from the perspectives of result-cost-

sensitive

attribute reduction and test-cost-sensitive attribute reduction. In addition, we introduce a new result cost based on

the semantics of three-way decisions, called the result cost of three-way decisions. It meets the monotonicity with respect

to the set inclusion of attributes. The experiment results verify the monotonicity of the result cost of three-way decisions.

Based on the result cost of three-way decisions, we propose the notion of class-specific minimum cost reducts. A class-

specific

minimum cost reduct is a minimal subset of attributes satisfying the criterion that minimizes result cost and test

cost with respect to a particular decision class. The monotonicity of the result cost of three-way decisions provides a com-

putationally

efficient method for constructing a reduct. On this basis, we develop two heuristic algorithms for constructing

a family of class-specific minimum cost reducts based on addition–deletion strategy and deletion strategy [53], respectively.

In the end, we provide several experimental analyses on the performance between class-specific minimum cost reducts and

classification-based minimum cost reducts. Here, a classification-based minimum cost reduct is defined as a minimal subset

of attributes that minimizes result cost and test cost on all decision classes.

The

rest of this paper is organized as follows. Section 2 briefly reviews some basic notions related to three-way deci-

sions

with decision-theoretic rough sets. Section 3 provides a critical analysis of classification-based attribute reducts from

result-cost-sensitive perspective and test-cost-sensitive perspective. Section 4 presents the notion of class-specific minimum

cost reducts and designs two reduct construction algorithms for finding a family of class-specific minimum cost reducts.

Section 5 carries out some experimental analyses. Section 6 presents conclusions and outlines further research trends.

2. Three-way decision with decision-theoretic rough sets

Decision-theoretic rough sets can be viewed as a specific three-way decision model. In this section, we review some

basic notions related to decision-theoretic rough sets [16,50,62].

Definition

1. An information table is defined by a tuple:

T = (OB, AT , V ={V

a

| a ∈ AT}, I ={I

a

| a ∈ AT}), (1)

where OB is a finite nonempty set of objects called the universe, AT is a nonempty finite set of attributes, V

a

is a nonempty

set of values of an attribute a ∈ At, called the domain of a, and I

a

: OB → V

a

is a description function that maps an object

in OB to exactly one value in V

a

.

X.-A. Ma, X.R. Zhao / International Journal of Approximate Reasoning 105 (2019) 153–174 155

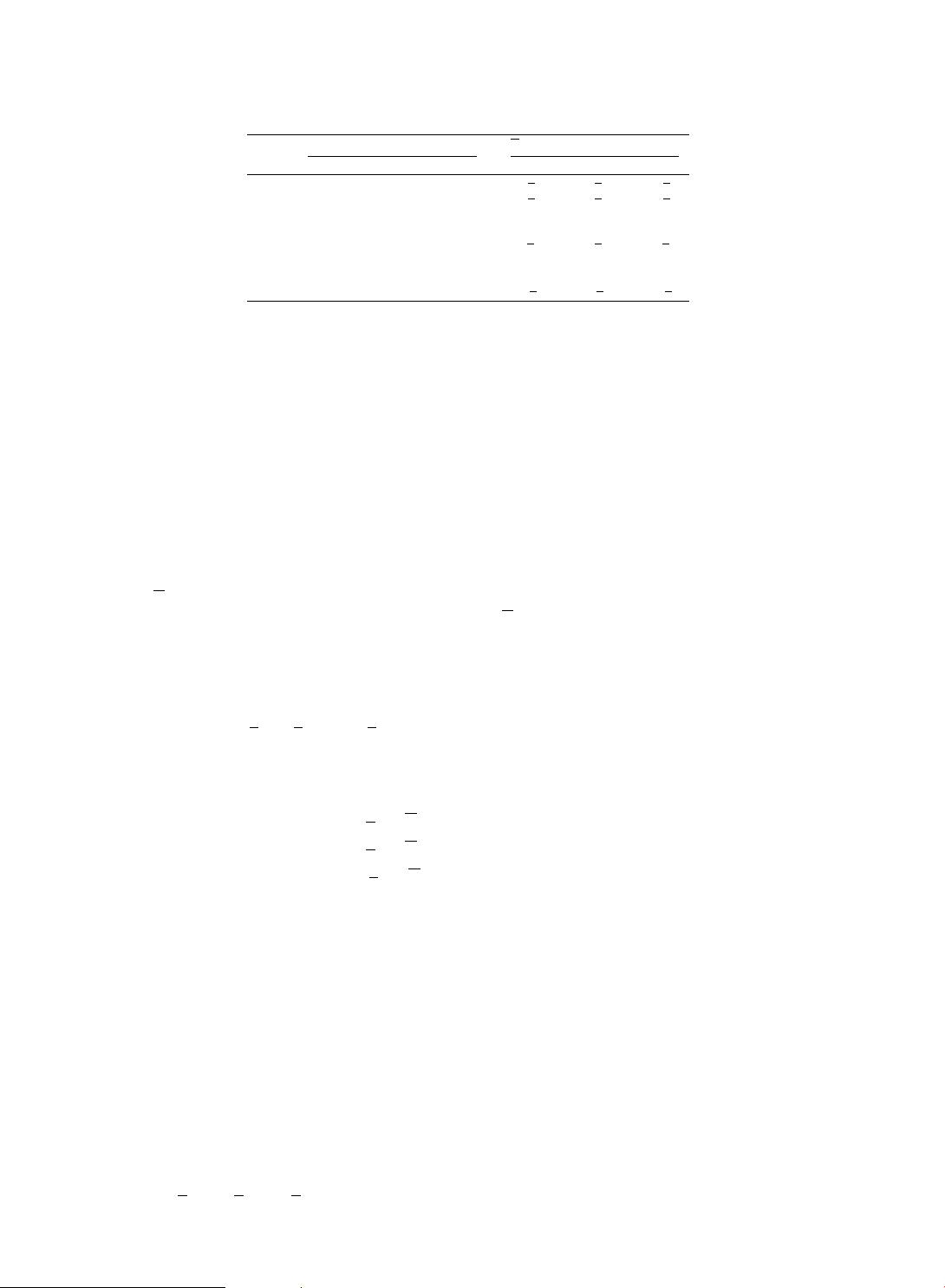

Table 1

Loss

functions.

D

j

D

j

a

P

j

a

B

j

a

N

j

a

P

j

a

B

j

a

N

j

D

1

λ

P

1

D

1

λ

B

1

D

1

λ

N

1

D

1

λ

P

1

D

1

λ

B

1

D

1

λ

N

1

D

1

D

2

λ

P

2

D

2

λ

B

2

D

2

λ

N

2

D

2

λ

P

2

D

2

λ

B

2

D

2

λ

N

2

D

2

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

D

j

λ

P

j

D

j

λ

B

j

D

j

λ

N

j

D

j

λ

P

j

D

j

λ

B

j

D

j

λ

N

j

D

j

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

D

m

λ

P

m

D

m

λ

B

m

D

m

λ

N

m

D

m

λ

P

m

D

m

λ

B

m

D

m

λ

N

m

D

m

Given a subset of attributes A ⊆ AT, an equivalence relation is defined by:

E

A

={(x, y) ∈ OB× OB|∀a ∈ A, I

a

(x) = I

a

(y)}. (2)

The equivalence relation induces a partition of OB denoted by π

A

= OB/E

A

={[x]

A

| x ∈ OB}, where [x]

A

={y ∈ OB |

(

x, y) ∈ E

A

}. A partition of OB is also called a classification.

A

decision table is a special type of information tables with AT = C ∪ D, where C is a set of condition attributes, D is

a set of decision attributes, and C ∩ D =∅. For brevity, a decision table is denoted by DT = (OB, C ∪ D). For any B ⊆ C ,

we have a partition π

B

. For the set of decision attributes D, we have a partition π

D

={D

1

, D

2

, ..., D

j

, ..., D

m

}, called a

decision classification of OB. Each equivalence class D

j

∈ π

D

is called a decision class.

Decision-theoretic rough set model [50]is a probabilistic extension of Pawlak rough set model [26,27]by a straight-

forward

application of the Bayesian decision theory. The set of states is given by a finite set of m decision classes

={D

1

, D

2

, ..., D

j

, ..., D

m

}. For simplicity, we use the same symbol to denote both a subset D

j

and the corresponding

state. For a m-class decision problem, we can convert it into m two-class decision problems. In general, the costs incurred

for misclassifying an object into different decision classes are different. For each decision class D

j

, the set of states is given

by

j

={D

j

, D

j

} indicating that an object is in D

j

and not in D

j

, respectively. Given a partition π

B

, the probabilities for

these two complement states are denoted as Pr(D

j

|[x]

B

) and Pr(D

j

|[x]

B

) = 1 − Pr(D

j

|[x]

B

). One can divide the universe

into three pair-wise disjoint positive region POS(D

j

|π

B

), boundary region BND(D

j

|π

B

), and negative region NEG(D

j

|π

B

).

The set of actions is denoted by A

j

={a

P

j

, a

B

j

, a

N

j

}, where a

P

j

, a

B

j

, and a

N

j

represent the three actions in classifying an

object x, namely, deciding x ∈ POS(D

j

|π

B

), deciding x ∈ BND(D

j

|π

B

), and deciding x ∈ NEG(D

j

|π

B

), respectively. The loss

functions are given by a m × 6matrix showed in Table 1.

In the matrix, λ

P

j

D

j

, λ

B

j

D

j

, and λ

N

j

D

j

denote the costs incurred for taking actions a

P

j

, a

B

j

, and a

N

j

, respectively, when

an object belongs to D

j

; λ

P

j

D

j

, λ

B

j

D

j

, and λ

N

j

D

j

denote the costs incurred for taking actions a

P

j

, a

B

j

, and a

N

j

, respectively,

when an object does not belong to D

j

.

The expected costs associated with taking individual actions for objects in [x]

B

with respect to the decision class D

j

can

be expressed as follows:

R(a

P

j

|[x]

B

) = λ

P

j

D

j

Pr(D

j

|[x]

B

) + λ

P

j

D

j

Pr(D

j

|[x]

B

),

R(a

B

j

|[x]

B

) = λ

B

j

D

j

Pr(D

j

|[x]

B

) + λ

B

j

D

j

Pr(D

j

|[x]

B

),

R(a

N

j

|[x]

B

) = λ

N

j

D

j

Pr(D

j

|[x]

B

) + λ

N

j

D

j

Pr(D

j

|[x]

B

).

The Bayesian decision procedure leads to the following minimum-risk decisions:

(P) If R(a

P

j

|[x]

B

) ≤ R(a

B

j

|[x]

B

) and R(a

P

j

|[x]

B

) ≤ R(a

N

j

|[x]

B

),

decide x ∈ POS(D

j

|π

B

);

(

B) If R(a

B

j

|[x]

B

) ≤ R(a

P

j

|[x]

B

) and R(a

B

j

|[x]

B

) ≤ R(a

N

j

|[x]

B

),

decide x ∈ BND(D

j

|π

B

);

(

N) If R(a

N

j

|[x]

B

) ≤ R(a

P

j

|[x]

B

) and R(a

N

j

|[x]

B

) ≤ R(a

B

j

|[x]

B

),

decide x ∈ NEG(D

j

|π

B

).

Tie-breaking criteria should be added so that each object is classified into only one region. Consider a special type of loss

function with the following constraints:

(C1)λ

P

j

D

j

≤ λ

B

j

D

j

<λ

N

j

D

j

,

λ

N

j

D

j

≤ λ

B

j

D

j

<λ

P

j

D

j

.

156 X.-A. Ma, X.R. Zhao / International Journal of Approximate Reasoning 105 (2019) 153–174

That is, the cost of classifying an object x

belonging

to D

j

into the positive region POS(D

j

|π

B

) is less than or equal to

the cost of classifying x

into

the boundary region BND(D

j

|π

B

), and both of these costs are strictly less than the cost of

classifying x

into

the negative region NEG(D

j

|π

B

). The reverse order of costs is used to classify an object not in D

j

. By

Pr(D

j

|[x]

B

) + Pr(D

j

|[x]

B

) = 1, we can simplify the decision rules (P)–(N) under the condition (C1). The first condition in

rule (P) can be expressed as follows:

R(a

P

j

|[x]

B

) ≤ R(a

B

j

|[x]

B

)

⇐⇒ λ

P

j

D

j

Pr(D

j

|[x]

B

) + λ

P

j

D

j

Pr(D

j

|[x]

B

) ≤ λ

B

j

D

j

Pr(D

j

|[x]

B

) + λ

B

j

D

j

Pr(D

j

|[x]

B

)

⇐⇒ λ

P

j

D

j

Pr(D

j

|[x]

B

) + λ

P

j

D

j

(1 − Pr(D

j

|[x]

B

)) ≤ λ

B

j

D

j

Pr(D

j

|[x]

B

) + λ

B

j

D

j

(1 − Pr(D

j

|[x]

B

))

⇐⇒

Pr(D

j

|[x]

B

) ≥

(λ

P

j

D

j

− λ

B

j

D

j

)

(λ

P

j

D

j

− λ

B

j

D

j

) + (λ

B

j

D

j

− λ

P

j

D

j

)

.

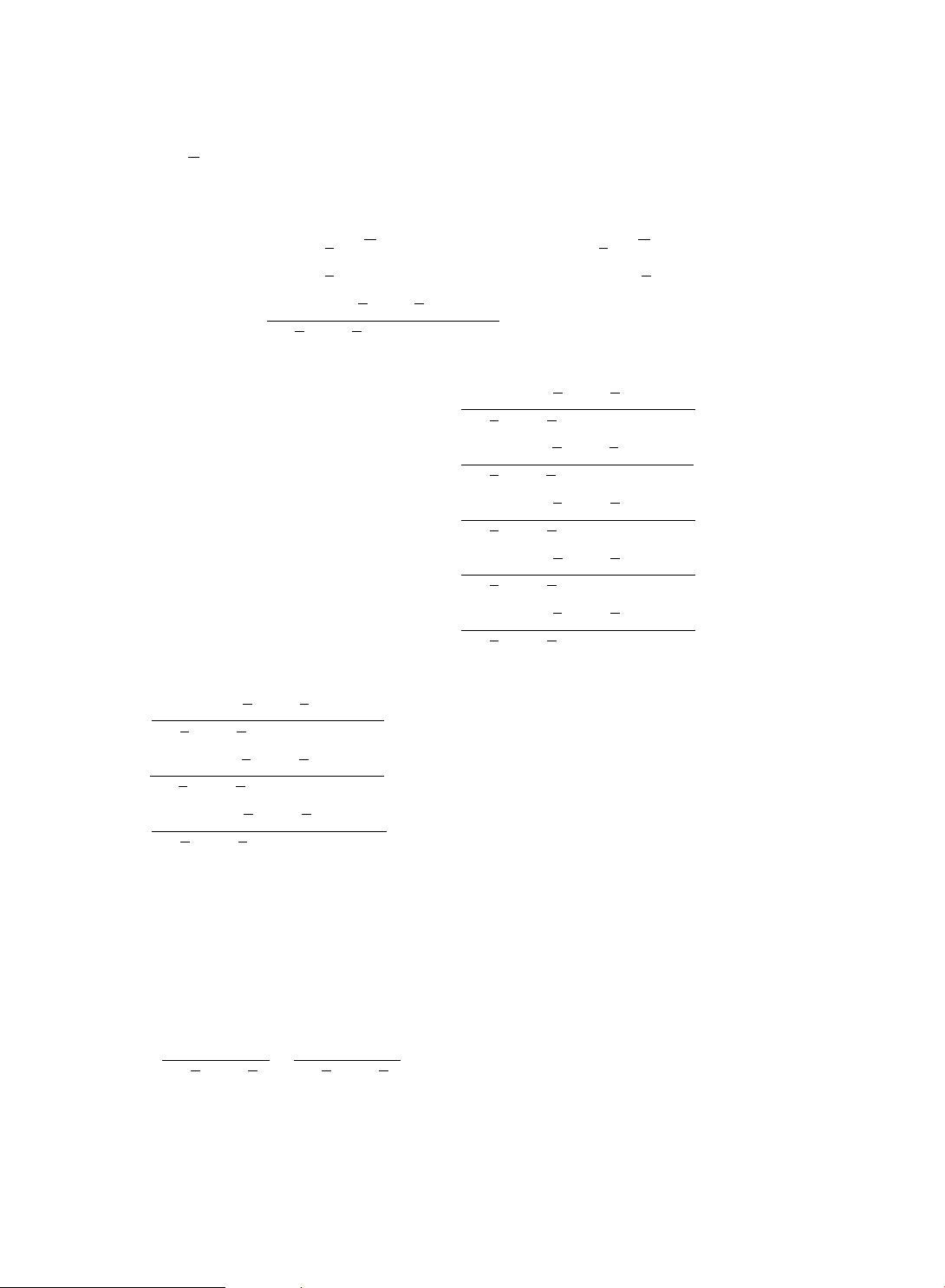

Similarly, other conditions in rules (P)–(N) can be expressed as follows:

R(a

P

j

|[x]

B

) ≤ R(a

N

j

|[x]

B

) ⇐⇒ Pr(D

j

|[x]

B

) ≥

(λ

P

j

D

j

− λ

N

j

D

j

)

(λ

P

j

D

j

− λ

N

j

D

j

) + (λ

N

j

D

j

− λ

P

j

D

j

)

,

R(a

B

j

|[x]

B

) ≤ R(a

P

j

|[x]

B

) ⇐⇒ Pr(D

j

|[x]

B

) ≤

(λ

P

j

D

j

− λ

B

j

D

j

)

(λ

P

j

D

j

− λ

B

j

D

j

) + (λ

B

j

D

j

− λ

P

j

D

j

)

,

R(a

B

j

|[x]

B

) ≤ R(a

N

j

|[x]

B

) ⇐⇒ Pr(D

j

|[x]

B

) ≥

(λ

B

j

D

j

− λ

N

j

D

j

)

(λ

B

j

D

j

− λ

N

j

D

j

) + (λ

N

j

D

j

− λ

B

j

D

j

)

,

R(a

N

j

|[x]

B

) ≤ R(a

P

j

|[x]

B

) ⇐⇒ Pr(D

j

|[x]

B

) ≤

(λ

P

j

D

j

− λ

N

j

D

j

)

(λ

P

j

D

j

− λ

N

j

D

j

) + (λ

N

j

D

j

− λ

P

j

D

j

)

,

R(a

N

j

|[x]

B

) ≤ R(a

B

j

|[x]

B

) ⇐⇒ Pr(D

j

|[x]

B

) ≤

(λ

B

j

D

j

− λ

N

j

D

j

)

(λ

B

j

D

j

− λ

N

j

D

j

) + (λ

N

j

D

j

− λ

B

j

D

j

)

.

By introducing three parameters α

j

, β

j

, and γ

j

:

α

j

=

(λ

P

j

D

j

− λ

B

j

D

j

)

(λ

P

j

D

j

− λ

B

j

D

j

) + (λ

B

j

D

j

− λ

P

j

D

j

)

,

β

j

=

(λ

B

j

D

j

− λ

N

j

D

j

)

(λ

B

j

D

j

− λ

N

j

D

j

) + (λ

N

j

D

j

− λ

B

j

D

j

)

,

γ

j

=

(λ

P

j

D

j

− λ

N

j

D

j

)

(λ

P

j

D

j

− λ

N

j

D

j

) + (λ

N

j

D

j

− λ

P

j

D

j

)

,

(3)

the decision rules (P)–(N) can be re-expressed as follows:

(P) If Pr(D

j

|[x]

B

) ≥ α

j

and Pr(D

j

|[x]

B

) ≥ γ

j

, decide x ∈ POS(D

j

|π

B

);

(

B) If Pr(D

j

|[x]

B

) ≤ α

j

and Pr(D

j

|[x]

B

) ≥ β

j

, decide x ∈ BND(D

j

|π

B

);

(

N) If Pr(D

j

|[x]

B

) ≤ β

j

and Pr(D

j

|[x]

B

) ≤ γ

j

, decide x ∈ NEG(D

j

|π

B

).

The conditions of rule (B) suggest that α

j

>β

j

may be a reasonable condition so that the boundary region may be

nonempty. By setting α

j

>β

j

, we obtain the following condition on the loss function:

(C2)

(λ

N

j

D

j

− λ

B

j

D

j

)

(λ

B

j

D

j

− λ

N

j

D

j

)

>

(λ

B

j

D

j

− λ

P

j

D

j

)

(λ

P

j

D

j

− λ

B

j

D

j

)

.

Conditions (C1) and (C2) imply that 1 ≥ α

j

> γ

j

>β

j

≥ 0. After tie breaking, the following simplified rules are obtained:

(P) If Pr(D

j

|[x]

B

) ≥ α

j

, decide x ∈ POS(D

j

|π

B

);

(

B) If β

j

< Pr(D

j

|[x]

B

)<α

j

, decide x ∈ BND(D

j

|π

B

);

(

N) If Pr(D

j

|[x]

B

) ≤ β

j

, decide x ∈ NEG(D

j

|π

B

).

剩余21页未读,继续阅读

资源评论

weixin_38657139

- 粉丝: 9

- 资源: 955

上传资源 快速赚钱

我的内容管理

展开

我的内容管理

展开

我的资源

快来上传第一个资源

我的资源

快来上传第一个资源

我的收益 登录查看自己的收益

我的收益 登录查看自己的收益 我的积分

登录查看自己的积分

我的积分

登录查看自己的积分

我的C币

登录后查看C币余额

我的C币

登录后查看C币余额

我的收藏

我的收藏  我的下载

我的下载  下载帮助

下载帮助

前往需求广场,查看用户热搜

前往需求广场,查看用户热搜最新资源

资源上传下载、课程学习等过程中有任何疑问或建议,欢迎提出宝贵意见哦~我们会及时处理!

点击此处反馈

安全验证

文档复制为VIP权益,开通VIP直接复制

信息提交成功

信息提交成功