


Action recognition using linear dynamic systems

Haoran Wang (a,b), Chunfeng Yuan (b), Guan Luo (b), Weiming Hu (b,*), Changyin Sun (a)

(a) School of Automation, Southeast University, Nanjing, China

(b) National Laboratory of Pattern Recognition, Institute of Automation, CAS, Beijing, China

article info

Article history:

Received 20 April 2012

Received in revised form 26 November 2012

Accepted 1 December 2012

Available online 12 December 2012

Keywords:

Linear dynamic system

Kernel principal angle

Multiclass spectral clustering

Supervised codebook pruning

Action recognition

abstract

In this paper, we propose a novel approach based on Linear Dynamic Systems (LDSs) for action recognition. Our main contributions are two-fold. First, we introduce LDSs to action recognition. LDSs describe dynamic textures, which exhibit certain stationarity properties in time. We adopt them to model the spatiotemporal patches extracted from a video sequence, because a spatiotemporal patch is more analogous to a linear time-invariant system than the whole video sequence is. Notably, LDSs do not live in a Euclidean space. We therefore adopt the kernel principal angle to measure the similarity between LDSs, and then use multiclass spectral clustering to generate the codebook for the bag of features representation. Second, we propose a supervised codebook pruning method that preserves the discriminative visual words and suppresses the noise in each action class. The visual words that maximize the inter-class distance and minimize the intra-class distance are selected for classification. Our approach yields state-of-the-art performance on three benchmark datasets. In particular, experiments on the challenging UCF Sports and Feature Films datasets demonstrate the effectiveness of the proposed approach in realistic, complex scenarios.

© 2012 Elsevier Ltd. All rights reserved.

1. Introduction

Automatic recognition of human actions in videos is useful for surveillance, content-based summarization, and human–computer interaction applications. Yet it remains a challenging problem. In recent years, a large number of researchers have addressed this problem, as evidenced by several survey papers [1–4].

Action representation is important for action recognition. Existing representations include appearance-based [5,40], shape-based [6,41], optical-flow-based [7,42], volume-based [8,43] and interest-point-based [9,44] representations. Among them, methods that combine local interest point features with the bag of visual words model are very popular, owing to their simple implementation and good performance. Bag of visual words approaches are robust to noise, occlusion and geometric variation, and they do not require reliable tracking of a particular subject. Despite recent developments, the representation of local regions in videos is still an open research problem.
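To make the bag of visual words model concrete, the following is a minimal sketch. It assumes Euclidean local descriptors and an already computed codebook (one codeword per row). Each descriptor is hard-assigned to its nearest codeword, and a video is summarized by the normalized histogram of these assignments. The function name `bow_histogram` is hypothetical and not taken from the paper.

```python
import numpy as np

def bow_histogram(descriptors: np.ndarray, codebook: np.ndarray) -> np.ndarray:
    """Return an L1-normalized histogram of nearest-codeword assignments.

    descriptors: (n, d) local features extracted from one video.
    codebook:    (k, d) visual words, e.g. cluster centres.
    """
    # Squared Euclidean distance between every descriptor and every codeword.
    d2 = ((descriptors[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=2)
    # Hard assignment: each descriptor votes for its closest visual word.
    words = d2.argmin(axis=1)
    hist = np.bincount(words, minlength=len(codebook)).astype(float)
    total = hist.sum()
    return hist / total if total > 0 else hist

if __name__ == "__main__":
    codebook = np.array([[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]])
    feats = np.array([[0.1, 0.0], [0.9, 1.2], [1.1, 0.8], [4.8, 5.1]])
    # Expected proportions: 0.25, 0.5, 0.25 for the three visual words.
    print(bow_histogram(feats, codebook))
```

The resulting fixed-length vectors can then be fed to a standard classifier, regardless of how many interest points each video produced.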

Dynamic textures are sequences of images of moving scenes that exhibit certain stationarity properties in time, such as sea waves, smoke, foliage and whirlwinds. They capture the dynamic information in the motion of objects. Doretto et al. [10] show that dynamic textures can be modeled using an LDS, borrowing tools from system identification to capture the essence of dynamic textures. Once learned, the LDS model has predictive power and can be used to extrapolate dynamic textures at negligible computational cost.
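The following is a minimal sketch of the closed-form (suboptimal) SVD-based identification commonly associated with Doretto et al. [10]. It is shown for the model x_{t+1} = A x_t + v_t, y_t = C x_t + w_t. The sketch assumes that a patch is given as a matrix whose columns are vectorized frames and that the state dimension n is chosen by hand. This section does not state which estimator the paper uses, and the names `identify_lds` and `extrapolate` are hypothetical. The second function illustrates the "predictive power" mentioned above by iterating the noise-free dynamics.

```python
import numpy as np

def identify_lds(patch, n):
    """Closed-form LDS fit for y_t = C x_t + w_t, x_{t+1} = A x_t + v_t.

    patch: (p, tau) array whose column t is the vectorized frame y_t.
    n:     state dimension, assumed n <= min(p, tau - 1).
    Returns A (n, n), C (p, n), the state sequence X (n, tau) and the mean frame.
    """
    mean = patch.mean(axis=1, keepdims=True)
    U, s, Vt = np.linalg.svd(patch - mean, full_matrices=False)
    C = U[:, :n]                        # orthonormal appearance basis
    X = np.diag(s[:n]) @ Vt[:n, :]      # state estimates, one column per frame
    # Least-squares transition matrix: X[:, 1:] ~= A @ X[:, :-1].
    A = X[:, 1:] @ np.linalg.pinv(X[:, :-1])
    return A, C, X, mean

def extrapolate(A, C, mean, x_last, steps):
    """Predict `steps` future frames by iterating the noise-free dynamics."""
    frames, x = [], x_last
    for _ in range(steps):
        x = A @ x
        frames.append(C @ x + mean[:, 0])
    return np.stack(frames, axis=1)
```

The parameters are identifiable only up to a change of state basis: A and C can be replaced by T A T^{-1} and C T^{-1} for any invertible T. This is one reason why LDSs are usually compared through basis-invariant quantities rather than raw matrix entries.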

Traditionally, LDSs are used to describe the dynamic textures of whole video sequences [11,12]. However, a video sequence is usually not a linear time-invariant system, due in part to its long time span and complex changes. Compared with a video sequence, a spatiotemporal patch is more analogous to a linear time-invariant system. Moreover, an LDS captures more dynamic information than traditional local features, and this information is important for representing moving scenes.

Several categorization algorithms have been proposed based on the LDS parameters, which live in a non-Euclidean space. Among these methods, Vishwanathan et al. [13] use Binet–Cauchy kernels to compare the parameters of two LDSs. Chan and Vasconcelos [14] use both the KL divergence and the Martin distance [12,15] as metrics between dynamic systems. Woolfe and Fitzgibbon [16] use the family of Chernoff distances, and distances between cepstrum coefficients are adopted as metrics between LDSs. These methods usually define a distance between the model parameters of two dynamic systems. Once such a metric has been defined, classifiers such as nearest neighbors or support vector machines can be used to categorize a query video sequence based on the training data. However, all of the above approaches address supervised classification, so they are not suitable for codebook generation in the bag of words representation.
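One common way to compare two LDSs, in the spirit of the Martin distance cited above, uses principal angles. These are the angles between the column spaces of the extended observability matrices O_m = [C; CA; ...; CA^{m-1}]. The sketch below makes several assumptions. It uses a finite horizon m, whereas the Martin distance is defined with infinite observability matrices. It also ignores noise statistics and works in the original (non-kernel) space. The kernel principal angle [20] adopted later in the paper instead computes such angles in a kernel feature space. All function names are hypothetical.

```python
import numpy as np

def observability(A, C, m):
    """Stack C, CA, ..., CA^(m-1) into an (m*p, n) extended observability matrix."""
    blocks, M = [], C
    for _ in range(m):
        blocks.append(M)
        M = M @ A
    return np.vstack(blocks)

def principal_angle_cosines(M1, M2):
    """Cosines of the principal angles between the column spaces of M1 and M2."""
    Q1, _ = np.linalg.qr(M1)            # orthonormal basis of span(M1)
    Q2, _ = np.linalg.qr(M2)            # orthonormal basis of span(M2)
    s = np.linalg.svd(Q1.T @ Q2, compute_uv=False)
    return np.clip(s, 0.0, 1.0)         # guard against rounding above 1

def martin_like_distance(lds1, lds2, m=5):
    """Finite-horizon Martin-style distance: -log prod_i cos^2(theta_i).

    lds1, lds2: (A, C) pairs with the same state dimension.
    """
    c = principal_angle_cosines(observability(*lds1, m), observability(*lds2, m))
    return float(-np.sum(np.log(np.maximum(c, 1e-12) ** 2)))
```

This distance depends only on the observability subspace, so it is unchanged under a change of state basis. The product of squared cosines, exp(-d), can serve as a similarity in [0, 1].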

Contents lists available at SciVerse ScienceDirect

journal homepage: www.elsevier.com/locate/pr

Pattern Recognition

0031-3203/$ - see front matter © 2012 Elsevier Ltd. All rights reserved.

http://dx.doi.org/10.1016/j.patcog.2012.12.001

* Corresponding author. Tel.: +86 13910900826.

E-mail address: wmhu@nlpr.ia.ac.cn (W. Hu).

Pattern Recognition 46 (2013) 1710–1718

Dictionary learning is still an open problem. Successful extraction of good features from videos is crucial to action recognition. Several studies [17–19] have sought to extract discriminative visual words for classification. In a codebook, some visual words are discriminative, while others are noise that harms classification. An effective dictionary learning method, which selects the discriminative visual words for classification, can improve recognition accuracy.
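As an illustration of selecting discriminative visual words, the sketch below scores each word by the ratio of between-class to within-class variance of its histogram value across training videos. It then keeps the top-scoring words, in the sense of "large inter-class distance, small intra-class distance". This Fisher-style score is a stand-in chosen for illustration. The supervised pruning criterion proposed in Section 4 may differ. `fisher_word_scores` and `prune_codebook` are hypothetical names.

```python
import numpy as np

def fisher_word_scores(H, labels, eps=1e-12):
    """Score visual words by between-class / within-class variance.

    H:      (n_videos, k) bag of words histograms of training videos.
    labels: (n_videos,) integer action labels.
    """
    labels = np.asarray(labels)
    overall = H.mean(axis=0)
    between = np.zeros(H.shape[1])
    within = np.zeros(H.shape[1])
    for c in np.unique(labels):
        Hc = H[labels == c]
        mu_c = Hc.mean(axis=0)
        between += len(Hc) * (mu_c - overall) ** 2   # spread of class means
        within += ((Hc - mu_c) ** 2).sum(axis=0)     # spread inside each class
    return between / (within + eps)

def prune_codebook(H, labels, keep):
    """Return the indices of the `keep` highest-scoring visual words."""
    scores = fisher_word_scores(H, labels)
    return np.argsort(scores)[::-1][:keep]
```

Scoring is a single pass over the histograms, so its cost grows linearly with the number of videos and visual words.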

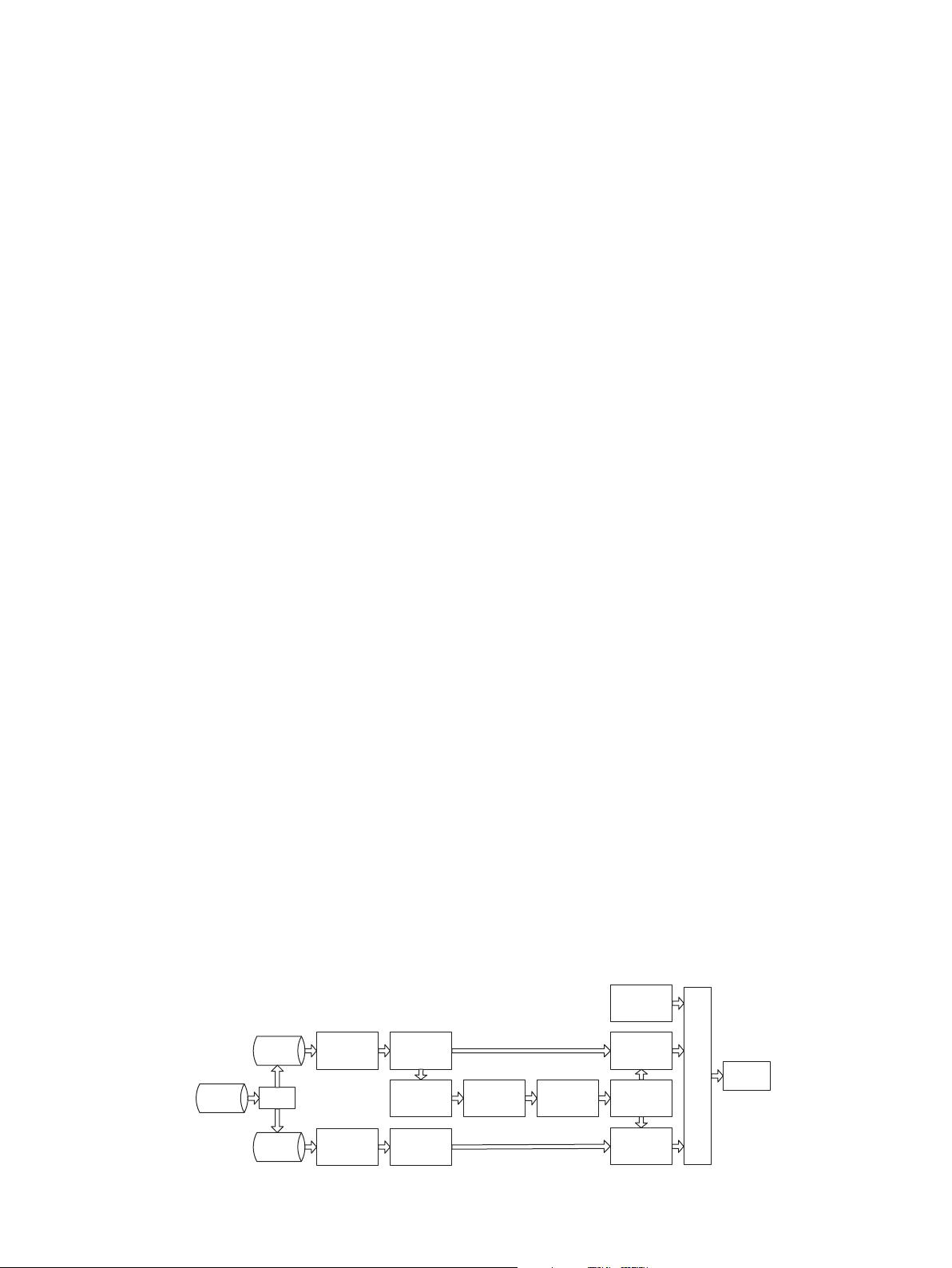

In this paper, we introduce Linear Dynamic Systems to action recognition. We replace the traditional gradient and optical flow features of interest points with LDSs. An LDS describes the temporal evolution in a spatiotemporal patch, which is analogous to a linear time-invariant system, and it captures more dynamic information than traditional local features. We therefore use the LDS as the local descriptor, which lives in a non-Euclidean space. To obtain the codebook for the bag of features representation, existing methods typically cluster local features by K-means. However, K-means does not fit a non-Euclidean space, because it computes cluster centres as arithmetic means of vectors. In [15], the high-dimensional non-Euclidean space is mapped to a low-dimensional Euclidean space, and a clustering algorithm suitable for Euclidean space is then used to generate the codebook. However, this transformation is an approximation and is not optimal. In our method, we adopt the kernel principal angle [20] to measure the similarity between LDSs, and then use multiclass spectral clustering [21–23] to compute the codebook of LDSs. As a discriminative approach, spectral clustering makes no assumptions about the global structure of the data. Its appeal lies in its globally optimal solution in the relaxed continuous domain and its nearly globally optimal solution in the discrete domain. Not all visual words in the codebook are discriminative for classification. To extract the discriminative visual words and remove the noise in each action class, we propose a supervised codebook pruning method. The algorithm is effective and has linear complexity. Furthermore, it is fairly general and can be applied to many other codebook-based problems. Fig. 1 shows the flowchart of our framework.
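Because LDSs have no Euclidean mean, clustering must work from pairwise similarities alone. The sketch below builds a codebook from a precomputed similarity matrix W, for instance one derived from principal angles between LDSs. It uses the standard normalized spectral embedding followed by a small deterministic k-means. This is a simplified substitute for the multiclass spectral clustering of [21–23], whose discretization step differs. Representing each visual word by its cluster medoid is also an assumption. `spectral_codebook` is a hypothetical name.

```python
import numpy as np

def spectral_codebook(W, k, n_iter=100):
    """Normalized spectral clustering on a precomputed similarity matrix.

    W: (n, n) symmetric, non-negative similarities with positive row sums.
    k: number of visual words (clusters).
    Returns (labels, medoids): a cluster index per item and, per cluster, the
    index of the member with the largest total within-cluster similarity
    (None if the cluster ends up empty).
    """
    d_inv_sqrt = 1.0 / np.sqrt(W.sum(axis=1))
    L = d_inv_sqrt[:, None] * W * d_inv_sqrt[None, :]   # D^-1/2 W D^-1/2
    _, vecs = np.linalg.eigh(L)                          # eigenvalues ascending
    U = vecs[:, -k:]                                     # top-k eigenvectors
    U = U / np.linalg.norm(U, axis=1, keepdims=True)     # rows onto unit sphere

    # Deterministic farthest-point initialization, then Lloyd (k-means) steps.
    centers = [U[0]]
    for _ in range(1, k):
        dist = np.min([((U - c) ** 2).sum(axis=1) for c in centers], axis=0)
        centers.append(U[dist.argmax()])
    centers = np.array(centers)
    for _ in range(n_iter):
        labels = ((U[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2).argmin(axis=1)
        new = np.array([U[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
                        for j in range(k)])
        if np.allclose(new, centers):
            break
        centers = new

    medoids = []
    for j in range(k):
        members = np.flatnonzero(labels == j)
        if members.size == 0:
            medoids.append(None)
            continue
        within = W[np.ix_(members, members)].sum(axis=1)
        medoids.append(int(members[within.argmax()]))
    return labels, medoids
```

A new LDS can then be quantized by assigning it to the medoid with which it has the highest similarity. This requires only pairwise similarities, never a mean of LDSs.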

The remainder of this paper is organized as follows. Section 2 reviews related approaches to action recognition. Section 3 introduces the LDS-based codebook formation. Section 4 proposes the supervised codebook pruning method. Section 5 presents the experimental results. Section 6 concludes the paper.

2. Related work

We review related work on interest-point-based representations and dictionary learning methods for action recognition.

2.1. Interest-point-based representations

Much recent work has demonstrated the effectiveness of interest-point-based representations on action recognition tasks. Dollár et al. [34] evaluate local descriptors based on normalized pixel values, brightness gradients and windowed optical flow for action recognition. Experiments on three datasets (KTH human actions, facial expressions and mouse behavior) show that gradient descriptors achieve excellent results. These descriptors are computed by concatenating all gradient vectors in a region or by building histograms of gradient components. Because they are based primarily on gradient magnitudes, they are sensitive to illumination changes. Laptev and Lindeberg [35] investigate single-scale and multi-scale N-jets, histograms of optical flow, and histograms of gradients as local descriptors for video sequences. The best performance is obtained with optical flow and spatio-temporal gradients. Instead of quantizing the gradient orientations directly, they quantize each component of the gradient vector separately. In later work, Laptev et al. [9,36] apply a coarse quantization to gradient orientations. Because only spatial gradients are used there, histogram features based on optical flow are employed to capture the temporal information. However, optical flow is rather expensive to compute, and the result depends on the regularization method [37]. Kläser et al. [38] build a descriptor from pure spatio-temporal 3D gradients, which are robust and cheap to compute. They perform orientation quantization with up to 20 bins by using regular polyhedra. However, this descriptor is still computed by building histograms of gradient components, and it ignores the spatiotemporal information.
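To make "building histograms of gradient components" with a coarse orientation quantization concrete, the following is a minimal sketch. It computes finite-difference spatial gradients of a space-time patch, quantizes their orientations into a few bins, and accumulates magnitude-weighted votes. The bin count of 8, the single cell and the L2 normalization are assumptions. The sketch is not the exact descriptor of [9,36] or [38]. `orientation_histogram` is a hypothetical name.

```python
import numpy as np

def orientation_histogram(patch, n_bins=8):
    """Magnitude-weighted histogram of spatial gradient orientations.

    patch: (T, H, W) grey-level space-time volume (at least 2 samples per axis).
    """
    # np.gradient returns one derivative array per axis: (d/dt, d/dy, d/dx).
    _, gy, gx = np.gradient(patch.astype(float))
    mag = np.hypot(gx, gy)
    ang = np.mod(np.arctan2(gy, gx), 2 * np.pi)          # orientation in [0, 2*pi)
    # Coarse quantization; the minimum() guards against rounding up to 2*pi.
    bins = np.minimum((ang / (2 * np.pi) * n_bins).astype(int), n_bins - 1)
    hist = np.bincount(bins.ravel(), weights=mag.ravel(), minlength=n_bins)
    norm = np.linalg.norm(hist)
    return hist / norm if norm > 0 else hist
```

Because the votes are weighted by gradient magnitude, scaling the image contrast rescales the raw histogram. The final normalization removes a global contrast factor but not local illumination changes.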

The strategy of generating compound neighborhood-based features, initially explored for static images and object recognition [39–41], has been extended to videos. One approach subdivides the space–time volume globally using a coarse grid of histogram bins [9,42–44]. Another approach places a grid around each raw interest point and designs a new representation from the positions of the interest points that fall within the grid cells surrounding that central point [29]. Earlier methods compute a feature for each interest point. By contrast, neighborhood-based features use the distribution of interest points as the descriptor.
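The grid-around-a-point idea of [29] can be sketched as follows. The sketch counts how many interest points fall into each cell of a small space-time grid centred on a reference point. The 3×3×3 layout and the cell sizes are illustrative assumptions, not the parameters of [29]. `neighborhood_grid_feature` is a hypothetical name.

```python
import numpy as np

def neighborhood_grid_feature(points, center, cell=(10.0, 10.0, 5.0), cells=3):
    """Count interest points per cell of a cells x cells x cells grid.

    points: (n, 3) array of (x, y, t) interest-point coordinates; the centre
            point itself is counted too if it appears in `points`.
    center: (3,) coordinates of the reference interest point.
    cell:   cell extent along x, y and t.
    """
    offsets = (np.asarray(points, float) - np.asarray(center, float)) / np.asarray(cell)
    # Shift so that the central cell covers offsets in [-0.5, 0.5) cell units.
    idx = np.floor(offsets + cells / 2.0).astype(int)
    inside = np.all((idx >= 0) & (idx < cells), axis=1)
    hist = np.zeros((cells, cells, cells), dtype=int)
    for i, j, k in idx[inside]:
        hist[i, j, k] += 1
    return hist.ravel()
```

Points outside the grid are simply ignored. The descriptor therefore encodes only the local spatial and temporal layout of nearby interest points, not their appearance.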

2.2. Dictionary learning

To the best of our knowledge, little work on dictionary learning has been reported for action recognition. Liu and Shah [17] propose an approach that automatically discovers the optimal number of visual word clusters by maximizing mutual information. In later work, Liu et al. [19] use PageRank to mine the most informative static features. To further construct compact yet discriminative visual vocabularies, they employ a divisive information-theoretic algorithm to group semantically related features. However, their formulation is intractable and requires approximation, so it may not learn the optimal dictionary. Brendel and Todorovic [18] store multiple diverse exemplars per activity class and learn a sparse dictionary of the most discriminative exemplars. However, the exemplars are extracted based on human-body postures which are difficult to

[Fig. 1 flowchart. Components: dataset split into training videos and test videos; interest point detection; LDS features; kernel principal angles; multiclass spectral clustering; dictionary pruning; codebook; histograms of BOF; SVM; ground truth; evaluation.]

Fig. 1. Flowchart of the proposed framework.

