SC17, November 12–17, 2017, Denver, CO, USA Siyang Li, Youyou Lu, Jiwu Shu, Yang Hu, and Tao Li

performance bottleneck is caused by the network latency among different nodes. Recent distributed file systems distribute metadata either to data servers [13, 26, 40, 47] or to a metadata server cluster (MDS cluster) [11, 31, 40, 52] to scale the metadata service. In such distributed metadata services, a metadata operation may need to access multiple server nodes. Because these accesses have to be atomic [9, 26, 48, 51] or performed in the correct order [27] to keep consistency, a file operation needs to traverse different server nodes or access a single node many times. Under such circumstances, the network latency can severely impact the inter-node access performance of the distributed metadata service.
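To make the cost of multi-node access concrete, the following is a minimal sketch of how sequential, component-by-component path resolution turns into network round trips. The placement table, the server names, and the one-round-trip-per-component cost model are all assumptions made for illustration; they are not taken from any of the cited systems.

```python
# Hypothetical placement of directory inodes on metadata servers (illustrative only).
PLACEMENT = {"/": "n1", "/a": "n2", "/a/b": "n3", "/a/b/c": "n2"}


def path_prefixes(path):
    """Return every ancestor of `path`, from the root down to the path itself."""
    parts = [p for p in path.split("/") if p]
    prefixes = ["/"]
    for i in range(1, len(parts) + 1):
        prefixes.append("/" + "/".join(parts[:i]))
    return prefixes


def count_round_trips(path, placement):
    """Return (sequential requests, distinct servers contacted) for one lookup."""
    servers = [placement[p] for p in path_prefixes(path)]
    return len(servers), len(set(servers))


def lookup_latency_us(path, placement, rtt_us):
    """Estimate network latency, assuming one round trip per path component."""
    requests, _ = count_round_trips(path, placement)
    return requests * rtt_us
```

Under this simplified model, the latency of a lookup grows with the depth of the path rather than with the amount of metadata actually needed, which is the effect the paragraph above describes.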

Our goal in this paper is two-fold: (1) to reduce the network latency within metadata operations; (2) to fully utilize the KV store's performance benefits. Our key idea is to reduce the dependencies among file system metadata (i.e., the logical organization of file system metadata), ensuring that an important operation communicates with only one or two metadata servers during its life cycle.

To this end, we propose LocoFS, a loosely-coupled metadata service in a distributed file system, to reduce network latency and improve utilization of the KV store. LocoFS first cuts the file metadata (i.e., file inode) from the directory tree. These file metadata are organized independently and form a flat space, where the dirent-inode relationship for files is kept with the file inode in the form of a reverted index. This flattened directory tree structure matches the KV access patterns better. LocoFS also divides the file metadata into two parts: an access part and a content part. This partition of the file metadata further improves the utilization of KV stores for operations that use only part of the metadata. In such ways, LocoFS reorganizes the file system directory tree with reduced dependency, enabling higher efficiency in KV-based accesses. Our contributions are summarized as follows:

(1) We propose a flattened directory tree structure to decouple the file metadata and directory metadata. The flattened directory tree reduces dependencies among metadata, resulting in lower latency.

(2) We further decouple the file metadata into two parts to make their accesses KV-friendly. This separation further improves file system metadata performance on KV stores.

(3) We implement and evaluate LocoFS. Evaluations show that LocoFS achieves 100K IOPS for file create and mkdir when using one metadata server, achieving 38% of the KV store's performance. LocoFS also achieves low latency and maintains scalable and stable performance.
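The first two contributions can be illustrated with a toy in-memory model. This is only a sketch under stated assumptions, not the LocoFS implementation: the class name `FlatMetadataStore`, the key format (parent directory UUID plus file name), and the assignment of fields to the access and content parts are all hypothetical, and plain Python dictionaries stand in for the KV stores.

```python
import uuid


class FlatMetadataStore:
    """Toy model of a flattened directory tree; dicts stand in for KV stores."""

    def __init__(self):
        # Directory metadata, keyed by full path (assumed layout).
        self.dirs = {"/": {"uuid": uuid.uuid4().hex}}
        # File metadata lives in a flat space, keyed by (parent dir uuid, name).
        # The parent uuid inside the key is the file -> directory back-pointer.
        self.file_access = {}   # assumed access part: mode, uid, gid
        self.file_content = {}  # assumed content part: size, mtime

    def _key(self, dir_path, name):
        return (self.dirs[dir_path]["uuid"], name)

    def mkdir(self, path):
        self.dirs[path] = {"uuid": uuid.uuid4().hex}

    def create(self, dir_path, name, mode=0o644, uid=0, gid=0):
        key = self._key(dir_path, name)
        self.file_access[key] = {"mode": mode, "uid": uid, "gid": gid}
        self.file_content[key] = {"size": 0, "mtime": 0}
        return key

    def chmod(self, dir_path, name, mode):
        # Only the access part is read and written.
        self.file_access[self._key(dir_path, name)]["mode"] = mode

    def write_update(self, dir_path, name, size, mtime):
        # Only the content part is read and written.
        self.file_content[self._key(dir_path, name)] = {"size": size, "mtime": mtime}

    def list_files(self, dir_path):
        # Files are found by scanning keys that point back to this directory.
        parent = self.dirs[dir_path]["uuid"]
        return sorted(n for (p, n) in self.file_access if p == parent)
```

In this sketch, `chmod` never touches the content part and `write_update` never touches the access part, so each operation reads and writes a smaller value. A real KV store would replace the key scan in `list_files` with a prefix or range query.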

The rest of this paper is organized as follows. Section 2 discusses the implication of directory structure in distributed file systems and the motivation of this paper. Section 3 describes the design and implementation of the proposed loosely-coupled metadata service, LocoFS. It is evaluated in Section 4. Related work is given in Section 5, and the conclusion is made in Section 6.

2 MOTIVATION

In this section, we first demonstrate the huge performance gap between distributed file system (DFS) metadata and key-value (KV) stores. We then explore the design of the current DFS directory tree to identify the performance bottlenecks caused by latency and scalability.

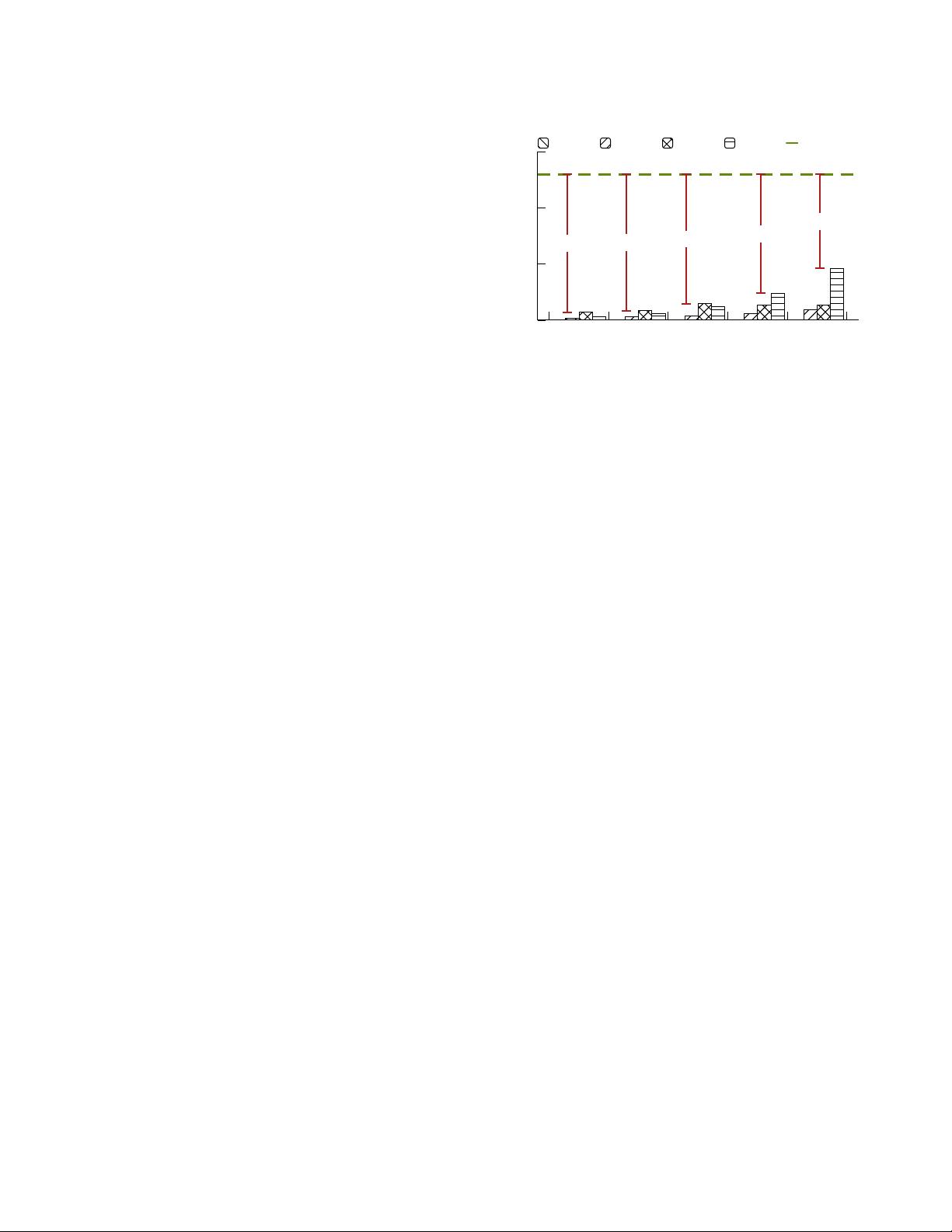

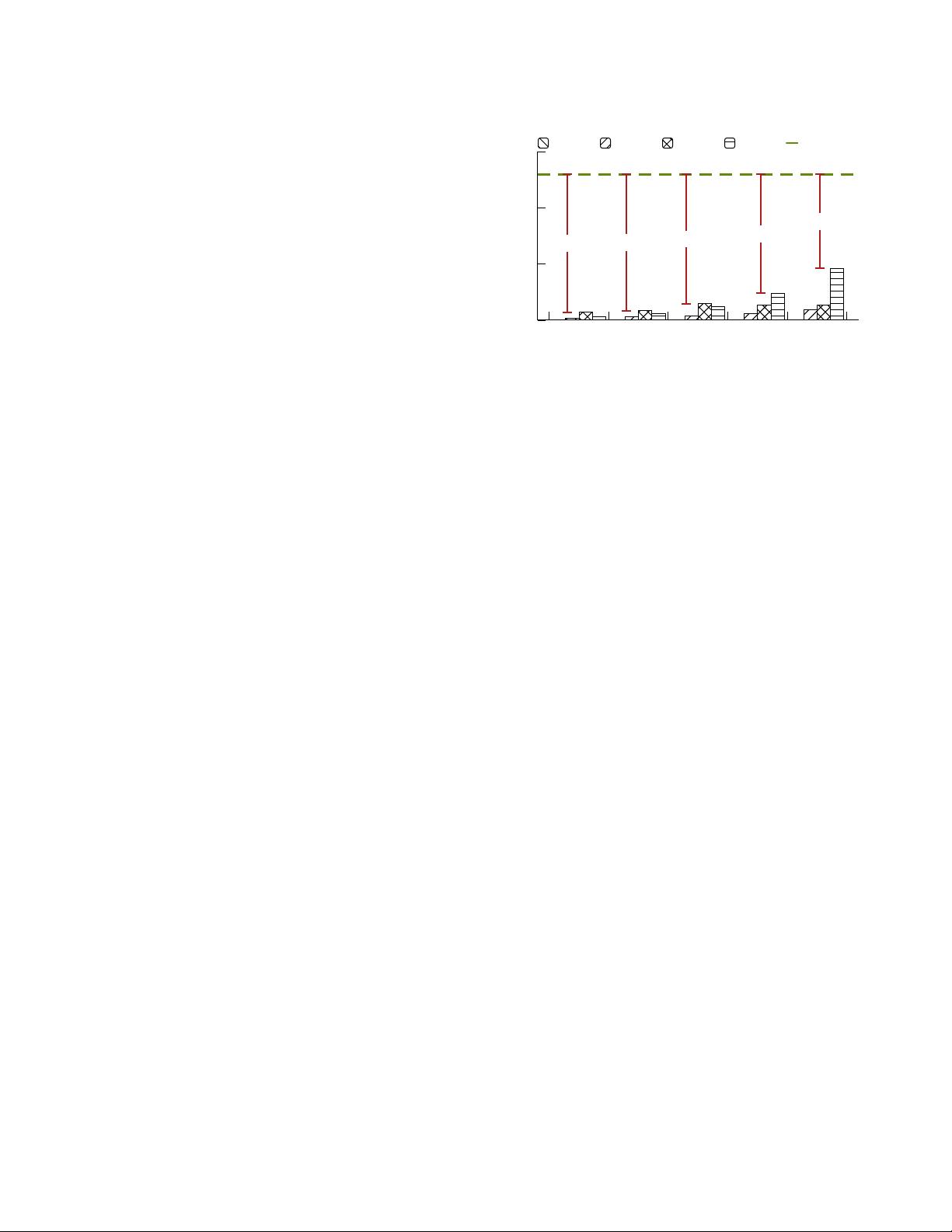

[Figure 1 plot: File Creation (OPS), 0 to 300k, versus Number of Metadata Servers (1, 2, 4, 8, 16); series: CephFS, Gluster, Lustre, IndexFS, Single-KV.]

Figure 1: Performance Gap between File System Metadata (Lustre, CephFS and IndexFS) and KV Stores (Kyoto Cabinet (Tree DB)).

2.1 Performance Gap Between FS Metadata and KV Store

There are four major schemes for metadata management in distributed file systems: the single metadata server (single-MDS) scheme (e.g., HDFS), and the multi-metadata-server (multi-MDS) schemes, namely the hash-based scheme (e.g., Gluster [13]), the directory-based scheme (e.g., CephFS [46]), and the stripe-based scheme (e.g., Lustre DNE, Giga+ [36]). Compared with the directory-based scheme, the hash-based and stripe-based schemes achieve better scalability but sacrifice locality on a single node. One reason is that the multi-MDS schemes issue multiple requests to the MDS even if these requests are located on the same server. As shown in Figure 1, compared with the KV store, the file system metadata service performs worse both with a single MDS on one node (95% IOPS degradation) and on multiple nodes (65% IOPS degradation on 16 nodes).

From Figure 1, we can also see that IndexFS, which stores metadata using LevelDB, achieves an IOPS that is only 1.6% of LevelDB [16] when using a single server. To achieve Kyoto Cabinet's performance on a single server, IndexFS needs to scale out to 32 servers. Therefore, there is still large headroom to exploit the performance benefits of key-value stores in the file system metadata service.

2.2 Problems with File System Directory Tree

We further study the file system directory tree structure to understand the underlying reasons for the huge performance gap. We find that the cross-server operations caused by strong dependencies among DFS metadata dramatically worsen the metadata performance. We identify two major problems, as discussed in the following.

2.2.1 Long Locating Latency. Distributed file systems spread metadata to multiple servers to increase the metadata processing capacity. A metadata operation needs to communicate with multiple metadata servers, and this may lead to high latency of metadata operations. Figure 2 shows an example of a metadata operation in a distributed file system with a distributed metadata service. In this example, inodes are distributed across servers n1 to n4. If there is a request to access file 6, the file system client has to access n1 first to read dir 0, and then read dir 1, 5 and 6 sequentially. When these
