648 W. Min et al.


from general visual recognition to facilitate mobile visual search. Furthermore, they released a data set for performance evaluation. Chatzilari et al. [10] performed an extensive comparative study of different recognition approaches on mobile devices by evaluating the performance of feature extraction and encoding algorithms. Compared with general visual recognition, mobile visual recognition has its own challenges:

– Limited network bandwidth. With the development of Internet communication technologies such as 4G, network bandwidth has increased quickly. However, there is still a bottleneck in many areas, especially densely populated ones where many people use mobile devices simultaneously. Many mobile visual systems extract features on the mobile side. However, the amount of visual feature data sent from the mobile side to the server must be reduced to satisfy the real-time query requirement, which is likely to degrade recognition performance. Therefore, how to send compressed features under limited network bandwidth without affecting recognition performance is a challenging problem in the mobile recognition environment.

– Limited battery power. Existing mobile devices have limited battery capacity. Sending a feature vector of the query image, rather than the image itself, saves network bandwidth and reduces the transmission cost. However, computing features on the device consumes a significant amount of battery power. This tests users' tolerance for short battery life, since recharging is usually inconvenient, especially when traveling.

– Diverse photo-taking conditions. Because of different camera configurations across mobile devices (e.g., different resolutions) and diverse indoor/outdoor conditions (e.g., varying weather), achieving robust visual recognition under these conditions is also very challenging.

To solve these problems, many existing works [13, 33, 41, 108] have developed different visual recognition methods to improve the mobile visual recognition experience. These methods directly extract visual features for image representation, including deep features [52]. To reduce the amount of data sent from the mobile device to the server, some encoding methods on the mobile side have been developed to compress the visual features, such as SURF [7], CHoG [8], and BoHB [40]. However, one shortcoming of these approaches is that they mainly analyze the content alone while ignoring the rich contextual information (e.g., GPS and time information) that is easily acquired by the mobile device and that can reduce recognition time and improve recognition performance.
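To make the bandwidth argument concrete, the following is a minimal sketch of how a real-valued descriptor can be reduced to a short binary code before transmission. It uses generic sign-of-random-projection hashing as a stand-in; it is not the SURF, CHoG, or BoHB encoding, and the descriptor length `DIM`, the code length `N_BITS`, and the noise level are assumptions chosen only for illustration.

```python
import random
import struct

DIM = 64      # assumed descriptor length (illustrative)
N_BITS = 32   # assumed length of the binary code (illustrative)


def binarize(descriptor, projections):
    """Map a real-valued descriptor to an integer holding one bit per projection.

    Each bit is the sign of the dot product with one random projection vector.
    """
    bits = 0
    for row in projections:
        dot = sum(w * x for w, x in zip(row, descriptor))
        bits = (bits << 1) | (1 if dot >= 0 else 0)
    return bits


def hamming(a, b):
    """Number of differing bits between two binary codes."""
    return bin(a ^ b).count("1")


rng = random.Random(0)  # fixed seed so client and server share the projections
projections = [[rng.gauss(0, 1) for _ in range(DIM)] for _ in range(N_BITS)]

descriptor = [rng.gauss(0, 1) for _ in range(DIM)]
noisy_view = [x + rng.gauss(0, 0.05) for x in descriptor]  # same content, small change
unrelated = [rng.gauss(0, 1) for _ in range(DIM)]          # different content

code = binarize(descriptor, projections)

raw_bytes = struct.calcsize(f"<{DIM}f")  # payload if sent as float32 values
code_bytes = N_BITS // 8                 # payload if sent as a binary code

print(f"raw descriptor: {raw_bytes} bytes, binary code: {code_bytes} bytes")
print("Hamming distance to noisy view:", hamming(code, binarize(noisy_view, projections)))
print("Hamming distance to unrelated: ", hamming(code, binarize(unrelated, projections)))
```

Under these assumptions, the code is much smaller than the raw descriptor, while similar descriptors still tend to receive similar codes. The price is a coarser similarity measure, which is the trade-off between transmission cost and recognition performance described above.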

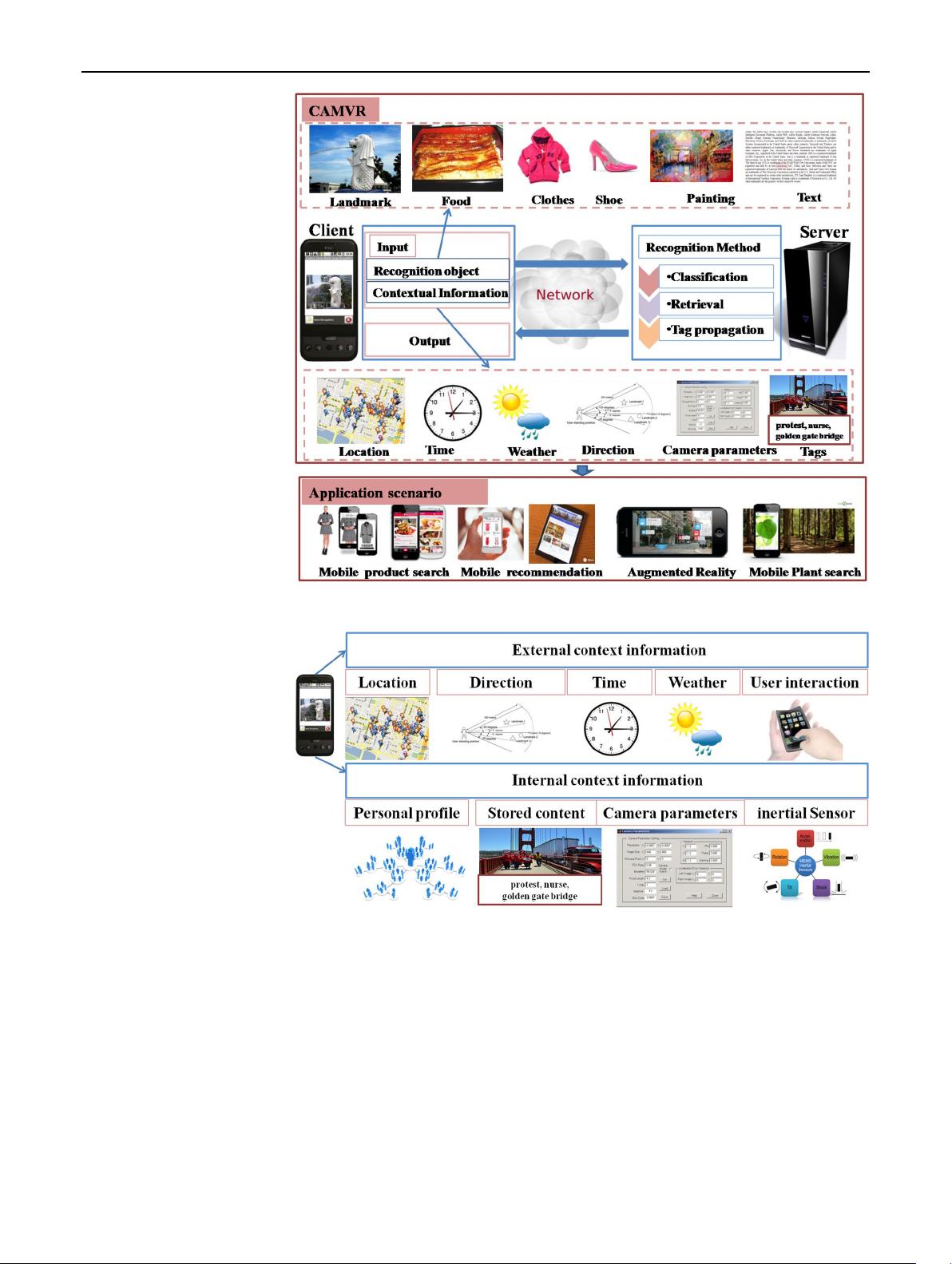

In fact, mobile devices provide a lot of contextual information, which can be categorized into two levels. One is the internal contextual information that is intrinsically contained in the mobile device, such as stored textual/visual content and the parameters of the camera and other sensors. The other is the external contextual information that can be easily acquired by the mobile device, such as time and geo-location. Researchers have exploited many of these contexts to improve recognition performance. Commonly used contexts include location, direction, time, text, gravity, acceleration, and other camera parameters. For example, in [95], content analysis is essentially restricted to a pre-defined area centered at the GPS location of the query image. Chen et al. [11] utilized GPS information to narrow the search space for landmark recognition. Ji et al. [51] designed a GPS-based location-discriminative vocabulary coding scheme, which achieves extremely low-bit-rate query transmission for mobile landmark search. Chen et al. [19, 22] combined visual information with contextual information, including location and direction, for mobile landmark recognition. Runge et al. [86] suggested tags for images using the location name and time period. Gui et al. [36] fused the outputs of inertial sensors with computer vision techniques for mobile scene recognition. In such cases, utilizing contextual information in mobile visual recognition can reduce recognition time and improve recognition performance.
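The idea of narrowing the search space with GPS can be sketched as follows. This is only an illustration of the general principle, not the method of any cited work: database entries are kept only if they lie within a fixed radius of the query location, measured with the haversine great-circle distance. The entry names, coordinates, and radius values are hypothetical.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def candidates_near(query_gps, database, radius_km):
    """Keep only the database entries within radius_km of the query location."""
    lat, lon = query_gps
    return [
        item for item in database
        if haversine_km(lat, lon, item["lat"], item["lon"]) <= radius_km
    ]


# Hypothetical geo-tagged database; coordinates are made up for illustration.
database = [
    {"name": "landmark_a", "lat": 40.0000, "lon": -75.0000},
    {"name": "landmark_b", "lat": 40.0100, "lon": -75.0300},
    {"name": "landmark_c", "lat": 41.0000, "lon": -75.0000},
]
query_gps = (40.0005, -75.0005)

nearby = candidates_near(query_gps, database, radius_km=5.0)
print([item["name"] for item in nearby])
```

Only the surviving candidates need to be compared visually with the query image, so the content analysis runs on a smaller set. The radius trades recall for speed: a small radius may exclude the correct entry when the GPS reading is inaccurate.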

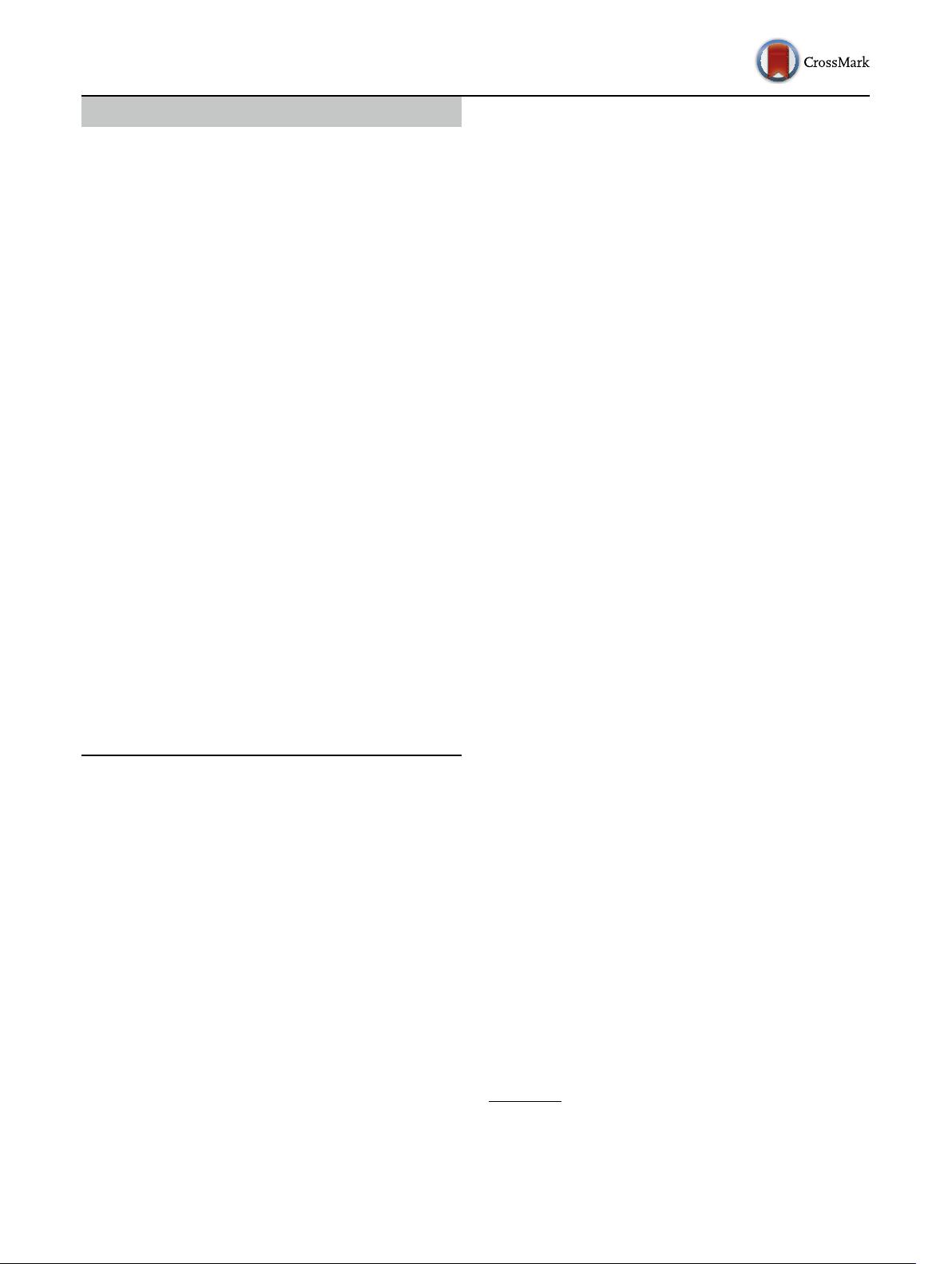

In this survey, we give a comprehensive overview of Context-Aware Mobile Visual Recognition (CAMVR). A typical pipeline for CAMVR is shown at the top of Fig. 1. On the client side, the input is the captured object (e.g., a landmark, food, clothes, or a painting) and the contextual information acquired by the mobile phone (e.g., location, time, and weather). After the input information is sent to the server, a recognition method (e.g., classification or retrieval) on the server side is selected to recognize the object, and the relevant information is returned to the user as the output. Considering the overall system, we review CAMVR from three different aspects, namely contextual information, recognition methods, and recognition types. Based on the CAMVR system, there are many potential applications (at the bottom of Fig. 1), such as mobile product search, mobile recommendation, and augmented reality.
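The client-server flow described above can be illustrated with a toy sketch. It is a simplified stand-in for the pipeline in Fig. 1, not an implementation of any surveyed system: the `Query` class, the `place` context key, the method registry, and the two-dimensional features are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Query:
    """What the client sends: visual features plus contextual information."""
    features: list
    context: dict = field(default_factory=dict)  # e.g. place, time, weather


def recognize_by_retrieval(query, candidates):
    """Return the label of the candidate closest to the query features."""
    def squared_distance(entry):
        return sum((a - b) ** 2 for a, b in zip(query.features, entry["features"]))
    return min(candidates, key=squared_distance)["label"]


# Registry of server-side recognition methods; a classifier could be added likewise.
METHODS = {"retrieval": recognize_by_retrieval}


def server_handle(query, database, method="retrieval"):
    """Use context to shrink the candidate set, then content to decide."""
    place = query.context.get("place")
    candidates = [e for e in database if place is None or e["place"] == place]
    if not candidates:          # unknown place: fall back to the full database
        candidates = database
    label = METHODS[method](query, candidates)
    return {"label": label, "candidates_searched": len(candidates)}


# Hypothetical server-side database.
database = [
    {"label": "tower", "place": "old_town", "features": [1.0, 0.0]},
    {"label": "bridge", "place": "old_town", "features": [0.0, 1.0]},
    {"label": "museum", "place": "harbor", "features": [0.9, 0.1]},
]

with_context = server_handle(
    Query(features=[0.8, 0.2], context={"place": "old_town", "time": "evening"}),
    database,
)
without_context = server_handle(Query(features=[0.8, 0.2]), database)
print(with_context)
print(without_context)
```

In this toy setting the two queries carry identical visual features but receive different answers, because the context removes a visually similar entry from another place and reduces the number of candidates searched.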

The rest of the survey is organized as follows: In Sect. 2 through Sect. 4, we survey the state-of-the-art approaches of CAMVR according to different contextual information, different recognition methods, and different recognition types, respectively. In Sect. 5, we introduce various application scenarios based on CAMVR. Finally, we conclude the paper with a discussion of future research directions in Sect. 6.
