没有合适的资源?快使用搜索试试~ 我知道了~

温馨提示

Efficiently Compiling Efficient Query Plans for Modern HardwareThomas Neumann Technische Universität MünchenMunich, Germanyneumann@in.tum.deABSTRACT As main memory grows, query performance is more and more determined by the raw CPU costs of query processing itself. The classical iterator style query processing technique is very simple and flexible, but shows poor performance on modern CPUs due to lack of locality and frequent instruction mis- predictions. Several techniques like batch oriented

资源推荐

资源详情

资源评论

Efficiently Compiling Efficient Query Plans

for Modern Hardware

Thomas Neumann

Technische Universit

¨

at M

¨

unchen

Munich, Germany

neumann@in.tum.de

ABSTRACT

As main memory grows, query performance is more and more

determined by the raw CPU costs of query processing itself.

The classical iterator style query processing technique is very

simple and flexible, but shows poor performance on modern

CPUs due to lack of locality and frequent instruction mis-

predictions. Several techniques like batch oriented processing

or vectorized tuple processing have been proposed in the

past to improve this situation, but even these techniques are

frequently out-performed by hand-written execution plans.

In this work we present a novel compilation strategy that

translates a query into compact and efficient machine code

using the LLVM compiler framework. By aiming at good

code and data locality and predictable branch layout the

resulting code frequently rivals the performance of hand-

written C++ code. We integrated these techniques into the

HyPer main memory database system and show that this

results in excellent query performance while requiring only

modest compilation time.

1. INTRODUCTION

Most database systems translate a given query into an

expression in a (physical) algebra, and then start evaluating

this algebraic expression to produce the query result. The

traditional way to execute these algebraic plans is the iterator

model [8], sometimes also called Volcano-style processing [4]:

Every physical algebraic operator conceptually produces a

tuple stream from its input, and allows for iterating over this

tuple stream by repeatedly calling the next function of the

operator.

This is a very nice and simple interface, and allows for

easy combination of arbitrary operators, but it clearly comes

from a time when query processing was dominated by I/O

and CPU consumption was less important: First, the next

function will be called for every single tuple produced as

intermediate or final result, i.e., millions of times. Second,

the call to next is usually a virtual call or a call via a function

pointer. Consequently, the call is even more expensive than

a regular call and degrades the branch prediction of modern

Permission to make digital or hard copies of all or part of this work for

personal or classroom use is granted without fee provided that copies are

not made or distributed for profit or commercial advantage and that copies

bear this notice and the full citation on the first page. To copy otherwise, to

republish, to post on servers or to redistribute to lists, requires prior specific

permission and/or a fee. Articles from this volume were invited to present

their results at The 37th International Conference on Very Large Data Bases,

August 29th - September 3rd 2011, Seattle, Washington.

Proceedings of the VLDB Endowment, Vol. 4, No. 9

Copyright 2011 VLDB Endowment 2150-8097/11/06... $ 10.00.

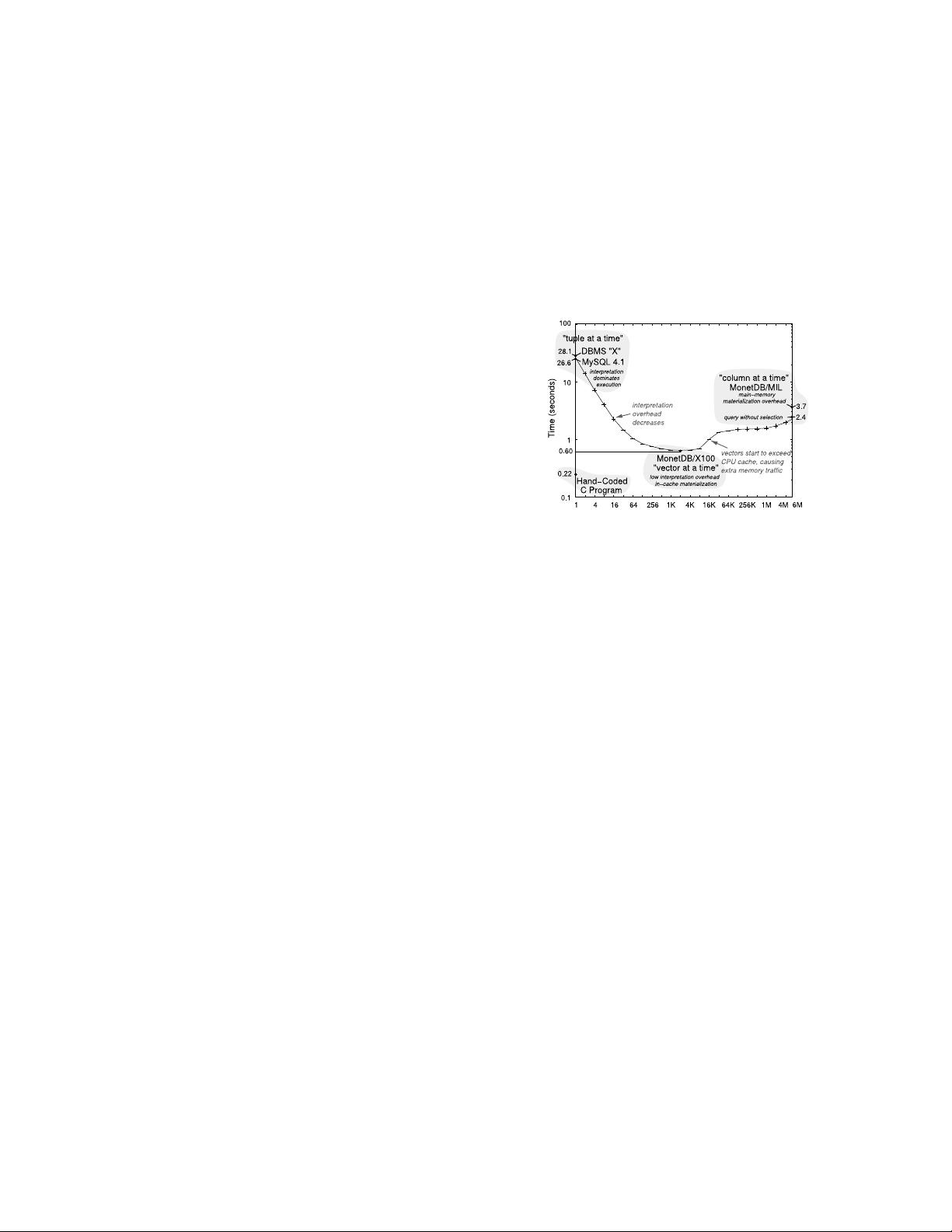

Figure 1: Hand-written code vs. execution engines

for TPC-H Query 1 (Figure 3 of [16])

CPUs. Third, this model often results in poor code locality

and complex book-keeping. This can be seen by considering

a simple table scan over a compressed relation. As the tuples

must be produced one at a time, the table scan operator has

to remember where in the compressed stream the current

tuple is and jump to the corresponding decompression code

when asked for the next tuple.

These observations have led some modern systems to a

departure from this pure iterator model, either internally

(e.g., by internally decompressing a number of tuples at

once and then only iterating over the decompressed data), or

externally by producing more than one tuple during each next

call [11] or even producing all tuples at once [1]. This block-

oriented processing amortizes the costs of calling another

operator over the large number of produced tuples, such

that the invocation costs become negligible. However, it also

eliminates a major strength of the iterator model, namely the

ability to pipeline data. Pipelining means that an operator

can pass data to its parent operator without copying or

otherwise materializing the data. Selections, for example,

are pipelining operators, as they only pass tuples around

without modifying them. But also more complex operators

like joins can be pipelined, at least on one of their input

sides. When producing more than one tuple during a call

this pure pipelining usually cannot be used any more, as the

tuples have to be materialized somewhere to be accessible.

This materialization has other advantages like allowing for

vectorized operations [2], but in general the lack of pipelining

is very unfortunate as it consumes more memory bandwidth.

An interesting observation in this context is that a hand-

written program clearly outperforms even very fast vectorized

systems, as shown in Figure 1 (originally from [16]). In a

way that is to be expected, of course, as a human might use

tricks that database management systems would never come

539

up with. On the other hand the query in this figure is a

simple aggregation query, and one would expect that there

is only one reasonable way to evaluate this query. Therefore

the existing query evaluation schemes seem to be clearly

suboptimal.

The algebraic operator model is very useful for reasoning

over the query, but it is not necessarily a good idea to exhibit

the operator structure during query processing itself. In this

paper we therefore propose a query compilation strategy that

differs from existing approaches in several important ways:

1.

Processing is data centric and not operator centric.

Data is processed such that we can keep it in CPU

registers as long as possible. Operator boundaries are

blurred to achieve this goal.

2.

Data is not pulled by operators but pushed towards

the operators. This results in much better code and

data locality.

3.

Queries are compiled into native machine code using

the optimizing LLVM compiler framework [7].

The overall framework produces code that is very friendly to

modern CPU architectures and, as a result, rivals the speed

of hand-coded query execution plans. In some cases we can

even outperform hand-written code, as using the LLVM as-

sembly language allows for some tricks that are hard to do in

a high-level programming language like C++. Furthermore,

by using an established compiler framework, we benefit from

future compiler, code optimization, and hardware improve-

ments, whereas other approaches that integrate processing

optimizations into the query engine itself will have to update

their systems manually. We demonstrate the impact of these

techniques by integrating them into the HyPer main-memory

database management system [5] and performing various

comparisons with other systems.

The rest of this paper is structured as follows: We first

discuss related work in Section 2. We then explain the overall

architecture of our compilation framework in Section 3. The

actual code generation for algebraic operators is discussed in

more details in Section 4. We explain how different advanced

processing techniques can be integrated into the framework

in Section 5. We then show an extensive evaluation of our

techniques in Section 6 and draw conclusions in Section 7.

2. RELATED WORK

The classical iterator model for query evaluation was pro-

posed quite early [8], and was made popular by the Volcano

system [4]. Today, it is the most commonly used execution

strategy, as it is flexible and quite simple. As long as query

processing was dominated by disk I/O the iterator model

worked fine. However, as the CPU consumption became an

issue, some systems tried to reduce the high calling costs

of the iterator model by passing blocks of tuples between

operators [11]. This greatly reduces the number of function

invocations, but causes additional materialization costs.

Modern main-memory database systems look at the prob-

lem again, as for them CPU costs is a critical issue. The

MonetDB system [1, 9] goes to the other extreme, and mate-

rializes all intermediate results, which eliminates the need

to call an input operator repeatedly. Besides simplifying

operator interaction, materialization has other advantages,

too, but it also causes significant costs. The MonetDB/X100

system [1] (which evolved into VectorWise) selected a mid-

dle ground by passing large vectors of data and evaluating

queries in a vectorized manner on each chunk. This offers

excellent performance, but, as shown in Figure 1, still does

not reach the speed of hand-written code.

Another way to improve query processing is to compile

the query into some kind of executable format, instead of

using interpreter structures. In [13] the authors proposed

compiling the query logic into Java Bytecode, which allows

for using the Java JVM. However this is relatively heavy

weight, and they still use the iterator model, which limits

the benefits. Recent work on the HIQUE system proposed

compiling the query into C code using code templates for

each operator [6]. HIQUE eliminates the iterator model by

inlining result materialization inside the operator execution.

However, contrary to our proposal, the operator boundaries

are still clearly visible. Furthermore, the costs of compiling

the generated C code are quite high [6].

Besides these more general approaches, many individual

techniques have been proposed to speed up query processing.

One important line of work is reducing the impact of branch-

ing, where [14] showed how to combine conjunctive predicates

such that the trade-off between number of branches and num-

ber of evaluated predicates is optimal. Other work has looked

at processing individual expressions more efficiently by using

SIMD instructions [12, 15].

3. THE QUERY COMPILER

3.1 Query Processing Architecture

We propose a very different architecture for query process-

ing (and, accordingly, for query compilation). In order to

maximize the query processing performance we have to make

sure that we maximize data and code locality. To illustrate

this point, we first give a definition of pipeline-breaker that

is more restrictive than in standard database systems: An

algebraic operator is a pipeline breaker for a given input side

if it takes an incoming tuple out of the CPU registers. It is

a full pipeline breaker if it materializes all incoming tuples

from this side before continuing processing.

This definition is slightly hand-waving, as a single tuple

might already be too large to fit into the available CPU

registers, but for now we pretend that we have a sufficient

number of registers for all input attributes. We will look

at this in more detail in Section 4. The main point is that

we consider spilling data to memory as a pipeline-breaking

operation. During query processing, all data should be kept

in CPU registers as long as possible.

Now the question is, how can we organize query processing

such that the data can be kept in CPU registers as long as

possible? The classical iterator model is clearly ill-suited

for this, as tuples are passed via function calls to arbitrary

functions – which always results in evicting the register

contents. The block-oriented execution models have fewer

passes across function boundaries, but they clearly also break

the pipeline as they produce batches of tuples beyond register

capacity. In fact any iterator-style processing paradigm that

pulls data up from the input operators risks breaking the

pipeline, as, by offering an iterator-base view, it has to offer

a linearized access interface to the output of an arbitrarily

complex relational operator. Sometimes operators could

produce a certain small number of output tuples together

cheaply, without need for copying.

We therefore reverse the direction of data flow control.

Instead of pulling tuples up, we push them towards the con-

sumer operators. While pushing tuples, we continue pushing

until we reach the next pipeline-breaker. As a consequence,

540

剩余11页未读,继续阅读

资源评论

weixin_38530536

- 粉丝: 4

- 资源: 970

上传资源 快速赚钱

我的内容管理

展开

我的内容管理

展开

我的资源

快来上传第一个资源

我的资源

快来上传第一个资源

我的收益 登录查看自己的收益

我的收益 登录查看自己的收益 我的积分

登录查看自己的积分

我的积分

登录查看自己的积分

我的C币

登录后查看C币余额

我的C币

登录后查看C币余额

我的收藏

我的收藏  我的下载

我的下载  下载帮助

下载帮助

前往需求广场,查看用户热搜

前往需求广场,查看用户热搜最新资源

- 基于Vue.js快速构建python桌面应用程序的模板项目源码+运行教程(支持打包为可执行文件).zip

- 防护具检测57-YOLO(v5至v9)、COCO、CreateML、Darknet、Paligemma、TFRecord、VOC数据集合集.rar

- 视频下载-b站视频下载器

- CSV数据操作的工具包-含合并CSV文件、Excel转CSV、CSV转XLSX、统计CSV行数、重命名表头、选择和重排CSV列等功能.zip

- App商店优化(ASO)权威指南:提高App可见度与转化率的技术策略

- Pangu-Agent: 强化学习与大型语言模型相结合的一般智能体框架

- TomVPN_3.0.7.apk

- AEC论文解读 - ACOUSTIC ECHO CANCELLATION WITH THE DUAL-SIGNAL TRANSFORMATION LSTM NETWORK

- Vegetation Studio 1.5.3

- 阀门检测49-YOLO(v5至v9)、COCO、CreateML、Darknet、Paligemma、TFRecord、VOC数据集合集.rar

资源上传下载、课程学习等过程中有任何疑问或建议,欢迎提出宝贵意见哦~我们会及时处理!

点击此处反馈

安全验证

文档复制为VIP权益,开通VIP直接复制

信息提交成功

信息提交成功