
Activation function paper: Funnel Activation for Visual Recognition

PDF, 17 pages, 2.24 MB, updated 2022-08-03

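Activation functions play a crucial role in visual recognition: they introduce non-linearity, which lets neural networks learn more complex patterns. The paper proposes Funnel Activation (FReLU), a new 2D activation function that extends ReLU and PReLU while keeping the computational overhead low.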

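ReLU (Rectified Linear Unit) is the most widely used activation function. Its expression is y = max(x, 0): it returns 0 when x is less than 0 and x itself otherwise. PReLU (Parametric ReLU) adds a learnable parameter p, giving y = max(x, px), so the negative slope can adapt to the data. However, these conventional activations ignore spatial context and cannot capture complex layouts in images.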
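The two scalar forms above can be written directly as functions. This is a minimal sketch: the slope p = 0.25 in the loop is an arbitrary illustrative value (in PReLU it is learned), and max(x, px) matches the usual piecewise definition of PReLU only when p ≤ 1.

```python
def relu(x: float) -> float:
    """ReLU: y = max(x, 0), with the hand-designed zero as the condition."""
    return max(x, 0.0)


def prelu(x: float, p: float) -> float:
    """PReLU: y = max(x, p * x), where p is a learnable slope.

    For p <= 1 this equals the piecewise form: x if x > 0 else p * x.
    """
    return max(x, p * x)


for value in (-2.0, 0.0, 3.0):
    print(value, relu(value), prelu(value, p=0.25))
```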

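FReLU (Funnel Activation) introduces a 2D spatial condition T(·), so the activation takes the form y = max(x, T(x)). T(·) is implemented with a simple convolution and models each pixel, effectively capturing complex visual structures in images. This design lets FReLU, together with regular convolution layers, handle challenging, complex images without resorting to more complex and less efficient convolution structures.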

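In experiments on ImageNet image classification, COCO object detection, and semantic segmentation, FReLU shows significant performance gains and robustness. Compared with ReLU and PReLU, FReLU better preserves and processes useful information and improves recognition accuracy, especially on images with rich detail and complex structure.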

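Another advantage of FReLU is its simple design. Although it adds pixel-wise modeling capacity, its computational cost stays low, which makes it attractive in practice. Comparisons with existing models show that FReLU with regular convolutions can reach accuracy comparable to that of complex convolution structures, a notable advantage in resource-constrained settings or applications that require efficient computation.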

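In short, Funnel Activation offers an effective and practical alternative activation for visual recognition: by introducing a 2D spatial condition, it strengthens a CNN's handling of complex image content while keeping the model efficient. The work advances activation function design and offers a new direction for optimizing deep learning models. Interested readers can learn more and use the method via the provided link.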

Funnel Activation for Visual Recognition

Ningning Ma¹, Xiangyu Zhang²*, and Jian Sun²

¹ Hong Kong University of Science and Technology

² MEGVII Technology

nmaac@cse.ust.hk, {zhangxiangyu,sunjian}@megvii.com

Abstract. We present a conceptually simple but effective funnel activation for image recognition tasks, called Funnel activation (FReLU), that extends ReLU and PReLU to a 2D activation by adding a spatial condition with negligible overhead. The forms of ReLU and PReLU are y = max(x, 0) and y = max(x, px), respectively, while FReLU takes the form y = max(x, T(x)), where T(·) is the 2D spatial condition. Moreover, the spatial condition achieves pixel-wise modeling capacity in a simple way, capturing complicated visual layouts with regular convolutions. We conduct experiments on ImageNet, COCO detection, and semantic segmentation tasks, showing significant improvements and robustness of FReLU in visual recognition tasks. Code is available at https://github.com/megvii-model/FunnelAct.

Keywords: funnel activation, visual recognition, CNN

1 Introduction

Convolutional neural networks (CNNs) have achieved state-of-the-art performance in many visual recognition tasks, such as image classification, object detection, and semantic segmentation. In the CNN framework, the two major kinds of layers are the convolution layer and the non-linear activation layer.

First, in the convolution layers, adaptively capturing spatial dependency is challenging, and many more complex and effective convolutions have been proposed to grasp the local context in images adaptively [7,18]. These advances achieve great success, especially on dense prediction tasks (e.g., semantic segmentation, object detection). Given these more complex convolutions and their less efficient implementations, a question arises: could regular convolutions achieve similar accuracy on challenging, complex images?

Second, usually right after capturing spatial dependency in a convolution

layer linearly, then an activation layer acts as a scalar non-linear transformation.

Many insightful activations have been proposed [31,14,5,25], but improving the

performance on visual tasks is challenging, therefore currently the most widely

used activation is still the Rectified Linear Unit (ReLU) [32]. Driven by the

?

Corresponding author

arXiv:2007.11824v2 [cs.CV] 24 Jul 2020

2 Ningning Ma et al.

0.5

1.0

1.5

Moreeffective

Transferbetter

Accuracy

Improvement (%)

FReLU

Swish

PReLU

Classification

Detection

Segmentation

0

ReLU

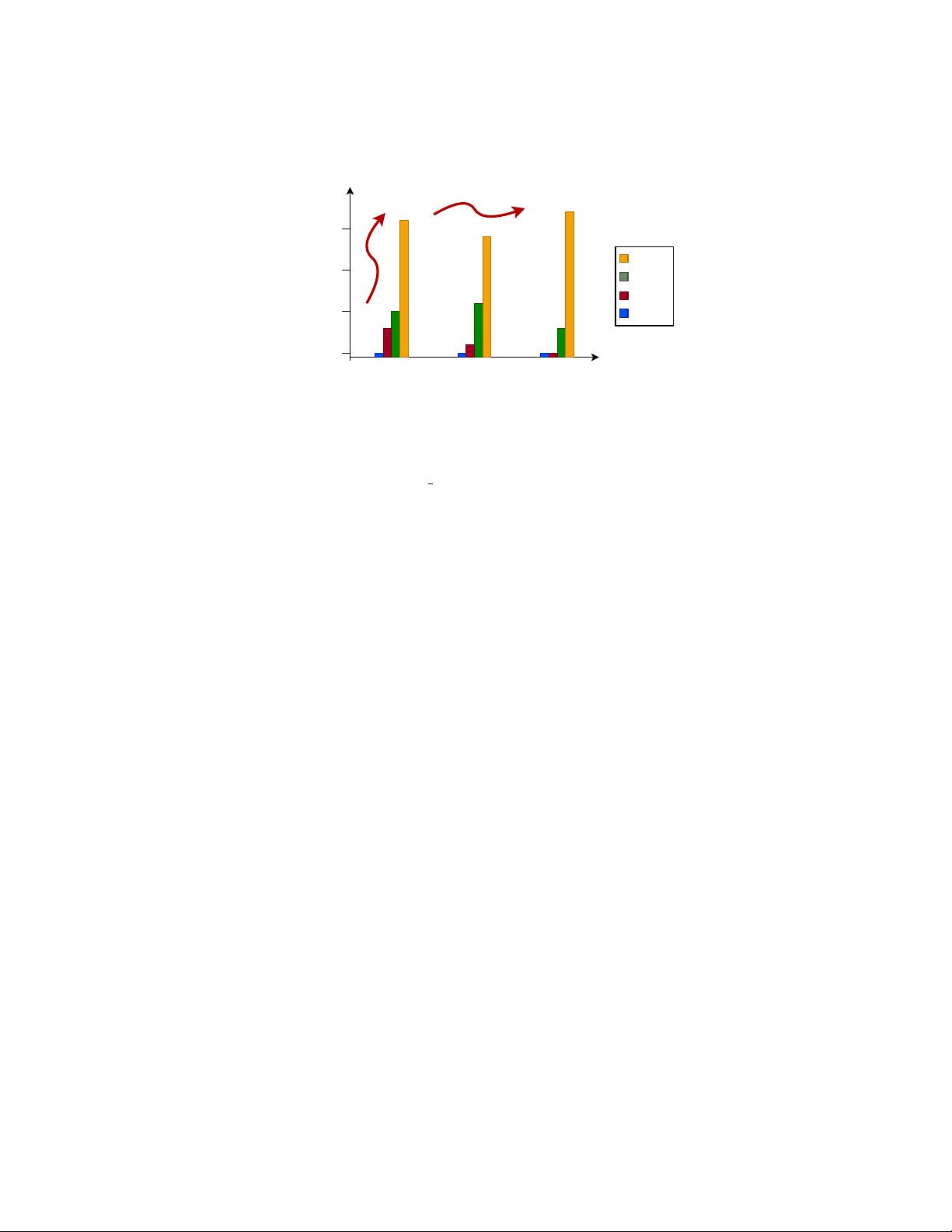

Fig. 1. Effectiveness and generalization performance. We set the ReLU network as

the baseline, and show the relative improvement of accuracy on the three basic tasks

in computer vision: image classification (Top-1 accuracy), object detection (mAP),

and semantic segmentation (mean IU). We use the ResNet-50 [15] as the backbone

pre-trained on the ImageNet dataset, to evaluate the generalization performance on

COCO and CityScape datasets. FReLU is more effective, and transfer better on all of

the three tasks.

distinct roles of the convolution layers and activation layers, another question

arises: Could we design an activation specifically for visual tasks?

To answer both questions, we show that a simple but effective visual activation, together with regular convolutions, can achieve significant improvements on both dense and sparse predictions (e.g., image classification; see Fig. 1). To obtain these results, we identify spatial insensitivity in activations as the main obstacle preventing significant improvements on visual tasks, and we propose a new visual activation that removes this barrier. In this work, we present a simple but effective visual activation that extends ReLU and PReLU to a 2D visual activation.

Spatial insensitivity remains in modern activations for visual tasks. In the popular ReLU activation, non-linearity is performed by a max(·) function whose condition is a hand-designed zero, giving the scalar form y = max(x, 0). ReLU consistently achieves top accuracy on many challenging tasks. Through a sequence of advances [31,14,5,25], many ReLU variants modify the condition in various ways and improve accuracy to some extent. However, further improvement on visual tasks remains challenging.

Our method, called Funnel activation (FReLU), extends the spirit of ReLU/PReLU by adding a spatial condition (see Fig. 2) that is simple to implement and adds only negligible computational overhead. Formally, the proposed activation has the form y = max(x, T(x)), where T(x) is a simple and efficient spatial contextual feature extractor. By using the spatial condition in the activation, FReLU extends ReLU and PReLU to a visual parametric ReLU with pixel-wise modeling capacity.


Our proposed visual activation is an efficient but much more effective alternative to previous activations. To demonstrate its effectiveness, we replace the standard ReLU in classification networks, and then use the pre-trained backbones to show its generality on the other two basic vision tasks: object detection and semantic segmentation. The results show that FReLU not only improves performance on a single task but also transfers well to other visual tasks.

2 Related Work

Scalar activations. Scalar activations have a single input and a single output, in the form y = f(x). The Rectified Linear Unit (ReLU) [13,23,32] is the most widely used scalar activation across tasks [26,38], in the form y = max(x, 0). It is simple and effective for various tasks and datasets. Many variants modify its negative part, such as Leaky ReLU [31], PReLU [14], and ELU [5]. They keep the positive part as the identity and change the negative part: a fixed small slope in Leaky ReLU, a learnable slope in PReLU, and a saturating exponential in ELU.
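The variants named above differ only in how they treat negative inputs. A minimal sketch of Leaky ReLU and ELU follows; the defaults slope = 0.01 and alpha = 1.0 are common choices assumed here, not values taken from this paper.

```python
import math


def leaky_relu(x: float, slope: float = 0.01) -> float:
    # Identity for positive inputs, fixed small slope for negative inputs.
    return x if x > 0 else slope * x


def elu(x: float, alpha: float = 1.0) -> float:
    # Identity for positive inputs; negative inputs follow alpha * (e^x - 1),
    # which saturates toward -alpha as x goes to -infinity.
    return x if x > 0 else alpha * math.expm1(x)


for value in (-5.0, -1.0, 0.0, 2.0):
    print(value, leaky_relu(value), round(elu(value), 4))
```

PReLU is the same shape as leaky_relu, except that the slope is learned during training instead of being fixed.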

Other scalar activations include the sigmoid non-linearity, which has the form $\sigma(x) = 1/(1 + e^{-x})$, and the Tanh non-linearity, which has the form $\tanh(x) = 2\sigma(2x) - 1$. These activations are not widely used in deep CNNs, mainly because they saturate and kill gradients, and because they involve expensive operations (exponentials, etc.).
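Both the identity tanh(x) = 2σ(2x) − 1 and the saturation problem can be checked numerically. The sketch below uses a numerically stable sigmoid (branching on the sign of x so the exponential cannot overflow) and the derivative σ'(x) = σ(x)(1 − σ(x)), which peaks at 0.25 at x = 0 and shrinks toward zero for large |x|.

```python
import math


def sigmoid(x: float) -> float:
    # Branch on the sign of x so that math.exp never receives a large
    # positive argument (which would raise OverflowError).
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def sigmoid_grad(x: float) -> float:
    # d/dx sigmoid(x) = sigmoid(x) * (1 - sigmoid(x)).
    s = sigmoid(x)
    return s * (1.0 - s)


# Check tanh(x) = 2 * sigmoid(2x) - 1 at a few points.
for x in (-3.0, -0.5, 0.0, 0.5, 3.0):
    assert math.isclose(math.tanh(x), 2.0 * sigmoid(2.0 * x) - 1.0, abs_tol=1e-12)

print(sigmoid_grad(0.0))   # 0.25, the largest possible gradient
print(sigmoid_grad(10.0))  # roughly 4.5e-05: the unit has saturated
```

In a deep stack, gradients are multiplied by factors of at most 0.25 at every sigmoid, which is one way to see why saturation "kills" them.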

Many advances followed [25,39,1,16,35,10,46], and a recent search technique produced a new scalar activation called Swish [36] by combining a comprehensive set of unary and binary functions. Swish has the form y = x · Sigmoid(x) and outperforms other scalar activations on some architectures and datasets; many of the searched results show great potential.
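Swish as written above can be sketched in a few lines. Unlike ReLU, it is smooth and non-monotonic: it dips slightly below zero for moderately negative inputs and returns toward zero for very negative ones. The Swish paper also studies a scaled form x · σ(βx); here β = 1, matching the expression in the text.

```python
import math


def swish(x: float) -> float:
    """Swish: y = x * sigmoid(x), computed without overflow in math.exp."""
    if x >= 0:
        return x / (1.0 + math.exp(-x))
    z = math.exp(x)
    return x * z / (1.0 + z)


for value in (-5.0, -1.0, 0.0, 1.0, 3.0):
    print(value, round(swish(value), 4))
```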

Contextual conditional activations. Unlike scalar activations, which depend only on the neuron itself, a conditional activation is a many-to-one function that activates neurons conditioned on contextual information. A representative method is Maxout [12], which extends the layer to multiple branches and selects the maximum. Most activations apply a non-linearity to the linear dot product between the weights and the data, i.e., $f(w^T x + b)$. Maxout computes $\max(w_1^T x + b_1, w_2^T x + b_2)$, which generalizes ReLU and Leaky ReLU into the same framework. With dropout [17], the Maxout network shows improvement. However, it increases complexity considerably: the numbers of parameters and multiply-adds grow with the number of branches, doubling already with two.
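To see how Maxout covers ReLU and Leaky ReLU, consider a unit with two affine pieces acting on a one-dimensional input. The sketch below is illustrative; the particular weights (and the Leaky ReLU slope 0.1) are assumptions chosen to reproduce each activation.

```python
from typing import Sequence


def maxout(x: Sequence[float],
           weights: Sequence[Sequence[float]],
           biases: Sequence[float]) -> float:
    """Maxout unit: the maximum over k affine pieces w_i^T x + b_i."""
    return max(
        sum(w_j * x_j for w_j, x_j in zip(w, x)) + b
        for w, b in zip(weights, biases)
    )


# ReLU: max(1 * x + 0, 0 * x + 0)
RELU_W, RELU_B = [[1.0], [0.0]], [0.0, 0.0]
# Leaky ReLU with slope 0.1: max(1 * x + 0, 0.1 * x + 0)
LEAKY_W, LEAKY_B = [[1.0], [0.1]], [0.0, 0.0]

for value in (-2.0, 3.0):
    print(value, maxout([value], RELU_W, RELU_B), maxout([value], LEAKY_W, LEAKY_B))
```

With k pieces the unit stores k weight vectors and k biases instead of one of each, which is where the growth in parameters and multiply-adds comes from.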

Contextual gating methods [8,44] use contextual information to improve efficacy, especially in RNN-based models, where the feature dimension is relatively small. They are also used in CNN-based models [34]; because 2D features have a large dimension, the gating is applied after a feature reduction.

Contextually conditioned activations are usually channel-wise. In this paper, however, we find that spatial dependency is also important in non-linear activation functions. We use a lightweight CNN technique, depthwise separable convolution, to keep the additional complexity low.
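A rough parameter count shows why a per-channel (depthwise) convolution keeps the added cost small. The sketch assumes a spatial condition built from a k × k depthwise convolution on C channels and compares it with a regular k × k convolution from C to C channels; biases and any normalization layers are ignored, and C = 256, k = 3 are illustrative values.

```python
def regular_conv_params(channels: int, k: int) -> int:
    # Regular k x k convolution from C to C channels: every output channel
    # has a k x k filter over every input channel.
    return k * k * channels * channels


def depthwise_conv_params(channels: int, k: int) -> int:
    # Depthwise k x k convolution: one k x k filter per channel.
    return k * k * channels


c, k = 256, 3
print(regular_conv_params(c, k))    # 589824
print(depthwise_conv_params(c, k))  # 2304
```

The ratio is exactly C (here 256), and the multiply-adds per output pixel scale the same way.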


Spatial dependency modeling. Learning better spatial dependency is challenging. Some approaches use convolution kernels of different shapes [41,42,40] to aggregate spatial dependencies over different ranges; however, this requires multiple branches, which decreases efficiency. Advances in convolution kernels such as atrous convolution [18] and dilated convolution [47] also improve performance by enlarging the receptive field.

Another line of methods learns spatial dependency adaptively, such as STN [22], active convolution [24], and deformable convolution [7]. These methods use spatial transformations to refine short-range dependencies adaptively, especially for dense vision tasks (e.g., object detection, semantic segmentation). Our simple FReLU outperforms them even without complex convolutions.

Moreover, non-local networks capture long-range dependencies to address this problem. GCNet [3] uses a spatial attention mechanism to better exploit the global spatial context. Long-range modeling methods achieve better performance but require inserting additional blocks into the original network structure, which decreases efficiency. Our method addresses this issue within the non-linear activations, and does so more effectively and efficiently.

Receptive field. The region and size of the receptive field are essential in visual recognition tasks [50,33]. Work on the effective receptive field [29,11] finds that different pixels contribute unequally and that the center pixels have a larger impact. Therefore, many methods have been proposed to implement an adaptive receptive field [7,51,49]. These methods achieve an adaptive receptive field and improve performance by adding extra branches to the architecture, such as more complex convolutions or attention mechanisms. Our method achieves the same goal in a simpler and more efficient manner, by introducing the receptive field into the non-linear activations. With this more adaptive receptive field, we can approximate the layouts of common complex shapes, and thus achieve even better results than complex convolutions while using efficient regular convolutions.
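The receptive-field argument can be made concrete with the standard formula for stride-1 layers: each spatial operation with kernel size k and dilation d enlarges the receptive field by (k − 1)·d, while a pointwise activation such as ReLU adds nothing. The sketch assumes the funnel condition uses a 3 × 3 window; the layer stacks are illustrative, not configurations from the paper.

```python
from typing import Iterable, Tuple


def receptive_field(layers: Iterable[Tuple[int, int]]) -> int:
    """Receptive field (pixels per side) of a stack of stride-1 operations.

    Each layer is (kernel_size, dilation); it grows the field by (k - 1) * d.
    """
    rf = 1
    for k, d in layers:
        rf += (k - 1) * d
    return rf


CONV3 = (3, 1)      # regular 3x3 convolution
DILATED3 = (3, 2)   # 3x3 convolution with dilation 2
FUNNEL3 = (3, 1)    # assumed 3x3 window of the funnel condition T(x)
RELU = (1, 1)       # pointwise activation: no spatial extent

print(receptive_field([CONV3, RELU, CONV3, RELU]))          # 5
print(receptive_field([DILATED3, RELU, DILATED3, RELU]))    # 9
print(receptive_field([CONV3, FUNNEL3, CONV3, FUNNEL3]))    # 9
```

Under these assumptions, two conv + FReLU blocks see a 9 × 9 region, the same extent as two dilated convolutions, while conv + ReLU sees only 5 × 5. The dilated stack samples only every other pixel in that region, whereas the stacked dense 3 × 3 windows cover all of it.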

3 Funnel Activation

FReLU is designed specifically for visual tasks and is conceptually simple: the condition is a hand-designed zero for ReLU and a parametric px for PReLU, and we modify it into a 2D funnel-like condition that depends on the spatial context. This visual condition helps extract the fine spatial layout of an object. Next, we introduce the key elements of FReLU, the funnel condition and the pixel-wise modeling capacity, which are the main parts missing from ReLU and its variants.
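The funnel condition can be illustrated on a single-channel feature map. This is a minimal, framework-free sketch under stated assumptions: T(x) is taken to be a 3 × 3 per-channel convolution (computed as cross-correlation, as CNN frameworks do) with zero padding and stride 1, and details a real implementation may include, such as normalization, are omitted. It is not the official code from the repository linked in the abstract.

```python
from typing import List

Matrix = List[List[float]]


def funnel_condition(x: Matrix, kernel: Matrix) -> Matrix:
    """T(x): k x k window on one channel, zero padding, stride 1 (odd k assumed)."""
    h, w = len(x), len(x[0])
    k = len(kernel)
    r = k // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for di in range(k):
                for dj in range(k):
                    ii, jj = i + di - r, j + dj - r
                    if 0 <= ii < h and 0 <= jj < w:  # zero padding outside
                        acc += kernel[di][dj] * x[ii][jj]
            out[i][j] = acc
    return out


def frelu(x: Matrix, kernel: Matrix) -> Matrix:
    """FReLU: y = max(x, T(x)), taken element-wise."""
    t = funnel_condition(x, kernel)
    return [[max(a, b) for a, b in zip(row_x, row_t)]
            for row_x, row_t in zip(x, t)]


x = [[-1.0, 2.0], [3.0, -4.0]]
zero = [[0.0] * 3 for _ in range(3)]
center = [[0.0, 0.0, 0.0], [0.0, 0.25, 0.0], [0.0, 0.0, 0.0]]
print(frelu(x, zero))    # behaves like ReLU
print(frelu(x, center))  # behaves like max(x, 0.25 * x)
```

With an all-zero kernel, T(x) = 0 and FReLU reduces to ReLU; with only a center weight p, T(x) = px and it reduces to PReLU's max(x, px). Any other kernel makes each output depend on its 3 × 3 neighborhood, which is the pixel-wise spatial condition described above.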

ReLU. We begin by briefly reviewing the ReLU activation. ReLU, in the form max(x, 0), uses max(·) to provide the non-linearity and a hand-designed zero as the condition. This non-linear transformation complements linear transformations such as convolution and fully-connected layers.
