IEEE TRANSACTIONS ON NEURAL NETWORKS, VOL. 10, NO. 4, JULY 1999 925

A Geometrical Representation of McCulloch–Pitts Neural Model and Its Applications

Ling Zhang and Bo Zhang

Abstract: In this paper, a geometrical representation of the McCulloch–Pitts neural model is presented. The representation gives a clear visual picture and interpretation of the model. Two applications based on the interpretation are discussed: 1) a new design principle for feedforward neural networks and 2) a new proof of the mapping abilities of three-layer feedforward neural networks.

Index Terms: Feedforward neural networks, measurable functions, neighborhood covering.

I. INTRODUCTION

In 1943, McCulloch and Pitts [1] first presented a mathematical model (M-P model) of a neuron. Since then, many artificial neural networks have been developed from the well-known M-P model [2], [3].

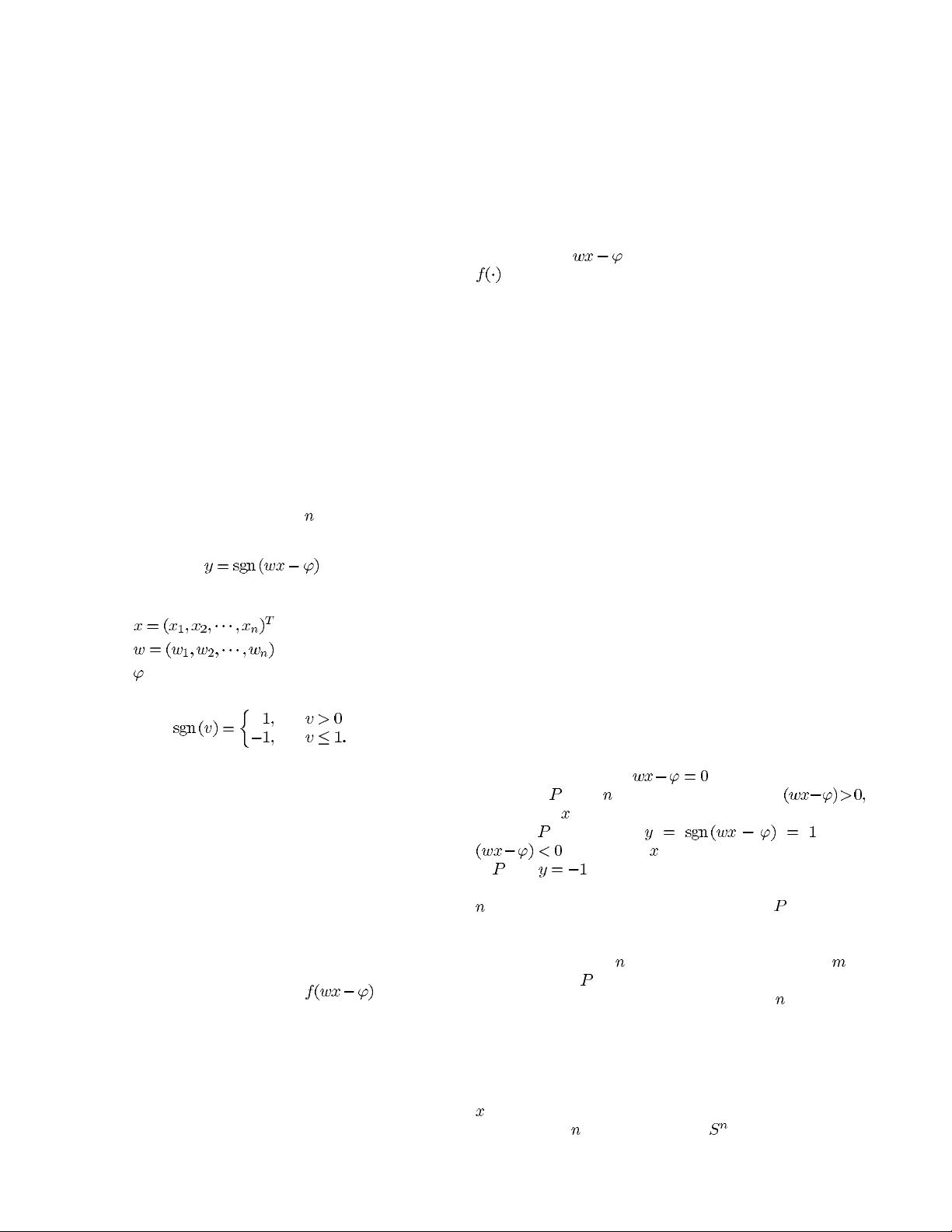

An M-P neuron is an element with

inputs and one output.

The general form of its function is

where

—an input vector

—a weight vector

—a threshold

(1)
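The node function in (1) can be sketched in a few lines of Python. This is only an illustrative sketch, not code from the paper: the name `mp_neuron`, the symbol `theta` for the threshold, and the convention that the sign function returns -1 when its argument is exactly zero are all assumptions made for this example.

```python
from typing import Sequence


def mp_neuron(x: Sequence[float], w: Sequence[float], theta: float) -> int:
    """Return sgn(w.x - theta) for an M-P neuron.

    Assumed convention: +1 when the argument is strictly positive, -1 otherwise.
    """
    if len(x) != len(w):
        raise ValueError("x and w must have the same length")
    # Weighted sum of the inputs minus the threshold.
    activation = sum(wi * xi for wi, xi in zip(w, x)) - theta
    return 1 if activation > 0 else -1


# Hypothetical usage: a two-input neuron that behaves like logical AND
# on inputs in {0, 1}, listed for (0,0), (0,1), (1,0), (1,1).
and_outputs = [mp_neuron((a, b), (1.0, 1.0), 1.5) for a in (0, 1) for b in (0, 1)]
```

With weights (1, 1) and threshold 1.5, only the input (1, 1) pushes the weighted sum above the threshold, so only that input yields +1.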

Rumelhart et al. [12] presented the concept of feedforward neural networks and their corresponding learning algorithm, back propagation (BP), which made neural networks practicable. In essence, BP is a gradient descent approach. In BP, the node function is replaced by a class of sigmoid functions, i.e., infinitely differentiable functions, so that the tools of calculus can be applied. Although BP is a widely used learning algorithm, it is still limited by disadvantages such as slow convergence and poor network performance.
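The reason a differentiable node function matters for gradient descent can be illustrated numerically. The sketch below assumes the logistic function as a representative sigmoid (the text only says "a class of sigmoid functions"); the helper names are hypothetical. It shows that the sign function has zero slope away from the origin, so it gives gradient descent nothing to follow, while the sigmoid has a usable derivative everywhere.

```python
import math
from typing import Callable


def sign(v: float) -> float:
    """Sign node function, with the assumed convention sign(0) = -1."""
    return 1.0 if v > 0 else -1.0


def sigmoid(v: float) -> float:
    """Logistic sigmoid, used here as one example of a sigmoid function."""
    return 1.0 / (1.0 + math.exp(-v))


def sigmoid_grad(v: float) -> float:
    """Closed-form derivative of the logistic sigmoid: s(v) * (1 - s(v))."""
    s = sigmoid(v)
    return s * (1.0 - s)


def numeric_grad(f: Callable[[float], float], v: float, h: float = 1e-6) -> float:
    """Central-difference estimate of the derivative of f at v."""
    return (f(v + h) - f(v - h)) / (2.0 * h)


# Away from the origin the sign function is flat; the sigmoid is not.
sign_slope = numeric_grad(sign, 0.5)
sigmoid_slope = numeric_grad(sigmoid, 0.5)
```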

Manuscript received March 11, 1999; revised January 30, 1998, December 15, 1998, and March 11, 1999. This work was supported by the National Natural Science Foundation of China and the National Key Basic Research Program of China.

L. Zhang is with The State Key Lab of Intelligent Technology and Systems, Artificial Intelligence Institute, Anhui University, Anhui, China.

B. Zhang is with the Department of Computer Science, Tsinghua University, Beijing, China.

Publisher Item Identifier S 1045-9227(99)05482-X.

In order to overcome the learning complexity of BP and other well-known algorithms, several improvements have been presented. Since the node function $\operatorname{sgn}(wx - \theta)$ of the M-P model can be regarded as a composition of two functions, a linear function $wx - \theta$ (the weighted sum of the inputs minus the threshold) and a sign (or characteristic) function $\operatorname{sgn}(\cdot)$, there are generally two ways to reduce the learning complexity. One is to replace the linear function by a quadratic function or a distance function. For example, the polynomial-time-trained hyperspherical classifier presented in [7] and [8] and the restricted Coulomb energy algorithm presented in [6] and [14] are neural networks that use a distance function as their node function. A variety of classifiers, such as those based on radial basis functions (RBF) [9]–[13] and fuzzy classifiers with hyperboxes [15] and with ellipsoidal regions based on Gaussian basis functions [16], use the same idea, i.e., replacing the linear function by a quadratic function. Although the learning capacity of a neural network can be improved by making the node functions more complicated, the improvement in learning complexity is limited by the complexity of those functions. Another way to enhance the learning capacity is to change the topological structure of the network. For example, in [17] and [18], the number of hidden layers and/or the number of connections between layers is increased. Here too, the learning capacity is improved at the price of increasing the complexity of the network. A detailed description of the above methods can be found in [19].
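The first approach described above, replacing the linear function by a distance function, can be illustrated with a small sketch. It is not an implementation of the classifiers in [6]–[8] or [14]; the functions `linear_node` and `spherical_node`, and the choice of Euclidean distance, are assumptions made purely for illustration. The point is the shape of the decision region: a half-space for the linear node, a bounded ball for the distance-based node.

```python
import math
from typing import Sequence


def linear_node(x: Sequence[float], w: Sequence[float], theta: float) -> int:
    """M-P style node: +1 on the positive side of the hyperplane w.x = theta."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) - theta > 0 else -1


def spherical_node(x: Sequence[float], center: Sequence[float], radius: float) -> int:
    """Distance-based node: +1 strictly inside the hypersphere, -1 otherwise."""
    return 1 if math.dist(x, center) < radius else -1


# Hypothetical test points: one near the origin and one far along the first axis.
near, far = (0.1, 0.1), (100.0, 0.0)

# The linear node fires for every point on the positive side, however far away...
linear_far = linear_node(far, (1.0, 0.0), 0.5)
# ...whereas the spherical node fires only within a bounded neighborhood.
sphere_near = spherical_node(near, (0.0, 0.0), 1.0)
sphere_far = spherical_node(far, (0.0, 0.0), 1.0)
```

`math.dist` requires Python 3.8 or later.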

Now the problem is whether we can reduce the learning complexity of a neural network and still maintain the simplicity of the M-P model and its corresponding network. Some researchers have tried to do so by directly using the geometrical interpretation of the M-P model, but without success. Note that $wx - \theta = 0$, where $w$ is the weight vector, $x$ the input vector, and $\theta$ the threshold, can be interpreted as a hyperplane $P$ in an $n$-dimensional space. When $wx - \theta > 0$, the input vector $x$ falls into the positive half-space of the hyperplane $P$, and the output is $y = 1$. When $wx - \theta < 0$, the input vector $x$ falls into the negative half-space of $P$, and $y = -1$. In summary, the function of an M-P neuron can geometrically be regarded as a spatial discriminator of an $n$-dimensional space divided by the hyperplane $P$. Rujan et al. [4], [5] intended to use such a geometrical interpretation to analyze the behavior of neural networks. Unfortunately, when the dimension $n$ of the space and the number of hyperplanes (i.e., the number of neurons) increase, the mutual intersections among these hyperplanes in $n$-dimensional space become too complex to analyze. Therefore, the geometrical representation has so far rarely been used to improve the learning capacity of complex neural networks.
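To give a rough sense of how quickly the arrangement of hyperplanes grows in complexity, the sketch below counts the regions into which hyperplanes in general position divide the space. The formula is the standard combinatorial count for hyperplane arrangements, not a result taken from this paper, and the name `max_regions` and the parameter `m` for the number of hyperplanes are assumptions for this example.

```python
from math import comb


def max_regions(m: int, n: int) -> int:
    """Regions cut out of n-dimensional space by m hyperplanes in general position.

    Uses the classical count sum_{k=0}^{n} C(m, k); math.comb returns 0
    when k > m, so the formula also holds for m < n.
    """
    return sum(comb(m, k) for k in range(n + 1))


# Three lines in general position divide the plane into 7 regions.
plane_regions = max_regions(3, 2)

# The count grows rapidly with both the number of neurons and the dimension.
for m in (5, 20, 50):
    print(m, [max_regions(m, n) for n in (2, 5, 10)])
```

Each region corresponds to one distinct pattern of neuron outputs, which is why tracking the intersections directly becomes impractical as the number of neurons and the dimension grow.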

In order to overcome this difficulty, a new representation is presented as follows. First, assume that each input vector has an equal length (norm). Thus all input vectors will be restricted to an $n$-dimensional sphere $S^n$. (In general cases, the

1045–9227/99$10.00 1999 IEEE
