没有合适的资源?快使用搜索试试~ 我知道了~

基于序列到序列模型的神经网络构造1

试读

7页

需积分: 0 0 下载量 67 浏览量

更新于2022-08-03

收藏 79KB PDF 举报

【基于序列到序列模型的神经网络构造1】

序列到序列(Sequence-to-Sequence,Seq2Seq)模型是一种在自然语言处理领域广泛应用的神经网络架构,它由编码器(Encoder)和解码器(Decoder)两部分组成。这种模型最早由Sutskever等人在2014年提出,并在2015年的论文“Neural Conversational Model”中进一步探讨了其在对话生成中的应用。Seq2Seq模型的核心思想是将输入序列(如一句话或一段文本)编码成一个固定长度的向量,然后通过解码器将这个向量解码为另一个序列(如翻译后的句子或对话回复)。

在《A Neural Conversational Model》这篇论文中,Vinyals和Le提出了一个简单但强大的对话模型,该模型利用了Seq2Seq框架。与传统的对话系统相比,该模型无需特定领域的手工规则,能进行端到端的训练,减少了对人工设计规则的依赖。模型通过预测对话中的下一个句子来生成对话,只需在大规模的对话数据集上进行训练。

模型的编码器部分接收输入对话的历史句子,将这些句子转化为一个紧凑的表示,这通常通过循环神经网络(如LSTM或GRU)实现,它们能够捕获序列中的长期依赖性。解码器则基于编码器生成的上下文向量生成响应,同样使用循环神经网络,并且在生成每个单词时可能会利用自注意力机制来关注输入序列的不同部分。

论文的实验部分展示了模型在两个不同数据集上的性能:一个特定领域的IT帮助台对话数据集和一个开放领域的电影对话转录数据集。在IT帮助台数据集中,模型能通过对话解决技术问题,显示了在特定领域内的应用能力。而在电影对话数据集上,模型能执行简单的常识推理任务,表明它可以从大量、嘈杂和广泛的数据中学习知识。

尽管如此,模型也存在一些局限性,最明显的是一致性问题。由于Seq2Seq模型通常针对最大化生成序列的概率进行优化,而不是对话质量或连贯性,因此可能会产生不一致或无逻辑的回答。这是未来研究需要解决的关键挑战之一,例如引入强化学习策略或者更复杂的对话管理机制来提高模型的连贯性和一致性。

基于序列到序列模型的神经网络对话系统为自然语言理解和机器智能提供了新的视角,它简化了对话系统的构建过程,降低了对人工规则的依赖,并展现出在不同场景下的应用潜力。随着技术的不断进步,这类模型有望在对话生成、自动客服、虚拟助手等领域发挥更大的作用。

arXiv:1506.05869v1 [cs.CL] 19 Jun 2015

A Neural Conversational Model

Oriol Vinyals VINYALS@GOOGLE.COM

Google

Quoc V. Le QVL@GOOGLE.COM

Google

Abstract

Conversational modeling is an important task in

natural language understanding and machine in-

telligence. Although previous approaches ex-

ist, they are often restricted to specific domains

(e.g., booking an airline ticket) and require hand-

crafted rules. In this paper, we present a sim-

ple approach for this task which uses the recently

proposed sequence to sequence framework. Our

model converses by predicting the next sentence

given the previous sentence or sentences in a

conversation. The strength of our model is that

it can be trained end-to-end and thus requires

much fewer hand-crafted rules. We find that this

straightforward model can generate simple con-

versations given a large conversational training

dataset. Our preliminarysuggest that, despite op-

timizing the wrong objective function, the model

is able to extract knowledge from both a domain

specific dataset, and from a large, noisy, and gen-

eral domain dataset of movie subtitles. On a

domain-specific IT helpdesk dataset, the model

can find a solution to a technical problem via

conversations. On a noisy open-domain movie

transcript dataset, the model can perform simple

forms of common sense reasoning. As expected,

we also find that the lack of consistency is a com-

mon failure mode of our model.

1. Introduction

Advances in end-to-end training of neural networks have

led to remarkableprogress in many domains such as speech

recognition, computer vision, and language processing.

Recent work suggests that neural networks can do more

than just mere classification, they can be used to map com-

Proceedings of the 31

st

International Conference on Machine

Learning, Lille, France, 2015. JMLR: W&CP volume 37. Copy-

right 2015 by the author(s).

plicated structures to other complicated structures. An ex-

ample of this is the task of mapping a sequence to another

sequence which has direct applications in natural language

understanding (

Sutskever et al., 2014). One of the major

advantages of this framework is that it requires little feature

engineering and domain specificity whilst matching or sur-

passing state-of-the-art results. This advance, in our opin-

ion, allows researchers to work on tasks for which domain

knowledge may not be readily available, or for tasks which

are simply too hard to model.

Conversational modeling can directly benefit from this for-

mulation because it requires mapping between queries and

reponses. Due to the complexity of this mapping, conver-

sational modeling has previously been designed to be very

narrow in domain, with a major undertaking on feature en-

gineering. In this work, we experiment with the conversa-

tion modeling task by casting it to a task of predicting the

next sequence given the previous sequence or sequences

using recurrent networks (

Sutskever et al., 2014). We find

that this approach can do surprisingly well on generating

fluent and accurate replies to conversations.

We test the model on chat sessions from an IT helpdesk

dataset of conversations, and find that the model can some-

times track the problem and provide a useful answer to

the user. We also experiment with conversations obtained

from a noisy dataset of movie subtitles, and find that the

model can hold a natural conversation and sometimes per-

form simple forms of common sense reasoning. In both

cases, the recurrent nets obtain better perplexity compared

to the n-gram model and capture important long-range cor-

relations. From a qualitative point of view, our model is

sometimes able to produce natural conversations.

2. Related Work

Our approach is based on recent work which pro-

posed to use neural networks to map sequences to se-

quences (

Kalchbrenner & Blunsom, 2013; Sutskever et al.,

2014; Bahdanau et al., 2014). This framework has been

used for neural machine translation and achieves im-

A Neural Conversational Model

provements on the English-French and English-German

translation tasks from the WMT’14 dataset (

Luong et al.,

2014; Jean et al., 2014). It has also been used for

other tasks such as parsing (Vinyals et al., 2014a) and

image captioning (

Vinyals et al., 2014b). Since it is

well known that vanilla RNNs suffer from vanish-

ing gradients, most researchers use variants of the

Long Short Term Memory (LSTM) recurrent neural net-

work (

Hochreiter & Schmidhuber, 1997).

Our work is also inspired by the recent success of neu-

ral language modeling (

Bengio et al., 2003; Mikolov et al.,

2010; Mikolov, 2012), which shows that recurrent neural

networks are rather effective models for natural language.

More recently, work by Sordoni et al. (

Sordoni et al., 2015)

and Shang et al. (

Shang et al., 2015), used recurrent neural

networks to model dialogue in short conversations (trained

on Twitter-style chats).

Building bots and conversational agents has been pur-

sued by many researchers over the last decades, and it

is out of the scope of this paper to provide an exhaus-

tive list of references. However, most of these systems

require a rather complicated processing pipeline of many

stages (

Lester et al., 2004; Will, 2007; Jurafsky & Martin,

2009). Our work differs from conventional systems by

proposing an end-to-end approach to the problem which

lacks domain knowledge. It could, in principle, be com-

bined with other systems to re-score a short-list of can-

didate responses, but our work is based on producing an-

swers given by a probabilistic model trained to maximize

the probability of the answer given some context.

3. Model

Our approach makes use of the sequence to sequence

(seq2seq) model described in (

Sutskever et al., 2014). The

model is based on a recurrent neural network which reads

the input sequence one token at a time, and predicts the

output sequence, also one token at a time. During training,

the true output sequence is given to the model, so learning

can be done by backpropagation. The model is trained to

maximize the cross entropy of the correct sequence given

its context. During inference, given that the true output se-

quence is notobserved, we simply feed the predicted output

token as input to predict the next output. This is a “greedy”

inference approach. A less greedy approach would be to

use beam search, and feed several candidates at the previ-

ous step to the next step. The predicted sequence can be

selected based on the probability of the sequence.

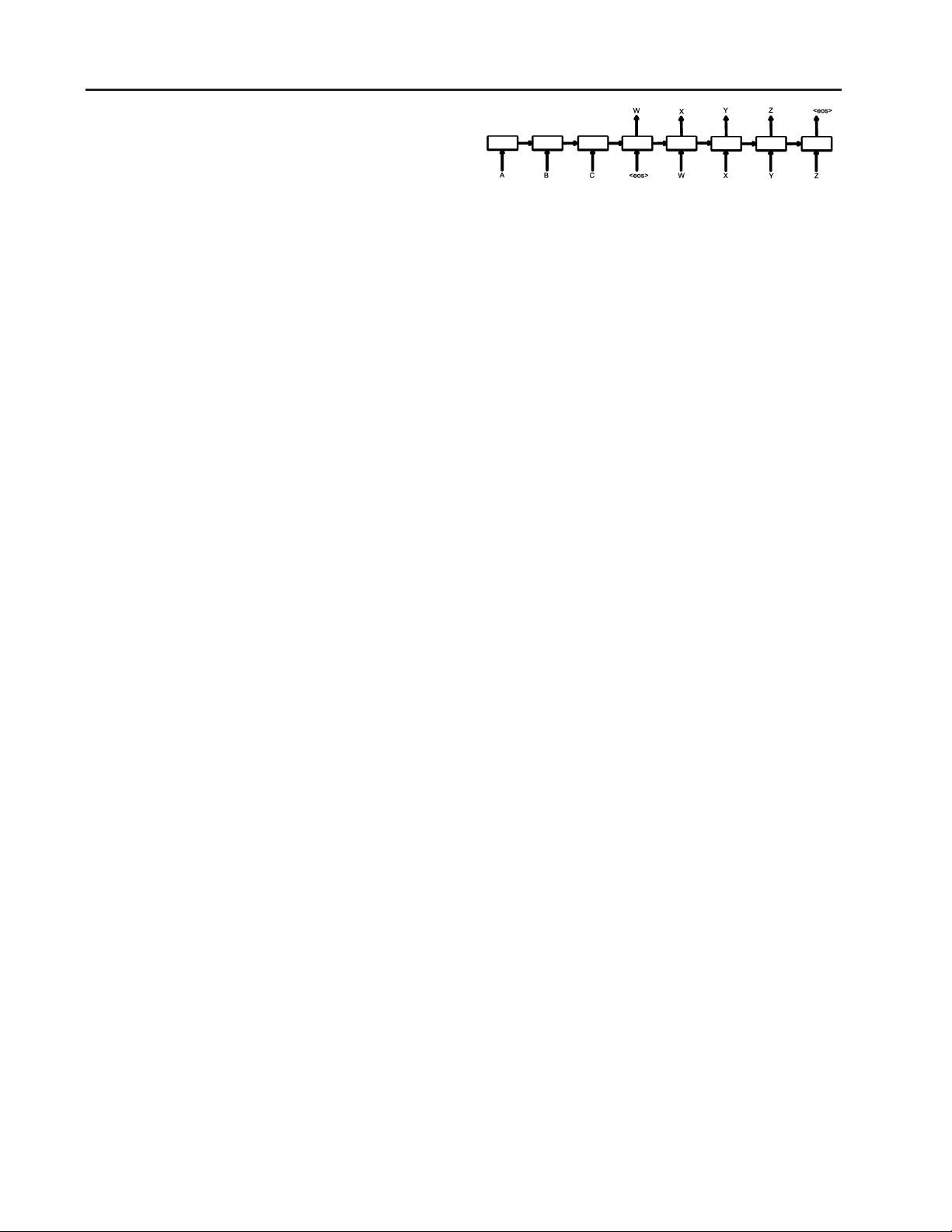

Concretely, suppose that we observe a conversation with

two turns: the first person utters “ABC”, and another replies

“WXYZ”. We can use a recurrent neural network, and train

to map “ABC” to “WXYZ” as shown in Figure

1 above.

Figure 1.

Using the seq2seq framework for modeling conversa-

tions.

The hidden state of the model when it receives the end of

sequence symbol “<eos>” can be viewed as the thought

vector because it stores the information of the sentence, or

thought, “ABC”.

The strength of this model lies in its simplicity and gener-

ality. We can use this model for machine translation, ques-

tion/answering, and conversations without major changes

in the architecture.

Unlike easier tasks like translation, however, a model like

sequence to sequence will not be able to successfully

“solve” the problem of modeling dialogue due to sev-

eral obvious simplifications: the objective function being

optimized does not capture the actual objective achieved

through human communication, which is typically longer

term and based on exchangeof information rather than next

step prediction. The lack of a model to ensure consistency

and general world knowledge is another obvious limitation

of a purely unsupervised model.

4. Datasets

In our experiments we used two datasets: a closed-domain

IT helpdesk troubleshooting dataset and an open-domain

movie transcript dataset. The details of the two datasets are

as follows.

4.1. IT Helpdesk Troubleshooting dataset

Our first set of experiments used a dataset which is ex-

tracted from a IT helpdesk troubleshooting chat service. In

this service, costumers are dealing with computer related

issues, and a specialist is helping them walking through a

solution. Typical interactions (or threads) are 400 words

long, and turn taking is clearly signaled. Our training set

contains 30M tokens, and 3M tokens were used as valida-

tion. Some amount of clean up was performed, such as

removing common names, numbers, and full URLs.

4.2. OpenSubtitles dataset

We also experimented our model with the OpenSubtitles

dataset (

Tiedemann, 2009). This dataset consists of movie

conversations in XML format. It contains sentences uttered

by characters in movies. We applied a simple processing

step removing XML tags and obvious non-conversational

剩余6页未读,继续阅读

资源推荐

资源评论

112 浏览量

131 浏览量

131 浏览量

124 浏览量

181 浏览量

181 浏览量

105 浏览量

2019-12-30 上传

2021-09-26 上传

2021-09-27 上传

170 浏览量

112 浏览量

192 浏览量

166 浏览量

资源评论

wxb0cf756a5ebe75e9

- 粉丝: 28

- 资源: 283

上传资源 快速赚钱

我的内容管理

展开

我的内容管理

展开

我的资源

快来上传第一个资源

我的资源

快来上传第一个资源

我的收益 登录查看自己的收益

我的收益 登录查看自己的收益 我的积分

登录查看自己的积分

我的积分

登录查看自己的积分

我的C币

登录后查看C币余额

我的C币

登录后查看C币余额

我的收藏

我的收藏  我的下载

我的下载  下载帮助

下载帮助

前往需求广场,查看用户热搜

前往需求广场,查看用户热搜最新资源

- python圣诞树代码-Python编程实现圣诞树绘制方法

- 车床电动四方刀架sw14可编辑全套设计资料100%好用.zip

- 埃斯顿ER3-400-SR机器人sw18全套设计资料100%好用.zip

- html圣诞树代码大全可复制免费-HTML和CSS技术实现静态与动态圣诞树

- 多功能机械手sw18全套设计资料100%好用.zip

- python圣诞树代码-Python实现不同方式绘制圣诞树的方法与代码实例

- 电能自动平衡代步车设计x_t全套设计资料100%好用.zip

- 电子元件自动上料机sw17全套设计资料100%好用.zip

- html圣诞树代码大全可复制免费-HTML与CSS结合JavaScript实现的圣诞树网页动画教程

- input_TP_pre2.xlsx

- 多头称重传感器设计sw10全套设计资料100%好用.zip

- 翻斗式往复升降机构sw20可编辑全套设计资料100%好用.zip

- 防尘线性模组内部结构ug10全套设计资料100%好用.zip

- 焊接责任人培训资料.zip

- 无损检测资料.zip

- 基于 pyqt的GeoIP 的 IP 位置追踪工具

安全验证

文档复制为VIP权益,开通VIP直接复制

信息提交成功

信息提交成功