Published as a conference paper at ICLR 2017

2 RELATED WORK

2.1 RESTARTS IN GRADIENT-FREE OPTIMIZATION

When optimizing multimodal functions one may want to find all global and local optima. The

tractability of this task depends on the landscape of the function at hand and the budget of func-

tion evaluations. Gradient-free optimization approaches based on niching methods (Preuss, 2015)

usually can deal with this task by covering the search space with dynamically allocated niches of

local optimizers. However, these methods usually work only for relatively small search spaces,

e.g., n < 10, and do not scale up due to the curse of dimensionality (Preuss, 2010). Instead, the

current state-of-the-art gradient-free optimizers employ various restart mechanisms (Hansen, 2009;

Loshchilov et al., 2012). One way to deal with multimodal functions is to iteratively sample a large

number λ of candidate solutions, make a step towards better solutions and slowly shape the sampling

distribution to maximize the likelihood of successful steps to appear again (Hansen & Kern, 2004).

The larger the λ, the more global search is performed requiring more function evaluations. In order

to achieve good anytime performance, it is common to start with a small λ and increase it (e.g., by

doubling) after each restart. This approach works best on multimodal functions with a global funnel

structure and also improves the results on ill-conditioned problems where numerical issues might

lead to premature convergence when λ is small (Hansen, 2009).

2.2 RESTARTS IN GRADIENT-BASED OPTIMIZATION

Gradient-based optimization algorithms such as BFGS can also perform restarts to deal with mul-

timodal functions (Ros, 2009). In large-scale settings when the usual number of variables n is on

the order of 10

3

− 10

9

, the availability of gradient information provides a speedup of a factor of

n w.r.t. gradient-free approaches. Warm restarts are usually employed to improve the convergence

rate rather than to deal with multimodality: often it is sufficient to approach any local optimum to

a given precision and in many cases the problem at hand is unimodal. Fletcher & Reeves (1964)

proposed to flesh the history of conjugate gradient method every n or (n + 1) iterations. Powell

(1977) proposed to check whether enough orthogonality between ∇f (x

t−1

) and ∇f (x

t

) has been

lost to warrant another warm restart. Recently, O’Donoghue & Candes (2012) noted that the iterates

of accelerated gradient schemes proposed by Nesterov (1983; 2013) exhibit a periodic behavior if

momentum is overused. The period of the oscillations is proportional to the square root of the local

condition number of the (smooth convex) objective function. The authors showed that fixed warm

restarts of the algorithm with a period proportional to the conditional number achieves the optimal

linear convergence rate of the original accelerated gradient scheme. Since the condition number is

an unknown parameter and its value may vary during the search, they proposed two adaptive warm

restart techniques (O’Donoghue & Candes, 2012):

• The function scheme restarts whenever the objective function increases.

• The gradient scheme restarts whenever the angle between the momentum term and the

negative gradient is obtuse, i.e, when the momentum seems to be taking us in a bad direc-

tion, as measured by the negative gradient at that point. This scheme resembles the one of

Powell (1977) for the conjugate gradient method.

O’Donoghue & Candes (2012) showed (and it was confirmed in a set of follow-up works) that these

simple schemes provide an acceleration on smooth functions and can be adjusted to accelerate state-

of-the-art methods such as FISTA on nonsmooth functions.

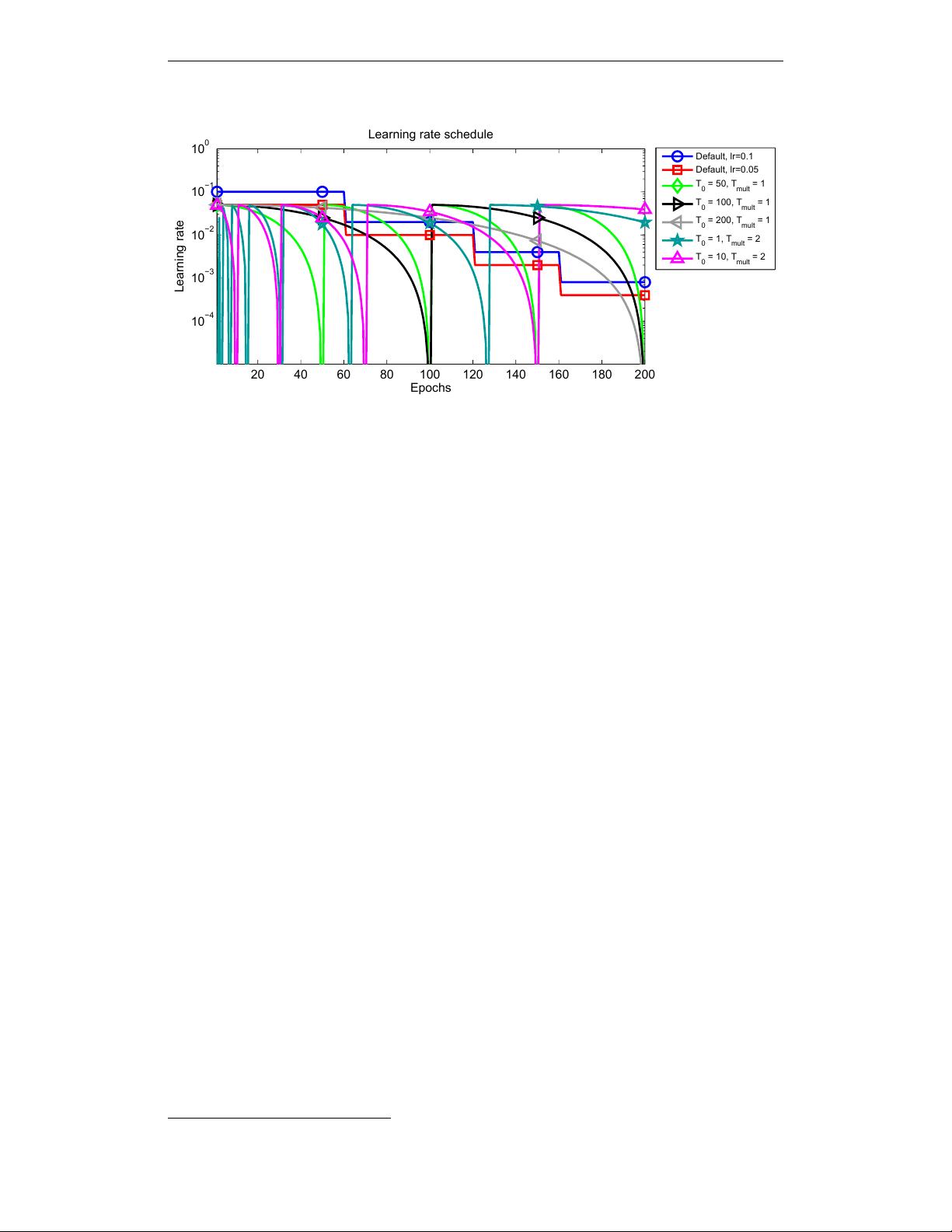

Smith (2015; 2016) recently introduced cyclical learning rates for deep learning, his approach is

closely-related to our approach in its spirit and formulation but does not focus on restarts.

Yang & Lin (2015) showed that Stochastic subGradient Descent with restarts can achieve a linear

convergence rate for a class of non-smooth and non-strongly convex optimization problems where

the epigraph of the objective function is a polyhedron. In contrast to our work, they never increase

the learning rate to perform restarts but decrease it geometrically at each epoch. To perform restarts,

they periodically reset the current solution to the averaged solution from the previous epoch.

3

我的内容管理

展开

我的内容管理

展开

我的资源

快来上传第一个资源

我的资源

快来上传第一个资源

我的收益 登录查看自己的收益

我的收益 登录查看自己的收益 我的积分

登录查看自己的积分

我的积分

登录查看自己的积分

我的C币

登录后查看C币余额

我的C币

登录后查看C币余额

我的收藏

我的收藏  我的下载

我的下载  下载帮助

下载帮助

前往需求广场,查看用户热搜

前往需求广场,查看用户热搜

信息提交成功

信息提交成功

评论0