IMM

INFORMATICS AND MATHEMATICAL MODELLING

Technical University of Denmark

DK-2800 Kgs. Lyngby – Denmark

DACE

AMATLAB Kriging Toolbox

Version 2.0, August 1, 2002

Søren N. Lophaven

Hans Bruun Nielsen

Jacob Søndergaard

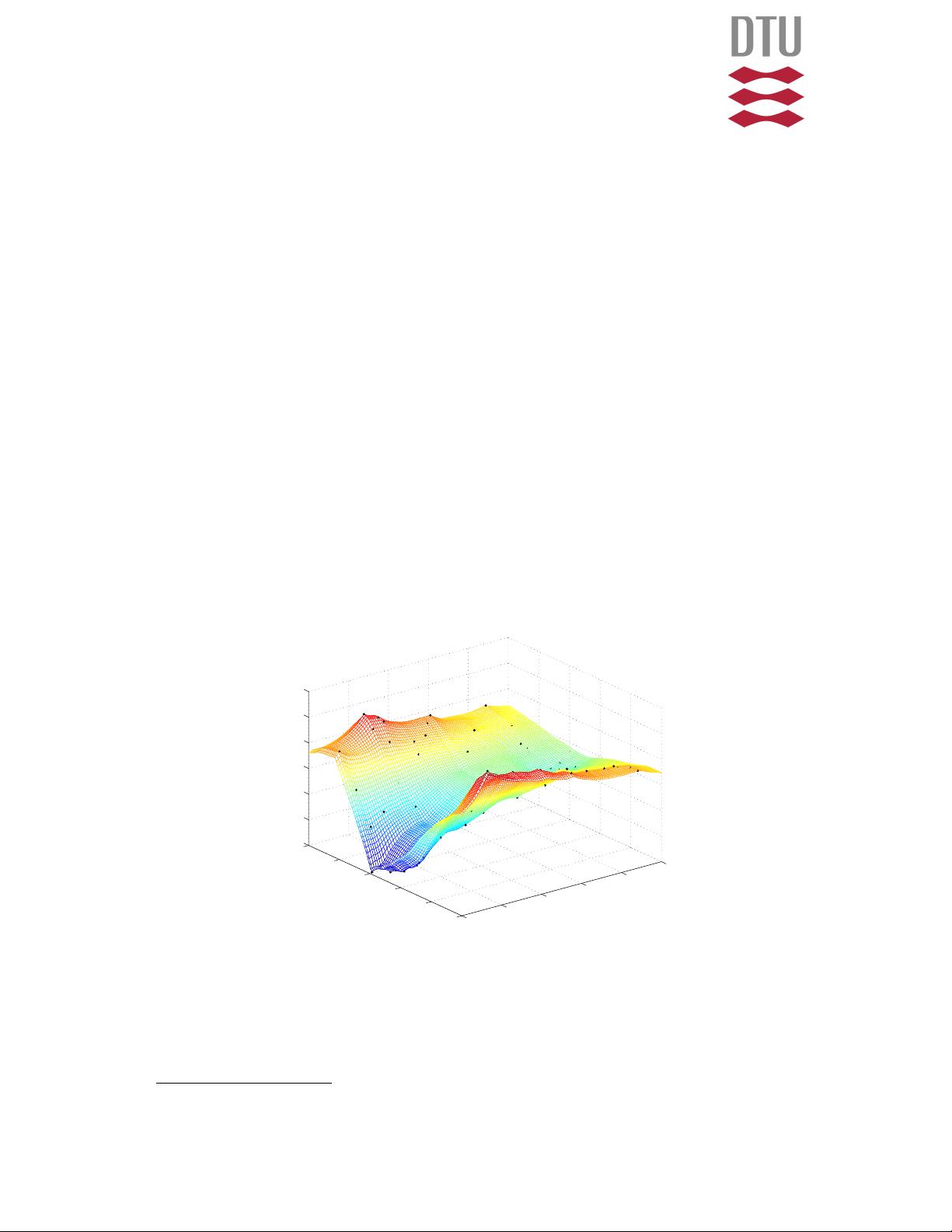

0

20

40

60

80

100

0

20

40

60

80

100

34

36

38

40

42

44

46

Technical Report IMM-TR-2002-12

Please direct communication to Hans Bruun Nielsen (hbn@imm.dtu.dk)

Contents

1. Introduction 1

2. Modelling and Prediction 1

2.1. The Kriging Predictor .......................... 2

2.2. Regression Models ............................ 5

2.3. Correlation Models ............................ 6

3. Generalized Least Squares Fit 9

3.1. Computational Aspects .......................... 10

4. Experimental Design 12

4.1. Rectangular Grid ............................. 12

4.2. Latin Hypercube Sampling ........................ 12

5. Reference Manual 13

5.1. Model Construction ............................ 14

5.2. Evaluate the Model ............................ 15

5.3. Regression Models ............................ 16

5.4. Correlation Models ............................ 17

5.5. Experimental Design ........................... 18

5.6. Auxiliary Functions ............................ 19

5.7. Data Files ................................. 20

6. Examples of Usage 21

6.1. Work-through Example .......................... 21

6.2. Adding a Regression Function ...................... 24

7. Notation 24

1. Introduction

This report describes the background for and use of the software package DACE

(Design and Analysis of Computer Experiments), which is a Matlab toolbox for

working with kriging approximations to computer models.

The typical use of this software is to construct a kriging approximation model based

on data from a computer experiment, and to use this approximation model as a

surrogate for the computer model. Here, a computer experiment is a collection of

pairs of input and responses from runs of a computer model. Both the input and

the response from the computer model are likely to be high dimensional.

The computer models we address are deterministic, and thus a response from a

model lacks random error, i.e., repeated runs for the same input parameters gives

the same response from the model.

Often the approximation models are needed as a part of a design problem, in which

the best set of parameters for running the computer model is determined. This

is for example problems where a computer model is fitted to physical data. This

design problem is related to the more general problem of predicting output from a

computer model at untried inputs.

In Section 2 we consider models for computer experiments and efficient predictors,

Section 3 discusses generalized least squares and implementation aspects, and in

Section 4 we consider experimental design for the predictors. Section 5 is a reference

manual for the toolbox, and finally examples of usage and list of notation are given

in Sections 6 and 7.

2. Modelling and Prediction

Given a set of m design sites S =[s

1

··· s

m

]

>

with s

i

∈IR

n

and responses

Y =[y

1

··· y

m

]

>

with y

i

∈IR

q

. The data is assumed to satisfy the normalization

conditions

1

µ[S

:,j

]=0, V

£

S

:,j

,S

:,j

¤

=1,j=1,...,n ,

µ[Y

:,j

]=0, V

£

Y

:,j

,Y

:,j

¤

=1,j=1,...,q ,

(2.1)

where X

:,j

is the vector given by the jth column in matrix X, and µ[ ·] and V

£

·, ·

¤

denote respectively the mean and the covariance.

Following [9] we adopt a model ˆy that expresses the deterministic response y(x) ∈IR

q

,

for an n dimensional input x ∈D⊆IR

n

, as a realization of a regression model F and

1

The user does not have to think of this: The first step in the model construction is to normalize

the given S, Y so that (2.1) is satisfied, see (5.1) below.

1

a random function (stochastic process),

ˆy

`

(x)=F(β

:,`

,x)+z

`

(x),`=1,...,q . (2.2)

We use a regression model which is a linear combination of p chosen functions

f

j

:IR

n

7→ IR ,

F ( β

:,`

,x)=β

1,`

f

1

(x)+···β

p,`

f

p

(x)

=[f

1

(x)··· f

p

(x)] β

:,`

≡ f(x)

>

β

:,`

. (2.3)

The coefficients {β

k,`

} are regression parameters.

The random process z is assumed to have mean zero and covariance

E

£

z

`

(w)z

`

(x)

¤

= σ

2

`

R(θ, w, x),`=1,...,q (2.4)

between z(w) and z(x), where σ

2

`

is the process variance for the `th component

of the response and R(θ, w, x) is the correlation model with parameters θ.An

interpretation of the model (2.2) is that deviations from the regression model, though

the response is deterministic, may resemble a sample path of a (suitably chosen)

stochastic process z. In the following we will focus on the kriging predictor for y.

First, however, we must bear in mind that the true value can be written as

y

`

(x)=F(β

:,`

,x)+α(β

:,`

,x) , (2.5)

where α is the approximation error. The assumption is that by proper choice of β

this error behaves like “white noise” in the region of interest, i.e., for x ∈D.

2.1. The Kriging Predictor

For the set S of design sites we have the expanded m×p design matrix F with

F

ij

= f

j

(s

i

),

F =[f(s

1

)··· f(s

m

)]

>

, (2.6)

with f (x) defined in (2.3). Further, define R as the matrix R of stochastic-process

correlations between z’s at design sites,

R

ij

= R(θ, s

i

,s

j

),i,j=1,...,m . (2.7)

At an untried point x let

r(x)=[R(θ, s

1

,x) ··· R(θ, s

m

,x)]

>

(2.8)

be the vector of correlations between z’s at design sites and x.

Now, for the sake of convenience, assume that q = 1, implying that β = β

:,1

and

Y = Y

:,1

, and consider the linear predictor

ˆy(x)=c

>

Y, (2.9)

2

with c = c(x) ∈IR

m

. The error is

ˆy(x) − y(x)=c

>

Y−y(x)

=c

>

(Fβ +Z)−(f(x)

>

β +z)

= c

>

Z −z +

¡

F

>

c−f(x)

¢

>

β,

where Z =[z

1

... z

m

]

>

are the errors at the design sites. To keep the predictor

unbiased we demand that F

>

c − f(x) = 0, or

F

>

c(x)=f(x). (2.10)

Under this condition the mean squared error (MSE) of the predictor (2.9) is

ϕ(x)=E

£

(ˆy(x) −y(x))

2

¤

=E

£¡

c

>

Z − z

¢

2

¤

=E

£

z

2

+c

>

ZZ

>

c −2c

>

Zz

¤

= σ

2

¡

1+c

>

Rc − 2c

>

r

¢

. (2.11)

The Lagrangian function for the problem of minimizing ϕ with respect to c and

subject to the constraint (2.10) is

L(c, λ)=σ

2

¡

1+c

>

Rc − 2c

>

r

¢

− λ

>

(F

>

c − f) . (2.12)

The gradient of (2.12) with respect to c is

L

0

c

(c, λ)=2σ

2

(Rc − r) − Fλ ,

and from the first order necessary conditions for optimality (see e.g. [7, Section 12.2])

we get the following system of equations

·

RF

F

>

0

¸·

c

˜

λ

¸

=

·

r

f

¸

, (2.13)

where we have defined

˜

λ = −

λ

2σ

2

.

The solution to (2.13) is

˜

λ =(F

>

R

−1

F)

−1

(F

>

R

−1

r−f),

c=R

−1

(r−F

˜

λ).

(2.14)

The matrix R and therefore R

−1

is symmetric, and by means of (2.9) we find

3

- 1

- 2

前往页