DZONE.COM/REFCARDZ


257204

Apache Spark

UPDATED BY TIM SPANN, BIG DATA SOLUTIONS ENGINEER, HORTONWORKS

WRITTEN BY ASHWINI KUNTAMUKKALA, SOFTWARE ARCHITECT, SCISPIKE

WHY APACHE SPARK?

Apache Spark has become the engine that enhances many capabilities of the ever-present Apache Hadoop environment. For Big Data, Apache Spark meets a lot of needs and runs natively on Apache Hadoop’s YARN. By running Apache Spark in your Apache Hadoop environment, you gain the security, governance, and scalability inherent to that platform. Apache Spark is also well integrated with Apache Hive and, through integrated security, gains access to all your Apache Hadoop tables.

Apache Spark has begun to shine in streaming data processing and machine learning. With first-class support for Python as a development language, PySpark lets data scientists, engineers, and developers build and scale machine learning with ease. One feature that has expanded this is support for Apache Zeppelin notebooks, which run Apache Spark jobs for exploration, data cleanup, and machine learning. Apache Spark also integrates with other important streaming tools in the Apache Hadoop space, namely Apache NiFi and Apache Kafka. I like to think of Apache Spark + Apache NiFi + Apache Kafka as the three amigos of Apache Big Data ingest and streaming. At the time of writing, the latest version of Apache Spark is 2.2.

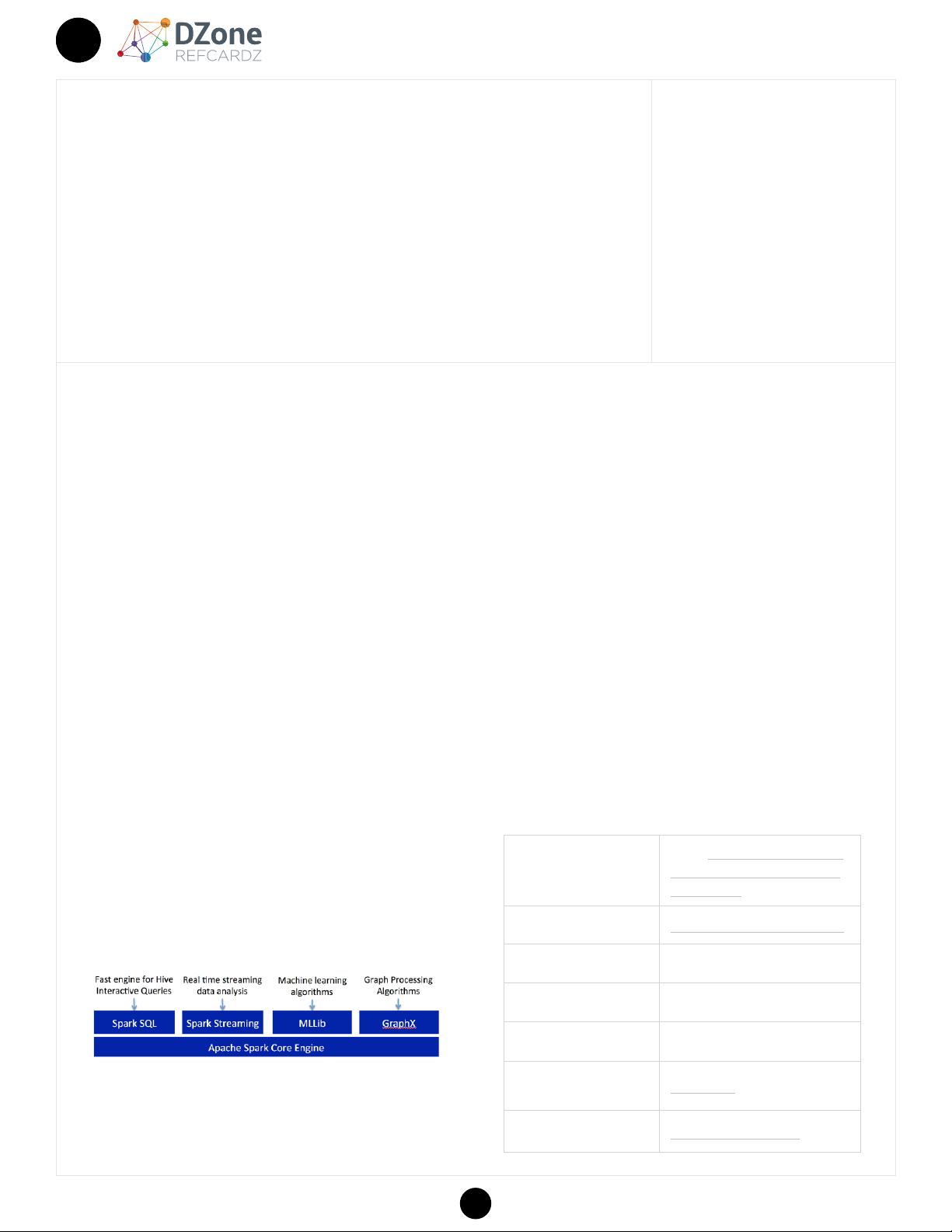

ABOUT APACHE SPARK

Apache Spark is an open source, Hadoop-compatible, fast, and expressive cluster-computing data processing engine. It was created at the AMPLab at UC Berkeley as part of the Berkeley Data Analytics Stack (BDAS), and it is a top-level Apache project. The figure below shows the various components of the current Apache Spark stack.

It has six major benefits:

1. Lightning-fast computation, because data is loaded into distributed memory (RAM) over a cluster of machines. Data can be quickly transformed iteratively and cached on demand for subsequent use.
2. High accessibility through standard APIs in Java, Scala, Python, R, and SQL (for interactive queries), plus a rich set of machine learning libraries available out of the box.
3. Compatibility with existing Hadoop 2.x (YARN) ecosystems, so companies can leverage their existing infrastructure.
4. Convenient download and installation processes, and a convenient shell (REPL: Read-Eval-Print Loop) for learning the APIs interactively.
5. Enhanced productivity from high-level constructs that keep the focus on the content of the computation.
6. Multi-user notebook environments supported by Apache Zeppelin.

Also, Spark is implemented in Scala, so its code is succinct and fast, and it requires a JVM to run.

HOW TO INSTALL APACHE SPARK

The following table lists a few important links and prerequisites:

| Item | Details |
| --- | --- |
| Current Release | 2.2.0 @ apache.org/dyn/closer.lua/spark/spark-2.2.0/spark-2.2.0-bin-hadoop2.7.tgz |
| Downloads Page | spark.apache.org/downloads.html |
| JDK Version (Required) | 1.8 or higher |
| Scala Version (Required) | 2.11.x |
| Python (Optional) | 2.7+ or 3.4+ |
| Simple Build Tool (Required) | scala-sbt.org |
| Development Version | github.com/apache/spark |
| Building Instructions | spark.apache.org/docs/latest/building-spark.html |
| Maven | 3.3.9 or higher |
| Hadoop + Spark Installation | docs.hortonworks.com/HDPDocuments/Ambari-2.6.0.0/bk_ambari-installation/content/ch_Getting_Ready.html |

CONTENTS

- WHY APACHE SPARK?
- ABOUT APACHE SPARK
- HOW TO INSTALL APACHE SPARK
- HOW APACHE SPARK WORKS
- RESILIENT DISTRIBUTED DATASET
- DATAFRAMES
- RDD PERSISTENCE
- SPARK SQL
- SPARK STREAMING

Apache Spark can be configured to run standalone or on Hadoop 2 YARN. Working with Apache Spark requires moderate skills in Java, Scala, or Python. Here we will see how to install and run Apache Spark in the standalone configuration.

1. Install JDK 1.8+, Scala 2.11.x, Python 3.5+, and Apache Maven.

2. Download the Apache Spark 2.2.0 release.

3. Extract spark-2.2.0.tgz into a directory of your choice.

4. Go to that directory and run Maven to build Apache Spark:

    export MAVEN_OPTS="-Xmx2g -XX:ReservedCodeCacheSize=512m"
    mvn -Pyarn -Phadoop-2.7 -Dhadoop.version=2.7.3 -Phive -Phive-thriftserver -DskipTests clean package

5. Launch the Apache Spark standalone REPL from the bin directory. For Scala, use:

    ./spark-shell

   For Python, use:

    ./pyspark

6. Go to the Spark UI at http://localhost:4040
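Before working through the steps above, it can help to confirm that the tools from step 1 are actually on your PATH. The following is a minimal sketch using only the Python standard library. The command names (java, scala, mvn) and the helper names find_tools and python_is_supported are assumptions made for this illustration; they are not part of Apache Spark.

```python
import shutil
import sys

# Tools named in step 1; the command names are assumptions about how
# they appear on PATH on a typical installation.
REQUIRED_TOOLS = ("java", "scala", "mvn")


def find_tools(tools=REQUIRED_TOOLS):
    """Map each command name to its resolved path, or None if it is not on PATH."""
    return {tool: shutil.which(tool) for tool in tools}


def python_is_supported(version=sys.version_info, minimum=(3, 5)):
    """True when the interpreter version is at least the minimum (step 1 asks for 3.5+)."""
    return tuple(version[:2]) >= minimum


if __name__ == "__main__":
    for tool, path in find_tools().items():
        print(f"{tool:6} {path or 'NOT FOUND'}")
    print("python", "ok" if python_is_supported() else "too old")
```

This only checks that the commands can be found. It does not verify their versions, so a JDK older than 1.8 would still be reported as present.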

This is a good quick start, but I recommend using a sandbox or an available Apache Zeppelin notebook to begin your exploration of Apache Spark.

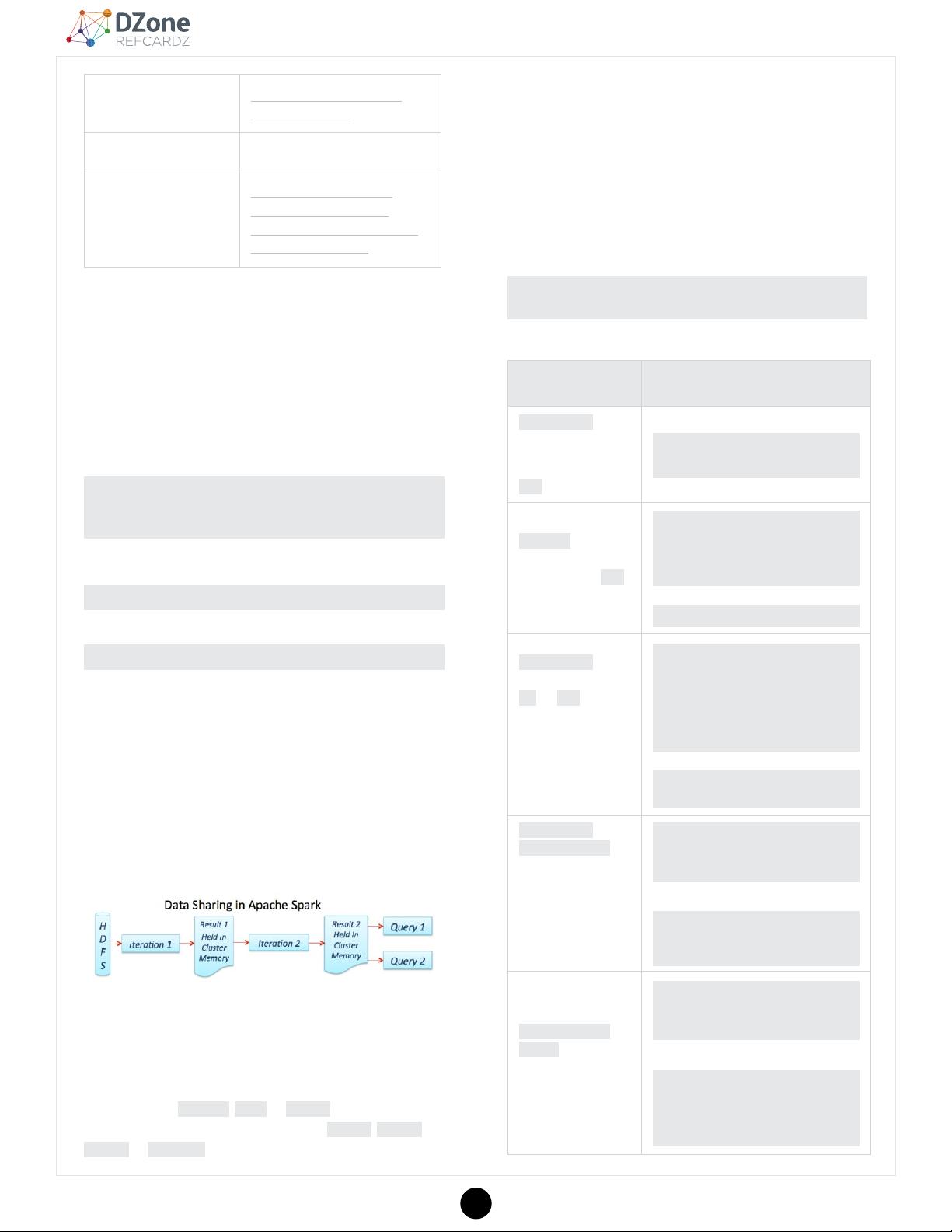

HOW APACHE SPARK WORKS

The Apache Spark engine provides a way to process data in distributed memory over a cluster of machines. The figure below shows a logical diagram of how a typical Spark job processes information.

RESILIENT DISTRIBUTED DATASET

The core concept in Apache Spark is the resilient distributed dataset (RDD). It is an immutable, distributed collection of data that is partitioned across the machines in a cluster. It supports two types of operations: transformations and actions. A transformation is an operation such as filter(), map(), or union() on an RDD that yields another RDD. An action is an operation such as count(), first(), take(n), or collect() that triggers a computation and either returns a value to the Driver program or writes to a stable storage system such as Apache Hadoop HDFS. Transformations are lazily evaluated: they don’t run until an action requires them. The Apache Spark Driver remembers the transformations applied to an RDD, so if a partition is lost (say, a worker machine goes down), that partition can easily be reconstructed on another machine in the cluster. That is why it is called “Resilient.”
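To make lazy evaluation and lineage concrete, here is a minimal single-machine sketch in plain Python. MiniRDD is a hypothetical toy class written for this illustration, not a Spark API: it only records transformations and replays them when an action is called, which is the same idea Spark relies on to rebuild a lost partition.

```python
from itertools import islice


class MiniRDD:
    """Toy, single-machine stand-in for an RDD (hypothetical; not a Spark class)."""

    def __init__(self, source, lineage=()):
        self._source = tuple(source)    # immutable input data
        self._lineage = tuple(lineage)  # recorded transformations, in order

    # Transformations: return a new MiniRDD and do no work yet.
    def map(self, func):
        return MiniRDD(self._source, self._lineage + (("map", func),))

    def filter(self, func):
        return MiniRDD(self._source, self._lineage + (("filter", func),))

    def _compute(self):
        """Replay the recorded lineage over the source data."""
        data = iter(self._source)
        for kind, func in self._lineage:
            data = map(func, data) if kind == "map" else filter(func, data)
        return data

    # Actions: trigger the computation and return a plain value.
    def collect(self):
        return list(self._compute())

    def count(self):
        return sum(1 for _ in self._compute())

    def take(self, n):
        return list(islice(self._compute(), n))


if __name__ == "__main__":
    numbers = MiniRDD([1, 2, 3, 4, 5])
    # Two transformations are recorded here, but nothing is computed yet.
    pipeline = numbers.map(lambda x: x * 2).filter(lambda x: x % 4 == 0)
    # The action below replays the lineage and produces the values.
    print(pipeline.collect())
```

Because each MiniRDD keeps its source and its full list of transformations, any result can be recomputed from scratch at any time. A real RDD applies the same principle per partition across a cluster.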

The following code snippet shows how to load a text file in Python using the Spark 2 PySpark interpreter in an Apache Zeppelin notebook.

%spark2.pyspark
guten = spark.read.text('/load/55973-0.txt')

COMMONLY USED TRANSFORMATIONS

Each entry below gives a transformation, its purpose, an example, and the result.

filter(func)
Purpose: Return a new RDD by selecting those data elements on which func returns true.
Example (PySpark, applied to the guten DataFrame loaded with spark.read.text, whose single column is named value):

    shinto = guten.filter(guten.value.contains("Shinto"))

map(func)
Purpose: Return a new RDD by applying func to each data element.
Example (Scala):

    val rdd = sc.parallelize(List(1, 2, 3, 4, 5))
    val times2 = rdd.map(_ * 2)
    times2.collect()

Result:

    Array[Int] = Array(2, 4, 6, 8, 10)

flatMap(func)
Purpose: Similar to map, but func returns a sequence instead of a single value. For example, mapping a sentence into a sequence of words.
Example (Scala):

    val rdd = sc.parallelize(List("Spark is awesome", "It is fun"))
    val fm = rdd.flatMap(str => str.split(" "))
    fm.collect()

Result:

    Array[String] = Array(Spark, is, awesome, It, is, fun)

reduceByKey(func, [numTasks])
Purpose: Aggregate the values of each key using a function. numTasks is an optional parameter that specifies the number of reduce tasks.
Example (Scala):

    val word1 = fm.map(word => (word, 1))
    val wrdCnt = word1.reduceByKey(_ + _)
    wrdCnt.collect()

Result:

    Array[(String, Int)] = Array((is,2), (It,1), (awesome,1), (Spark,1), (fun,1))

groupByKey([numTasks])
Purpose: Convert (K, V) pairs to (K, Iterable<V>).
Example (Scala):

    val cntWrd = wrdCnt.map { case (word, count) => (count, word) }
    cntWrd.groupByKey().collect()

Result:

    Array[(Int, Iterable[String])] = Array((1,ArrayBuffer(It, awesome, Spark, fun)), (2,ArrayBuffer(is)))
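The word-count chain in the examples above (flatMap, then map, then reduceByKey, then groupByKey) can be traced step by step without a cluster. The sketch below models the same semantics in plain Python on a single machine. The helpers reduce_by_key and group_by_key are hypothetical names written for this illustration; they are not Spark functions, and the element order they produce may differ from what a real cluster returns.

```python
from collections import defaultdict
from itertools import chain

sentences = ["Spark is awesome", "It is fun"]

# flatMap: each sentence yields a sequence of words, flattened into one list.
words = list(chain.from_iterable(s.split(" ") for s in sentences))

# map: pair every word with the count 1.
pairs = [(word, 1) for word in words]


def reduce_by_key(pairs, func):
    """Combine all values that share a key using a two-argument function."""
    result = {}
    for key, value in pairs:
        result[key] = func(result[key], value) if key in result else value
    return result


def group_by_key(pairs):
    """Collect all values that share a key into a list."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(groups)


# reduceByKey: sum the 1s for each word.
word_counts = reduce_by_key(pairs, lambda a, b: a + b)

# Swap to (count, word), then groupByKey: words grouped by how often they occur.
words_by_count = group_by_key((count, word) for word, count in word_counts.items())

if __name__ == "__main__":
    print(word_counts)
    print(words_by_count)
```

Note the difference between the two helpers: reduce_by_key keeps only one running value per key, while group_by_key holds every value for a key in memory at once.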
