IEEE TRANSACTIONS ON BROADCASTING, VOL. 57, NO. 2, JUNE 2011 491

Semi-Automatic 2D-to-3D Conversion Using Disparity Propagation

Xun Cao, Student Member, IEEE, Zheng Li, and Qionghai Dai, Senior Member, IEEE

Abstract—Estimating 3D information from an image sequence

has long been a challenging problem, especially for dynamic

scenes. In this paper, a novel semi-automatic 2D-to-3D conversion

method is presented to estimate the disparity maps for regular

2D video shots. Our method requires only a few user-scribbles

on very sparse key frames, and then other frames of the video

are converted to 3D automatically. Multiple objects are first

segmented by the input user-scribbles. Then, the initial disparity

map is assigned to each key frame with the aid of various preset

disparity models for each object. After the disparity assignment

step, disparity maps for other frames of the video are obtained

through a disparity propagation strategy taking into account both

color similarity and motion information. Finally, the 3D video

is synthesized according to the type of 3D display device. Our

method is verified on different kinds of challenging sequences

containing occlusion, textureless regions, color ambiguity, large

displacement movements, etc. The experimental results show

that our method has better performance than the state-of-the-art

2D-to-3D conversion systems.

Index Terms—Broadcasting, multimedia system, three-dimen-

sional vision.
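
The propagation step summarized in the abstract (carrying a key-frame disparity map to another frame using color similarity and motion information) can be illustrated with a minimal sketch. This is not the authors' formulation: it is a generic motion-compensated, color-weighted average, and the function name `propagate_disparity`, the parameters `radius` and `sigma_color`, and the assumption that a dense flow field from the target frame to the key frame is already available are all hypothetical choices made for illustration.

```python
import numpy as np

def propagate_disparity(key_frame, key_disp, target_frame, flow,
                        radius=2, sigma_color=10.0):
    """Illustrative sketch: propagate a key-frame disparity map to a target frame.

    key_frame, target_frame: H x W x 3 color images.
    key_disp: H x W disparity map of the key frame.
    flow: H x W x 2 motion field (dx, dy) mapping each target pixel to its
          assumed position in the key frame (hypothetical input).
    """
    h, w = key_disp.shape
    key = key_frame.astype(np.float64)
    tgt = target_frame.astype(np.float64)
    out = np.zeros((h, w), dtype=np.float64)
    for y in range(h):
        for x in range(w):
            # Motion information: locate the corresponding key-frame pixel.
            cx = int(np.clip(round(x + flow[y, x, 0]), 0, w - 1))
            cy = int(np.clip(round(y + flow[y, x, 1]), 0, h - 1))
            # Window around the motion-compensated position, clipped to the image.
            y0, y1 = max(cy - radius, 0), min(cy + radius + 1, h)
            x0, x1 = max(cx - radius, 0), min(cx + radius + 1, w)
            # Color similarity: key-frame pixels that look like the target
            # pixel contribute more to its disparity.
            diff = key[y0:y1, x0:x1] - tgt[y, x]
            weights = np.exp(-np.sum(diff ** 2, axis=-1) / (2.0 * sigma_color ** 2))
            total = weights.sum()
            if total < 1e-12:
                # No similar color in the window: fall back to the matched pixel.
                out[y, x] = key_disp[cy, cx]
            else:
                out[y, x] = np.sum(weights * key_disp[y0:y1, x0:x1]) / total
    return out
```

In this sketch the color weighting keeps disparities from leaking across object boundaries, while the motion field keeps the search window centered on the moving object. The nested loops are written for readability rather than speed.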

I. INTRODUCTION

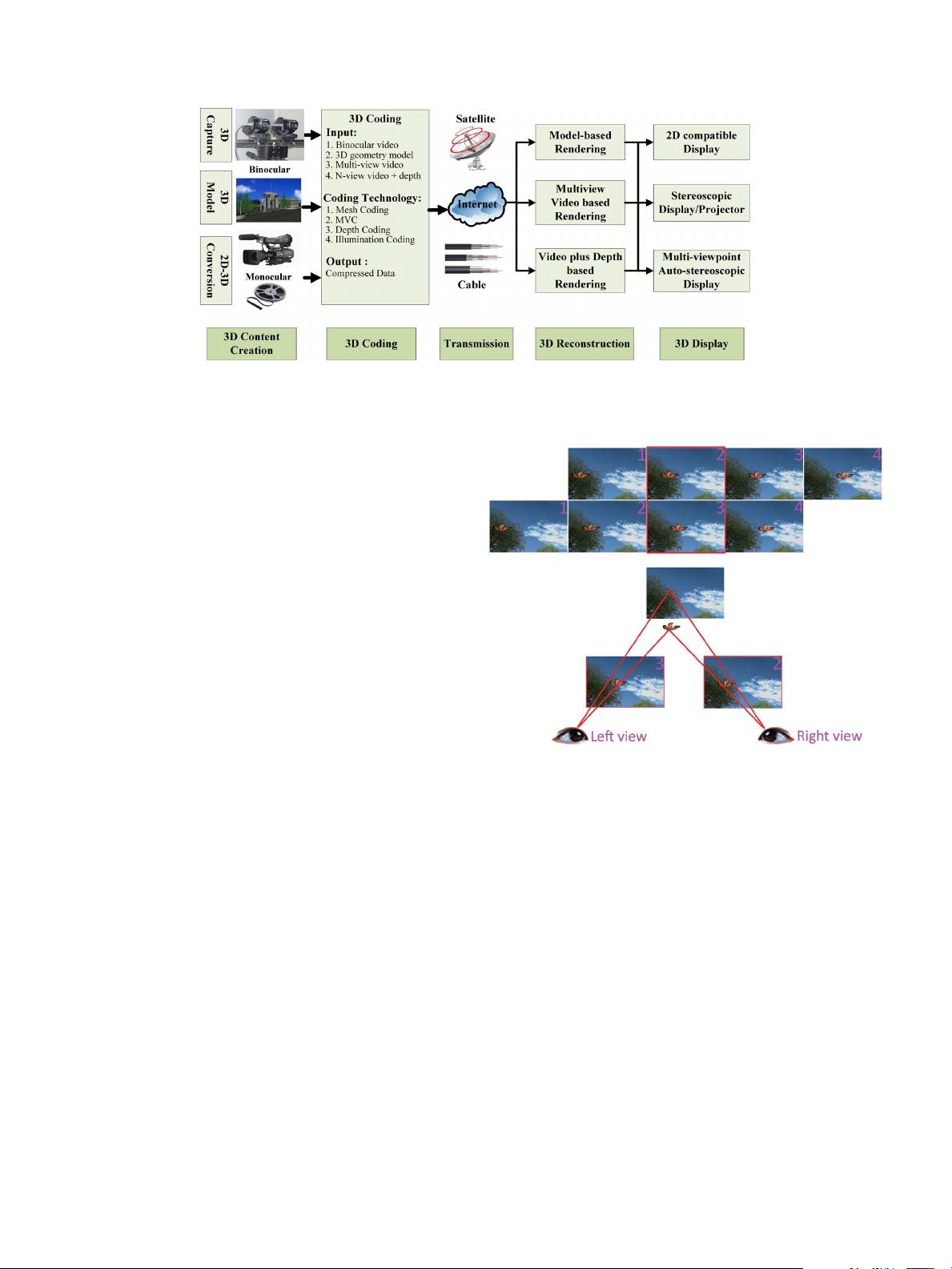

3D can be regarded as the next revolution for many applica-

tions such as television, movies, and video games. The smash

hit movie “Avatar” has demonstrated great success in the use

of 3D and announced the approach of the 3D era. However,

the tremendous production cost and the complicated 3D gener-

ation process have revealed another fact, that is, despite the re-

markable development of stereoscopic display technologies and

3D display devices (e.g., stereo projectors, auto-stereoscopic dis-

plays, holographic displays), there is still little 3D content to be played

on these systems. The lack of 3D content is becoming a severe

bottleneck for the entire 3D industry (see Fig. 1).

Manuscript received July 15, 2010; revised January 10, 2011; accepted Feb-

ruary 07, 2011. Date of publication April 19, 2011; date of current version

May 25, 2011. The work was supported by the National Basic Research Project

(2010CB731800) and the Key Projects of NSFC (61035002 and 60932007).

The authors are with the Department of Automation, Tsinghua University

and Tsinghua National Laboratory for Information Science and Technology

(TNList), Beijing 100084, China (e-mail: cao-x06@mails.tsinghua.edu.cn;

lizheng07@mails.tsinghua.edu.cn; qhdai@tsinghua.edu.cn).

Color versions of one or more of the figures in this paper are available online

at http://ieeexplore.ieee.org.

Digital Object Identifier 10.1109/TBC.2011.2127650

The purpose of 2D-to-3D conversion techniques is to esti-

mate 3D information from monocular video shots, which is

useful for converting conventional 2D video into 3D content

[1]. An effective and efficient 2D-to-3D conversion technique

can lead to 3D content creation at a lower cost and with less

time. Moreover, 2D-to-3D conversion can make full use of the

vast amount of old material generated many years ago, which

is almost impossible to re-capture in 3D. As a result, 2D-to-3D

conversion techniques can greatly alleviate the serious shortage

of 3D content. Generally, current 2D-to-3D conversion algo-

rithms can be divided into two categories: 1) semi-automatic

methods with human-computer interactive operations [2]–[6],

2) fully-automatic methods which directly output 3D video

from 2D input without any user interactions involved [7]–[9].

Semi-automatic 2D-to-3D conversion unsurprisingly has better

performance than fully-automatic methods because of the

high-level knowledge provided by users. However, the study of

automatic 2D-to-3D conversion methods is still very necessary

because human participation is impractical in many scenarios.

In most semi-automatic 2D-to-3D conversion frameworks

[4]–[6], certain frames (key frames) of the video sequence are

annotated with 3D information (e.g., depth or disparity informa-

tion) by users, and other frames (non-key frames) are converted

to 3D automatically. This framework is feasible based on the

observation that most frames are similar to others and there

exists much correlation within a single video shot. Some other

works deal with 2D-to-3D conversion from a machine learning

perspective [6], [10]; they first train from user-annotated pixels,

then infer the 3D information of other pixels based on their

training. These methods, with key frames and non-key frames,

are also semi-automatic. Nevertheless, there is a tradeoff

between labor cost and 3D conversion quality. Better 3D effects

are expected if more time is spent annotating key frames, with

an extreme case being the manual conversion of all frames in

the video, which would be extraordinarily time-consuming and

almost impossible in practice. Therefore, recent efforts have

focused on 3D conversion with just a few user-scribbles

[4]. These methods greatly facilitate user operation, but the

conversion results still leave much room for improvement. In

this paper, we propose a convenient semi-automatic 2D-to-3D

conversion scheme with only a few user strokes while main-

taining high accuracy in 3D effects. A dense disparity map on

each key frame is first generated with the aid of strokes. This

includes two steps: multiple object segmentation and disparity

assignment. Then, the dense disparity maps are propagated

from the key frames to non-key frames. Although a similar

disparity propagation strategy is presented in [5], there remain

many problems such as occlusion, large displacement move-

ments, color ambiguity, textureless regions, blurred edges,

and camera zoom in/out. In the following, we will detail the

proposed 2D-to-3D scheme and show how to tackle the afore-

mentioned issues. The most important contributions of the

paper are: 1) a convenient multiple-object segmentation tool

called “multi-snapping” together with disparity assignment to

0018-9316/$26.00 © 2011 IEEE
