
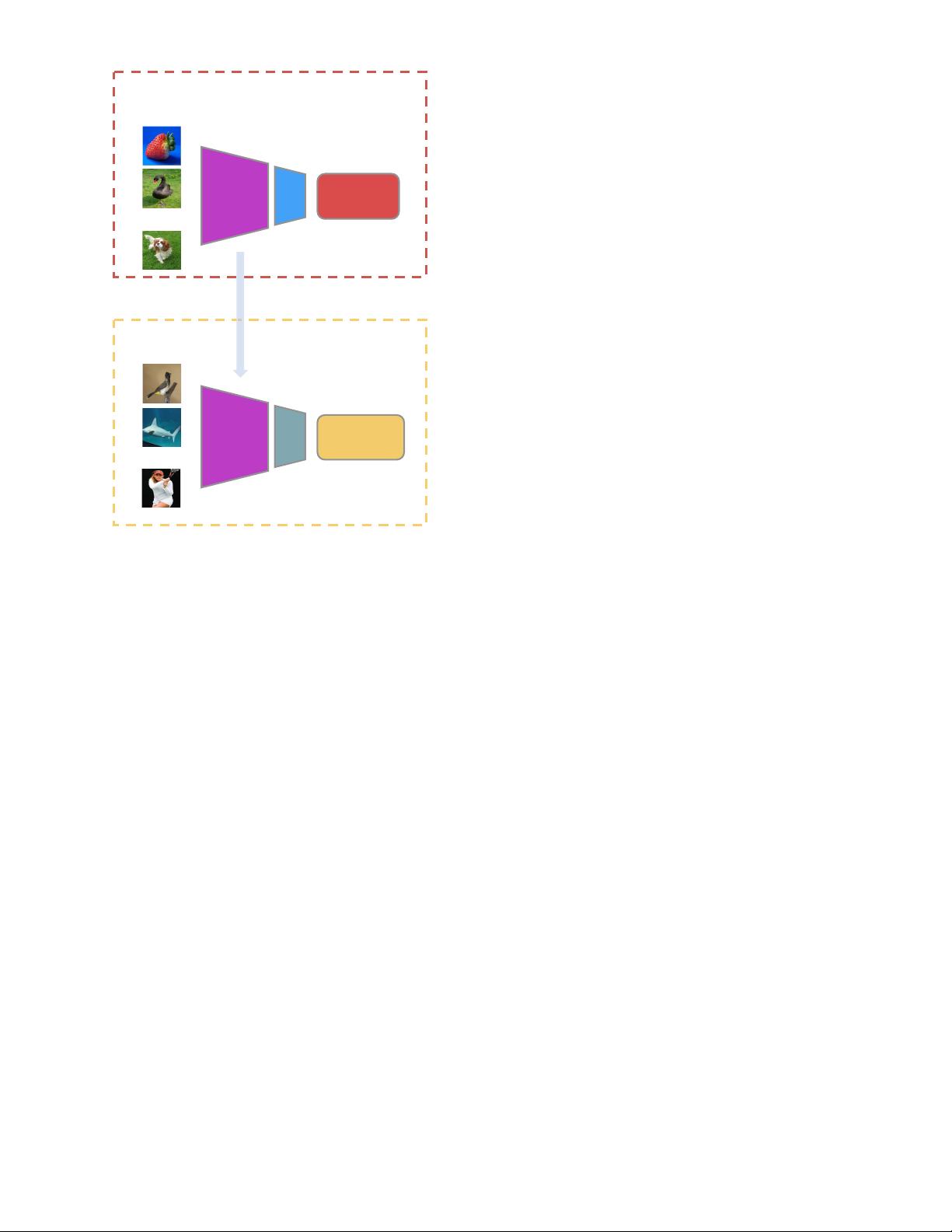

2 FORMULATION OF DIFFERENT LEARNING SCHEMAS

Based on the training labels, visual feature learning methods can be grouped into the following four categories: supervised, semi-supervised, weakly supervised, and unsupervised. In this section, the four types of learning methods are compared and key terminologies are defined.

2.1 Supervised Learning Formulation

For supervised learning, given a dataset $X$, each sample $X_i$ in $X$ has a corresponding human-annotated label $Y_i$. For a set of $N$ labeled training samples $D = \{X_i\}_{i=1}^{N}$, the training loss function is defined as:

$$\mathrm{loss}(D) = \min_{\theta} \frac{1}{N} \sum_{i=1}^{N} \mathrm{loss}(X_i, Y_i). \qquad (1)$$
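As a minimal sketch of Eq. (1), assuming a toy one-parameter model $f_\theta(x) = \theta x$ with a squared-error per-sample loss (both are illustrative assumptions, not choices made in this survey), the code below evaluates the average loss over $N$ labeled pairs and approximates the minimization over $\theta$ with plain gradient descent.

```python
from typing import Callable, Sequence, Tuple

Pair = Tuple[float, float]  # (X_i, Y_i): a sample and its human-annotated label


def empirical_loss(data: Sequence[Pair], loss_fn: Callable[[float, float], float]) -> float:
    """Average per-sample loss (1/N) * sum_i loss(X_i, Y_i), the objective inside Eq. (1)."""
    return sum(loss_fn(x, y) for x, y in data) / len(data)


def squared_loss(theta: float) -> Callable[[float, float], float]:
    """Hypothetical per-sample loss for a toy model f_theta(x) = theta * x."""
    return lambda x, y: (theta * x - y) ** 2


def fit_theta(data: Sequence[Pair], lr: float = 0.05, steps: int = 200) -> float:
    """Approximate min over theta with gradient descent on the averaged squared loss."""
    theta = 0.0
    n = len(data)
    for _ in range(steps):
        # Gradient of (1/N) * sum_i (theta * x_i - y_i)^2 with respect to theta.
        grad = sum(2.0 * (theta * x - y) * x for x, y in data) / n
        theta -= lr * grad
    return theta


if __name__ == "__main__":
    # Toy labeled pairs in which Y_i = 2 * X_i.
    labeled = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
    theta = fit_theta(labeled)
    print(theta, empirical_loss(labeled, squared_loss(theta)))
```

In a real network, $\theta$ would be millions of weights and the optimizer a variant of stochastic gradient descent, but the objective has the same shape: an average of per-sample losses over human-labeled pairs.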

Trained with accurate human-annotated labels, supervised learning methods have achieved breakthrough results on various computer vision applications [1], [4], [8], [16]. However, data collection and annotation are usually expensive and may require special expertise. Therefore, semi-supervised, weakly supervised, and unsupervised learning methods have been proposed to reduce this cost.

2.2 Semi-Supervised Learning Formulation

For semi-supervised visual feature learning, given a small labeled dataset $X$ and a large unlabeled dataset $Z$, each sample $X_i$ in $X$ has a corresponding human-annotated label $Y_i$. For a set of $N$ labeled training samples $D_1 = \{X_i\}_{i=1}^{N}$ and $M$ unlabeled training samples $D_2 = \{Z_i\}_{i=1}^{M}$, the training loss function is defined as:

$$\mathrm{loss}(D_1, D_2) = \min_{\theta} \frac{1}{N} \sum_{i=1}^{N} \mathrm{loss}(X_i, Y_i) + \frac{1}{M} \sum_{i=1}^{M} \mathrm{loss}\big(Z_i, R(Z_i, X)\big), \qquad (2)$$

where $R(Z_i, X)$ is a task-specific function that represents the relation between each unlabeled training sample $Z_i$ and the labeled dataset $X$.
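The semi-supervised objective in Eq. (2) can be sketched as follows for a fixed model (the outer $\min_\theta$ is left to an optimizer). Because $R$ is task-specific, the `nearest_label` relation used here, which copies the label of the closest labeled sample as a simple pseudo-labeling rule, is a hypothetical choice for illustration only; since it needs the labels of $X$, the labeled pairs are passed to it. Samples are assumed to be 1-D floats and the per-sample loss is assumed to be squared error.

```python
from typing import Callable, Sequence, Tuple

Pair = Tuple[float, float]  # (X_i, Y_i)


def nearest_label(z: float, labeled: Sequence[Pair]) -> float:
    """Hypothetical R(Z_i, X): return the label of the labeled sample closest to z."""
    _, y = min(labeled, key=lambda pair: abs(pair[0] - z))
    return y


def semi_supervised_loss(
    labeled: Sequence[Pair],
    unlabeled: Sequence[float],
    model: Callable[[float], float],
    relation: Callable[[float, Sequence[Pair]], float] = nearest_label,
) -> float:
    """Eq. (2) for a fixed model: supervised term on D_1 plus relation term on D_2."""
    # (1/N) * sum_i loss(X_i, Y_i), using squared error as the per-sample loss.
    supervised = sum((model(x) - y) ** 2 for x, y in labeled) / len(labeled)
    # (1/M) * sum_i loss(Z_i, R(Z_i, X)): R supplies the target for each unlabeled sample.
    unsupervised = sum((model(z) - relation(z, labeled)) ** 2 for z in unlabeled) / len(unlabeled)
    return supervised + unsupervised
```

Swapping in a different `relation` (for example, a consistency target computed from an augmented view of $Z_i$) changes the semi-supervised method without changing the overall two-term structure of the loss.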

2.3 Weakly Supervised Learning Formulation

For weakly supervised visual feature learning, given a dataset $X$, each sample $X_i$ in $X$ has a corresponding coarse-grained label $C_i$. For a set of $N$ training samples $D = \{X_i\}_{i=1}^{N}$, the training loss function is defined as:

$$\mathrm{loss}(D) = \min_{\theta} \frac{1}{N} \sum_{i=1}^{N} \mathrm{loss}(X_i, C_i). \qquad (3)$$

Since weak labels are much cheaper to obtain than the fine-grained labels required by supervised methods, large-scale datasets are relatively easy to collect. Recently, several papers have proposed learning image features from web-collected images using hashtags as category labels [21], [22], and obtained very good performance [21].
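A minimal sketch of Eq. (3) with hashtag-derived coarse labels is shown below. The hashtag vocabulary `HASHTAG_TO_CLASS`, the first-match rule, and the choice to discard images with no recognized hashtag are hypothetical and are not taken from [21] or [22]; `model` stands for any callable returning class logits, and the per-sample loss is a standard softmax cross-entropy.

```python
import math
from typing import Callable, Dict, Optional, Sequence, Tuple

# Hypothetical vocabulary mapping hashtags to coarse category indices C_i.
HASHTAG_TO_CLASS: Dict[str, int] = {"#dog": 0, "#puppy": 0, "#cat": 1, "#kitten": 1}


def coarse_label(hashtags: Sequence[str]) -> Optional[int]:
    """Return the category of the first recognized hashtag, or None if none is known."""
    for tag in hashtags:
        label = HASHTAG_TO_CLASS.get(tag.lower())
        if label is not None:
            return label
    return None


def cross_entropy(logits: Sequence[float], label: int) -> float:
    """Numerically stable softmax cross-entropy for one sample."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(v - m) for v in logits))
    return log_z - logits[label]


def weakly_supervised_loss(
    samples: Sequence[Tuple[object, Sequence[str]]],
    model: Callable[[object], Sequence[float]],
) -> float:
    """(1/N) * sum_i loss(X_i, C_i) from Eq. (3), over images that received a coarse label."""
    pairs = [(x, coarse_label(tags)) for x, tags in samples]
    kept = [(x, c) for x, c in pairs if c is not None]
    if not kept:
        raise ValueError("no sample has a recognized hashtag")
    return sum(cross_entropy(model(x), c) for x, c in kept) / len(kept)
```

The labels here are noisy by construction (a `#dog` tag does not guarantee a dog is the main subject), which is exactly the trade-off weak supervision accepts in exchange for scale.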

2.4 Unsupervised Learning Formulation

Unsupervised learning refers to learning methods that do not need any human-annotated labels. This category includes fully unsupervised learning methods, which require no labels at all, as well as self-supervised learning methods, in which networks are explicitly trained with automatically generated pseudo labels without any human annotation.

2.4.1 Self-supervised Learning

Recently, many self-supervised learning methods for visual feature learning have been developed without using any human-annotated labels [23], [24], [25], [26], [27], [28], [29], [30], [31], [32], [33], [34], [35]. Some papers refer to this type of learning method as unsupervised learning [36], [37], [38], [39], [40], [41], [42], [43], [44], [45], [46], [47], [48].

Compared with supervised learning methods, which require a data pair $X_i$ and $Y_i$ where $Y_i$ is annotated by humans, self-supervised learning also trains with data $X_i$ along with a pseudo label $P_i$, but $P_i$ is automatically generated for a pre-defined pretext task without any human annotation. The pseudo label $P_i$ can be generated from attributes of images or videos, such as the context of images [18], [19], [20], [36], or by traditional hand-designed methods [49], [50], [51].

Given a set of $N$ training samples $D = \{P_i\}_{i=1}^{N}$, the training loss function is defined as:

$$\mathrm{loss}(D) = \min_{\theta} \frac{1}{N} \sum_{i=1}^{N} \mathrm{loss}(X_i, P_i). \qquad (4)$$
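To illustrate Eq. (4), the sketch below uses rotation prediction as the pretext task: each image is rotated by $k \times 90$ degrees and the pseudo label $P_i = k$ is recorded automatically, so no human annotation is involved. Rotation prediction is chosen only as a familiar example and is not claimed to be the method of any work cited above; images are assumed to be small 2-D integer lists, and `model` is any hypothetical callable returning four logits.

```python
import math
from typing import Callable, List, Sequence, Tuple

Image = List[List[int]]


def rotate90(img: Image) -> Image:
    """Rotate a 2-D image (list of rows) by 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def make_pretext_pairs(images: Sequence[Image]) -> List[Tuple[Image, int]]:
    """Build (X_i, P_i) pairs automatically: P_i = k means X_i was rotated k * 90 degrees."""
    pairs: List[Tuple[Image, int]] = []
    for img in images:
        rotated = img
        for k in range(4):
            pairs.append((rotated, k))
            rotated = rotate90(rotated)
    return pairs


def cross_entropy(logits: Sequence[float], label: int) -> float:
    """Numerically stable softmax cross-entropy for one sample."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(v - m) for v in logits))
    return log_z - logits[label]


def self_supervised_loss(
    pairs: Sequence[Tuple[Image, int]],
    model: Callable[[Image], Sequence[float]],
) -> float:
    """(1/N) * sum_i loss(X_i, P_i) from Eq. (4), evaluated for a fixed model."""
    return sum(cross_entropy(model(x), p) for x, p in pairs) / len(pairs)
```

A model that outputs equal logits for every input scores $\log 4$ on these pairs; driving the loss lower requires recognizing image orientation, which is the kind of visual knowledge the pretext task is meant to instill in the learned features.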

As long as the pseudo labels $P$ are automatically generated without human annotation, the method belongs to self-supervised learning. Recently, self-supervised learning methods have made great progress. This paper focuses on self-supervised methods designed mainly for visual feature learning, whose learned features can be transferred to multiple visual tasks and used to perform new tasks by learning from limited labeled data. This paper summarizes these self-supervised feature learning methods from different perspectives, including network architectures, commonly used pretext tasks, datasets, and applications.

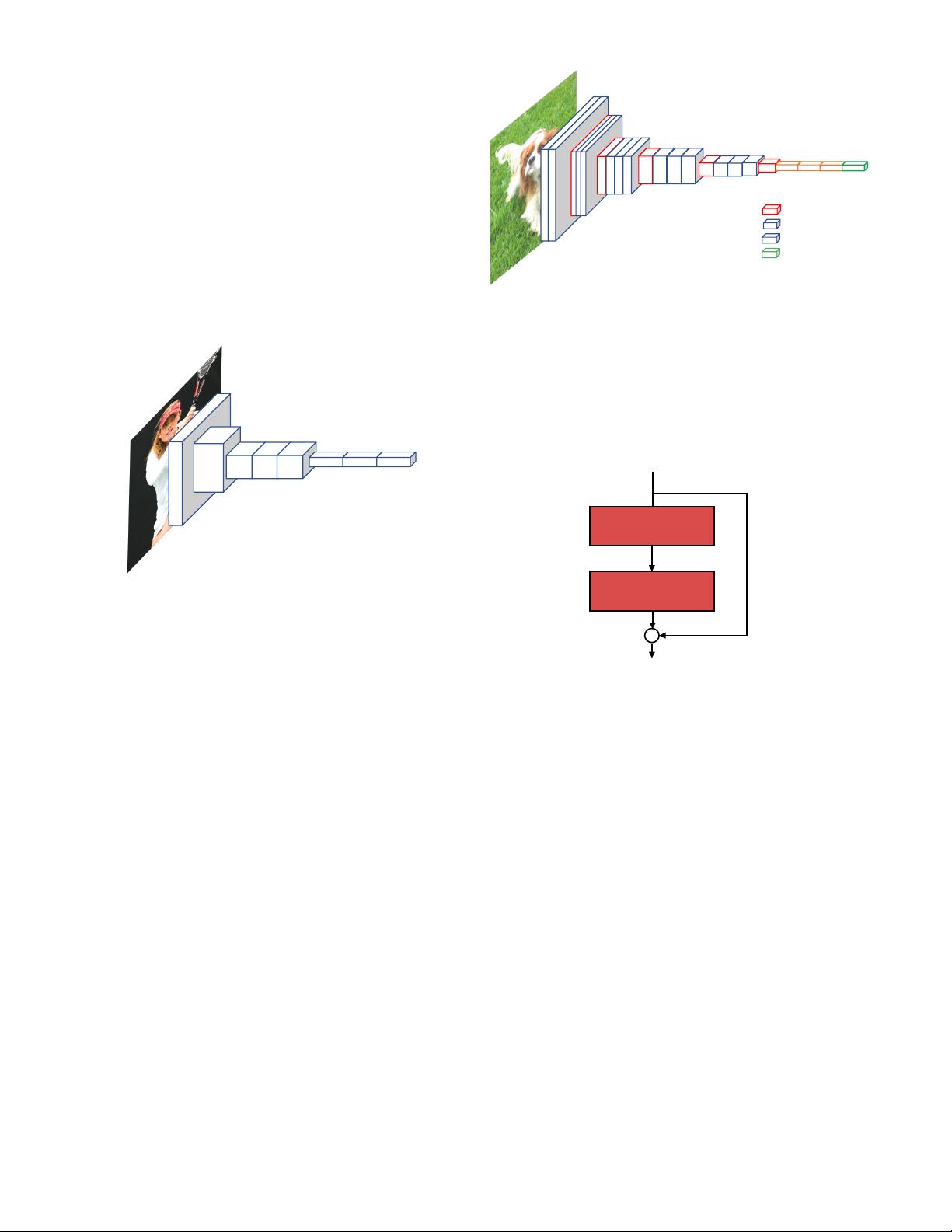

3 COMMON DEEP NETWORK ARCHITECTURES

Regardless of their category, these learning methods share similar network architectures. This section reviews common architectures for learning both image and video features.

3.1 Architectures for Learning Image Features

Various 2DConvNets have been designed for image feature learning. Here, five milestone architectures for image feature learning are reviewed: AlexNet [8], VGG [9], GoogLeNet [10], ResNet [11], and DenseNet [12].
