
Hafidz Zulkifli

Data Scientist at SEEK

Jan 22 · 8 min read

Understanding Learning Rates and How It Improves Performance in Deep Learning

This post is an attempt to document my understanding of the following topics:

• What is the learning rate? What is its significance?

• How does one systematically arrive at a good learning rate?

• Why do we change the learning rate during training?

• How do we deal with learning rates when using a pretrained model?

Much of this post is based on the stuff written by past fast.ai fellows [1], [2], [5] and [3]. This is a concise version of it, arranged so that one can quickly get to the meat of the material. Do go over the references for more details.

First off, what is a learning rate?

Learning rate is a hyper-parameter that controls how much we adjust the weights of our network with respect to the loss gradient. The lower the value, the slower we travel along the downward slope. While using a low learning rate might be a good idea in terms of making sure that we do not miss any local minima, it could also mean that we'll take a long time to converge, especially if we get stuck on a plateau region.

The following formula shows the relationship.

new_weight = existing_weight - learning_rate * gradient
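To make the update rule concrete, here is a minimal sketch in plain Python. The toy loss L(w) = (w - 3)^2 and the names `sgd_step` and `loss_gradient` are assumptions chosen for illustration; they are not taken from the referenced material.

```python
def sgd_step(weight, gradient, learning_rate):
    """Apply one update: new_weight = existing_weight - learning_rate * gradient."""
    return weight - learning_rate * gradient


def loss_gradient(weight):
    # Gradient of the assumed toy loss L(w) = (w - 3)^2, whose minimum is at w = 3.
    return 2.0 * (weight - 3.0)


weight = 0.0  # arbitrary starting point
for step in range(50):
    weight = sgd_step(weight, loss_gradient(weight), learning_rate=0.1)

print(f"weight after 50 steps: {weight:.5f}")
```

With a learning rate of 0.1, each step shrinks the distance to the minimum by a constant factor, so the weight settles close to 3.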


Typically, learning rates are configured naively at random by the user. At best, the user would draw on past experience (or other types of learning material) to gain intuition about the best value to use when setting learning rates.

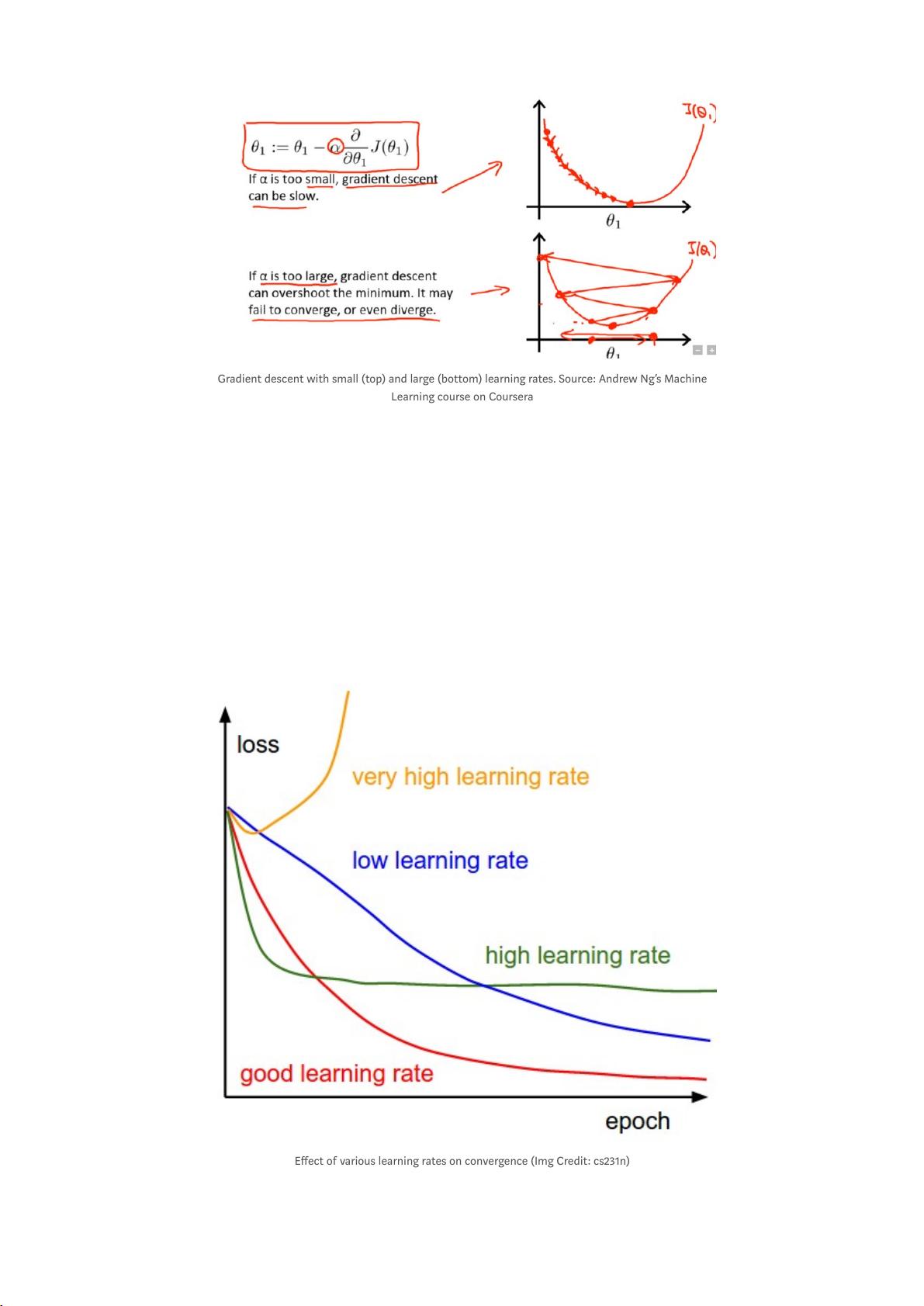

As such, it's often hard to get it right. The diagram below demonstrates the different scenarios one can fall into when configuring the learning rate.

Gradient descent with small (top) and large (bottom) learning rates. Source: Andrew Ng's Machine Learning course on Coursera

Effect of various learning rates on convergence (Img Credit: cs231n)
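The scenarios in these figures can be reproduced on a small example. The sketch below assumes a one-dimensional loss L(w) = w^2 and three arbitrarily chosen learning rates; the function name `run_gradient_descent` and all of the values are illustrative only.

```python
def run_gradient_descent(learning_rate, steps=20, start=5.0):
    """Return the loss recorded before each update on the assumed loss L(w) = w^2."""
    w = start
    losses = []
    for _ in range(steps):
        losses.append(w * w)
        gradient = 2.0 * w  # dL/dw for L(w) = w^2
        w = w - learning_rate * gradient
    return losses


# Illustrative values: too small, reasonable, and too large for this loss.
for lr in (0.01, 0.3, 1.1):
    losses = run_gradient_descent(lr)
    print(f"lr={lr}: first loss {losses[0]:.2f}, last loss {losses[-1]:.4g}")
```

On this toy loss, the smallest rate reduces the loss only slowly, the middle rate converges quickly, and the largest rate overshoots the minimum on every step so the loss grows instead of shrinking.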
