Lecture 10 - 8 Feb 2016

Fei-Fei Li & Andrej Karpathy & Justin Johnson

Lecture 10: Recurrent Neural Networks

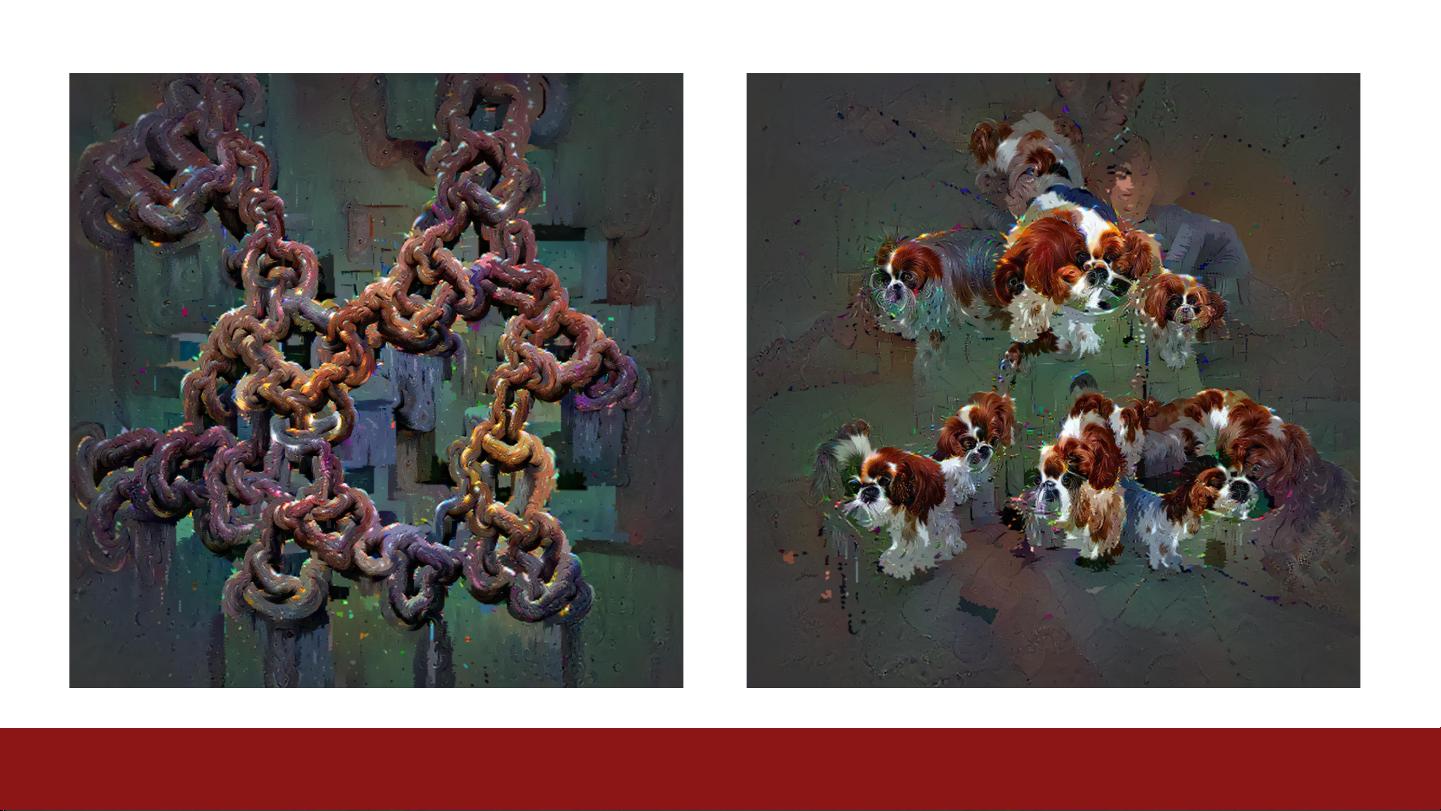

Convolutional neural networks

- RNNs allow a lot of flexibility in architecture design.
- Vanilla RNNs are simple but don’t work very well.
- It is common to use an LSTM or GRU: their additive interactions improve gradient flow.
- The backward flow of gradients in an RNN can explode or vanish. Exploding gradients are controlled with gradient clipping. Vanishing gradients are controlled with additive interactions (LSTM).
- Better/simpler architectures are a hot topic of current research.
- Better understanding (both theoretical and empirical) is needed.
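As an illustration of the gradient clipping mentioned in the summary, here is a minimal sketch in plain Python. It assumes the common "clip by global norm" variant, in which all gradients are rescaled together when their joint L2 norm exceeds a threshold; the slides do not say which variant they have in mind. The function name `clip_by_global_norm`, the threshold value, and the example gradients are all hypothetical.

```python
import math

def clip_by_global_norm(grads, max_norm):
    """Rescale gradients so their joint L2 norm is at most max_norm.

    grads is a list of flat lists of floats, one per parameter
    (a hypothetical layout chosen only for this sketch).
    Returns the (possibly rescaled) gradients and the norm before clipping.
    """
    total_norm = math.sqrt(sum(g * g for vec in grads for g in vec))
    if total_norm <= max_norm:
        # Small gradients pass through unchanged.
        return [list(vec) for vec in grads], total_norm
    # Shrink every component by the same factor, preserving direction.
    scale = max_norm / total_norm
    return [[g * scale for g in vec] for vec in grads], total_norm

# Made-up "exploding" gradients with joint norm 50.
grads = [[30.0, 40.0], [0.0]]
clipped, norm_before = clip_by_global_norm(grads, max_norm=5.0)
norm_after = math.sqrt(sum(g * g for vec in clipped for g in vec))
print(norm_before, norm_after)
```

Clipping changes only the magnitude of the update, not its direction, which is why it helps with exploding gradients but does nothing for vanishing ones; those are addressed architecturally, for example by the additive cell-state update in an LSTM.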

winter1516_lecture10.zip (1 file): winter1516_lecture10.pdf, 7.15 MB
