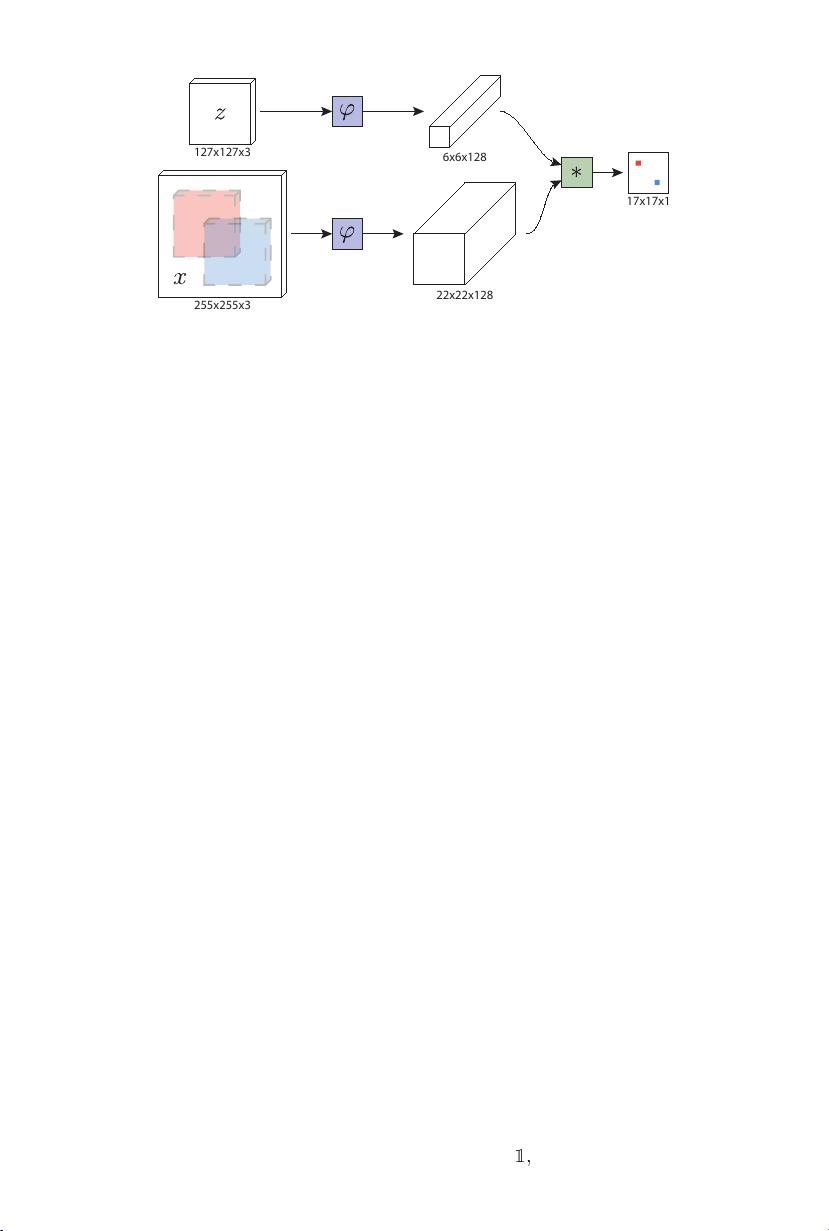

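Traditionally, arbitrary object tracking has been addressed by learning a model of the object's appearance exclusively online, using the video itself as the only training data. Despite the success of these methods, their online-only approach inherently limits the richness of the models they can learn. Recently, several attempts have been made to exploit the expressive power of deep convolutional networks. However, when the object to track is not known beforehand, the network's weights must be adapted online with stochastic gradient descent, which severely compromises the speed of the system. In this paper, we equip a basic tracking algorithm with a novel fully-convolutional Siamese network, trained end-to-end on the ILSVRC15 dataset for object detection in video. Our tracker operates at frame rates beyond real time and, despite its extreme simplicity, achieves state-of-the-art performance on multiple benchmarks.

### Fully-Convolutional Siamese Networks for Object Tracking, Based on Objective-C

#### Overview

Object tracking is a key task in computer vision. It plays an important role in applications such as video surveillance, autonomous driving and augmented reality. Traditional tracking methods usually learn an appearance model of the target online. This approach adapts reasonably well to changes in the target's appearance, but the complexity and accuracy of the model are limited by the small amount of data available and by the real-time compute budget. In recent years, deep learning, and in particular deep convolutional neural networks (DCNNs), has brought significant progress to object tracking research.

#### Fully-Convolutional Siamese Networks

This paper proposes a tracking algorithm based on a fully-convolutional Siamese network. The design aims to overcome the limitations of purely online learning while exploiting the expressive power of deep convolutional networks. A Siamese network is a neural network architecture commonly used for similarity-learning tasks such as image retrieval and face recognition. It consists of two or more sub-networks that share their weights. Each sub-network receives a different input, and the similarity between the inputs is assessed by comparing the sub-networks' outputs.

##### Network Architecture and Training

- **Network architecture**: The network has two weight-sharing branches. One branch processes the target exemplar (template) image and the other processes a larger search region. Because both branches are fully convolutional, their output feature maps are combined by cross-correlation. The result is a score map whose peak marks the most likely target position within the search region. The network is trained end-to-end on the ILSVRC15 dataset for object detection in video, using a logistic loss over the score map. The loss pushes positions near the target toward high scores and positions away from it toward low scores.
- **Training process**: The network is trained offline on this large-scale dataset, which gives it rich feature representations. At tracking time, only an image of the target template has to be provided. The network then locates the target in subsequent frames without any further online training or weight updates.

#### Real-Time Performance and Accuracy

- **Real-time performance**: The fully-convolutional Siamese network runs at frame rates beyond real time while maintaining high accuracy. This is essential for real-time applications. An autonomous driving system, for example, must respond immediately to remain safe.
- **Accuracy**: Despite its extreme simplicity, the tracker matches or outperforms existing state-of-the-art trackers on multiple benchmarks.

#### Application Scenarios

- **Video surveillance**: In public places such as large shopping malls and airports, identifying and tracking suspicious behavior in real time is essential for improving security.
- **Autonomous driving**: In complex traffic environments, accurately detecting and tracking dynamic obstacles such as pedestrians and vehicles is essential for driving safety.
- **Augmented reality**: In AR applications, precisely tracking the user's gaze direction or hand gestures improves the user experience.

#### Conclusion

The tracking algorithm based on fully-convolutional Siamese networks overcomes the limitations of traditional online-learning methods and also achieves fast and accurate tracking. Its success shows that combining deep learning with domain-specific knowledge can deliver significant performance gains in practical applications. Possible future research directions include more efficient network architectures, improved feature extraction, and better handling of occlusion and background clutter.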
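The exemplar/search matching step and the score-map loss from the network architecture description can be illustrated with a short plain-Python sketch. This is a minimal illustration under stated assumptions and not the paper's implementation. It assumes both feature maps were already produced by the same shared-weight embedding network, which is out of scope here. The helper names `cross_correlate`, `peak` and `logistic_loss` are hypothetical. For simplicity, the positive label is assigned by distance in score-map cells rather than in image pixels.

```python
import math
from typing import List, Tuple

# Feature maps are stored as channels x height x width nested lists.
# Assumption: both maps come from the SAME (shared-weight) embedding network.
FeatureMap = List[List[List[float]]]
ScoreMap = List[List[float]]


def cross_correlate(z: FeatureMap, x: FeatureMap, bias: float = 0.0) -> ScoreMap:
    """Slide exemplar features z over search features x at every 'valid' offset,
    summing channel-wise products to get one similarity score per offset."""
    channels, hz, wz = len(z), len(z[0]), len(z[0][0])
    hx, wx = len(x[0]), len(x[0][0])
    assert len(x) == channels, "both branches must output the same channel count"
    score: ScoreMap = []
    for i in range(hx - hz + 1):
        row = []
        for j in range(wx - wz + 1):
            s = bias
            for c in range(channels):
                for u in range(hz):
                    for v in range(wz):
                        s += z[c][u][v] * x[c][i + u][j + v]
            row.append(float(s))
        score.append(row)
    return score


def peak(score: ScoreMap) -> Tuple[int, int]:
    """Return (row, col) of the highest score, i.e. the estimated target offset."""
    _, i, j = max((val, i, j) for i, row in enumerate(score) for j, val in enumerate(row))
    return i, j


def logistic_loss(score: ScoreMap, radius: float) -> float:
    """Mean of log(1 + exp(-y * v)) over the score map. The label y is +1 for cells
    within `radius` of the map centre (where the target should be) and -1 elsewhere.
    Simplification: distance is measured in score-map cells, not image pixels."""
    h, w = len(score), len(score[0])
    ci, cj = (h - 1) / 2, (w - 1) / 2
    total = 0.0
    for i, row in enumerate(score):
        for j, v in enumerate(row):
            y = 1.0 if math.hypot(i - ci, j - cj) <= radius else -1.0
            t = -y * v
            # Numerically stable softplus: log(1 + exp(t)).
            total += max(t, 0.0) + math.log1p(math.exp(-abs(t)))
    return total / (h * w)


if __name__ == "__main__":
    exemplar = [[[1, 0], [0, 1]]]            # 1 channel, 2x2 template features
    search = [[[0, 0, 0, 0],
               [0, 0, 1, 0],
               [0, 0, 0, 1],
               [0, 0, 0, 0]]]               # the template pattern sits at offset (1, 2)
    scores = cross_correlate(exemplar, search)
    print(peak(scores))  # (1, 2)
    print(logistic_loss(scores, radius=0.0))
```

In a real tracker, the offset of the peak, scaled by the network's total stride, would be mapped back to a displacement in the search image. Because the template features are computed once, each new frame needs only one forward pass of the search branch plus the correlation. No online weight update is involved.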
