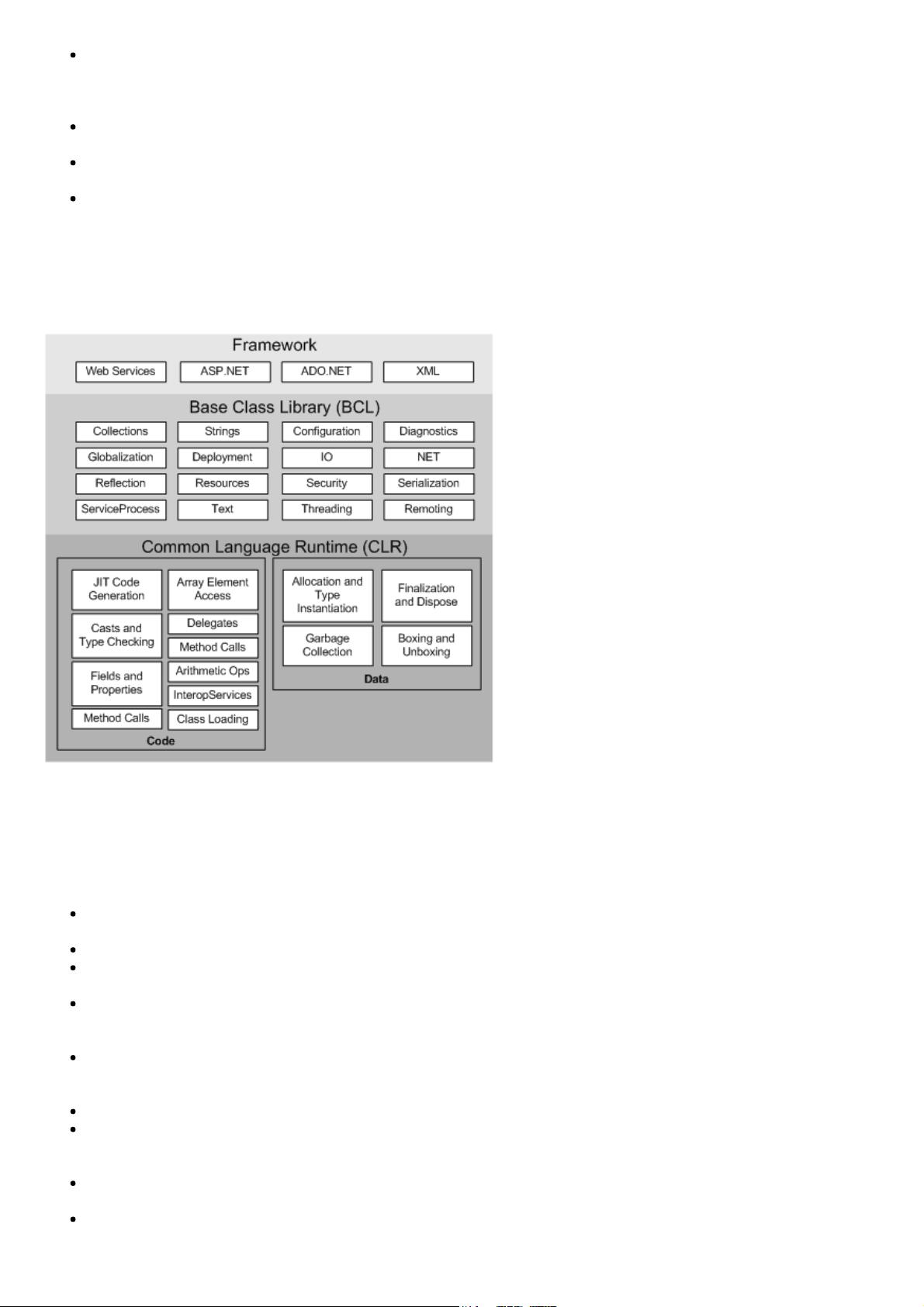

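Performance optimization of managed code is an important topic on the .NET platform, and Microsoft's official documentation offers a set of design and coding techniques to help developers improve the performance of their .NET applications. This chapter presents techniques for optimizing and improving managed code performance, and guides developers in writing managed code that takes full advantage of the high-performance features of the Common Language Runtime (CLR).

Key topics include:

1. **Class design considerations**: In a .NET architecture, sound class design is the first step in performance optimization. The design should consider how object-oriented principles such as inheritance, encapsulation, and polymorphism can simplify code logic and improve reuse and maintainability.
2. **Implementation considerations**: The implementation phase needs to account for readability, testability, and error handling. Design patterns such as factory, singleton, and strategy should be chosen to fit the specific business scenario.
3. **Garbage collection (GC) explained**: The CLR's garbage collector manages memory allocation and reclamation, which can reduce memory leaks and fragmentation, but careless use can still create performance bottlenecks. The document explains garbage collection in depth and shows how to write code that reduces its impact.
4. **Finalizers and the Dispose pattern**: In .NET, finalizers (Finalize) and the Dispose pattern are used to release resources. Understanding how they work, and the associated best practices, is essential for writing resource-safe code.
5. **Pinning**: Pinning memory prevents the garbage collector from moving a managed object, which matters most when interoperating with unmanaged code. The document gives guidance on when and how to pin memory correctly.
6. **Multithreading explained**: Multithreading can improve an application's performance and responsiveness, but incorrect synchronization can lead to deadlocks, race conditions, and similar problems. The document covers multithreading concepts in depth and offers guidelines.
7. **Asynchronous calls explained**: Asynchronous programming can improve performance and user experience, especially for I/O-bound operations. The document discusses how to use asynchronous programming patterns effectively, including the asynchronous programming model.
8. **Locking and synchronization explained**: In a multithreaded environment, correct locking and synchronization are essential for avoiding race conditions and ensuring thread safety. The document explains the design and use of locking mechanisms in detail.
9. **Value types and reference types**: Understanding the differences between value types and reference types in .NET is important for writing efficient code. The document explains conversions between the two and their performance impact.
10. **Boxing and unboxing explained**: Boxing and unboxing are the processes by which .NET converts a value type to a reference type and back; careless use causes performance problems. The document provides a detailed description along with best practices.
11. **Exception management**: Exception handling is an important part of .NET programming, but poor exception handling can seriously hurt performance. The document discusses how to use exception handling sensibly so that code stays fast.
12. **Iteration and looping**: Loops are indispensable in programs, and they are also a common source of performance bottlenecks. The document provides optimization techniques for iteration and looping.
13. **String operations**: Strings are immutable in .NET, so careless string handling causes performance problems. The document describes best practices for string operations in detail.
14. **Arrays and collections**: Arrays and collections are the most basic data structures in .NET. The document offers tips and recommendations on choosing and using them.
15. **Precompiling code with Ngen.exe**: Ngen.exe is a .NET tool that converts .NET Intermediate Language (IL) code into native code. Precompilation can shorten startup time and improve performance. The document describes how to precompile code with Ngen.exe.

When applying these techniques and best practices, developers should understand the scenarios each one suits and weigh the trade-offs between them to get the best optimization results. Because the document has been marked as outdated, developers should also consult the latest official documentation and community resources for current performance guidance.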
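Two of the topics above, string immutability and boxing, are easy to illustrate in a few lines. The following is a minimal sketch, not code taken from the document itself: the class name `PerfSketch` and its method names are hypothetical, and only standard .NET types (`StringBuilder`, `ArrayList`, `List<int>`) are assumed.

```csharp
using System;
using System.Collections;
using System.Collections.Generic;
using System.Text;

// Hypothetical illustration class; the names are not from the original document.
public static class PerfSketch
{
    // System.String is immutable, so each += allocates a new string
    // and copies the previous contents into it.
    public static string JoinWithConcat(int count)
    {
        string result = "";
        for (int i = 0; i < count; i++)
        {
            result += i.ToString();
        }
        return result;
    }

    // StringBuilder appends into a growable buffer and produces
    // one final string when ToString() is called.
    public static string JoinWithBuilder(int count)
    {
        var sb = new StringBuilder();
        for (int i = 0; i < count; i++)
        {
            sb.Append(i);
        }
        return sb.ToString();
    }

    // ArrayList stores elements as object, so every int is boxed on Add
    // and unboxed by the cast when it is read back.
    public static int SumBoxed(int count)
    {
        var list = new ArrayList();
        for (int i = 0; i < count; i++)
        {
            list.Add(i); // boxing
        }
        int sum = 0;
        foreach (object o in list)
        {
            sum += (int)o; // unboxing
        }
        return sum;
    }

    // List<int> stores the values directly, so no boxing takes place.
    public static int SumGeneric(int count)
    {
        var list = new List<int>();
        for (int i = 0; i < count; i++)
        {
            list.Add(i);
        }
        int sum = 0;
        foreach (int value in list)
        {
            sum += value;
        }
        return sum;
    }

    public static void Main()
    {
        // Both variants of each pair produce the same result; they differ in allocations.
        Console.WriteLine(JoinWithConcat(5) == JoinWithBuilder(5));
        Console.WriteLine(SumBoxed(5) == SumGeneric(5));
    }
}
```

Each pair returns identical results, so the difference lies in allocation behavior rather than output. Whether that difference matters in a given application is best confirmed by measurement, since for small inputs the gap is often negligible.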
