Reining in the Outliers in Map-Reduce Clusters using Mantri

Ganesh Ananthanarayanan

†⋄

Srikanth Kandula

†

Albert Greenberg

†

Ion Stoica

⋄

Yi Lu

†

Bikas Saha

‡

Edward Harris

‡

†

Microso Research

⋄

UC Berkeley

‡

Microso Bing

Abstract– Experience from an operational Map-Reduce

cluster reveals that outliers signicantly prolong job com-

pletion. e causes for outliers include run-time con-

tention for processor, memory and other resources, disk

failures, varying bandwidth and congestion along net-

work paths and, imbalance in task workload. We present

Mantri, a system that monitors tasks and culls outliers us-

ing cause- and resource-aware techniques. Mantri’s strate-

gies include restarting outliers, network-aware placement

of tasks and protecting outputs of valuable tasks. Using

real-time progress reports, Mantri detects and acts on out-

liers early in their lifetime. Early action frees up resources

that can be used by subsequent tasks and expedites the job

overall. Acting based on the causes and the resource and

opportunity cost of actions lets Mantri improve over prior

work that only duplicates the laggards. Deployment in

Bing’s production clusters and trace-driven simulations

show that Mantri improves job completion times by .

Introduction

In a very short time, Map-Reduce has become the domi-

nant paradigm for large data processing on compute clus-

ters. Soware frameworks based on Map-Reduce [

, ,

] have been deployed on tens of thousands of machines

to implement a variety of applications, such as building

search indices, optimizing advertisements, and mining

social networks.

While highly successful, Map-Reduce clusters come

with their own set of challenges. One such challenge is

the oen unpredictable performance of the Map-Reduce

jobs. A job consists of a set of tasks which are organized in

phases. Tasks in a phase depend on the results computed

by the tasks in the previous phase and can run in paral-

lel. When a task takes longer to nish than other similar

tasks, tasks in the subsequent phase are delayed. At key

points in the job, a few such outlier tasks can prevent the

rest of the job from making progress. As the size of the

cluster and the size of the jobs grow, the impact of outliers

increases dramatically. Addressing the outlier problem is

critical to speed up job completion and improve cluster

eciency.

Even a few percent of improvement in the eciency

of a cluster consisting of tens of thousands of nodes can

save millions of d ollars a year. In addition, nishing pro-

duction jobs quickly is a competitive advantage. Doing

so predictably allows SLAs to be met. In iterative mod-

ify/ debug/ analyze development cycles, the ability to it-

erate faster improves programmer productivity.

In this paper, we characterize the impact and causes

of outliers by measuring a large Map-Reduce production

cluster. is cluster is up to two orders of magnitude

larger than those in previous publications [

, , ] and

exhibits a high level of concurrency due to many jobs si-

multaneously running on the cluster and many tasks on

a machine. We nd that variation in completion times

among functionally similar tasks is large and that outliers

inate the completion time of jobs by 34% at median.

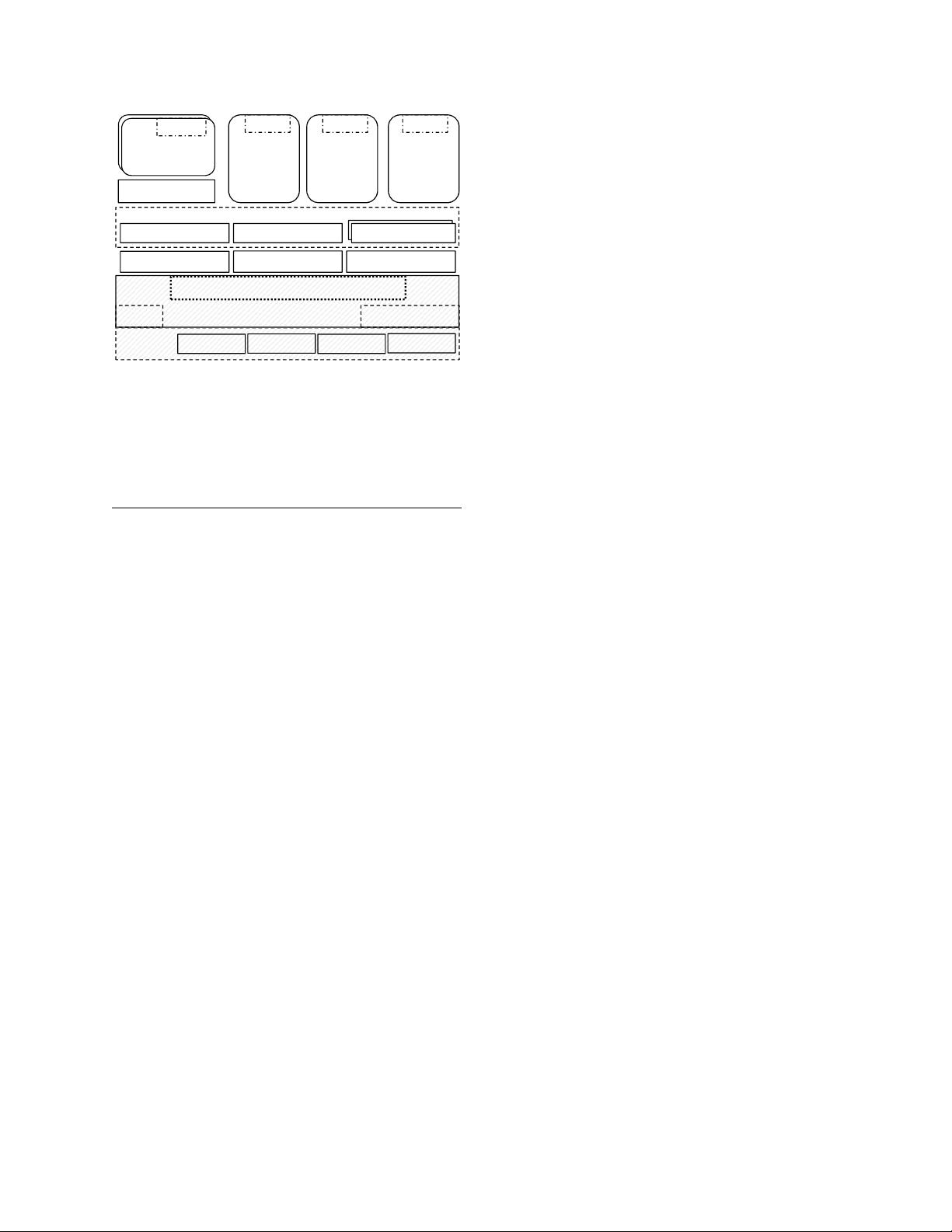

We identify three categories of root causes for outliers

that are induced by the interplay between storage, net-

work and str ucture of Map-Reduce jobs. First, machine

characteristics play a key role in the performance of tasks.

ese include static aspects such as hardware reliabil-

ity (e.g., disk failures) and dynamic aspects such as con-

tention for processor, memory and other resources. Sec-

ond, network characteristics impact the d ata transfer rates

of tasks. Datacenter networks are over-subscribed leading

to variance in congestion among dierent paths. Finally,

the specics of Map-Reduce leads to imbalance in work –

partitioning data over a low entropy key space oen leads

to a skew in the input sizes of tasks.

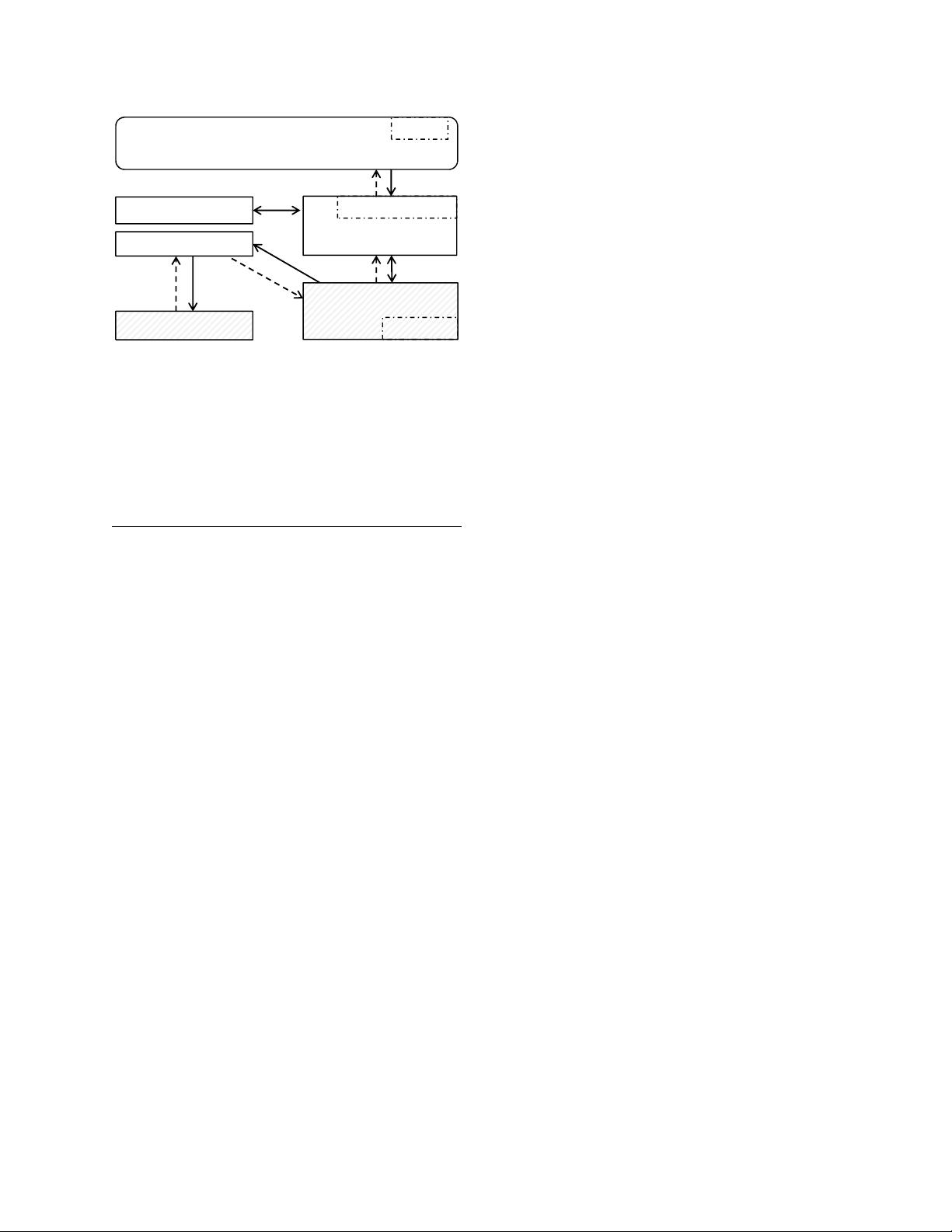

We present Mantri1, a system that monitors tasks and

culls outliers based on their causes. It uses the follow-

ing techniques: (i) Restarting outlier tasks cognizant of

resource constraints and work imbalances, (ii) Network-

aware placement of tasks, and (iii) Protecting output of

tasks based on a cost-benet analysis.

e detailed analysis and decision process employed by

Mantri is a key departure from the state-of-the-art for out-

lier mitigation in Map-Reduce implementations [

, ,

]; these fo cus only on duplicating tasks. To our knowl-

edge, none of them protect against data loss induced re-

computations or network congestion induced outliers.

Mantri places tasks based on the locations of their data

sources as well as the current utilization of network links.

On a task’s completion, Mantri replicates its output if the

1From Sanskrit, a minister who keeps the king’s court in order

- 1

- 2

- 3

- 4

前往页