Systematic attribute reductions based on double granulation structures and three-view uncertainty measures in interval-set decision systems

Xin Xie$^{a,b}$, Xianyong Zhang$^{a,b,c,∗}$

$^{a}$ School of Mathematical Sciences, Sichuan Normal University, Chengdu 610066, China

$^{b}$ Institute of Intelligent Information and Quantum Information, Sichuan Normal University, Chengdu 610066, China

$^{c}$ Visual Computing and Virtual Reality Key Laboratory of SiChuan Province, Sichuan Normal University, Chengdu 610066, China

Abstract

Attribute reductions eliminate redundant information and are therefore valuable in data reasoning. In the data context of interval-set decision systems (ISDSs), attribute reductions rely on granulation structures and uncertainty measures; however, the current structures and measures are each limited to a single form, so enriching them implies corresponding improvements of attribute reductions. Aiming at ISDSs, a fuzzy-equivalent granulation structure is proposed to improve the existing similar granulation structure, and dependency degrees are proposed to enrich the existing condition entropy by using algebra-information fusion, so 3 × 2 attribute reductions are systematically formulated to contain both a basic reduction algorithm (called CAR) and five advanced reduction algorithms. At the granulation level, the similar granulation structure is improved to the fuzzy-equivalent granulation structure by removing the granular repeatability, and two knowledge structures emerge. At the measurement level, dependency degrees are proposed from the algebra perspective to supplement the condition entropy from the information perspective, and mixed measures are generated by fusing dependency degrees and condition entropies from the algebra-information viewpoint, so three-view and three-way uncertainty measures emerge to acquire granulation monotonicity/non-monotonicity. At the reduction level, the two granulation structures and three-view uncertainty measures two-dimensionally produce 3 × 2 heuristic reduction algorithms based on attribute significances, and thus five new algorithms emerge to improve an old algorithm (i.e., CAR). Finally, data experiments show that the 3 × 2 systematically constructed measures and attribute reductions are effective, and comparative results validate the three-level improvements of granulation structures, uncertainty measures, and reduction algorithms on ISDSs. This study resorts to tri-level thinking to enrich the theory and application of three-way decision.

Keywords: Attribute reduction; Interval-set decision system; Granulation structure; Uncertainty measure; Condition entropy; Granulation monotonicity/non-monotonicity

1. Introduction

Attribute reductions in rough set theory are related to feature selections in machine learning, and they mainly reduce data dimensionality to facilitate information processing and knowledge discovery [1]. Attribute reductions have various approaches, especially for classification tasks and learning [2, 3, 4, 5, 6, 7, 8, 9]. They have become a fundamental research topic and are extensively applied in multiple fields such as formal concept analysis [10, 11, 12].

Rough set theory explores data reasoning, and thus it relies on decision systems with data representations. Traditionally, attribute values of samples are single-valued, so single-valued decision systems (SVDSs) are mainly employed in multiple generic environments [13, 14, 15, 16, 17]. In practical scenarios, attribute values are often uncertain or fuzzy, so they can exhibit interval-based forms. Accordingly, Yao [18] introduced interval sets, and relevant concepts based on interval sets (including interval-set information tables (ISITs) and interval-set decision systems (ISDSs) [19]) have received continuous research. For example, Zhong and Huang [20] analyzed granulation structures of interval sets from measure and set; Lin et al. [21] introduced the conjunction form and dominance relation in ISITs; Li et al. [22] discussed the concept representation and rule induction in incomplete ISITs; Wang [23] gave the fuzzy preference relation in ISITs; Zhang et al. [24] discussed the uncertainty measurement in ISITs; recently, attribute reduction algorithms in ISDSs have received some discussion [25, 26, 27, 28]. Clearly, ISDSs are significant for data learning; however, their attribute reductions and corresponding heuristic algorithms are relatively rare, so they are worth advancing for better approximate reasoning. ISDSs-driven attribute reductions usually rely on granulation structures and uncertainty measures, and thus the two aspects are next analyzed to induce the corresponding enrichments and improvements of attribute reductions.

∗ Corresponding author

Email addresses: 702374273@qq.com (Xin Xie), xianyongzh@sina.com.cn (Xianyong Zhang)

Preprint submitted to International Journal of Approximate Reasoning, May 5, 2024

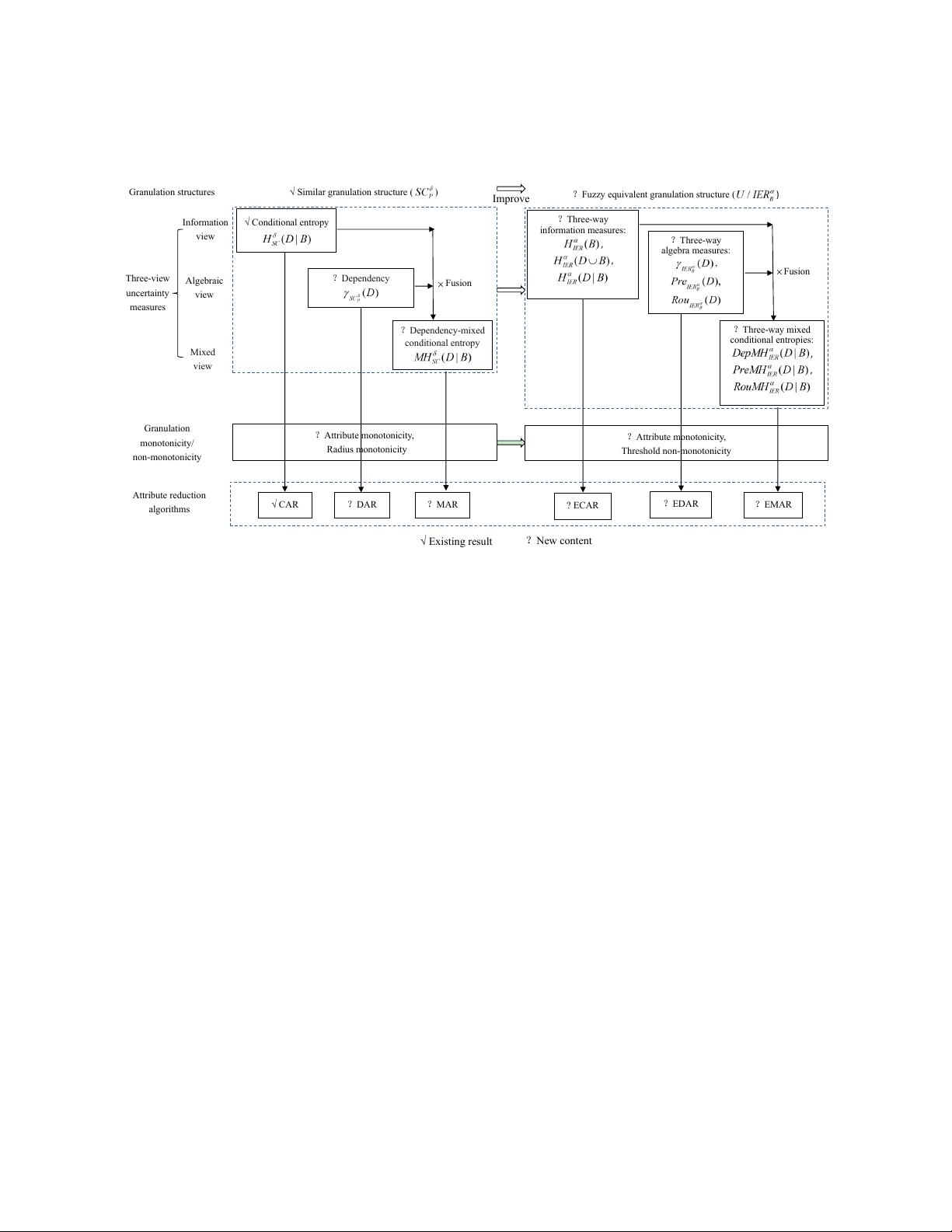

(a) Similar granulation structure (b) Fuzzy-equivalent granulation structure

Figure 1: Two granulation structures on interval-set decision system.

Aiming at ISDSs, the sample granulation is fundamental, and it usually relies on the object similarity [24, 26]. In

recent studies, sample similarity functions are primarily developed, and they further generate the similar granulation

structure. This granulation method formulates a sample partition structure that accurately traces the sample’s location,

but it also carries two limitations for further advancement.

• The similar granulation structure may contain repeated granules. As shown in Fig. 1(a) with circle labels of similarity classes, the classes of samples $x_1, x_2, x_4$ are the same $\{x_1, x_2, x_3, x_4, x_5\}$, so including all three samples in the granulation structure would involve the granule $\{x_1, x_2, x_3, x_4, x_5\}$ three times. This granular redundancy easily causes information deviation that impacts uncertainty measurement.

• The similar granulation structure may contain different granules that overlap on the same samples, and this case leads to duplicate counting of the same sample. As shown in Fig. 1(a), sample $x_1$ has the similarity class $\{x_1, x_2, x_3, x_4, x_5\}$, while sample $x_5$ has the similarity class $\{x_1, x_2, x_4, x_5\}$; although $\{x_1, x_2, x_3, x_4, x_5\}$ and $\{x_1, x_2, x_4, x_5\}$ are different, including both in a granular structure would double-count samples $x_1, x_2, x_4, x_5$. This overlap redundancy is not conducive to information optimization and accurate measurement.

This paper first addresses the two issues of the similar granulation structure, and we propose a new structure based on a fuzzy similarity relation, called the fuzzy-equivalent granulation structure. As shown in Fig. 1(b), we eventually present the new structure $\{\{x_3\}, \{x_5\}, \{x_1, x_2, x_4\}\}$ in terms of the similarity classes $G_{x_1}, G_{x_2}, G_{x_3}, G_{x_4}, G_{x_5}$ in Fig. 1(a), and the three granules $\{x_3\}, \{x_5\}, \{x_1, x_2, x_4\}$ have the advantages of non-repetition and non-overlap, improving the initial similarity-granular structure, i.e., $\{G_{x_1}, G_{x_2}, G_{x_3}, G_{x_4}, G_{x_5}\}$ in Fig. 1(a). In other words, the fuzzy-equivalent granulation structure can eliminate the two redundancy problems (related to granule repetition and sample interaction) of the similar granulation structure, and thus its consequent informatization and measurement would have the corresponding superiority.

Uncertainty measures based on knowledge granulation play a crucial role in rough learning, and their various forms are utilized for attribute reductions [29, 30, 31, 32]. In particular, algebraic and informational measures adhere to uncertainty modeling and information theory, and their heterogeneous fusion can induce powerful measurement reinforcement and efficient reduction algorithms [33, 34, 35]. For instance, Jiang et al. [36] proposed the relative decision entropy on attribute dependency degree for feature reduction, Wang et al. [37] introduced neighborhood self-information on approximation accuracy for attribute reduction, and Xu et al. [38] fused the neighborhood credibility/coverage and neighborhood joint entropy to improve attribute reductions. In terms of ISDSs, uncertainty measures also facilitate attribute reductions. Recent attribute reductions in [25, 26, 27, 28] resort to only a single form of algebraic or informational measure; more generally, fused uncertainty measures and improved reduction algorithms have rarely been considered, so they become a valuable topic. This paper later focuses on ISDSs-driven attribute reductions and heuristic algorithms by measure fusion and reduct enrichment. In [26], a condition entropy is proposed by modifying the classical condition entropy in [39], and this information measure induces an effective attribute reduction algorithm, called CAR. For metric and algorithmic enrichments, we will introduce the algebraic dependency $\gamma$ and combine it with the condition entropy $H$ to produce an algebraic-informational measure $(1 - \gamma)H$ (called the mixed condition entropy), and thus three-view uncertainty measures $H$, $\gamma$, $(1 - \gamma)H$ emerge to further formulate three-view attribute reductions for learning development and classification improvement.
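As a minimal illustration of the multiplicative fusion $(1 - \gamma)H$ mentioned above, the following Python sketch evaluates the mixed measure for a few inputs. The function name and the numeric values are hypothetical, and the sketch assumes that $\gamma$ lies in $[0, 1]$, as the classical dependency degree does; the concrete definitions of $\gamma$ and $H$ on ISDSs are given later in the paper and are not reproduced here.

```python
def mixed_condition_entropy(gamma: float, h: float) -> float:
    """Fuse a dependency degree gamma with a condition entropy h as (1 - gamma) * h.

    Assumption: gamma is in [0, 1]; h is whatever value the entropy measure returns.
    """
    if not 0.0 <= gamma <= 1.0:
        raise ValueError("gamma is assumed to lie in [0, 1]")
    return (1.0 - gamma) * h


# Hypothetical values, chosen only to show the behaviour at the two extremes.
h = 0.8
no_dependency = mixed_condition_entropy(0.0, h)    # the entropy is left unchanged
half_dependency = mixed_condition_entropy(0.5, h)  # the entropy is halved
full_dependency = mixed_condition_entropy(1.0, h)  # the mixed measure vanishes
print(no_dependency, half_dependency, full_dependency)
```

Under these assumptions, a larger dependency degree scales the entropy down, so the mixed measure reflects both views at once.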

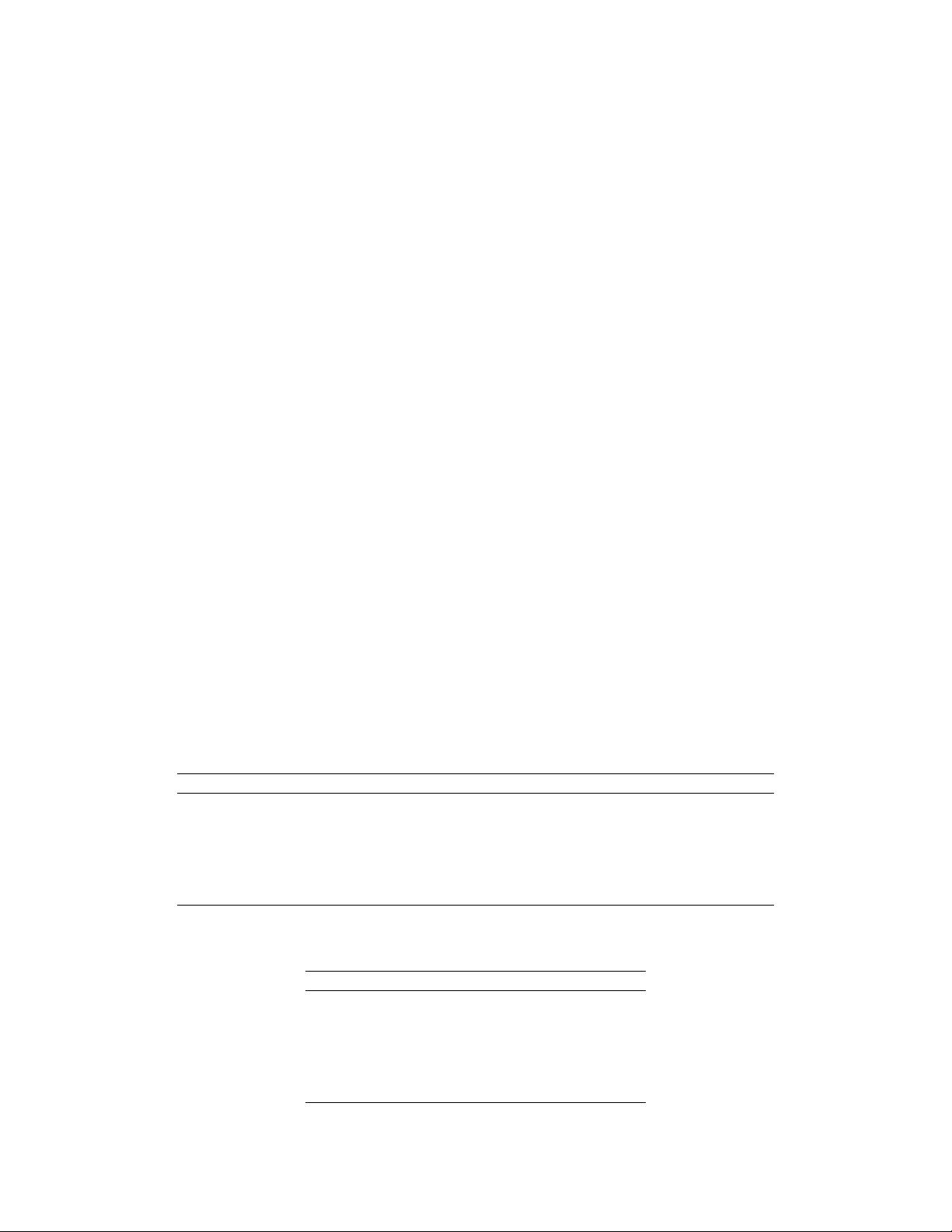

(Diagram labels recovered from Figure 2. Granulation structures: similar granulation structure [existing], improved to the fuzzy-equivalent granulation structure [new]. Three-view uncertainty measures: information view (conditional entropy [existing], three-way information measures [new]); algebraic view (dependency, three-way algebra measures [new]); mixed view obtained by fusion (dependency-mixed conditional entropy, three-way mixed conditional entropies [new]). Granulation monotonicity/non-monotonicity: attribute monotonicity, radius monotonicity, threshold non-monotonicity [new]. Attribute reduction algorithms: CAR [existing]; DAR, MAR, ECAR, EDAR, EMAR [new].)

Figure 2: Research framework on granulation structures, uncertainty measures, and attribute reductions.

According to the above background and ideas, this paper mainly makes three-level improvements of granulation structures, uncertainty measures, and attribute reductions in terms of ISDSs. Our research framework is shown in Fig. 2, which gives more details on measure construction.

(1) Regarding ISDSs, the existing similar granulation structure has two redundancy problems (related to granule repetition and sample interaction), and the fuzzy-equivalent granulation structure is proposed to make the corresponding improvements, as demonstrated and analyzed in Fig. 1 (especially its subfigure (b)). Thus, there are two granulation structures, and the new one can be calculated by a corresponding algorithm (i.e., Algorithm 2).

(2) The dependency degree is used to complement the existing condition entropy in [26], and the fusion measure is generated by the multiplication operator. There are thus three uncertainty measures from the algebraic, informational, and algebraic-informational viewpoints. These three-view uncertainty measures come with relevant algorithms (i.e., Algorithms 1 and 3), and their granulation monotonicity/non-monotonicity on attribute subsets and parameter thresholds is investigated and revealed.

(3) The two granulation structures and three-view measures are combined to induce 3 × 2 uncertainty measures, and the latter further motivate attribute reductions and corresponding heuristic algorithms within the 3 × 2 = 6 framework. Thus, two structural reducts and six metric reducts are defined, and their relationships of strength derivation and intersection interaction are revealed. In terms of metric significance, six reduction algorithms are systematically established (in Algorithm 4), and they contain both the current algorithm CAR [26] and five novel reduction algorithms (called DAR, MAR, ECAR, EDAR, EMAR). The new reduction algorithms enrich and improve CAR.

(4) Finally, data experiments are performed to verify the three-level improvements, showing that the new granulation structure, uncertainty measures, and attribute reductions are all effective. In particular, the five improved reduction algorithms generally outperform the contrastive algorithm CAR and achieve better classification performance.


Three-way decision is an important methodology with Triading-Acting-Optimizing [40], and it relies on three-level thinking [41] to provide three-level analysis [42] and corresponding supports. Aiming at ISDSs, the three-level improvements of granulation structures, uncertainty measures, and attribute reductions first fall into the existing framework of three-level thinking and analysis [41, 42, 43, 44], and the corresponding contributions then bring new hierarchical development, especially for three-level uncertainty measures and attribute reductions. This study adheres to three-level thinking and analysis, so it could enrich the theory and application of three-way decision.

The remainder of this paper on ISDSs is organized as follows. Section 2 reviews the similar granulation structure and introduces the mixed condition entropy. Section 3 proposes the fuzzy-equivalent granulation structure and correspondingly constructs three-view uncertainty measures. Section 4 determines systematic attribute reductions and six corresponding heuristic reduction algorithms. Section 5 performs data experiments for effectiveness validation and superiority comparison. Section 6 finally concludes this study.

2. Three-view uncertainty measures based on similar granulation structure

In real-life scenarios, information is often incomplete, and sample-value descriptions usually resort to upper and

lower bounds. Therefore, concepts of interval sets are initially proposed by Yao [18] and are further refined by Zhang

et al. [24]. In this section, the relevant formal context of ISDSs is reviewed to offer the similar granulation structure,

and the corresponding three-view uncertainty measures are formulated by proposing a mixed conditional entropy.

2.1. Similar granulation structure on ISDSs

Herein, ISDS and its basic similar granulation structure are successively recalled.

Definition 1 (ISDS [24]). An interval set, denoted as $A = [A_1, A_2] = \{A \in 2^U \mid A_1 \subseteq A \subseteq A_2\}$, is a set where $A_1$ and $A_2$ are subsets of a reference set $U$. The lower boundary set of $A$ is $A_1$ and the upper boundary set is $A_2$. If $A_1 = A_2$, then $A$ is an ordinary set.
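To make the set-builder form of Definition 1 concrete, the following Python sketch enumerates every ordinary set lying between a lower and an upper boundary set. The function name is hypothetical and the enumeration is only an illustration of the definition, not a procedure taken from the paper; the sample value is the “Listening” entry of $x_1$ in Table 1.

```python
from itertools import combinations


def interval_set_members(lower, upper):
    """Enumerate every ordinary set A with lower ⊆ A ⊆ upper."""
    lower, upper = frozenset(lower), frozenset(upper)
    if not lower <= upper:
        raise ValueError("the lower boundary set must be a subset of the upper one")
    optional = sorted(upper - lower)  # elements that may or may not belong to A
    members = []
    for k in range(len(optional) + 1):
        for extra in combinations(optional, k):
            members.append(lower | frozenset(extra))
    return members


# "Listening" value of x1 in Table 1: [{C, S}, {E, C, S}]
members = interval_set_members({"C", "S"}, {"E", "C", "S"})

# When both boundary sets coincide, the interval set reduces to one ordinary set.
degenerate = interval_set_members({"S"}, {"S"})

print(len(members), len(degenerate))
```

Here the only optional element is E, so the interval set contains exactly two ordinary sets, {C, S} and {E, C, S}.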

Based on the definition of interval sets, we can formulate the ISDS, i.e., the interval-set decision system/table. $ISDS = (U, C \cup D, V, f)$ is a four-tuple. Here, $U = \{x_1, x_2, \cdots, x_n\} = \{x_i \mid i = 1, 2, \cdots, n\}$ is a finite nonempty universe, $C = \{a_1, a_2, \cdots, a_{|C|}\}$ is a finite nonempty set of condition attributes, $D = \{d\}$ is related to a decision attribute $d$, $V = \bigcup_{a \in A \subseteq C} V_a$ represents the set of attribute values (where $V_a$ is a nonempty set of values for $a \in C$), and $f : U \times A \to V$ ($A \subseteq C$) is an information function. In particular, the value of sample $x \in U$ under condition attribute $a \in A \subseteq C$ is an interval set, i.e., $f(x, a) = [x_a^-, x_a^+]$ with $x_a^- \subseteq x_a^+$, $x_a^- \subseteq V_a$, $x_a^+ \subseteq V_a$.

Example 1. For illustrations, two ISDSs in [24] are introduced in Tables 1 and 2, and they are symbolic and numerical

types, respectively.

Table 1: An interval-set decision table in symbolic form [24].

| U | Listening | Speaking | Reading | Writing | Excellent |
|---|---|---|---|---|---|
| $x_1$ | [{C, S}, {E, C, S}] | [{E}, {E, C, S}] | [{C, S}, {C, S, F}] | [{E, S}, {E, C, S}] | Yes |
| $x_2$ | [{C, S}, {C, S, F}] | [{S}, {S, F}] | [{S, F}, {C, S, F}] | [{C, S}, {C, S}] | Yes |
| $x_3$ | [{C}, {C, S}] | [{S}, {S, F}] | [{F}, {S, F}] | [{E, S}, {E, C, S}] | No |
| $x_4$ | [{C}, {C, F}] | [{S}, {S, F}] | [{S}, {S}] | [{C, S}, {C, S}] | No |
| $x_5$ | [{S}, {S, F}] | [{F}, {C, F}] | [{E}, {E, S}] | [{C, S}, {C, S}] | Yes |
| $x_6$ | [{S}, {S}] | [{S}, {C, S}] | [{C, S}, {C, S}] | [{C, S}, {C, S}] | No |

Table 2: An interval-set decision system in numerical form [24].

| U | $a_1$ | $a_2$ | $a_3$ | D |
|---|---|---|---|---|
| $x_1$ | [{0}, {0, 1, 2}] | [{1, 2}, {1, 2, 3}] | [{0, 2}, {0, 1, 2}] | 1 |
| $x_2$ | [{2}, {2, 3}] | [{2, 3}, {1, 2, 3}] | [{1, 2}, {1, 2}] | 1 |
| $x_3$ | [{2}, {2, 3}] | [{3}, {2, 3}] | [{0, 2}, {0, 1, 2}] | 2 |
| $x_4$ | [{2}, {2, 3}] | [{2}, {2}] | [{1, 2}, {1, 2}] | 2 |
| $x_5$ | [{3}, {1, 3}] | [{0}, {0, 2}] | [{1, 2}, {1, 2}] | 2 |
| $x_6$ | [{2}, {1, 3}] | [{1, 2}, {1, 2}] | [{1, 2}, {1, 2}] | 1 |


In Table 1, $C$ collects the condition attributes “Listening, Speaking, Reading, Writing”, and $D$ has the decision attribute “Excellent”. Each cell at the intersection of a row and a column gives the attribute value of the sample under the corresponding attribute. For example, the first cell [{C, S}, {E, C, S}] is the attribute value of sample $x_1$ under the condition attribute “Listening”, and it has the interval-set form. Table 2 can be similarly analyzed.

In ISDSs, equivalence relations are not applicable, so the interval set similarity is proposed to construct similarity

classes and the similar granulation structure [24].

Definition 2 ([24]). Let $A = [A_1, A_2]$ and $B = [B_1, B_2]$ be two interval sets. The possible degree of $A$ relative to $B$ is

$$PD_{(A-B)} = \frac{1}{2}\left(\frac{|A_1 \cap B_1|}{|A_1|} + \frac{|A_2 \cap B_2|}{|A_2|}\right), \tag{1}$$

where $|\ast|$ denotes the cardinality of set $\ast$. The similarity degree between two interval sets is

$$SD_{(AB)} = \frac{1}{2}\left[PD_{(A-B)} + PD_{(B-A)}\right], \tag{2}$$

where $PD_{(A-B)}$ and $PD_{(B-A)}$ are the possible degrees of $A$ relative to $B$ and $B$ relative to $A$, respectively. Specifically in ISDS, two samples $x, y \in U$ regarding attribute $c \in C$ concern two interval sets $x = [x^-, x^+]$, $y = [y^-, y^+]$, so their similarity degree is

$$SD_c(x, y) = \frac{1}{2}\left[PD_{(x-y)} + PD_{(y-x)}\right]. \tag{3}$$
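The arithmetic in Eqs. (1)-(3) can be checked on one pair of values. The Python sketch below is a hedged illustration: the function names are hypothetical, interval sets are represented as (lower, upper) pairs of Python sets, and the code assumes both boundary sets of the first argument are non-empty so that the ratios are defined. The inputs are the values of $x_1$ and $x_2$ under attribute $a_1$ in Table 2.

```python
from fractions import Fraction


def possible_degree(a, b):
    """PD_(A-B) from Eq. (1); a and b are (lower, upper) pairs of sets.

    Assumption: both boundary sets of `a` are non-empty.
    """
    (a1, a2), (b1, b2) = a, b
    return Fraction(1, 2) * (
        Fraction(len(a1 & b1), len(a1)) + Fraction(len(a2 & b2), len(a2))
    )


def similarity_degree(a, b):
    """SD_(AB) from Eq. (2): the mean of the two directed possible degrees."""
    return Fraction(1, 2) * (possible_degree(a, b) + possible_degree(b, a))


# Values of x1 and x2 under attribute a1 in Table 2.
x1 = ({0}, {0, 1, 2})
x2 = ({2}, {2, 3})

pd_12 = possible_degree(x1, x2)  # (0/1 + 1/3) / 2
pd_21 = possible_degree(x2, x1)  # (0/1 + 1/2) / 2
sd = similarity_degree(x1, x2)
print(pd_12, pd_21, sd)  # prints: 1/6 1/4 5/24
```

The two possible degrees differ because each is normalized by the cardinalities of its own first argument, while the similarity degree averages them and is therefore symmetric. Exact fractions are used here only to avoid rounding when a value is later compared with a threshold.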

Definition 3 (Similar granulation structure [24]). For $ISDS = (U, C \cup D, V, f)$ with a threshold $\delta \in [0, 1]$, the $\delta$-interval similarity relation about attribute $c \in C$ is

$$SR_c^{\delta} = \{(x, y) \in U \times U \mid SD_c(x, y) \ge \delta\}, \tag{4}$$

and the corresponding similarity class of object $x$ is $SC_c^{\delta}(x) = \{y \in U \mid (x, y) \in SR_c^{\delta}\}$. Regarding a non-empty attribute subset $B \subseteq C$, the $\delta$-interval similarity class of sample $x \in U$ is

$$SC_B^{\delta}(x) = \{y \in U \mid (x, y) \in SR_B^{\delta}\}, \tag{5}$$

where $SR_B^{\delta} = \{(x, y) \in U \times U \mid \bigwedge_{c \in B} SD_c(x, y) \ge \delta\}$ is the similarity relation. All the similarity classes form the similar granulation structure:

$$\overrightarrow{SC_B^{\delta}} = \left(SC_B^{\delta}(x_1), SC_B^{\delta}(x_2), \cdots, SC_B^{\delta}(x_n)\right). \tag{6}$$

Example 2. Considering the ISDS in Table 2, we consider four attribute subsets $B$: $\{a_1\}, \{a_1, a_2\}, \{a_1, a_3\}, \{a_1, a_2, a_3\}$. For $\delta = 0.5$, similarity classes can be obtained, and the relevant similar granulation structures become

$$\begin{aligned}
\overrightarrow{SC_{a_1}^{0.5}} = \overrightarrow{SC_{\{a_1, a_3\}}^{0.5}} &= (\{x_1\}, \{x_2, x_3, x_4, x_6\}, \{x_2, x_3, x_4, x_6\}, \{x_2, x_3, x_4, x_6\}, \{x_5, x_6\}, \{x_2, x_3, x_4, x_5, x_6\}), \\
\overrightarrow{SC_{\{a_1, a_2\}}^{0.5}} = \overrightarrow{SC_{\{a_1, a_2, a_3\}}^{0.5}} &= (\{x_1\}, \{x_2, x_3, x_6\}, \{x_2, x_3\}, \{x_4, x_6\}, \{x_5\}, \{x_2, x_4, x_6\}).
\end{aligned} \tag{7}$$
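The construction in Definitions 2 and 3 can be sketched in Python for the single attribute $a_1$ of Table 2 with $\delta = 0.5$. This is an illustrative sketch rather than the authors' implementation: all function and variable names are hypothetical, interval sets are stored as (lower, upper) pairs of sets copied from Table 2, and the conjunction over $c \in B$ is implemented as the minimum similarity degree over the chosen attributes.

```python
from collections import Counter
from fractions import Fraction


def possible_degree(a, b):
    """PD_(A-B) from Eq. (1); assumes both boundary sets of `a` are non-empty."""
    (a1, a2), (b1, b2) = a, b
    return Fraction(1, 2) * (
        Fraction(len(a1 & b1), len(a1)) + Fraction(len(a2 & b2), len(a2))
    )


def similarity_degree(a, b):
    """SD_(AB) from Eq. (2)."""
    return Fraction(1, 2) * (possible_degree(a, b) + possible_degree(b, a))


def similarity_classes(table, attrs, delta):
    """Similarity class of every sample, in table order (Eqs. (4)-(6))."""
    classes = []
    for x in table:
        cls = frozenset(
            y for y in table
            if min(similarity_degree(table[x][c], table[y][c]) for c in attrs) >= delta
        )
        classes.append(cls)
    return classes


# Column a1 of Table 2.
table = {
    "x1": {"a1": ({0}, {0, 1, 2})},
    "x2": {"a1": ({2}, {2, 3})},
    "x3": {"a1": ({2}, {2, 3})},
    "x4": {"a1": ({2}, {2, 3})},
    "x5": {"a1": ({3}, {1, 3})},
    "x6": {"a1": ({2}, {1, 3})},
}

structure = similarity_classes(table, ["a1"], Fraction(1, 2))
for name, cls in zip(table, structure):
    print(name, sorted(cls))

# Granules that occur more than once in the structure.
repeated = {g: n for g, n in Counter(structure).items() if n > 1}
```

For $B = \{a_1\}$ the computed classes agree with the first structure in Eq. (7). The granule $\{x_2, x_3, x_4, x_6\}$ occurs three times, and $x_6$ belongs to several different granules, which is the kind of repetition and overlap discussed in the Introduction.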

2.2. Basic three-view uncertainty measures with mixed conditional entropy

The conditional entropy and dependency degree serve as two fundamental uncertainty measures, and they represent the informational and algebraic perspectives, respectively. By reviewing and combining the two measures, we here propose a mixed conditional entropy, and this integrated measure mainly follows the algebra-informational perspective. Next, we discuss the three-view uncertainty measures, mainly based on the similar granulation structure on ISDSs. In what follows, $ISDS = (U, C \cup D, V, f)$ with $B \subseteq C$ and $\delta \in [0, 1]$ serves as the common context, and $D_j \in U/D$ represents a decision class in the decision classification $U/D$; moreover, the logarithmic function “log” takes base 2.
