
Multi-Model Routing in Information Retrieval: Research and Application of RouterRetriever, Based on Multiple Expert Embedding Models


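Summary: This paper introduces RouterRetriever, an information retrieval model that uses expert embedding models from multiple domains together with a routing mechanism to select the most suitable expert for each query. Compared with a conventional single general-purpose model or a multi-task trained model, RouterRetriever achieves better performance on a range of benchmarks, with a notable advantage on cross-domain datasets. The authors examine in detail how different expert combinations affect performance and find that adding an expert for a new domain improves system performance significantly, whereas adding more experts within the same domain yields limited gains. Experiments also demonstrate the importance and efficiency of parametric knowledge for extracting embeddings. Intended audience: researchers and developers working in information retrieval, natural language processing, and machine learning. Use cases and goals: (1) applications that require high-precision cross-domain information retrieval; (2) improving existing information retrieval systems, particularly where they perform poorly in specific domains; (3) helping researchers explore relationships between domains. Other notes: the paper also discusses the details of the routing mechanism and identifies further optimization of routing techniques for better computational efficiency as one direction for future work.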


ROUTERRETRIEVER: Exploring the Benefits of Routing over Multiple Expert Embedding Models

Hyunji Lee^{κ,*}, Luca Soldaini^{α}, Arman Cohan^{γ,α}, Minjoon Seo^{κ}, Kyle Lo^{α}

^{κ} KAIST AI, ^{α} Allen Institute for AI, ^{γ} Yale University

hyunji.amy.lee@kaist.ac.kr {lucas, kylel}@allenai.org

Abstract

Information retrieval methods often rely on a single embedding model trained on large, general-domain datasets like MSMARCO. While this approach can produce a retriever with reasonable overall performance, models trained on domain-specific data often yield better results within their respective domains. Although prior work in information retrieval has tackled this through multi-task training, the topic of combining multiple domain-specific expert retrievers remains unexplored, despite its popularity in language model generation. In this work, we introduce ROUTERRETRIEVER, a retrieval model that leverages multiple domain-specific experts along with a routing mechanism to select the most appropriate expert for each query. It is lightweight and allows easy addition or removal of experts without additional training. Evaluation on the BEIR benchmark demonstrates that ROUTERRETRIEVER outperforms both MSMARCO-trained (+2.1 absolute nDCG@10) and multi-task trained (+3.2) models. This is achieved by employing our routing mechanism, which surpasses other routing techniques (+1.8 on average) commonly used in language modeling. Furthermore, the benefit generalizes well to other datasets, even in the absence of a specific expert on the dataset. To our knowledge, ROUTERRETRIEVER is the first work to demonstrate the advantages of using multiple domain-specific expert embedding models with effective routing over a single, general-purpose embedding model in retrieval tasks.¹

Introduction

While a single embedding model trained on large-scale general-domain datasets like MSMARCO (Campos et al. 2016) often performs well, research shows that models trained on domain-specific datasets, even if smaller, can achieve superior results within those domains (Izacard et al. 2021; Bonifacio et al. 2022). Moreover, finetuning on MSMARCO after pretraining with contrastive learning can sometimes degrade performance on specific datasets (Wang et al. 2023; Lee et al. 2023). To improve embedding models for domain-specific datasets, previous studies have explored approaches such as data construction (Wang et al. 2021; Ma et al. 2020) and domain adaptation methods (Xin et al. 2021; Fang et al. 2024). However, less attention has been paid to leveraging multiple expert embedding models and routing among them to select the most suitable one during inference.

* Work performed during internship at AI2.

¹ Code in https://github.com/amy-hyunji/RouterRetriever

In this work, we introduce ROUTERRETRIEVER, a retrieval model that leverages multiple domain-specific experts with a routing mechanism to select the most suitable expert for each instance. For each domain, we train a gate (expert), and during inference, the model determines the most relevant expert by computing the average similarity between the query and a set of pilot embeddings representing each expert, selecting the expert with the highest similarity score. ROUTERRETRIEVER is lightweight, as it only requires training a parameter-efficient LoRA module (Hu et al. 2021) for each expert, resulting in a minimal increase in parameters. Additionally, ROUTERRETRIEVER offers significant flexibility: unlike a single model that requires retraining when domains are added or removed, ROUTERRETRIEVER simply adds or removes experts without the need for further training.

Evaluation on the BEIR benchmark (Thakur et al. 2021) with various combinations of experts highlights the benefits of having multiple expert embedding models with a routing mechanism compared to using a single embedding model. When keeping the total number of training datasets constant, ROUTERRETRIEVER consisting of only domain-specific experts, without an MSMARCO expert, outperforms both a model trained on the same datasets in a multi-task manner and a model trained with MSMARCO. Also, adding domain-specific experts tends to improve performance even when an expert trained on a large-scale general-domain dataset like MSMARCO is already present, suggesting that, despite the capabilities of a general-domain expert, domain-specific experts provide additional benefits, underscoring their importance. Moreover, ROUTERRETRIEVER consistently improves performance as new experts are added, whereas multi-task training tends to show performance degradation once a certain number of domains are included. This indicates the advantage of having separate experts for each domain and using a routing mechanism to select among them. Notably, the benefits of ROUTERRETRIEVER generalize not only to datasets that have corresponding experts but also to additional datasets without specific experts.

arXiv:2409.02685v1 [cs.IR] 4 Sep 2024

We further explore the factors behind these performance benefits. First, ROUTERRETRIEVER consistently shows improved performance with the addition of more experts (gates), suggesting that broader domain coverage by experts enhances retrieval accuracy. This trend holds even in an oracle setting, where the gate that maximizes performance is always selected. Notably, adding a new expert for a different domain yields greater performance gains than adding additional experts within the same domain. Second, we observe that parametric knowledge influences embedding extraction. This observation supports the idea that training with domain-specific knowledge improves the quality of embedding extraction for that domain. Last, the performance difference between an instance-level oracle (which routes each instance to its best expert) and a dataset-level oracle (which routes queries to the expert with the highest average performance for the dataset) suggests that queries may benefit from knowledge of other domains, supporting the effectiveness of our routing technique. Our results point to potential research opportunities in improving routing techniques among multiple expert retrievers, a direction that leads to the development of a retriever system that performs well across both general and domain-specific datasets.
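The two oracles compared above can be illustrated with a minimal sketch. The score matrix below is made-up illustrative data, not results from the paper, and the function names are hypothetical.

```python
import numpy as np

def instance_level_oracle(perf: np.ndarray) -> float:
    """Mean score when each query is routed to its own best expert.

    perf[i, j] is the score of expert j on query i (assumed layout).
    """
    return float(perf.max(axis=1).mean())

def dataset_level_oracle(perf: np.ndarray) -> float:
    """Mean score when every query goes to the single expert with the
    highest average score over the whole dataset."""
    return float(perf.mean(axis=0).max())

# Hypothetical per-query scores (rows: queries, columns: experts).
perf = np.array([
    [0.9, 0.2],
    [0.1, 0.8],
    [0.7, 0.6],
])

instance_score = instance_level_oracle(perf)
dataset_score = dataset_level_oracle(perf)
```

By construction the instance-level oracle can never score below the dataset-level one. A gap between the two means that some queries are best served by an expert other than the one that is best on average for their dataset.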

Related Works

Domain Specific Retriever. There exists substantial research on retrieval models that aim to improve performance on domain-specific tasks. One approach focuses on dataset augmentation. As domain-specific training datasets are often unavailable and can be costly to construct, researchers have developed methods that either train models in an unsupervised manner (Lee, Chang, and Toutanova 2019; Gao, Yao, and Chen 2021; Gao and Callan 2021) or fine-tune models on pseudo-queries generated for domain-specific datasets (Bonifacio et al. 2022; Ma et al. 2020; Wang et al. 2021). Another approach is developing domain-specific embeddings. A common approach is training in a multi-task manner over domain-specific datasets (Lin et al. 2023; Wang et al. 2021). Recent works have aimed to improve domain-specific retrievers by developing instruction-following retrieval models (Asai et al. 2022; Weller et al. 2024; Oh et al. 2024; Su et al. 2022; Wang et al. 2023), where the instruction carries such domain knowledge. Another example is Fang et al. (2024), which trains a soft token for domain-specific knowledge. While these methods also aim to extract good representative embeddings for the input text, they rely on a single embedding model and produce domain-specific embeddings by additionally including domain-specific knowledge (e.g., appended as instructions) in the input. ROUTERRETRIEVER differs from these prior methods by employing multiple embedding models: rather than being provided in the input, the domain knowledge is added to the model as parametric knowledge to produce domain-representative embeddings.

Routing Techniques Various works have focused on de-

veloping domain-specific experts and routing mechanisms

to improve general performance in generation tasks. One ap-

proach simultaneously trains experts (gates) and the rout-

ing mechanism (Sukhbaatar et al. 2024; Muqeeth et al.

2024). Another line of work includes post-hoc techniques

Query

Base

Encoder

Gate A

Expert

Encoder A

Pilot Embeddings for A

Gate B

Gate C

1

2

Final Query Embedding

Pilot Embedding Library

Expert

Encoder B

Pilot Embeddings for B

Expert

Encoder C

Pilot Embeddings for C

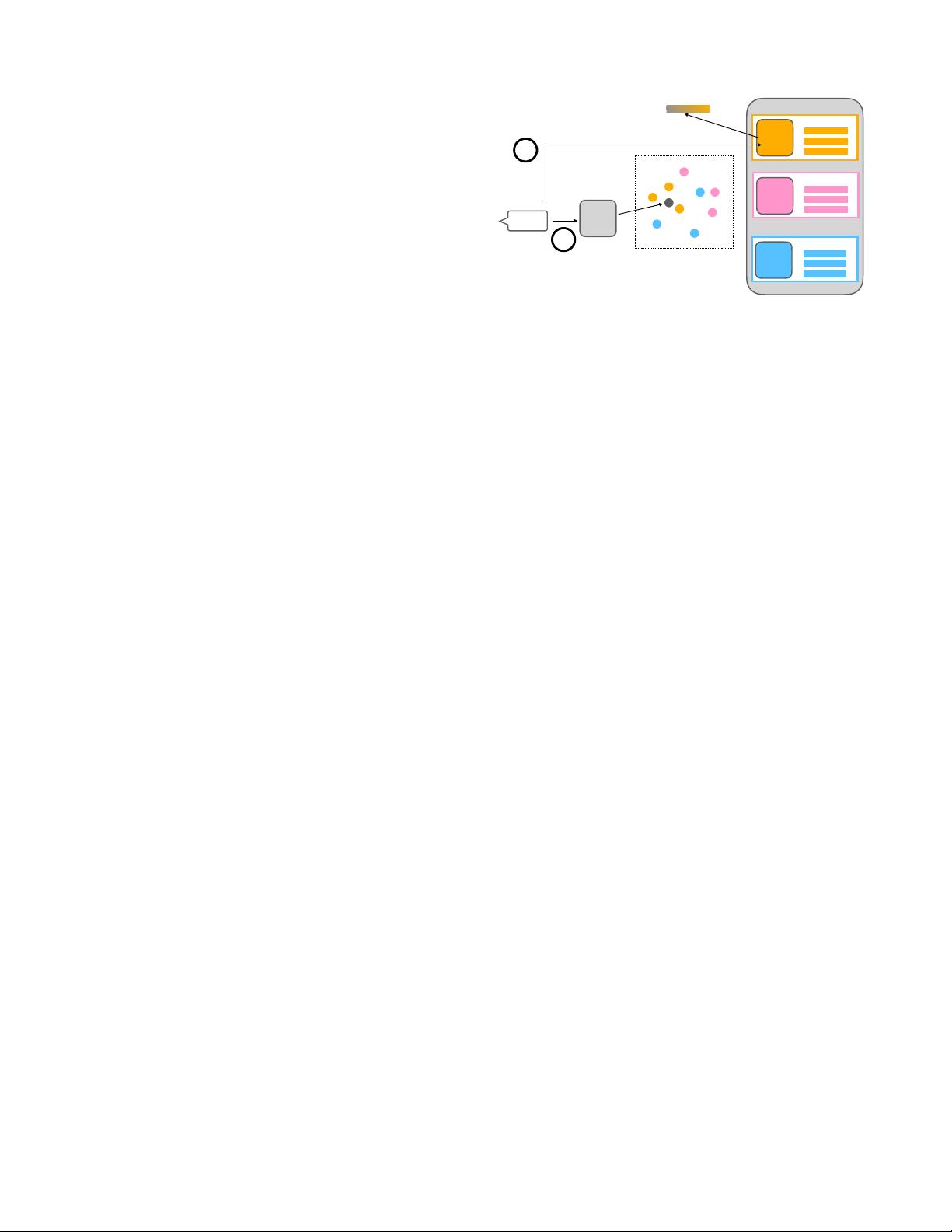

Figure 1: ROUTERRETRIEVER:

1

Given a query, we first

extract its embedding using a base encoder. We then cal-

culate an average similarity between the query embedding

(black dot) and the pilot embeddings for each gate (orange

dots for Gate A, red dots for Gate B, and blue dots for Gate

C). The gate with the highest average similarity (Gate A in

this case) is selected.

2

The final query embedding is then

produced by passing the query to Expert Encoder A, which

consists of the base encoder combined with Gate A, the se-

lected expert gate (LoRA).

that do not require additional training for routing. Some

approaches use the model itself as the knowledge source

by training it on domain-specific knowledge (Feng et al.

2023), incorporate domain-specific knowledge in the token

space (Belofsky 2023; Shen et al. 2024), or select the most

relevant source from a sampled training dataset of each do-

main (Ye et al. 2022; Jang et al. 2023). Routing techniques

have also been investigated for improving generation qual-

ity in retrieval-augmented generation tasks; Mallen et al.

(2022) explores routing to decide whether to utilize exter-

nal knowledge and Jeong et al. (2024) focuses on routing

to choose among different retrieval approaches. However,

there has been less emphasis on applying these techniques

to information retrieval tasks. In this work, we investigate

the benefits of leveraging multiple domain-specific experts

and routing mechanisms in information retrieval, contrasting

this approach with the traditional methods of using a sin-

gle embedding model trained on a general-domain dataset

or multi-task training across various domains. Additionally,

we find that simply adapting routing techniques from gener-

ation tasks to information retrieval does not yield high per-

formance, underscoring the importance of developing rout-

ing techniques tailored specifically for information retrieval.

Router Retriever

In this section, we introduce ROUTERRETRIEVER, a retrieval model composed of a base retrieval model and multiple domain-specific experts (gates). As shown in Figure 1, for a given input query, (1) the most appropriate expert (gate) is selected using a routing mechanism. Then, (2) the query embedding is generated by passing the query through the selected gate alongside the base encoder.

Algorithm 1: Constructing Pilot Embedding Library

Require: Domain-specific training datasets $D_1, \dots, D_T$, gates $G = \{g_1, \dots, g_T\}$

1: Initialize empty set $P = \{\}$ for the pilot embedding library
2: for each dataset $D_i$ in $\{D_1, \dots, D_T\}$ do
3:     Initialize an empty list $L_i \leftarrow [\,]$
4:     for each instance $x_j$ in $D_i$ do
5:         $g_{\max}(x_j) \leftarrow \arg\max_{g_l \in G} \mathrm{Perf.}(g_l, x_j)$ // Find the gate with maximum performance $g_{\max}$ for instance $x_j$
6:         Add pair $(x_j, g_{\max})$ to $L_i$
7:     end for
8:     for each gate $g_m$ in $G$ do
9:         $\mathrm{Group}_m \leftarrow \{x_j \mid g_{\max} = g_m \text{ for } (x_j, g_{\max}) \text{ in } L_i\}$ // Group all instances $x_j$ for which $g_m$ is the maximum performing gate
10:        if $\mathrm{Group}_m$ is not empty then
11:            $E \leftarrow \mathrm{BaseEncoder}(\mathrm{Group}_m)$ // Extract embeddings using the base encoder
12:            $c_m \leftarrow \text{k-means}(E, k = 1)$ // Compute the centroid embedding by clustering with cluster size 1, which is the pilot embedding
13:            if $g_m$ exists in $P$ then
14:                Append $c_m$ to the list associated with $g_m$ in $P$ ($P[g_m]$)
15:            else
16:                Add a new entry $\{g_m : [c_m]\}$ to $P$
17:            end if
18:        end if
19:    end for
20: end for
21: Output: Pilot embeddings library $P$

Offline, we train the experts (gates) with domain-specific training datasets and construct a pilot embedding library. This library contains pairs of pilot embeddings for each domain along with the corresponding expert trained on that domain. Note that this process is performed only once. During inference (online), when given an input query, a routing mechanism determines the appropriate expert. We calculate the similarity score between the input query embedding and the pilot embeddings in the pilot embedding library, and then choose the expert with the highest average similarity score.
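The online routing step described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' implementation: the text here does not name the similarity function, so the dot product is an assumption, and `route`, the library layout, and the toy vectors are all hypothetical.

```python
import numpy as np

def route(query_emb: np.ndarray, pilot_library: dict) -> str:
    """Return the gate whose pilot embeddings are, on average, most
    similar to the query embedding from the base encoder.

    pilot_library maps a gate name to an array of shape (n_pilots, dim).
    Similarity is a dot product here (an assumption for this sketch).
    """
    best_gate, best_score = None, -np.inf
    for gate, pilots in pilot_library.items():
        score = float(np.mean(pilots @ query_emb))  # average similarity
        if score > best_score:
            best_gate, best_score = gate, score
    return best_gate

# Toy 2-D pilot embeddings (hypothetical values).
pilot_library = {
    "A": np.array([[1.0, 0.0], [0.8, 0.2]]),
    "B": np.array([[0.0, 1.0]]),
}
query_emb = np.array([0.9, 0.1])
selected = route(query_emb, pilot_library)
```

Because routing only reads the library, adding or removing an expert amounts to adding or deleting a dictionary entry. No routing parameters need to be retrained.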

We use Contriever (Izacard et al. 2021) as the base encoder and train a parameter-efficient LoRA module (Hu et al. 2021) for each domain as that domain's gate, which keeps the model lightweight. For example, in the case of Figure 1, ROUTERRETRIEVER includes a base encoder with three gates (experts): Gate A, Gate B, and Gate C, and Expert Encoder A is composed of the base encoder with Gate A (a LoRA trained on a dataset from domain A) added. This approach allows for the flexible addition or removal of domain-specific gates, enabling various gate combinations without requiring further training for the routing mechanism.
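To illustrate why LoRA gates keep the model lightweight, here is a simplified sketch of a single linear layer with a shared frozen weight and swappable low-rank adapters. It uses the standard LoRA update $W + \frac{\alpha}{r} BA$. The dimensions, the random "trained" factors, and the names `lora_forward` and `gates` are assumptions for illustration; a real encoder such as Contriever would apply adapters inside its transformer layers.

```python
import numpy as np

def lora_forward(x, W_base, gate=None, alpha=8.0):
    """Linear layer with an optional LoRA gate.

    gate is a pair (A, B) with A of shape (r, d_in) and B of shape
    (d_out, r); the effective weight is W_base + (alpha / r) * B @ A.
    """
    out = x @ W_base.T
    if gate is not None:
        A, B = gate
        r = A.shape[0]
        out = out + (alpha / r) * (x @ A.T) @ B.T
    return out

rng = np.random.default_rng(0)
d, r = 64, 4
W_base = rng.normal(size=(d, d))  # frozen base weight shared by all experts

# Random factors stand in for trained adapters (hypothetical values).
gates = {
    name: (rng.normal(size=(r, d)), rng.normal(size=(d, r)))
    for name in ("A", "B", "C")
}

x = rng.normal(size=(1, d))
base_out = lora_forward(x, W_base)                   # base encoder only
expert_a_out = lora_forward(x, W_base, gates["A"])   # base encoder + Gate A

del gates["C"]  # removing an expert leaves W_base and other gates untouched
gate_params = sum(m.size for m in gates["A"])        # 2 * d * r parameters
```

In this toy setting each gate adds 512 parameters against 4,096 in the base weight, and the ratio shrinks further as the hidden dimension grows relative to the rank.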

Experts (Gates). For each domain $D_i$, where $i = 1, \dots, T$ and $T$ is the total number of domains, we train a separate expert (gate) $g_i$ using the corresponding domain dataset. After the training step, we have a total of $T$ different gates, $G = \{g_1, g_2, \dots, g_T\}$, with each gate $g_i$ specialized for a specific domain.

Pilot Embedding Library. Given a domain-specific training dataset $D_i = \{x_1, \dots, x_k\}$, where $x_j$ is an instance in $D_i$, we perform inference using all gates $G$ to identify which gate provides the most suitable representative embedding for each instance (lines 4-7 in Alg. 1). For each instance $x_j$, we select $g_{\max}$, the gate that demonstrates the highest performance, defined as $g_{\max}(x_j) = \arg\max_{g_l \in G} \mathrm{Performance}(g_l, x_j)$. This process produces pairs $(x_j, g_{\max})$ for all instances in the dataset $D_i$.

Next, we group these pairs by $g_{\max}$, constructing $T$ groups, one for each domain. Then, for each group, we perform k-means clustering with a single cluster ($k = 1$) to get the pilot embedding (lines 8-19 in Alg. 1). Specifically, from the constructed pairs $(x_j, g_{\max})$ we collect those that share the same $g_{\max}$ into $\mathrm{Group}_m$, the list of instances $x_j$ whose maximum-performing gate is $g_m$ ($m = 1, \cdots, T$). If $\mathrm{Group}_m$ is not empty, we first extract embeddings for all instances in the group with the base encoder (BaseEncoder in Alg. 1). We then apply k-means clustering to these embeddings with a cluster size of one. The centroid of this cluster, $c_m$, is taken as the pilot embedding for the domain. This results in one pilot embedding per group, yielding a maximum of $T$ pilot embeddings for the training dataset $D_i$. Each of these embeddings is associated with a different gate, representing the most suitable one for that domain. Note that when $\mathrm{Group}_m$ is empty, we do not extract a pilot embedding for it, so the number of pilot embeddings for the training dataset can be less than $T$.
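The construction above, looped over every training dataset as in Algorithm 1, can be sketched as follows. This is a hedged illustration rather than the released code: `perf_fn` and `base_encode` are hypothetical stand-ins for the per-instance performance measure and the base encoder, and the toy data is made up. Since k-means with a single cluster converges to the mean of the points, the centroid is computed directly as a mean.

```python
import numpy as np

def build_pilot_library(datasets, gates, perf_fn, base_encode):
    """Build {gate: [pilot embeddings]} following Algorithm 1.

    datasets: list of lists of instances
    perf_fn(gate, x) -> float     (hypothetical performance measure)
    base_encode(list) -> ndarray  (hypothetical base encoder, shape (n, dim))
    """
    library = {}
    for dataset in datasets:                                   # line 2
        # Lines 4-7: best-performing gate for each instance.
        best = [max(gates, key=lambda g: perf_fn(g, x)) for x in dataset]
        for g in gates:                                        # line 8
            group = [x for x, b in zip(dataset, best) if b == g]  # line 9
            if not group:                                      # line 10
                continue
            emb = base_encode(group)                           # line 11
            centroid = emb.mean(axis=0)                        # line 12 (k = 1)
            library.setdefault(g, []).append(centroid)         # lines 13-17
    return library

# Toy setup: instances are 2-D points that double as their own embeddings.
datasets = [
    [(1.0, 0.0), (0.8, 0.2), (0.1, 0.9)],
    [(0.0, 1.0), (0.2, 0.8)],
]
gates = ["A", "B"]
perf_fn = lambda g, x: x[0] if g == "A" else x[1]
base_encode = lambda xs: np.array(xs)

library = build_pilot_library(datasets, gates, perf_fn, base_encode)
```

In this toy run the second dataset contributes no pilot embedding for gate A because its group is empty, which is the case where a gate ends up with fewer than $T$ pilot embeddings.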

By repeating this process across all domain-specific training datasets $D_1, \dots, D_T$, we obtain up to $T$ pilot embeddings for each gate, at most one from each domain-specific training dataset (repeating lines 3-19 in Alg. 1 for all training datasets $D_1 \cdots D_T$). Consequently, the pilot embeddings contain …
