GPipe: An Effective Solution for Large-Scale Model-Parallel Training


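This document introduces GPipe, a library that uses micro-batch pipeline parallelism to scale large neural networks efficiently. GPipe supports decomposing any deep neural network into a sequence of layers and executing them on different accelerators. It introduces a novel pipeline parallelism algorithm with batch splitting and applies gradient updates synchronously across devices, which keeps hardware utilization high while preserving training stability. Experiments on image classification and multilingual neural machine translation demonstrate GPipe's performance and flexibility. For researchers and engineering practitioners alike, it improves the efficiency of training deep models, especially very large ones.

Intended audience: researchers focused on neural networks, and application teams that need large-scale models.

Use cases and goals: building larger and more complex machine learning models that exceed the memory limit of a single accelerator, in particular for practitioners who want to scale training across GPU clusters or other accelerators. The goal is to maximize neural network capacity under limited hardware.

Additional notes: compared with some existing approaches such as SPMD and other pipeline methods, GPipe offers broader task applicability and lower communication overhead. Note, however, that the current version assumes each individual layer still fits within the memory of a single accelerator.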

GPipe: Easy Scaling with Micro-Batch Pipeline Parallelism

Yanping Huang

huangyp@google.com

Youlong Cheng

ylc@google.com

Ankur Bapna

ankurbpn@google.com

Orhan Firat

orhanf@google.com

Mia Xu Chen

miachen@google.com

Dehao Chen

dehao@google.com

HyoukJoong Lee

hyouklee@google.com

Jiquan Ngiam

jngiam@google.com

Quoc V. Le

qvl@google.com

Yonghui Wu

yonghui@google.com

Zhifeng Chen

zhifengc@google.com

Abstract

Scaling up deep neural network capacity has been known as an effective approach to improving model quality for several different machine learning tasks. In many cases, increasing model capacity beyond the memory limit of a single accelerator has required developing special algorithms or infrastructure. These solutions are often architecture-specific and do not transfer to other tasks. To address the need for efficient and task-independent model parallelism, we introduce GPipe, a pipeline parallelism library that allows scaling any network that can be expressed as a sequence of layers. By pipelining different sub-sequences of layers on separate accelerators, GPipe provides the flexibility of scaling a variety of different networks to gigantic sizes efficiently. Moreover, GPipe utilizes a novel batch-splitting pipelining algorithm, resulting in almost linear speedup when a model is partitioned across multiple accelerators. We demonstrate the advantages of GPipe by training large-scale neural networks on two different tasks with distinct network architectures: (i) Image Classification: We train a 557-million-parameter AmoebaNet model and attain a top-1 accuracy of 84.4% on ImageNet-2012, (ii) Multilingual Neural Machine Translation: We train a single 6-billion-parameter, 128-layer Transformer model on a corpus spanning over 100 languages and achieve better quality than all bilingual models.

1 Introduction

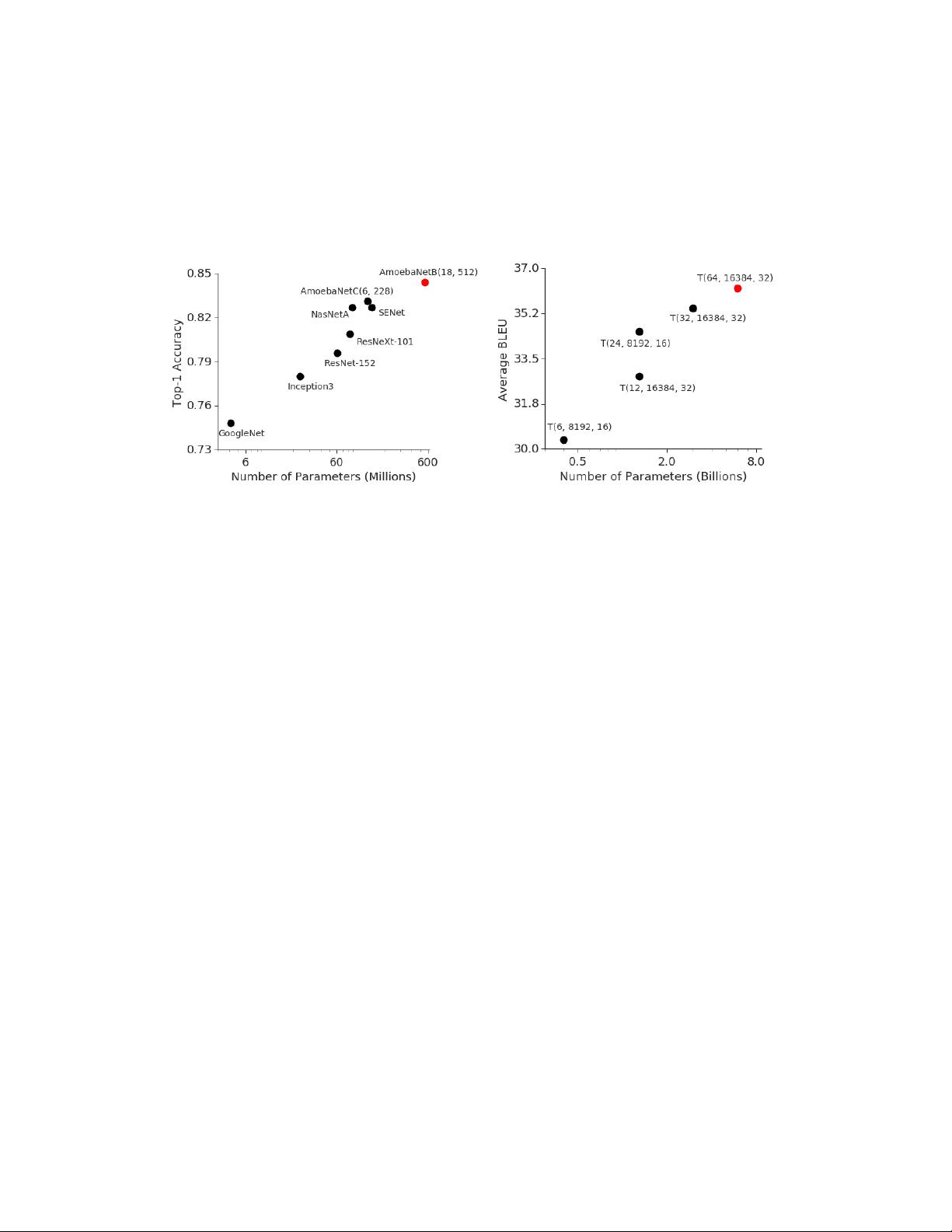

Deep learning has seen great progress over the last decade, partially thanks to the development of

methods that have facilitated scaling the effective capacity of neural networks. This trend has been

most visible for image classification, as demonstrated by the accuracy improvements on ImageNet

with the increase in model capacity (Figure 1a). A similar phenomenon can also be observed in

the context of natural language processing (Figure 1b) where simple shallow models of sentence

representations [1, 2] are outperformed by their deeper and larger counterparts [3, 4].

While larger models have brought remarkable quality improvements to several fields, scaling neural

networks introduces significant practical challenges. Hardware constraints, including memory

limitations and communication bandwidths on accelerators (GPU or TPU), force users to divide larger

Preprint. Under review.

arXiv:1811.06965v5 [cs.CV] 25 Jul 2019

Figure 1: (a) Strong correlation between top-1 accuracy on ImageNet 2012 validation dataset [

5

]

and model size for representative state-of-the-art image classification models in recent years [

6

,

7

,

8

,

9

,

10

,

11

,

12

]. There has been a

36×

increase in the model capacity. Red dot depicts

84.4%

top-1

accuracy for the 550M parameter AmoebaNet model. (b) Average improvement in translation quality

(BLEU) compared against bilingual baselines on our massively multilingual in-house corpus, with

increasing model size. Each point,

T (L, H, A)

, depicts the performance of a Transformer with

L

encoder and

L

decoder layers, a feed-forward hidden dimension of

H

and

A

attention heads. Red dot

depicts the performance of a 128-layer 6B parameter Transformer.

models into partitions and to assign different partitions to different accelerators. However, efficient

model parallelism algorithms are extremely hard to design and implement, which often requires the

practitioner to make difficult choices among scaling capacity, flexibility (or specificity to particular

tasks and architectures) and training efficiency. As a result, most efficient model-parallel algorithms

are architecture and task-specific. With the growing number of applications of deep learning, there is

an ever-increasing demand for reliable and flexible infrastructure that allows researchers to easily

scale neural networks for a large variety of machine learning tasks.

To address these challenges, we introduce GPipe, a flexible library that enables efficient training of large neural networks. GPipe allows scaling arbitrary deep neural network architectures beyond the memory limitations of a single accelerator by partitioning the model across different accelerators and supporting re-materialization on every accelerator [13, 14]. With GPipe, each model can be specified as a sequence of layers, and consecutive groups of layers can be partitioned into cells. Each cell is then placed on a separate accelerator. Based on this partitioned setup, we propose a novel pipeline parallelism algorithm with batch splitting. We first split a mini-batch of training examples into smaller micro-batches, then pipeline the execution of each set of micro-batches over cells. We apply synchronous mini-batch gradient descent for training, where gradients are accumulated across all micro-batches in a mini-batch and applied at the end of a mini-batch. Consequently, gradient updates using GPipe are consistent regardless of the number of partitions, allowing researchers to easily train increasingly large models by deploying more accelerators. GPipe can also be complemented with data parallelism to further scale training.
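The claim that accumulated micro-batch gradients give the same update as the full mini-batch can be checked on a toy problem. The sketch below is illustrative only and is not GPipe code: it assumes a scalar linear model with squared-error loss, and the names `grad_sum`, `full_batch_grad`, and `accumulated_grad` are hypothetical.

```python
from typing import Sequence, Tuple

Example = Tuple[float, float]  # (input x, target y)


def grad_sum(w: float, batch: Sequence[Example]) -> float:
    """Sum over the batch of d/dw (w*x - y)^2."""
    return sum(2.0 * (w * x - y) * x for x, y in batch)


def full_batch_grad(w: float, batch: Sequence[Example]) -> float:
    """Gradient of the mean squared error over the whole mini-batch."""
    return grad_sum(w, batch) / len(batch)


def accumulated_grad(w: float, batch: Sequence[Example], num_micro: int) -> float:
    """Same gradient, accumulated micro-batch by micro-batch."""
    size = len(batch) // num_micro  # assumes N is divisible by M
    total = 0.0
    for m in range(num_micro):
        micro = batch[m * size:(m + 1) * size]
        total += grad_sum(w, micro)  # every micro-batch sees the same w
    return total / len(batch)  # normalize once, at the end of the mini-batch


batch = [(1.0, 2.0), (2.0, 3.0), (3.0, 5.0), (4.0, 4.0)]
w = 0.5
print(full_batch_grad(w, batch), accumulated_grad(w, batch, num_micro=2))
```

Because the parameters are held fixed until all micro-batches have contributed, the two results agree up to floating-point rounding, whatever the number of micro-batches.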

We demonstrate the flexibility and efficiency of GPipe on image classification and machine translation. For image classification, we train the AmoebaNet model on 480 × 480 input from the ImageNet 2012 dataset. By increasing the model width, we scale up the number of parameters to 557 million and achieve a top-1 validation accuracy of 84.4%. On machine translation, we train a single 128-layer 6-billion-parameter multilingual Transformer model on 103 languages (102 languages to English). We show that this model is capable of outperforming the individually trained 350-million-parameter bilingual Transformer Big [15] models on 100 language pairs.

2 The GPipe Library

We now describe the interface and the main design features of GPipe. This open-source library is implemented under the Lingvo [16] framework. The core design features of GPipe are generally applicable and can be implemented for other frameworks [17, 18, 19].

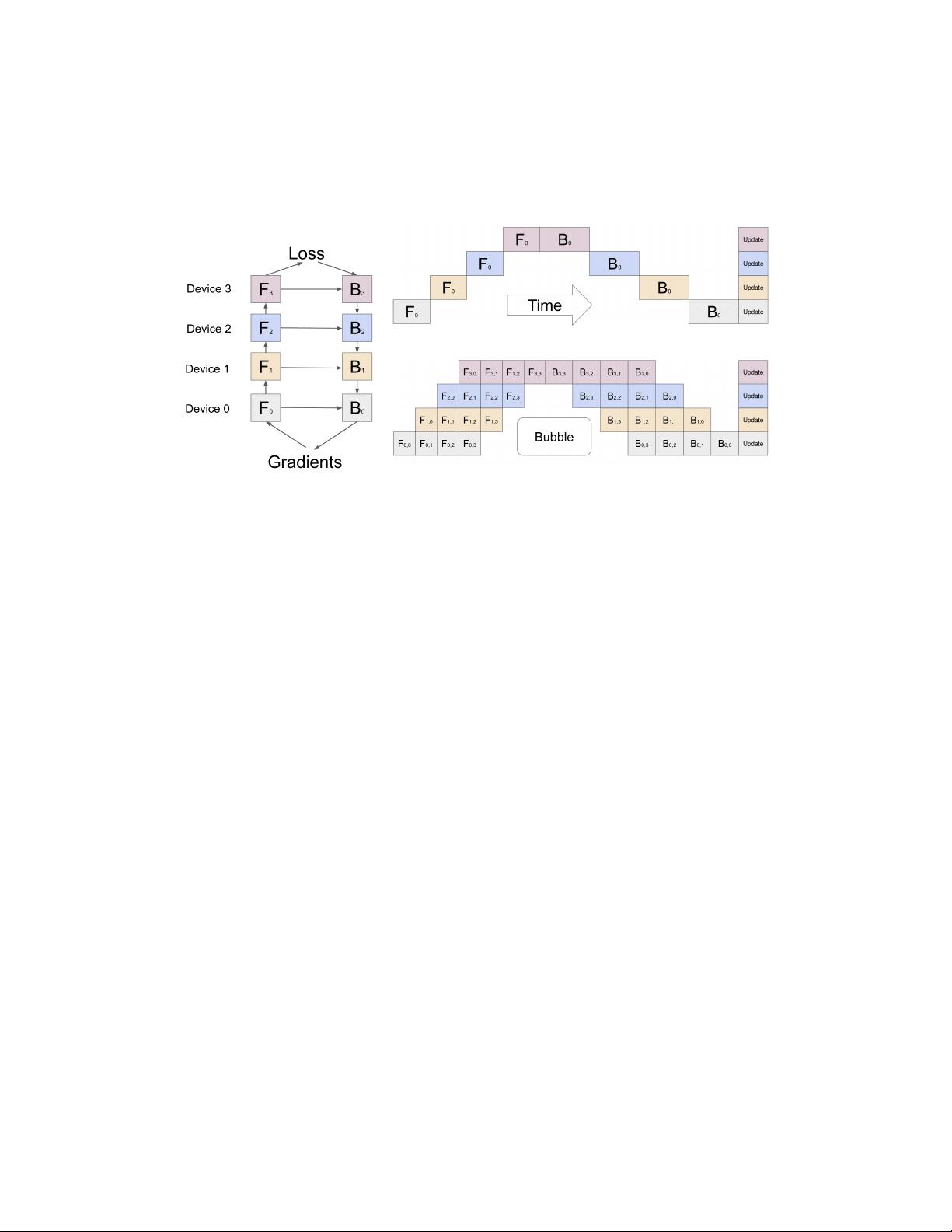

Figure 2: (a) An example neural network with sequential layers is partitioned across four accelerators. $F_k$ is the composite forward computation function of the $k$-th cell. $B_k$ is the back-propagation function, which depends on both $B_{k+1}$ from the upper layer and $F_k$. (b) The naive model parallelism strategy leads to severe under-utilization due to the sequential dependency of the network. (c) Pipeline parallelism divides the input mini-batch into smaller micro-batches, enabling different accelerators to work on different micro-batches simultaneously. Gradients are applied synchronously at the end.

2.1 Interface

Any deep neural network can be defined as a sequence of $L$ layers. Each layer $L_i$ is composed of a forward computation function $f_i$, and a corresponding set of parameters $w_i$. GPipe additionally allows the user to specify an optional computation cost estimation function, $c_i$. With a given number of partitions $K$, the sequence of $L$ layers can be partitioned into $K$ composite layers, or cells. Let $p_k$ consist of consecutive layers between layers $i$ and $j$. The set of parameters corresponding to $p_k$ is equivalent to the union of $w_i, w_{i+1}, \ldots, w_j$, and its forward function would be $F_k = f_j \circ \cdots \circ f_{i+1} \circ f_i$. The corresponding back-propagation function $B_k$ can be computed from $F_k$ using automatic symbolic differentiation. The cost estimator, $C_k$, is set to $\sum_{l=i}^{j} c_l$.
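The composition of layers into cells can be illustrated with a minimal sketch. It is not the Lingvo interface: the `Layer` dataclass, `make_cell`, and `run` are hypothetical names, layers act on plain floats, and the parameters $w_i$ are folded into the closures rather than tracked separately.

```python
from dataclasses import dataclass
from functools import reduce
from typing import Callable, Sequence


@dataclass
class Layer:
    """One layer L_i: a forward function f_i and an optional cost estimate c_i."""
    forward: Callable[[float], float]
    cost: float = 1.0


def make_cell(layers: Sequence[Layer]) -> Layer:
    """Fuse consecutive layers i..j into one cell with forward F_k and cost C_k."""
    def cell_forward(x: float) -> float:
        # F_k = f_j ∘ ... ∘ f_{i+1} ∘ f_i: apply f_i first and f_j last.
        return reduce(lambda acc, layer: layer.forward(acc), layers, x)

    # C_k is the sum of c_l for l = i..j.
    return Layer(forward=cell_forward, cost=sum(layer.cost for layer in layers))


def run(stages: Sequence[Layer], x: float) -> float:
    """Apply a sequence of layers or cells in order."""
    for stage in stages:
        x = stage.forward(x)
    return x


layers = [
    Layer(lambda x: x + 1.0, cost=1.0),
    Layer(lambda x: x * 2.0, cost=3.0),
    Layer(lambda x: x - 3.0, cost=2.0),
    Layer(lambda x: x * x, cost=4.0),
]
cells = [make_cell(layers[0:2]), make_cell(layers[2:4])]  # K = 2 cells

print(run(layers, 2.0), run(cells, 2.0))  # both paths compute the same function
print([cell.cost for cell in cells])
```

Running the partitioned cells gives the same output as running the original layers one by one, which is the property that lets the partition count change without changing the model.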

The GPipe interface is extremely simple and intuitive, requiring the user to specify: (i) the number of model partitions $K$, (ii) the number of micro-batches $M$, and (iii) the sequence and definitions of $L$ layers that define the model. Please refer to supplementary material for examples.

2.2 Algorithm

Once the user defines the sequence of layers in their network in terms of model parameters $w_i$, forward computation function $f_i$, and the cost estimation function $c_i$, GPipe partitions the network into $K$ cells and places the $k$-th cell on the $k$-th accelerator. Communication primitives are automatically inserted at partition boundaries to allow data transfer between neighboring partitions. The partitioning algorithm minimizes the variance in the estimated costs of all cells in order to maximize the efficiency of the pipeline by syncing the computation time across all partitions.
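The text does not spell out how the variance is minimized, so the following is only one possible approach, not GPipe's actual partitioner. Since the mean cell cost is fixed at (total cost)/$K$, minimizing the variance is the same as minimizing the sum of squared cell costs, which a small dynamic program over contiguous splits can do exactly. The function name `partition_layers` is hypothetical.

```python
from typing import List, Sequence


def partition_layers(costs: Sequence[float], num_cells: int) -> List[int]:
    """Return the cell sizes of the contiguous split with minimal cost variance."""
    n = len(costs)
    if not 1 <= num_cells <= n:
        raise ValueError("need 1 <= num_cells <= number of layers")

    # prefix[j] is the total cost of the first j layers.
    prefix = [0.0]
    for c in costs:
        prefix.append(prefix[-1] + c)

    inf = float("inf")
    # best[k][j]: minimal sum of squared cell costs when the first j layers form k cells.
    best = [[inf] * (n + 1) for _ in range(num_cells + 1)]
    cut = [[0] * (n + 1) for _ in range(num_cells + 1)]
    best[0][0] = 0.0
    for k in range(1, num_cells + 1):
        for j in range(k, n + 1):
            for i in range(k - 1, j):  # the last cell holds layers i..j-1
                cand = best[k - 1][i] + (prefix[j] - prefix[i]) ** 2
                if cand < best[k][j]:
                    best[k][j] = cand
                    cut[k][j] = i

    # Walk the recorded cut points backwards to recover the cell sizes.
    sizes, j = [], n
    for k in range(num_cells, 0, -1):
        i = cut[k][j]
        sizes.append(j - i)
        j = i
    return sizes[::-1]


print(partition_layers([1, 2, 3, 4, 5, 6], num_cells=3))
```

For per-layer costs 1 through 6 and three cells, the split puts layers of cost 1, 2, 3 in the first cell, 4 and 5 in the second, and 6 in the third, giving cell costs of 6, 9, and 6.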

During the forward pass, GPipe first divides every mini-batch of size $N$ into $M$ equal micro-batches, which are pipelined through the $K$ accelerators. During the backward pass, gradients for each micro-batch are computed based on the same model parameters used for the forward pass. At the end of each mini-batch, gradients from all $M$ micro-batches are accumulated and applied to update the model parameters across all accelerators. This sequence of operations is illustrated in Figure 2c.
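The forward half of this schedule can be simulated in a few lines. The sketch below is an idealization, not GPipe's scheduler: it assumes every cell takes exactly one clock tick per micro-batch and ignores communication and the backward pass. The names `forward_schedule` and `idle_fraction` are hypothetical.

```python
from typing import List, Optional


def forward_schedule(num_cells: int, num_micro: int) -> List[List[Optional[int]]]:
    """grid[t][k] is the micro-batch that cell k processes at tick t, or None if idle."""
    ticks = num_micro + num_cells - 1
    grid = []
    for t in range(ticks):
        row = []
        for k in range(num_cells):
            m = t - k  # micro-batch m reaches cell k after k ticks
            row.append(m if 0 <= m < num_micro else None)
        grid.append(row)
    return grid


def idle_fraction(grid: List[List[Optional[int]]]) -> float:
    """Share of (tick, cell) slots in which a cell has nothing to do."""
    slots = sum(len(row) for row in grid)
    idle = sum(cell is None for row in grid for cell in row)
    return idle / slots


grid = forward_schedule(num_cells=4, num_micro=8)
for t, row in enumerate(grid):
    print(t, " ".join("." if m is None else str(m) for m in row))
print(idle_fraction(grid))
print(idle_fraction(forward_schedule(num_cells=4, num_micro=1)))  # no batch splitting
```

Under these assumptions the idle share works out to $(K-1)/(M+K-1)$: with $K = 4$ and $M = 8$ it is $3/11$, while without batch splitting ($M = 1$) three of the four accelerators are idle at any time.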

If batch normalization [20] is used in the network, the sufficient statistics of inputs during training are computed over each micro-batch and over replicas if necessary [21]. We also track the moving average of the sufficient statistics over the entire mini-batch to be used during evaluation.
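One way to read this is sketched below for a single scalar feature. It is an interpretation rather than GPipe's implementation: training-time normalization uses the statistics of the current micro-batch only, while a separate moving average is updated once per mini-batch from statistics pooled over all of its micro-batches. The class name `MovingStats`, the momentum of 0.9, and the epsilon value are assumptions.

```python
from typing import List, Sequence, Tuple


def mean_var(xs: Sequence[float]) -> Tuple[float, float]:
    """Mean and (biased) variance of one micro-batch."""
    n = len(xs)
    mean = sum(xs) / n
    var = sum((x - mean) ** 2 for x in xs) / n
    return mean, var


def normalize(xs: Sequence[float], eps: float = 1e-5) -> List[float]:
    """Training-time normalization using this micro-batch's statistics only."""
    mean, var = mean_var(xs)
    return [(x - mean) / (var + eps) ** 0.5 for x in xs]


class MovingStats:
    """Exponential moving average of mini-batch statistics, for evaluation."""

    def __init__(self, momentum: float = 0.9) -> None:  # momentum is an assumed value
        self.momentum = momentum
        self.mean = 0.0
        self.var = 1.0

    def update(self, micro_batches: Sequence[Sequence[float]]) -> None:
        # Pool the sufficient statistics (count, sum, sum of squares)
        # over every micro-batch of the mini-batch.
        n = sum(len(mb) for mb in micro_batches)
        s1 = sum(x for mb in micro_batches for x in mb)
        s2 = sum(x * x for mb in micro_batches for x in mb)
        mean = s1 / n
        var = s2 / n - mean * mean
        self.mean = self.momentum * self.mean + (1 - self.momentum) * mean
        self.var = self.momentum * self.var + (1 - self.momentum) * var


micro_batches = [[1.0, 3.0], [5.0, 7.0]]
normalized = [normalize(mb) for mb in micro_batches]  # per-micro-batch statistics
stats = MovingStats()
stats.update(micro_batches)  # mini-batch-wide statistics for evaluation
print(normalized, stats.mean, stats.var)
```

The two micro-batches normalize to the same values even though their raw means differ, which shows why the evaluation statistics are tracked separately over the whole mini-batch.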
