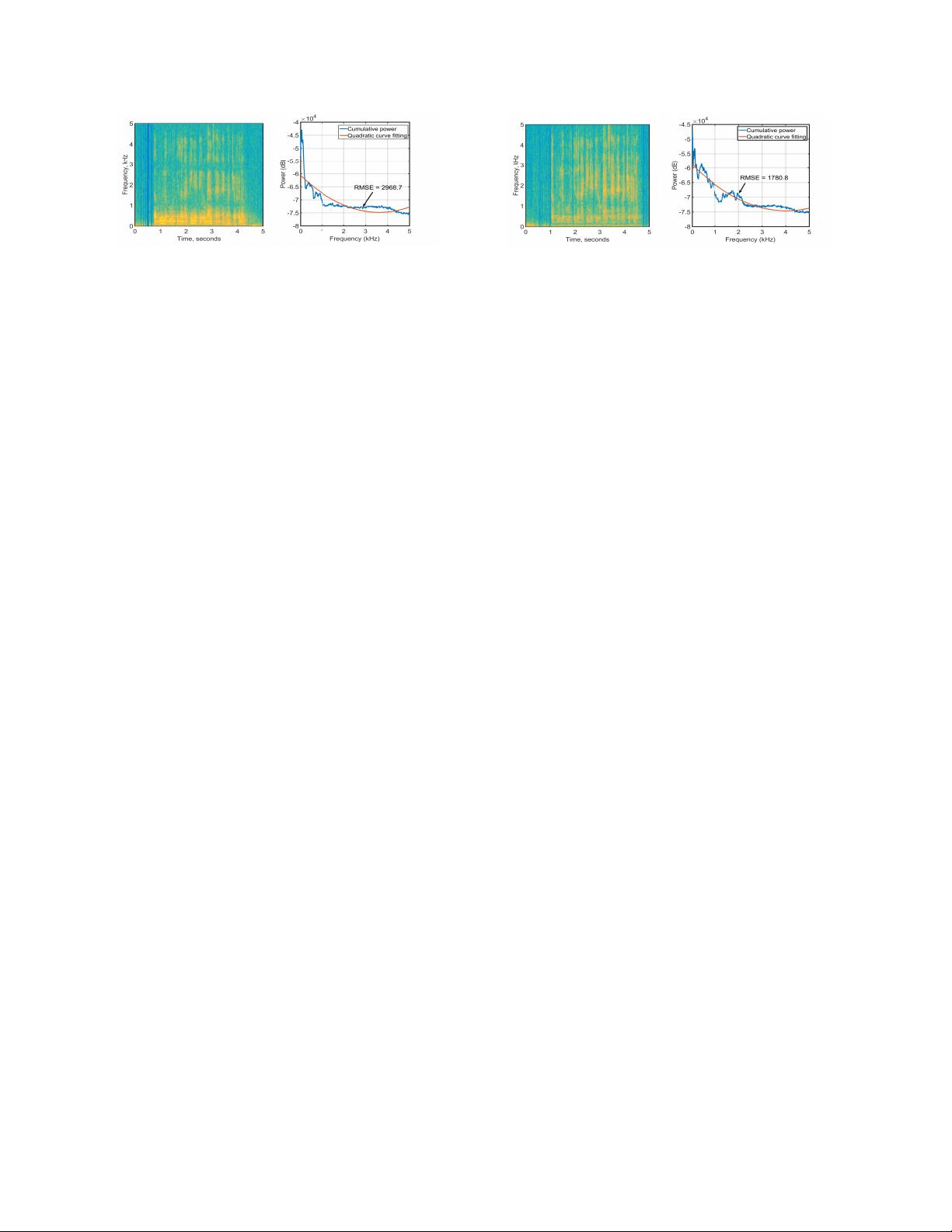

consequence, the overall power distribution over the audible

frequency range often shows some uniformity and linearity. (2)

With human voices, the sum of power observed across lower

frequencies is relatively higher than the sum observed across

higher frequencies [15, 29]. As a result, there are significant

differences in the cumulative power distributions between

live-human voices and those replayed through loudspeakers.

Void extracts those differences as classification features to

accurately detect replay attacks.
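To make the idea of a cumulative power distribution concrete, the following Python sketch computes the normalized running sum of spectral power from the lowest frequency bin upward, and reports how much of the total power lies below a cutoff. It is only an illustration under stated assumptions, not Void's feature extraction: the naive DFT, the helper names (`power_spectrum`, `cumulative_power_distribution`, `low_band_share`), the toy sine-wave signals, and the 1 kHz cutoff are all hypothetical choices made for this example.

```python
import cmath
import math


def power_spectrum(samples):
    """Return the power |X[k]|^2 for bins k = 0 .. N//2 using a naive DFT."""
    n = len(samples)
    powers = []
    for k in range(n // 2 + 1):
        acc = 0j
        for t, x in enumerate(samples):
            acc += x * cmath.exp(-2j * math.pi * k * t / n)
        powers.append(abs(acc) ** 2)
    return powers


def cumulative_power_distribution(powers):
    """Normalized running sum of power, from the lowest bin upward."""
    total = sum(powers)
    if total == 0:
        return [0.0] * len(powers)
    cdf, running = [], 0.0
    for p in powers:
        running += p
        cdf.append(running / total)
    return cdf


def low_band_share(samples, sample_rate, cutoff_hz):
    """Fraction of total power found at or below cutoff_hz."""
    cdf = cumulative_power_distribution(power_spectrum(samples))
    bin_hz = sample_rate / len(samples)
    idx = min(int(cutoff_hz / bin_hz), len(cdf) - 1)
    return cdf[idx]


# Toy signals (assumed for illustration; these are not real speech).
RATE, N = 8000, 256


def tone(freq, amp):
    return [amp * math.sin(2 * math.pi * freq * t / RATE) for t in range(N)]


# Power concentrated at low frequencies versus spread evenly over two bands.
low_heavy = [a + b for a, b in zip(tone(250, 1.0), tone(2000, 0.1))]
flat = [a + b for a, b in zip(tone(250, 1.0), tone(2000, 1.0))]

print(low_band_share(low_heavy, RATE, 1000))
print(low_band_share(flat, RATE, 1000))
```

In this toy setup the low-frequency-heavy signal keeps about 99% of its power below 1 kHz, while the flatter signal keeps about half. A classifier could use summary statistics of such a curve as features; which statistics Void actually uses is not specified in this section.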

Our key contributions are summarized below:

• Design of a fast and light voice replay attack detection system that uses a single classification model and just 97 classification features related to signal frequencies and cumulative power distribution characteristics. Unlike existing approaches that rely on multiple deep learning models and do not provide much insight into complex spectral features being extracted [7, 30], we explain the characteristics of key spectral power features, and why those features are effective in detecting voice spoofing attacks.

• Evaluation of voice replay attack detection accuracy using two large datasets: one consisting of 255,173 voice samples collected from 120 participants, 15 playback devices and 12 recording devices, and the other consisting of 18,030 ASVspoof competition voice samples collected from 42 participants, 26 playback speakers and 25 recording devices. Void achieves equal error rates (EER) of 0.3% and 11.6% on these datasets, respectively. Based on the latter EER, Void would be ranked as the second best solution in the ASVspoof 2017 competition. Compared to the best-performing solution from that competition, Void is about 8 times faster and uses 153 times less memory in detection. When combined with an MFCC-based model, Void achieves 8.7% EER on the ASVspoof dataset; MFCC is already available through speech recognition services and would not require additional computation.

• Evaluation of Void’s performance against hidden command, inaudible voice command, voice synthesis, and equalization (EQ) manipulation attacks, as well as attacks that combine replay attacks with live-human voices, showing 99.7%, 100%, 90.2%, 86.3%, and 98.2% detection rates, respectively.
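The accuracy figures above are reported as EER, the operating point at which the false acceptance rate equals the false rejection rate. The following Python sketch shows one simple way to approximate it from classifier scores. It is a minimal illustration, not the evaluation code used for Void or for ASVspoof: the function name `equal_error_rate`, the convention that a higher score means "more likely live-human", and the toy scores are assumptions.

```python
def equal_error_rate(live_scores, replay_scores):
    """Approximate the EER by sweeping thresholds over the observed scores.

    Assumed convention: a sample is accepted as live-human when its
    score is greater than or equal to the threshold.
    """
    thresholds = sorted(set(live_scores) | set(replay_scores))
    best_gap, eer = None, None
    for th in thresholds:
        # False rejection: a live-human sample scored below the threshold.
        frr = sum(s < th for s in live_scores) / len(live_scores)
        # False acceptance: a replayed sample scored at or above the threshold.
        far = sum(s >= th for s in replay_scores) / len(replay_scores)
        gap = abs(far - frr)
        if best_gap is None or gap < best_gap:
            # Report the midpoint of the two rates where they are closest.
            best_gap, eer = gap, (far + frr) / 2
    return eer


# Toy scores (assumed): one live sample and one replayed sample are misranked.
live = [0.9, 0.8, 0.7, 0.35]
replay = [0.1, 0.2, 0.3, 0.75]
print(equal_error_rate(live, replay))
```

With few samples the two error rates may never be exactly equal, which is why the sketch reports the midpoint at the threshold where they are closest. Evaluation toolkits typically interpolate between thresholds instead.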

2 Threat Model

2.1 Voice replay attacks

We define live-human audio sample as a voice utterance ini-

tiated from a human user that is directly recorded through

a microphone (such that would normally be processed by a

voice assistant). In a voice replay attack, an attacker uses a

recording device (e.g., a smartphone) in a close proximity

to a victim, and first records the victim’s utterances (spoken

words) of voice commands used to interact with voice assis-

tants [3, 11, 12]. The attacker then replays the recorded sam-

ples using an in-built speaker (e.g., available on her phone) or

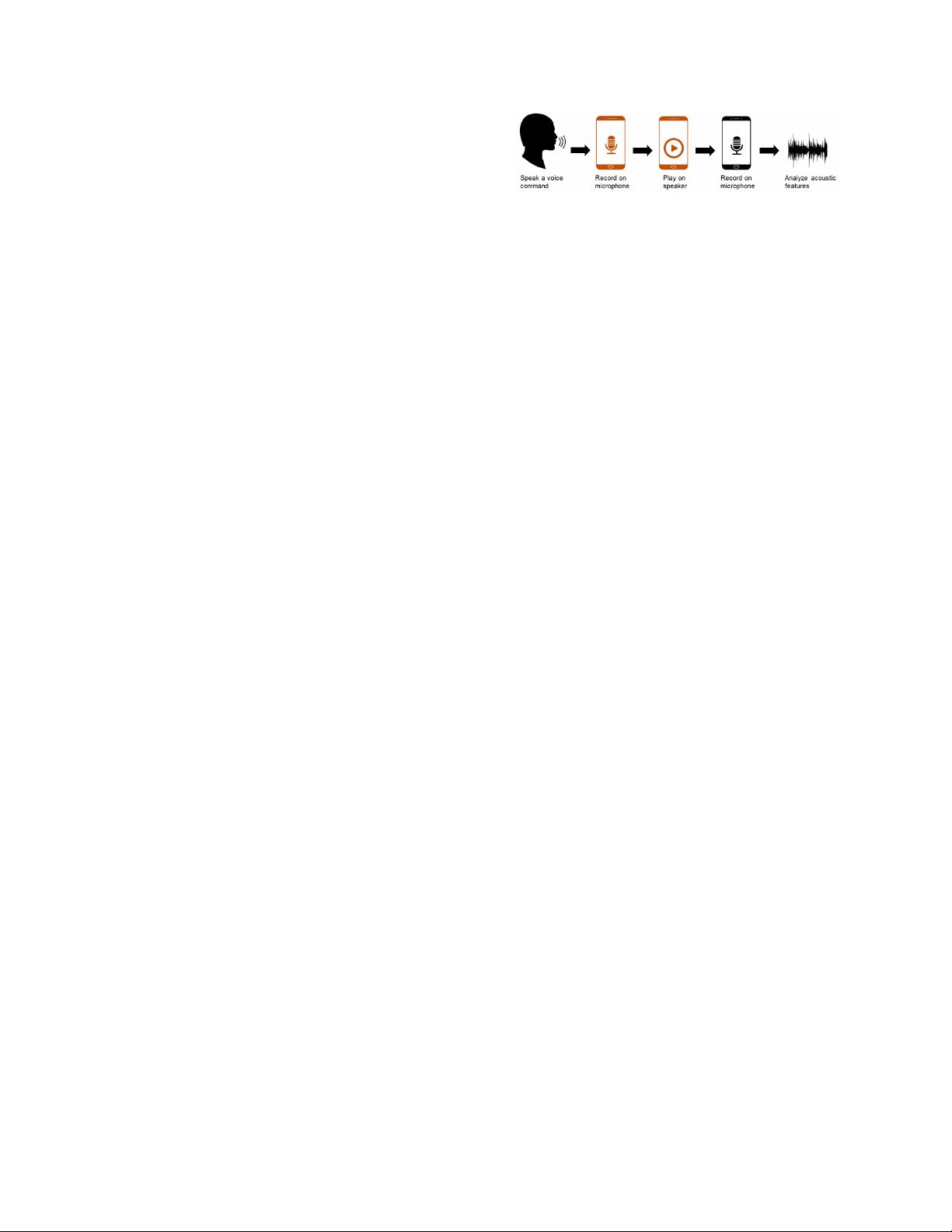

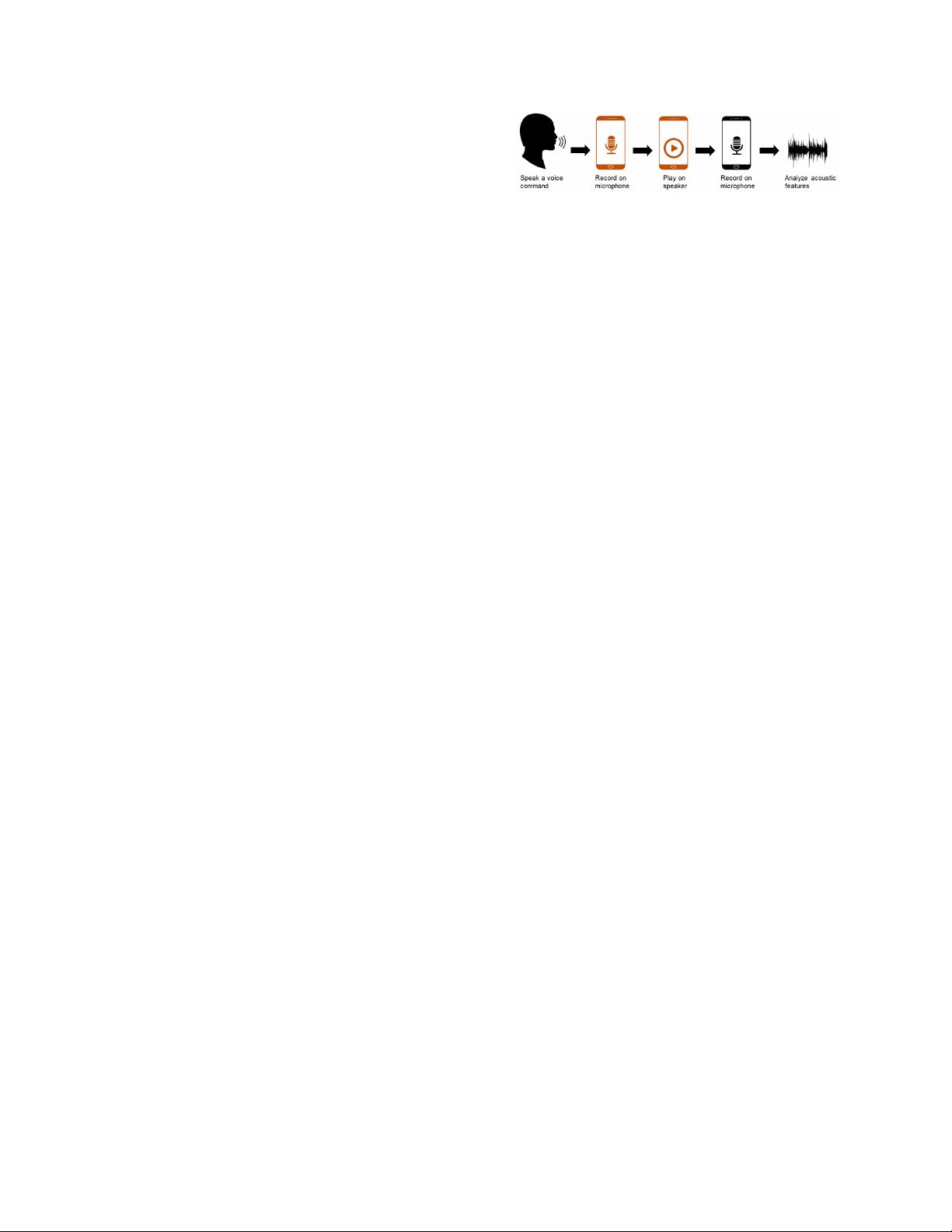

Figure 1: Steps for a voice replay attack.

a standalone speaker to complete the attack (see Figure 1).

A voice replay attack may be the easiest attack to perform, but it is the most difficult one to detect because the recorded voices have characteristics similar to those of the victim’s live voices. In fact, most existing voice biometric-based authentication (human speaker verification) systems (e.g., [31, 32]) are vulnerable to this kind of replay attack.

2.2 Adversarial attacks

We also consider more sophisticated attacks such as “hidden voice command” [24, 25], “inaudible voice command” [18–20], and “voice synthesis” [6, 12] attacks that have been discussed in recent literature. Further, EQ manipulation attacks are specifically designed to game the classification features used by Void by adjusting specific frequency bands of attack voice signals.

3 Requirements

3.1 Latency and model size requirements

Our conversations with several speech recognition engineers at a large IT company (which runs its own voice assistant services with millions of subscribed users) revealed that there are strict latency and computational power usage requirements that must be considered when deploying any kind of machine learning-based service. This is because additional use of computational power and memory through continuous invocation of machine learning algorithms may incur (1) unacceptable costs for businesses, and (2) unacceptable latency (delays) in processing voice commands. Upon receiving a voice command, voice assistants are required to respond immediately without any noticeable delay. Hence, processing delays should be close to 0 seconds; typically, engineers do not consider solutions that add 100 or more milliseconds of delay as portable solutions. A single GPU may be expected to concurrently process 100 or more voice sessions (streaming commands), indicating that machine learning algorithms must be lightweight, simple, and fast.

Further, as part of future solutions, businesses are considering on-device voice assistant implementations (that would not communicate with remote servers) to improve response latency, save server costs, and minimize privacy issues related to sharing users’ private voice data with remote servers. For such on-device solutions with limited computing resources available, the model and feature complexity and size (CPU
