of image patch-pairs for training are known. Third, the features learned by the DNN are invariant to rotation, scale, and translation, because the network is trained on differently transformed images. Fourth, to register new images, the mapping function is learned from the image itself and its transformed versions, without requiring assistance from other images.

Training the network from scratch for each image registration is time consuming. To reduce the computational cost, we apply transfer learning: the network trained on other images serves as the initial network and is then fine-tuned on our target images. Experiments show that this not only greatly reduces the training time but also improves the registration performance.

In summary, the main contributions of this work are threefold.

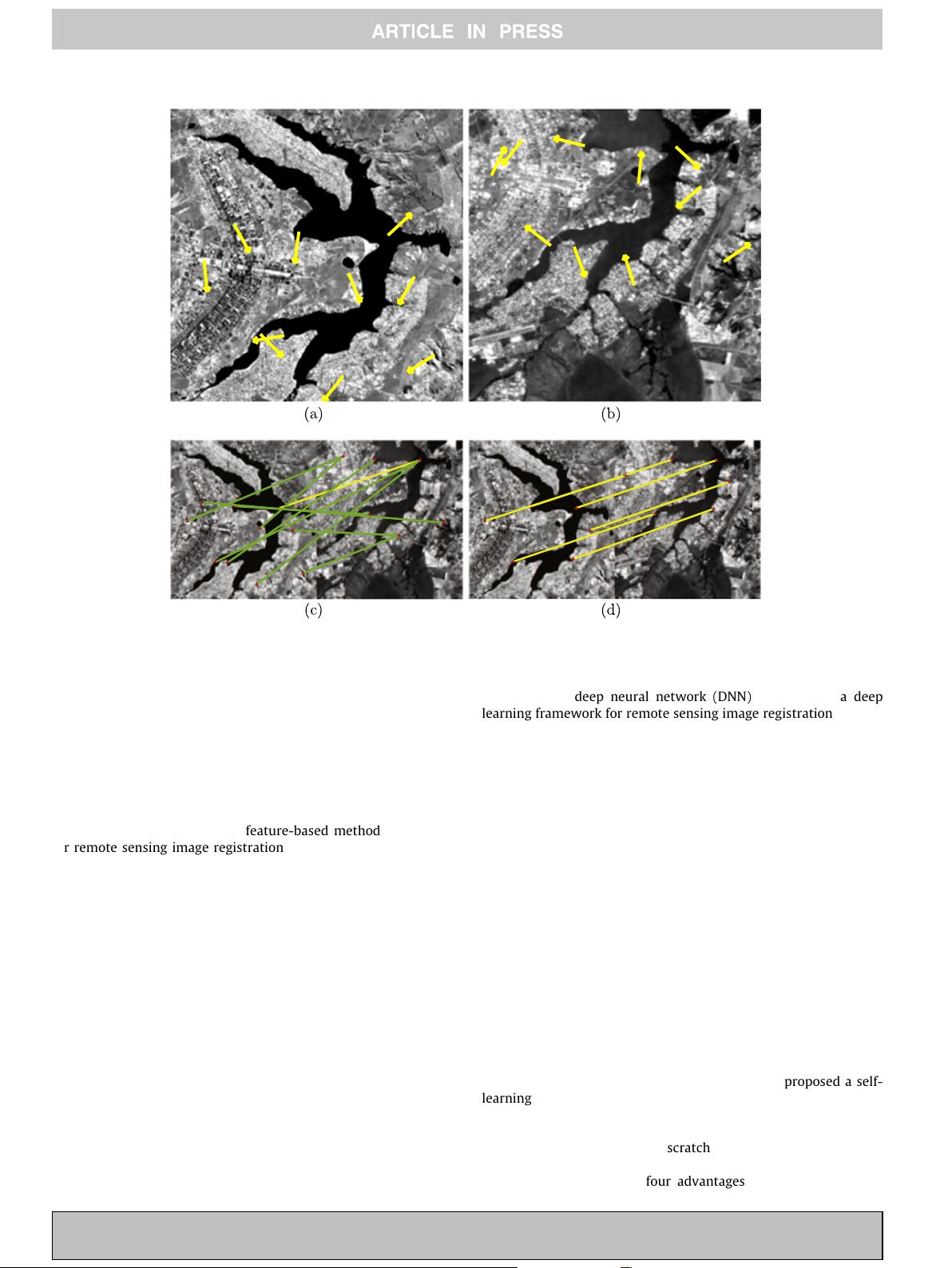

(1) We propose a deep learning framework for remote sensing image registration that directly learns the end-to-end mapping between image patch pairs and their matching labels.

(2) We propose a self-learning strategy to solve the small-data and data-labeling problems in remote sensing image registration. The mapping function is learned from the image itself and its transformed versions.

(3) We apply transfer learning to reduce the training cost by taking the network trained on other images as the initial network and then fine-tuning it on the target images.
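To illustrate the self-learning idea in contribution (2), the following is a minimal sketch of how labelled patch pairs could be generated from a single image and one transformed copy of it. It is not the sampling procedure of this paper, which is detailed in Section 3. The 90-degree rotation, the patch size, and all function names (`rotate90`, `make_patch_pairs`, and so on) are assumptions chosen only to keep the example short.

```python
import random

def rotate90(image):
    """Rotate a 2D list-of-lists image by 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]

def map_point_rot90(r, c, height):
    """Map pixel (r, c) of the original image to its position after rotate90."""
    return c, height - 1 - r

def crop(image, r, c, half):
    """Return the square patch of side 2*half+1 centred at (r, c)."""
    return [row[c - half:c + half + 1] for row in image[r - half:r + half + 1]]

def make_patch_pairs(image, n_pairs, half=1, seed=0):
    """Build labelled patch pairs from one image and its rotated copy.

    Label 1: both patches are centred on corresponding points.
    Label 0: the second patch is centred on a different point.
    The transform is known, so no manual labelling is needed.
    """
    rng = random.Random(seed)
    h, w = len(image), len(image[0])
    rotated = rotate90(image)  # has height w and width h
    pairs = []
    for _ in range(n_pairs):
        r = rng.randrange(half, h - half)
        c = rng.randrange(half, w - half)
        rr, cc = map_point_rot90(r, c, h)
        pairs.append((crop(image, r, c, half), crop(rotated, rr, cc, half), 1))
        # Negative sample: any other valid centre in the rotated image.
        while True:
            nr = rng.randrange(half, w - half)
            nc = rng.randrange(half, h - half)
            if (nr, nc) != (rr, cc):
                break
        pairs.append((crop(image, r, c, half), crop(rotated, nr, nc, half), 0))
    return pairs
```

Because the transform is applied by the program itself, the matching label of every pair is known for free, which is the property the self-learning strategy relies on.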

The rest of this paper is organized as follows. Section 2 introduces the related work on image registration and deep learning. Section 3 details our deep learning framework for remote sensing image registration. Section 4 presents extensive experiments and an analysis of our proposal. We conclude in Section 5.

2. Related work

2.1. Image registration

In this section, we briefly review existing image registration methods in the two aforementioned categories: area-based methods and feature-based methods.

The first widely studied methods for image registration were area-based (Maes et al., 1997; Kern and Pattichis, 2007; Suri and Reinartz, 2009; Liang et al., 2013). They transform the registration problem into an optimization problem that maximizes the similarity between the reference image and the sensed image. Liang et al. (2013) proposed spatial and mutual information (SMI) as the similarity metric and searched for similar local regions using ant colony optimization. To speed up the computation of mutual information (MI), Patel and Thakar (2015) estimated MI based on maximum likelihood. However, area-based methods rely heavily on pixel intensities and are therefore sensitive to illumination changes and noise. Another idea was to find the optimal parameters in other domains. Reddy and Chatterji (1996) proposed a fast Fourier transform-based (FFT-based) method to find the optimal match in the frequency domain, but it did not meet the required accuracy.

With the development of feature point extraction, a new trend was to build the geometric transform by matching feature points. Because of the particular imaging mechanism of remote sensing, many variants of the SIFT algorithm have been proposed for remote sensing image registration (Schwind et al., 2010; Sedaghat et al., 2011; Wang et al., 2012; Fan et al., 2013; Ye and Shan, 2014; Fan et al., 2015; Ma et al., 2017). Schwind et al. (2010) proposed SIFT-OCT, which skips the first octave of the scale space to reduce the influence of noise. Wang et al. (2012) applied the bilateral filter (BF) to construct an anisotropic scale space, which preserves more details by combining two Gaussian filters, one on the spatial domain and one on the intensity. Meanwhile, progress has also been made on feature matching. Wu et al. (2015) proposed a fast sample consensus (FSC) algorithm that uses matching points with a high correct rate to calculate the transform parameters and then selects the matching points that have only subpixel error. Kupfer et al. (2015) proposed a mode-seeking SIFT (MS-SIFT) method that defines a mode scale, a mode rotation difference, and mode translations for feature points. It refines the result by eliminating outliers whose horizontal or vertical shift is far from the mode translation. Ma et al. (2017) proposed a modified SIFT feature and a robust feature matching method to overcome the intensity difference between image pairs, where the feature matching combines the feature distance, FSC, and MS-SIFT.
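The outlier elimination by mode translation mentioned above can be sketched as follows. This is only an illustration of the general idea, not the MS-SIFT algorithm of Kupfer et al. (2015): it assumes that scale and rotation differences have already been compensated, and the histogram bin width, the tolerance, and the function names are hypothetical choices.

```python
from collections import Counter

def mode_of(values, bin_width):
    """Return the centre of the most populated histogram bin."""
    bins = Counter(round(v / bin_width) for v in values)
    best_bin, _ = bins.most_common(1)[0]
    return best_bin * bin_width

def filter_by_mode_translation(matches, bin_width=2.0, tol=3.0):
    """Keep matches whose shift is close to the mode shift on both axes.

    matches: list of ((x_ref, y_ref), (x_sensed, y_sensed)) point pairs.
    A match is dropped if its horizontal or vertical shift differs from
    the corresponding mode shift by more than tol pixels.
    """
    dxs = [sensed[0] - ref[0] for ref, sensed in matches]
    dys = [sensed[1] - ref[1] for ref, sensed in matches]
    mode_dx = mode_of(dxs, bin_width)
    mode_dy = mode_of(dys, bin_width)
    return [
        match
        for match, dx, dy in zip(matches, dxs, dys)
        if abs(dx - mode_dx) <= tol and abs(dy - mode_dy) <= tol
    ]
```

For example, if most matches share a shift of roughly (10, -5) pixels, a match with a shift of (53, 33) falls outside the tolerance and is discarded before the transform parameters are estimated.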

Additionally, some papers focus on the geometric structure or shape features of images. Ye et al. proposed representing the structural properties of images with a new feature descriptor named the histogram of orientated phase congruency (HOPC) (Ye et al., 2017a; Ye and Shen, 2016) and then taking normalized cross-correlation (NCC) as the similarity metric for template matching. Ye et al. (2017b) proposed a novel shape descriptor for image matching based on dense local self-similarity (DLSS) and NCC. Yang et al. (2017) proposed combining the shape context feature and the SIFT feature for remote sensing image registration.
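Since NCC recurs as the similarity metric in several of the methods above, a minimal sketch of NCC-based template matching may help. It operates on raw intensities rather than on HOPC or DLSS descriptors, uses an exhaustive search, and the function names are assumptions made for illustration.

```python
import math

def ncc(a, b):
    """Normalized cross-correlation of two equal-length value sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    da = [x - mean_a for x in a]
    db = [y - mean_b for y in b]
    denom = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    if denom == 0:
        return 0.0  # a constant patch carries no structure to correlate
    return sum(x * y for x, y in zip(da, db)) / denom

def match_template(image, template):
    """Slide the template over the image and return (best_score, (row, col))."""
    th, tw = len(template), len(template[0])
    flat_t = [v for row in template for v in row]
    best = (-2.0, (0, 0))
    for r in range(len(image) - th + 1):
        for c in range(len(image[0]) - tw + 1):
            window = [image[r + i][c + j] for i in range(th) for j in range(tw)]
            score = ncc(window, flat_t)
            if score > best[0]:
                best = (score, (r, c))
    return best
```

Because both patches are mean-centred and normalized, the score is unchanged by a linear change of brightness and contrast, which is one reason NCC is a common choice for template matching.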

In the meantime, other studies have sought to integrate the advantages of area-based and feature-based methods (Yong et al., 2009; Ma et al., 2010; Goncalves et al., 2011; Gong et al., 2014). Ma et al. (2010) applied NCC to acquire control points with a better spatial distribution after a preliminary SIFT registration. Xu et al. (2016a) proposed an iterative multi-level strategy that adjusts parameters and re-extracts and re-matches features to improve the registration. Gong et al. (2014) proposed a coarse-to-fine method for image registration that obtains coarse results with SIFT and then achieves precise registration based on mutual information. Goncalves et al. (2011) combined image segmentation and SIFT: objects are extracted from the images using Otsu's thresholding method, and SIFT is then applied to obtain matching points from the objects.
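As a concrete reference for the segmentation step mentioned last, the sketch below implements Otsu's thresholding in its standard form, choosing the grey level that maximizes the between-class variance. It is not the pipeline of Goncalves et al. (2011); the 256-level assumption and the helper name `segment` are illustrative.

```python
def otsu_threshold(pixels, levels=256):
    """Return the grey level t that maximizes the between-class variance.

    Pixels with value <= t form one class and the rest form the other.
    pixels: iterable of integers in the range [0, levels).
    """
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    total = sum(hist)
    sum_all = sum(level * count for level, count in enumerate(hist))
    best_t, best_var = 0, -1.0
    w0, sum0 = 0, 0
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0:
            continue  # lower class still empty
        if w1 == 0:
            break  # upper class empty from here on
        mean0 = sum0 / w0
        mean1 = (sum_all - sum0) / w1
        between = w0 * w1 * (mean0 - mean1) ** 2
        if between > best_var:
            best_t, best_var = t, between
    return best_t

def segment(image, threshold):
    """Binary mask: 1 where the pixel is brighter than the threshold."""
    return [[1 if v > threshold else 0 for v in row] for row in image]
```

The resulting mask separates bright objects from the darker background, and feature extraction could then be restricted to the object regions.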

We can see that conventional feature-based methods require careful engineering and domain knowledge to design the feature extractor. This makes handcrafted features rather specific and less generalizable.

2.2. Deep learning

Deep learning has achieved great success in computer vision (Farabet et al., 2013; Simoserra et al., 2015), speech processing (Hinton et al., 2012; Graves et al., 2013) and image processing (Krizhevsky et al., 2012; Simonyan and Zisserman, 2014; Russakovsky et al., 2015; Ren et al., 2016). Popular deep learning models include the deep belief network (DBN), the auto-encoder (AE), and the convolutional neural network (CNN). They share a similar structure of multiple stacked layers (Bengio, 2009), in which each layer abstracts the features of the previous layer into higher-level features through a non-linear mapping. Some deep models exploit the distribution characteristics of the data by minimizing the reconstruction error (Hinton et al., 2006; Vincent et al., 2010; Lecun et al., 2015), while others acquire semantic features by stochastic gradient descent with the back propagation (BP) algorithm (Rumelhart et al., 1986).
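The stacked-layer structure and the reconstruction error described above can be made concrete with a small sketch. It shows only a forward pass through fully connected layers with a sigmoid non-linearity and the mean squared reconstruction error of an auto-encoder; training is omitted, and the layer representation and function names are assumptions for illustration.

```python
import math

def sigmoid(x):
    """Logistic non-linearity."""
    return 1.0 / (1.0 + math.exp(-x))

def dense(inputs, weights, biases):
    """One layer: an affine map followed by a sigmoid.

    weights holds one row of input weights per output unit.
    """
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def forward(inputs, layers):
    """Pass the input through a stack of (weights, biases) layers."""
    out = inputs
    for weights, biases in layers:
        out = dense(out, weights, biases)
    return out

def reconstruction_error(x, encoder, decoder):
    """Mean squared error between x and its auto-encoder reconstruction."""
    code = forward(x, encoder)
    recon = forward(code, decoder)
    return sum((a - b) ** 2 for a, b in zip(x, recon)) / len(x)
```

An auto-encoder would adjust the weights to drive this error down over the training data, whereas a supervised network would instead minimize a loss against labels using back propagation.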

Deep learning has also been introduced to remote sensing image analysis, where it has shown superiority and robustness (Han et al.,

2015a; Cheng et al., 2016a,b; Scott et al., 2017; Cheng et al.,

2015; Romero et al., 2016; Yao et al., 2016; Zhou et al., 2016;

Zhao and Du, 2016b,a; Gong et al., 2016; Zhang et al., 2016;

S. Wang et al. / ISPRS Journal of Photogrammetry and Remote Sensing xxx (2018) xxx–xxx


Please cite this article in press as: Wang, S., et al. A deep learning framework for remote sensing image registration. ISPRS J. Photogram. Remote Sensing (2018), https://doi.org/10.1016/j.isprsjprs.2017.12.012
