
Artificial intelligence, computer vision: a recent article, the 2019 paper "A Survey of the Recent Architectures of Deep Convolutional Neural Networks" (original 67-page PDF).


A Survey of the Recent Architectures of Deep Convolutional Neural Networks

Asifullah Khan¹,²*, Anabia Sohail¹,², Umme Zahoora¹, and Aqsa Saeed Qureshi¹

¹ Pattern Recognition Lab, DCIS, PIEAS, Nilore, Islamabad 45650, Pakistan

² Deep Learning Lab, Center for Mathematical Sciences, PIEAS, Nilore, Islamabad 45650, Pakistan

* asif@pieas.edu.pk

Abstract

Deep Convolutional Neural Networks (CNNs) are a special type of Neural Network that has shown state-of-the-art performance on various competitive benchmarks. The powerful learning ability of deep CNNs is largely due to the use of multiple feature extraction stages (hidden layers) that can automatically learn representations from the data. The availability of large amounts of data and improvements in hardware processing units have accelerated research in CNNs, and very interesting deep CNN architectures have recently been reported. The recent race in developing deep CNNs shows that innovative architectural ideas, as well as parameter optimization, can improve CNN performance. In this regard, different ideas in CNN design have been explored, such as the use of different activation and loss functions, parameter optimization, regularization, and restructuring of the processing units. However, the major improvement in the representational capacity of deep CNNs has been achieved by restructuring the processing units. In particular, the idea of using a block instead of a layer as the structural unit is receiving substantial attention. This survey therefore focuses on the intrinsic taxonomy present in recently reported deep CNN architectures and, consequently, classifies recent innovations in CNN architectures into seven categories, based on spatial exploitation, depth, multi-path, width, feature-map exploitation, channel boosting, and attention. Additionally, this survey covers an elementary understanding of CNN components and sheds light on their current challenges and applications.

Keywords: Deep Learning, Convolutional Neural Networks, Architecture, Representational Capacity, Residual Learning, and Channel Boosted CNN.


1 Introduction

Machine Learning (ML) is a specialized area of Artificial Intelligence (AI) that endows computers with intelligence by learning the underlying relationships in data and making decisions without being explicitly programmed. Different ML algorithms have been developed since the late 1990s to emulate human sensory responses such as speech and vision, but they have generally failed to achieve human-level performance [1]–[6]. The challenging nature of Machine Vision (MV) tasks gave rise to a specialized class of Neural Networks (NN), known as Convolutional Neural Networks (CNN) [7].

CNNs are considered one of the best techniques for learning image content and have shown state-of-the-art results on image recognition, segmentation, detection, and retrieval tasks [8], [9]. The success of CNNs has captured attention beyond academia: in industry, companies such as Google, Microsoft, AT&T, NEC, and Facebook have formed active research groups to explore new CNN architectures [10]. At present, most of the frontrunners in image processing competitions employ deep CNN-based models.

The topology of a CNN is divided into multiple learning stages composed of a combination of convolutional layers, non-linear processing units, and subsampling layers [11]. Each layer performs multiple transformations using a bank of convolutional kernels (filters) [12]. The convolution operation extracts locally correlated features by dividing the image into small patches (similar to the retina of the human eye), which makes it capable of learning suitable features. The output of the convolutional kernels is passed to non-linear processing units, which not only help in learning abstractions but also embed non-linearity in the feature space. This non-linearity generates different patterns of activations for different responses and thus facilitates learning the semantic differences in images. The output of the non-linear function is usually followed by subsampling, which summarizes the results and also makes the features invariant to small geometrical distortions [12], [13].
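The convolution, non-linearity, and subsampling stage described above can be sketched in plain Python. This is a minimal illustration, not the survey's code: it assumes a single-channel input, a stride-1 "valid" convolution (implemented as cross-correlation, as most deep learning libraries do), ReLU as the non-linear unit, and 2×2 max pooling for subsampling. The function names and the hand-picked edge kernel are hypothetical; in a real CNN the kernel weights are learned.

```python
from typing import List

Matrix = List[List[float]]


def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """Valid (no padding), stride-1 2-D cross-correlation over a single channel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Each output value depends only on a small local patch of the input.
            row.append(sum(image[i + a][j + b] * kernel[a][b]
                           for a in range(kh) for b in range(kw)))
        out.append(row)
    return out


def relu(fmap: Matrix) -> Matrix:
    """Element-wise non-linearity max(0, x), returned as floats."""
    return [[max(0.0, float(v)) for v in row] for row in fmap]


def max_pool(fmap: Matrix, size: int = 2) -> Matrix:
    """Non-overlapping max pooling (subsampling); incomplete edge windows are dropped."""
    out = []
    for i in range(0, len(fmap) - size + 1, size):
        row = []
        for j in range(0, len(fmap[0]) - size + 1, size):
            row.append(max(fmap[i + a][j + b]
                           for a in range(size) for b in range(size)))
        out.append(row)
    return out


if __name__ == "__main__":
    # 5x5 image with a vertical dark-to-bright edge between columns 1 and 2.
    image = [[0, 0, 1, 1, 1] for _ in range(5)]
    # Hypothetical vertical-edge detector (learned in practice).
    kernel = [[-1, 1], [-1, 1]]
    print(max_pool(relu(conv2d(image, kernel))))
```

The pooled map keeps a strong response wherever the edge falls inside a pooling window, which is the summarizing, distortion-tolerant behaviour the paragraph attributes to subsampling.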

The architectural design of CNNs was inspired by Hubel and Wiesel's work and thus largely follows the basic structure of the primate visual cortex [14], [15]. CNNs first came to the limelight through the work of LeCun in 1989 for processing data with a grid-like topology (images and time series) [7], [16]. The popularity of CNNs is largely due to their hierarchical feature extraction ability. The hierarchical organization of a CNN emulates the deep, layered learning process of the neocortex in the human brain, which automatically extracts features from the underlying data [17]. The staging of the learning process in a CNN closely resembles the primate ventral pathway of the visual cortex (V1-V2-V4-IT/VTC) [18]. The visual cortex of primates first receives input from the retinotopic area, where multi-scale high-pass filtering and contrast normalization are performed by the lateral geniculate nucleus. After this, detection is performed by different regions of the visual cortex categorized as V1, V2, V3, and V4. In fact, the V1 and V2 portions of the visual cortex are similar to convolutional and subsampling layers, whereas the inferior temporal region resembles the higher layers of a CNN, which make inferences about the image [19]. During training, a CNN learns through the backpropagation algorithm by adjusting its weights according to the gradient of a cost function with respect to those weights. Minimization of a cost function by a CNN using backpropagation is similar to the response-based learning of the human brain. CNNs can extract low-, mid-, and high-level features, where high-level (more abstract) features are combinations of lower- and mid-level features. With this automatic feature extraction ability, a CNN reduces the need for a separately designed feature extractor [20]. Thus, a CNN can learn good internal representations from raw pixels with minimal preprocessing.
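To make the training description concrete, here is a minimal sketch of backpropagation with gradient descent for a single linear neuron, assuming mean squared error as the cost function. A CNN applies the same chain-rule computation layer by layer to far more weights; the function name `train_neuron`, the learning rate, and the toy data are illustrative assumptions.

```python
from typing import List, Tuple


def train_neuron(data: List[Tuple[float, float]], lr: float = 0.1,
                 epochs: int = 500) -> Tuple[float, float, List[float]]:
    """Fit y ~ w*x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(data)
    history = []
    for _ in range(epochs):
        # Forward pass: predictions and cost.
        preds = [w * x + b for x, _ in data]
        cost = sum((p - y) ** 2 for p, (_, y) in zip(preds, data)) / n
        history.append(cost)
        # Backward pass (chain rule): dC/dp = 2(p - y)/n, dp/dw = x, dp/db = 1.
        grad_w = sum(2 * (p - y) * x for p, (x, y) in zip(preds, data)) / n
        grad_b = sum(2 * (p - y) for p, (_, y) in zip(preds, data)) / n
        # Update: step each weight against the gradient of the cost w.r.t. that weight.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b, history


if __name__ == "__main__":
    toy = [(float(x), 2.0 * x + 1.0) for x in range(4)]  # hypothetical data, y = 2x + 1
    w, b, hist = train_neuron(toy)
    print(f"w={w:.4f} b={b:.4f} first cost={hist[0]:.3f} last cost={hist[-1]:.2e}")
```

The learning rate has to stay small enough for the updates to converge; for this toy data a value of 0.1 is stable, while much larger values can make the cost diverge.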

The main boom in the use of CNNs for image classification and segmentation occurred after it was observed that the representational capacity of a CNN can be enhanced by increasing its depth [21]. Deep architectures have an advantage over shallow architectures when dealing with complex learning problems. Stacking multiple linear and non-linear processing units in a layer-wise fashion gives deep networks the ability to learn complex representations at different levels of abstraction. In addition, advancements in hardware, and thus the availability of high-performance computing resources, are also among the main reasons for the recent success of deep CNNs. Deep CNN architectures have shown significant performance improvements over shallow and conventional vision-based models. Apart from their use in supervised learning, deep CNNs have the potential to learn useful representations from large-scale unlabeled data. The use of multiple mapping functions enables a CNN to improve the extraction of invariant representations and, consequently, makes it capable of handling recognition tasks with hundreds of categories. Recently, it has been shown that different levels of features, both low- and high-level, can be transferred to a generic recognition task by exploiting the concept of Transfer Learning (TL) [22]–[24]. Important attributes of CNNs are hierarchical learning, automatic feature extraction, multi-tasking, and weight sharing [25]–[27].
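Weight sharing is easiest to appreciate by counting parameters. The sketch below compares a 3×3 convolutional layer with a fully connected layer that maps the same input feature map to an output map of the same size, assuming "same" padding so the spatial size is preserved and one bias per output unit. The helper names and the 32×32 RGB example are hypothetical.

```python
def conv_params(k: int, c_in: int, c_out: int, bias: bool = True) -> int:
    """k x k convolution: one kernel per output channel, shared across all positions."""
    return k * k * c_in * c_out + (c_out if bias else 0)


def dense_params(h: int, w: int, c_in: int, c_out: int, bias: bool = True) -> int:
    """Fully connected layer from an h x w x c_in map to an h x w x c_out map."""
    n_in, n_out = h * w * c_in, h * w * c_out
    return n_in * n_out + (n_out if bias else 0)


if __name__ == "__main__":
    # Hypothetical example: 32x32 RGB input, 16 output channels, 3x3 kernels.
    print("conv :", conv_params(3, 3, 16))
    print("dense:", dense_params(32, 32, 3, 16))
```

The convolutional count does not depend on the image size at all, because the same kernel weights are reused at every spatial position, whereas the fully connected count grows with the square of the number of pixels.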

Various improvements in CNN learning strategy and architecture have been made to scale CNNs to large and complex problems. These innovations can be categorized as parameter optimization, regularization, structural reformulation, etc. However, CNN-based applications became prevalent only after the exemplary performance of AlexNet on the ImageNet dataset [21]. Thus, most major innovations in CNNs have been proposed since 2012 and have mainly come from restructuring processing units and designing new blocks. In addition, Zeiler and Fergus [28] introduced the concept of layer-wise visualization of features, which shifted the trend towards extracting features at low spatial resolution in deep architectures such as VGG [29]. Nowadays, most new architectures are built upon the principle of simple and homogeneous topology introduced by VGG. On the other hand, a Google research group introduced the interesting idea of split, transform, and merge, and the corresponding block is known as the inception block. The inception block introduced, for the first time, the concept of branching within a layer, which allows abstraction of features at different spatial scales [30]. In 2015, the concept of skip connections, introduced by ResNet [31] for training deep CNNs, became popular, and it was subsequently used by most succeeding networks, such as Inception-ResNet, WideResNet, ResNeXt, etc. [32]–[34].
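The two block-level ideas above, split-transform-merge and skip connections, can be sketched on toy data. This is a simplified illustration under stated assumptions: single-channel 1-D signals, one convolution per branch, and no learned weights or non-linearities. The real inception and residual blocks operate on multi-channel 2-D feature maps with further layers, and all names here are hypothetical.

```python
from typing import Callable, List

Vector = List[float]


def conv1d_same(x: Vector, kernel: Vector) -> Vector:
    """Stride-1 1-D cross-correlation, zero-padded so the output keeps len(x)."""
    k = len(kernel)
    pad = k // 2  # assumes an odd kernel size
    padded = [0.0] * pad + list(x) + [0.0] * pad
    return [sum(padded[i + j] * kernel[j] for j in range(k)) for i in range(len(x))]


def split_transform_merge(x: Vector, kernels: List[Vector]) -> List[Vector]:
    """Inception-style idea: parallel branches with different receptive fields,
    merged by stacking their outputs as separate channels."""
    return [conv1d_same(x, k) for k in kernels]


def residual(x: Vector, f: Callable[[Vector], Vector]) -> Vector:
    """ResNet-style skip connection: output = F(x) + x."""
    return [fx + xi for fx, xi in zip(f(x), x)]


if __name__ == "__main__":
    signal = [1.0, 2.0, 3.0]
    # Two branches: a 1-wide identity kernel and a 3-wide averaging kernel.
    print(split_transform_merge(signal, [[1.0], [1 / 3, 1 / 3, 1 / 3]]))
    # If the residual branch outputs zeros, the block reduces to the identity.
    print(residual(signal, lambda v: [0.0] * len(v)))
```

The identity case shows why skip connections ease the training of very deep networks: a block only needs to learn a correction F(x) to its input rather than the full mapping.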

To improve the learning capacity of CNNs, different architectural designs such as WideResNet, Pyramidal Net, and Xception explored the effect of multilevel transformations in terms of additional cardinality and increased width [32], [34], [35]. Consequently, the focus of research shifted from parameter optimization and readjustment of connections towards improved architectural design (layer structure) of the network. This shift resulted in many new architectural ideas such as channel boosting, spatial and channel-wise exploitation, and attention-based information processing [36]–[38].
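One way to see the trade-off between width and cardinality is through parameter counts. Cardinality is commonly realized with grouped convolutions, in which the channels are split into parallel paths. The sketch below is an illustrative assumption rather than the exact configuration of any architecture named above: it counts the weights (bias omitted) of a k×k convolution with a given number of groups.

```python
def grouped_conv_params(k: int, c_in: int, c_out: int, groups: int = 1) -> int:
    """Weights of a k x k convolution split into `groups` parallel paths (no bias).
    Each path sees c_in/groups input channels and produces c_out/groups outputs."""
    if c_in % groups or c_out % groups:
        raise ValueError("channel counts must be divisible by groups")
    return groups * k * k * (c_in // groups) * (c_out // groups)


if __name__ == "__main__":
    base = grouped_conv_params(3, 256, 256)              # plain convolution
    wide = grouped_conv_params(3, 512, 512)              # width doubled
    card = grouped_conv_params(3, 256, 256, groups=32)   # 32 parallel paths
    print(base, wide, card)
```

Doubling the width multiplies the cost by four, whereas splitting the same width into 32 paths divides it by 32, which is why added cardinality can buy more transformations at a similar budget.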

In the past few years, different interesting surveys are conducted on deep CNNs that elaborate

the basic components of CNN and their alternatives. The survey reported by [39] has reviewed

the famous architectures from 2012-2015 along with their components. Similarly, in the

5

literature, there are prominent surveys that discuss different algorithms of CNN and focus on

applications of CNN [20], [26], [27], [40], [41]. Likewise, the survey presented in [42] discussed

taxonomy of CNNs based on acceleration techniques. On the other hand, in this survey, we

discuss the intrinsic taxonomy present in the recent and prominent CNN architectures. The

various CNN architectures discussed in this survey can be broadly classified into seven main

categories namely; spatial exploitation, depth, multi-path, width, feature map exploitation,

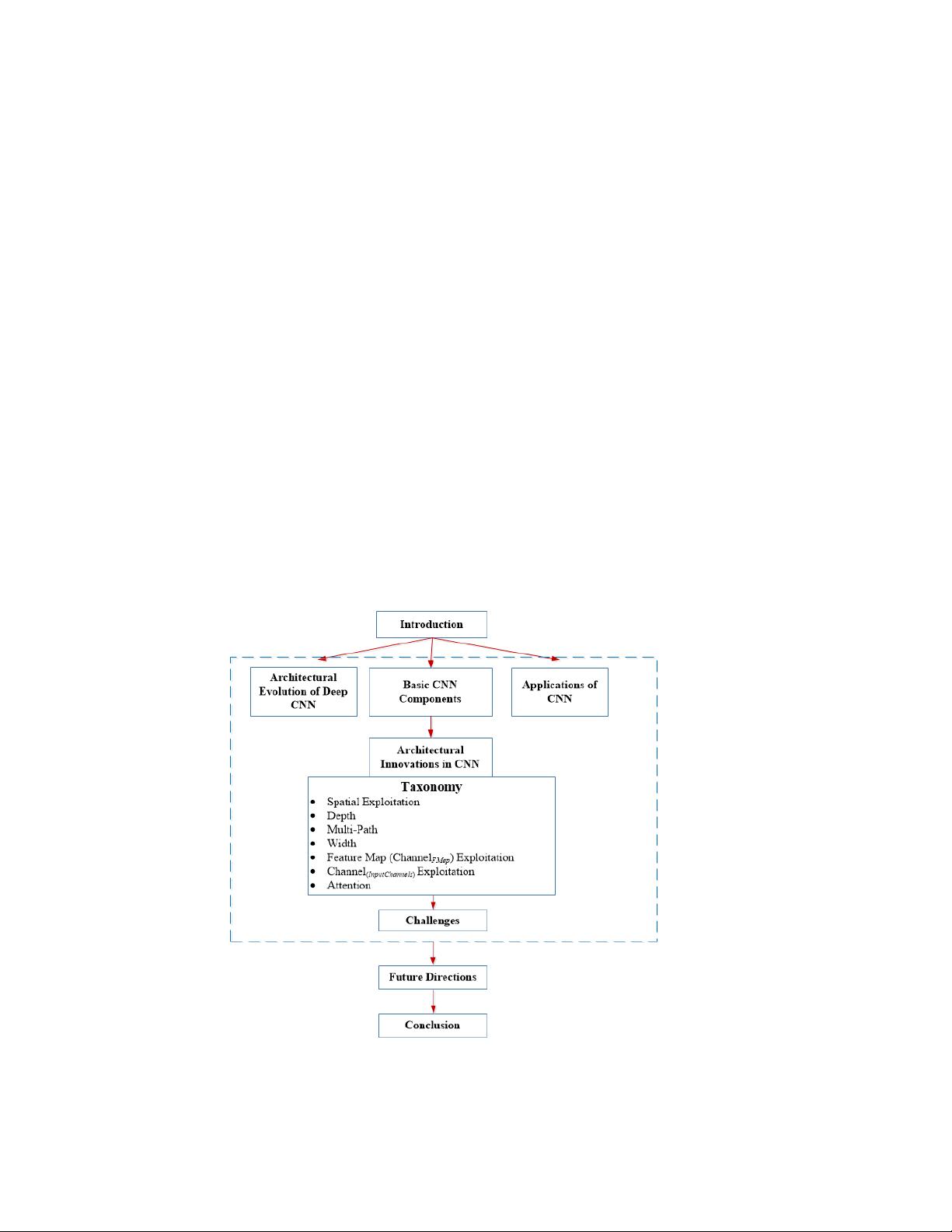

channel boosting, and attention based CNNs. The rest of the paper is organized in the following

order (shown in Fig. 1): Section 1 summarizes the underlying basics of CNN, its resemblance

with primate’s visual cortex, as well as its contribution in MV. In this regard, Section 2 provides

the overview on basic CNN components and Section 3 discusses the architectural evolution of

deep CNNs. Whereas, Section 4, discusses the recent innovations in CNN architectures and

categorizes CNNs into seven broad classes. Section 5 and 6 shed light on applications of CNNs

and current challenges, whereas section 7 discusses future work and last section draws

conclusion.

Fig. 1: Organization of the survey paper.
