
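Deep-learning-based feature point detection, implemented mainly in an end-to-end manner. The paper compares this method with several traditional methods.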



LIFT: Learned Invariant Feature Transform

Conference Paper · October 2016

DOI: 10.1007/978-3-319-46466-4_28


All content following this page was uploaded by Vincent Lepetit on 06 March 2018.


LIFT: Learned Invariant Feature Transform

Kwang Moo Yi∗,1, Eduard Trulls∗,1, Vincent Lepetit2, Pascal Fua1

1 Computer Vision Laboratory, Ecole Polytechnique Fédérale de Lausanne (EPFL)

2 Institute for Computer Graphics and Vision, Graz University of Technology

{kwang.yi, eduard.trulls, pascal.fua}@epfl.ch, lepetit@icg.tugraz.at

Abstract. We introduce a novel Deep Network architecture that implements the full feature point handling pipeline, that is, detection, orientation estimation, and feature description. While previous works have successfully tackled each one of these problems individually, we show how to learn to do all three in a unified manner while preserving end-to-end differentiability. We then demonstrate that our Deep pipeline outperforms state-of-the-art methods on a number of benchmark datasets, without the need for retraining.

Keywords: Local Features, Feature Descriptors, Deep Learning

1 Introduction

Local features play a key role in many Computer Vision applications. Finding and matching them across images has been the subject of vast amounts of research. Until recently, the best techniques relied on carefully hand-crafted features [1–5]. Over the past few years, as in many areas of Computer Vision, methods based on Machine Learning, and more specifically Deep Learning, have started to outperform these traditional methods [6–10].

These new algorithms, however, address only a single step in the complete processing chain, which includes detecting the features, computing their orientation, and extracting robust representations that allow us to match them across images. In this paper we introduce a novel Deep architecture that performs all three steps together. We demonstrate that it achieves better overall performance than the state-of-the-art methods, in large part because it allows these individual steps to be optimized to perform well in conjunction with each other.

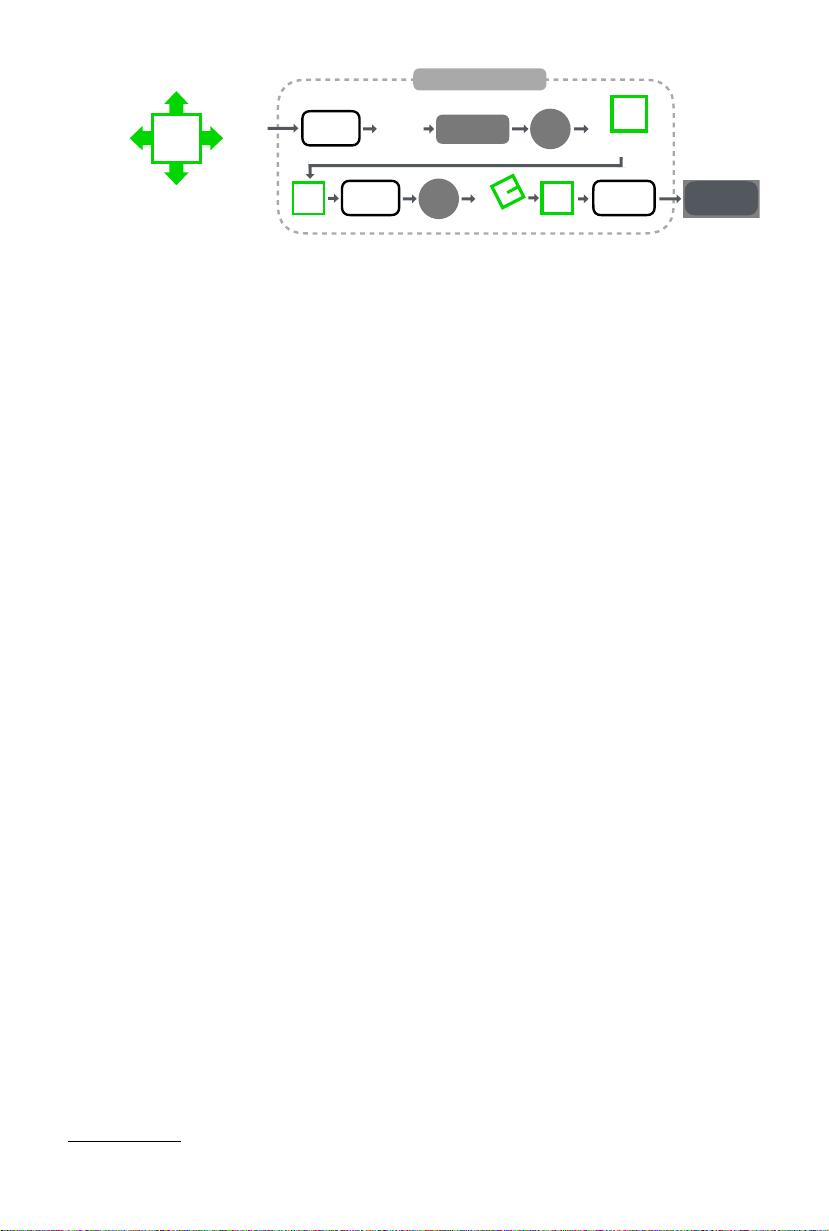

∗ First two authors contributed equally. This work was supported in part by the EU FP7 project MAGELLAN under grant number ICT-FP7-611526.

[Figure: diagram of the LIFT pipeline; block labels: DET, Crop, ORI, Rot, DESC, with a score map, softargmax, and description vector.]

Fig. 1. Our integrated feature extraction pipeline. Our pipeline consists of three major components: the Detector, the Orientation Estimator, and the Descriptor. They are tied together with differentiable operations to preserve end-to-end differentiability.¹

Our architecture, which we refer to as LIFT for Learned Invariant Feature Transform, is depicted in Fig. 1. It consists of three components that feed into each other: the Detector, the Orientation Estimator, and the Descriptor. Each one is based on Convolutional Neural Networks (CNNs), and patterned after recent ones [6, 9, 10] that have been shown to perform these individual functions well. To mesh them together we use Spatial Transformers [11] to rectify the image patches given the output of the Detector and the Orientation Estimator. We also replace the traditional approaches to non-local maximum suppression (NMS) by the soft argmax function [12]. This allows us to preserve end-to-end differentiability, and results in a full network that can still be trained with back-propagation, which is not the case for any other architecture we know of.
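To illustrate why a soft argmax keeps the pipeline differentiable where a hard maximum does not, the following is a minimal sketch of one common formulation: the softmax-weighted mean of pixel coordinates over a score map. It is not taken from the paper's implementation. The function name `soft_argmax_2d` and the sharpness parameter `beta` are assumptions made for this example.

```python
import math


def soft_argmax_2d(score_map, beta=10.0):
    """Return the softmax-weighted mean (x, y) position of a 2D score map.

    `score_map` is a list of rows of floats. `beta` is a hypothetical
    sharpness parameter: larger values move the result toward the hard argmax.
    """
    # Subtract the maximum score before exponentiating for numerical stability.
    max_score = max(max(row) for row in score_map)
    total = 0.0
    x_acc = 0.0
    y_acc = 0.0
    for y, row in enumerate(score_map):
        for x, score in enumerate(row):
            weight = math.exp(beta * (score - max_score))
            total += weight
            x_acc += weight * x
            y_acc += weight * y
    # Every step above is a smooth function of the scores, so gradients can
    # flow from the returned location back into the score map.
    return x_acc / total, y_acc / total
```

With a single sharp peak the result sits close to the peak's pixel coordinates, while two equally strong peaks yield a location between them. That averaging is the price of differentiability, which is why a formulation like this is usually applied to small patches expected to contain one dominant response.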

Also, we show how to learn such a pipeline in an effective manner. To this end, we build a Siamese network and train it using the feature points produced by a Structure-from-Motion (SfM) algorithm that we ran on images of a scene captured under different viewpoints and lighting conditions, to learn its weights. We formulate this training problem on image patches extracted at different scales to make the optimization tractable. In practice, we found it impossible to train the full architecture from scratch, because the individual components try to optimize for different objectives. Instead, we introduce a problem-specific learning approach to overcome this problem. It involves training the Descriptor first, which is then used to train the Orientation Estimator, and finally the Detector, based on the already learned Descriptor and Orientation Estimator, differentiating through the entire network. At test time, we decouple the Detector, which runs over the whole image in scale space, from the Orientation Estimator and Descriptor, which process only the keypoints.
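The staged training order described above can be written down as a small schedule. This is only an illustrative sketch: the component names and the `training_schedule` helper are hypothetical, and the text does not say here whether the already learned components are kept frozen or fine-tuned, so the sketch records only which components each stage relies on.

```python
# Hypothetical component names. The order follows the text:
# Descriptor first, then Orientation Estimator, then Detector.
TRAINING_ORDER = ("descriptor", "orientation_estimator", "detector")


def training_schedule(order=TRAINING_ORDER):
    """Return one entry per stage: the component being trained and the
    previously learned components that stage depends on."""
    schedule = []
    learned = []
    for name in order:
        schedule.append({"train": name, "uses_learned": tuple(learned)})
        learned.append(name)
    return schedule


for stage in training_schedule():
    print(stage["train"], "<- depends on", stage["uses_learned"])
```

The last stage trains the Detector while depending on both other components, which matches the statement that its training differentiates through the entire network.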

In the next section we briefly discuss earlier approaches. We then present our approach in detail and show that it outperforms many state-of-the-art methods.

2 Related work

The amount of literature relating to local features is immense, but it always revolves around finding feature points, computing their orientation, and matching them. In this section, we will therefore discuss these three elements separately.

2.1 Feature Point Detectors

Research on feature point detection has focused mostly on finding distinctive locations whose scale and rotation can be reliably estimated. Early works [13,

¹ Figures are best viewed in color.
