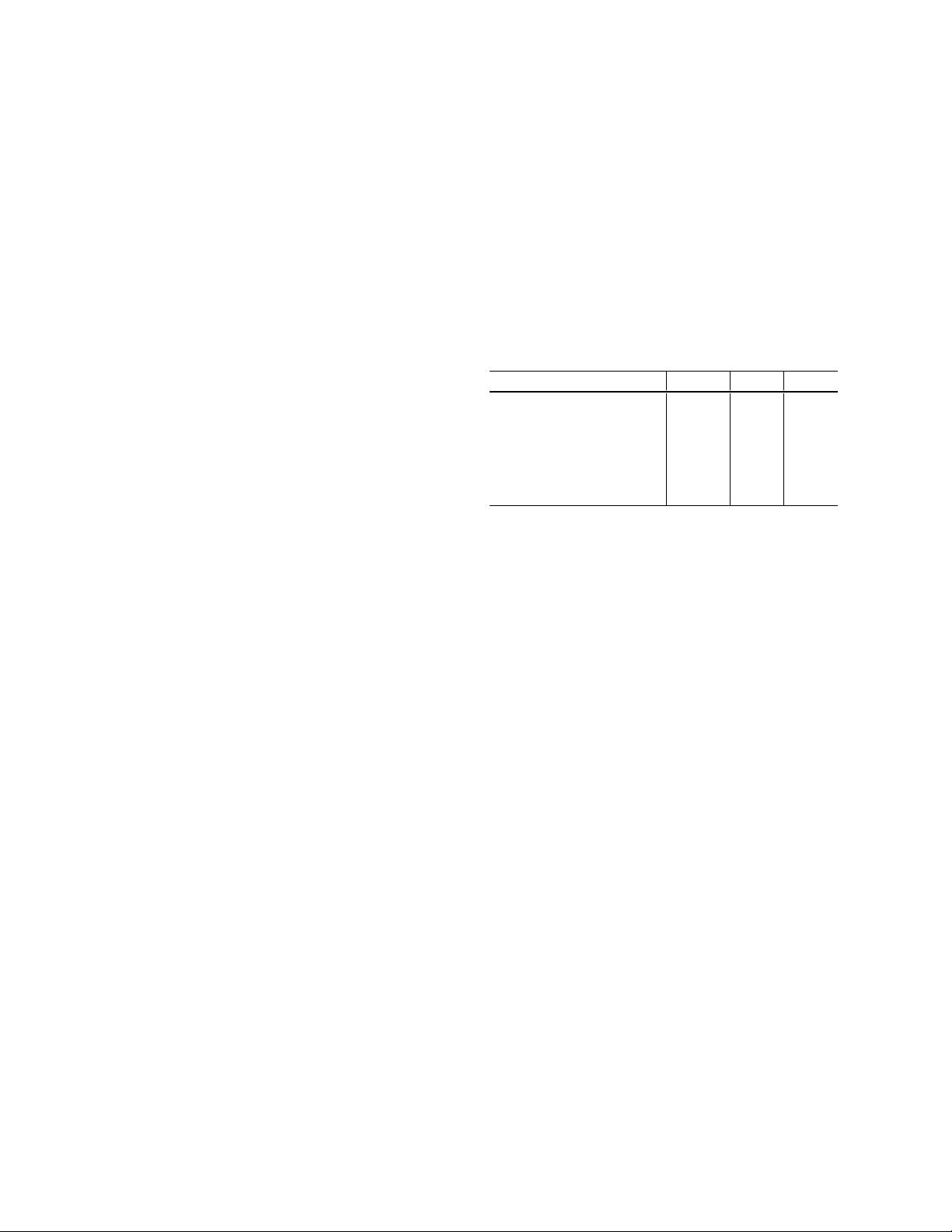

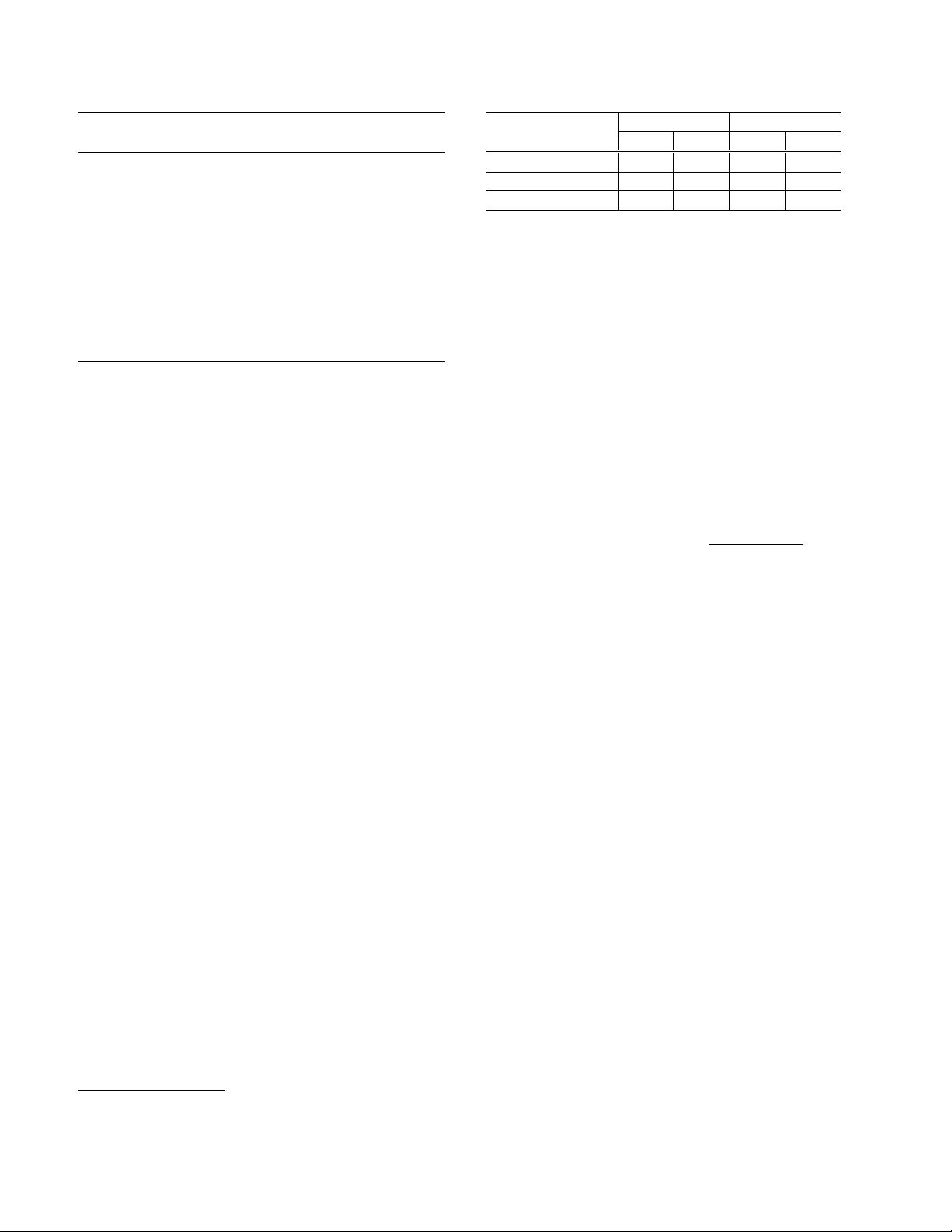

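Much of the recent progress made in image classification research can be credited to training procedure refinements, such as changes in data augmentations and optimization methods. In the literature, however, most refinements are either briefly mentioned as implementation details or only visible …

Image classification has long been a core research topic in artificial intelligence. It drives the development of related techniques and plays a central role in practical applications. As deep learning has advanced, the performance of image classification models has improved markedly. These gains do not come only from new model architectures, though; much of the time they come from careful refinement of the training procedure. Against this background, the document "图像分类调参技巧-李沐.pdf" (roughly, "Image Classification Tuning Tricks - Mu Li") takes a comprehensive look at small but crucial refinements that play a key role in improving model performance.

The document first points out that much of the progress in image classification research is owed to refinements of the training procedure, including changes to data augmentation and optimization methods. In the literature these refinements are usually mentioned only in passing or as implementation details, yet they have a significant effect on a model's final performance. To address this, the authors run a series of empirical evaluations that measure the impact of each of these small adjustments.

The paper discusses a number of "tricks" in detail, such as changing the stride of specific convolutional layers and adjusting the learning rate schedule. Each looks minor on its own, but their cumulative effect raises accuracy substantially. Applied to the classic ResNet-50 model, these tricks raise top-1 validation accuracy on ImageNet from 75.3% to 79.29%. This result surpasses several newer architectures, such as SE-ResNeXt-50, which shows that a conventional model, once carefully tuned, can match or even outperform recent architectures.

The study also shows that these tricks generalize. They carry over to other network architectures, such as Inception V3 and MobileNet, and to other datasets, such as Places365. Furthermore, models trained with these methods transfer better to other deep learning tasks, including object detection and semantic segmentation.

The document is clearly organized. The authors first establish a baseline training procedure and then discuss the refinements one at a time: more varied data augmentation, the choice of optimization algorithm, better learning rate scheduling, and modifications to specific layers. Through detailed experiments and analysis, they show how to improve classification performance while keeping training efficient.

In summary, the document stresses how much the details matter when optimizing deep learning models. With sustained experimentation and fine-tuning, even small parameter changes can be the key to better performance. The lesson for AI researchers and engineers is that, while exploring new architectures, we should not overlook the potential of existing models: by continuing to refine the training procedure, it is entirely possible to match or exceed the performance of newer models. This advances image classification and also offers a useful reference for applying deep learning in other domains.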
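
The summary does not say which layers have their stride changed. One instance described in the original paper is the tweak it calls ResNet-B. In a ResNet downsampling block, this tweak moves the stride of 2 from the first 1×1 convolution to the following 3×3 convolution, because a 1×1 convolution with stride 2 never reads three quarters of its input. The pure-Python sketch below only counts which input positions a stride-2 convolution touches. The function name `covered_fraction` is hypothetical, and this is an illustration of the reasoning, not a model implementation.

```python
def covered_fraction(size: int, kernel: int, stride: int, padding: int) -> float:
    """Fraction of a size x size input grid that at least one output position
    of a square-kernel 2-D convolution reads (illustrative helper)."""
    # standard output-size formula for a convolution
    out = (size + 2 * padding - kernel) // stride + 1
    touched = set()
    for oy in range(out):
        for ox in range(out):
            # top-left corner of this output's receptive window, in input coordinates
            y0 = oy * stride - padding
            x0 = ox * stride - padding
            for dy in range(kernel):
                for dx in range(kernel):
                    y, x = y0 + dy, x0 + dx
                    if 0 <= y < size and 0 <= x < size:  # ignore zero-padding cells
                        touched.add((y, x))
    return len(touched) / (size * size)


# 1x1 kernel, stride 2 (original downsampling path) vs 3x3 kernel, stride 2, padding 1
print(covered_fraction(8, kernel=1, stride=2, padding=0))
print(covered_fraction(8, kernel=3, stride=2, padding=1))
```

On an 8×8 input, the 1×1 stride-2 case reads only every other row and column (a quarter of the grid). The 3×3 stride-2 case with padding 1 reads every position, so moving the stride loses no input.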
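
The summary names learning rate scheduling as one of the tricks but gives no formula. The original paper discusses a linear learning-rate warmup and a cosine learning-rate decay. The sketch below combines the two in one function. The parameter names `total_steps`, `base_lr` and `warmup_steps` are our own assumptions, not taken from the document, and this is not the authors' code.

```python
import math


def learning_rate(step: int, total_steps: int, base_lr: float, warmup_steps: int) -> float:
    """Linear warmup followed by cosine decay (an illustrative schedule)."""
    if step < warmup_steps:
        # warmup: ramp linearly from 0 toward base_lr
        return base_lr * step / warmup_steps
    # cosine decay: from base_lr at the end of warmup down to 0 at total_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return 0.5 * (1 + math.cos(math.pi * progress)) * base_lr


# sample the schedule for a 100-step run with 10 warmup steps
for step in (0, 5, 10, 55, 100):
    print(step, round(learning_rate(step, total_steps=100, base_lr=0.1, warmup_steps=10), 4))
```

The warmup avoids large, unstable updates while the weights are still random. The cosine curve keeps the rate high for longer than a step schedule and then lowers it smoothly toward zero. In a real training loop, the returned value would be written into the optimizer's learning rate before each update.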