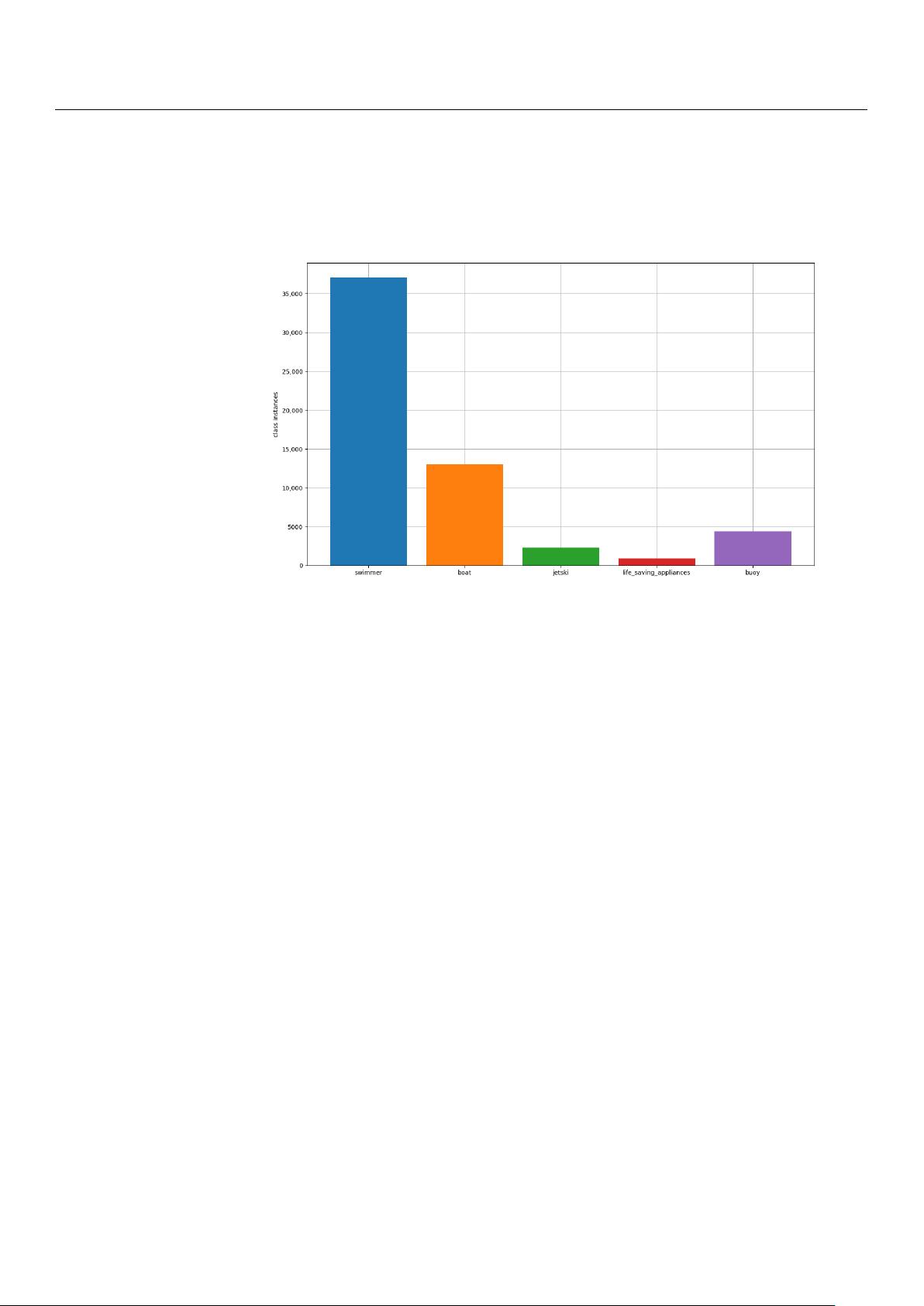

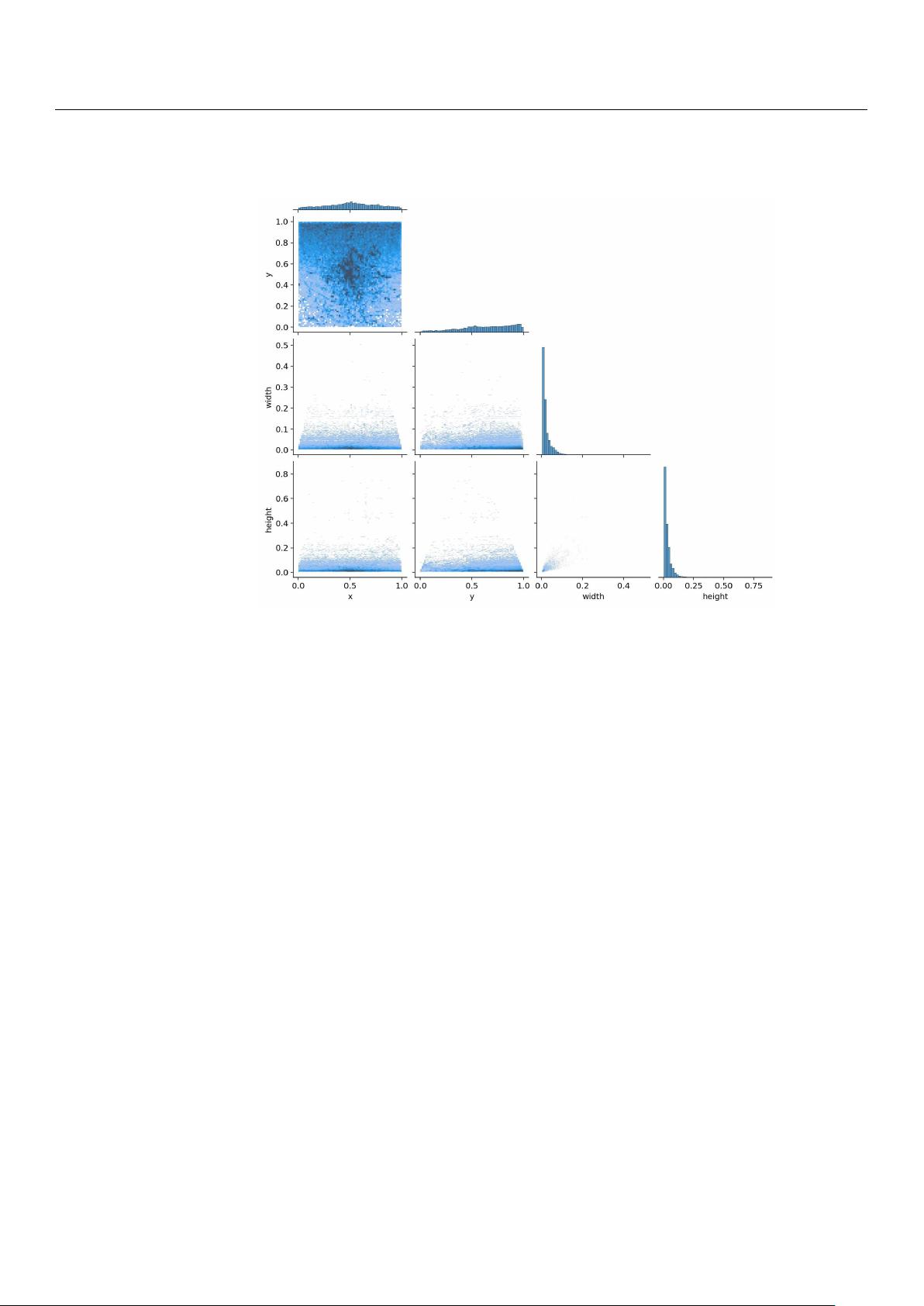

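Training complex deep learning architectures is a major challenge in certain domains, especially when the available datasets are limited and imbalanced. Real-time object detection in maritime environments using aerial images is a typical example. Although SeaDronesSee is the most extensive and complete dataset for this task, it suffers from severe class imbalance. To address this problem, we present POSEIDON, a data augmentation tool designed specifically for object detection datasets. Our method generates new training samples by combining objects and samples from the original training set, while using image metadata to make informed decisions. We evaluate our method on YOLOv5 and YOLOv8 and show that it outperforms other balancing techniques, such as error weighting, with overall improvements of 2.33% and 4.6%, respectively.

[YOLOv8 training and application] In specific domains such as real-time object detection in maritime environments, training deep learning models is very difficult, particularly when the dataset is limited and its class distribution is imbalanced. To address this problem, the paper introduces POSEIDON, a data augmentation tool designed for small object detection datasets.

In deep learning, the quality and diversity of the dataset are critical to model performance. In a domain like the maritime environment, however, a comprehensive and balanced object detection dataset is hard to obtain. SeaDronesSee is the most extensive dataset in this field, but its class imbalance limits model performance. POSEIDON compensates for this with its data augmentation strategy: it combines objects and samples from the original training set, and uses image metadata to make informed decisions, in order to generate new training samples.

Data augmentation is an effective way to enlarge the training set and improve a model's ability to generalize across conditions. POSEIDON builds on this and focuses on small object detection, which is especially important for analyzing aerial drone images of maritime scenes. By adjusting and extending the existing data in an informed way, the tool helps mitigate class imbalance and improves detection performance on rare classes.

According to the paper, POSEIDON was evaluated on YOLOv5 and YOLOv8, two popular real-time object detection frameworks. The YOLO family, YOLOv8 in particular, is known for efficient and accurate detection. The experiments show that POSEIDON improves model performance compared with balancing techniques such as error weighting: the overall improvement is 2.33% on YOLOv5 and 4.6% on YOLOv8, which supports its effectiveness against data imbalance.

POSEIDON offers a targeted way to improve model training on limited and imbalanced datasets. Researchers in similar fields can adopt the approach to improve their own object detection systems, especially when resources are limited.

In summary, by improving the dataset itself, the POSEIDON data augmentation tool strengthens the ability of YOLO models to handle small object detection and class imbalance. This improves accuracy and generalization, and it suggests a way to approach the difficulties deep learning faces in practical applications. As more tools and techniques of this kind appear, deep learning models may perform well even when dataset conditions are constrained.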
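The balancing idea described above (pasting objects of rare classes into existing training images, guided by image metadata) can be illustrated with a minimal sketch. This is not POSEIDON's actual implementation. The function names, the dictionary fields, the class names, and the use of flight altitude as the metadata field are all assumptions made for illustration, as is the rule that an object's apparent size is inversely proportional to altitude.

```python
import random
from collections import Counter


def instances_needed(labels):
    """Extra instances each class needs to match the most frequent class."""
    counts = Counter(labels)
    target = max(counts.values())
    return {cls: target - n for cls, n in counts.items()}


def rescale_box(width, height, src_altitude, dst_altitude):
    """Resize an object for an image taken at a different altitude.

    Assumption: same camera, apparent size inversely proportional to altitude.
    """
    factor = src_altitude / dst_altitude
    return max(1, round(width * factor)), max(1, round(height * factor))


def plan_pastes(objects, images, seed=0):
    """Plan paste operations that bring every class up to the majority count.

    objects: dicts with hypothetical keys 'cls', 'w', 'h', 'altitude'
    images:  dicts with hypothetical keys 'id', 'width', 'height', 'altitude'
    """
    rng = random.Random(seed)
    deficit = instances_needed([o["cls"] for o in objects])

    # Group source objects by class so rare classes can be sampled directly.
    by_class = {}
    for obj in objects:
        by_class.setdefault(obj["cls"], []).append(obj)

    plan = []
    for cls, missing in deficit.items():
        for _ in range(missing):
            src = rng.choice(by_class[cls])
            dst = rng.choice(images)
            w, h = rescale_box(src["w"], src["h"], src["altitude"], dst["altitude"])
            # Clamp to the target image, then pick a position fully inside it.
            w, h = min(w, dst["width"]), min(h, dst["height"])
            x = rng.randint(0, dst["width"] - w)
            y = rng.randint(0, dst["height"] - h)
            plan.append({"image_id": dst["id"], "cls": cls, "box": (x, y, w, h)})
    return plan


# Illustrative data only: three boats and one swimmer, two target images.
example_objects = [
    {"cls": "boat", "w": 60, "h": 30, "altitude": 20.0},
    {"cls": "boat", "w": 50, "h": 25, "altitude": 30.0},
    {"cls": "boat", "w": 40, "h": 20, "altitude": 40.0},
    {"cls": "swimmer", "w": 12, "h": 8, "altitude": 10.0},
]
example_images = [
    {"id": "img_a", "width": 640, "height": 480, "altitude": 20.0},
    {"id": "img_b", "width": 640, "height": 480, "altitude": 40.0},
]
example_plan = plan_pastes(example_objects, example_images)
```

The sketch only plans where pasted objects would go. A real pipeline would also need to copy the pixels, avoid overlaps with existing annotations, and write the updated label files, none of which is shown here.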
