没有合适的资源?快使用搜索试试~ 我知道了~

High-Performance and Scalable GPU Graph Traversal

需积分: 9 4 下载量 15 浏览量

2018-07-07

10:30:07

上传

评论

收藏 3.72MB PDF 举报

温馨提示

graph processing, 高性能计算。 Breadth-First Search (BFS) is a core primitive for graph traversal and a basis for many higher-level graph analysis algorithms. It is also representative of a class of parallel computations whose memory accesses and work distribution are both irregular and data dependent. Recent work has demonstrated the plausibility of GPU sparse graph traversal, but has tended to focus on asymptotically inefficient algorithms that perform poorly on graphs with nontrivial diameter.

资源推荐

资源详情

资源评论

i

i

i

i

i

i

i

i

14

High-Performance and Scalable GPU Graph Traversal

DUANE MERRILL and MICHAEL GARLAND, NVIDIA Corporation

and ANDREW GRIMSHAW, University of Virginia

Breadth-First Search (BFS) is a core primitive for graph traversal and a basis for many higher-level graph

analysis algorithms. It is also representative of a class of parallel computations whose memory accesses and

work distribution are both irregular and data dependent. Recent work has demonstrated the plausibility of

GPU sparse graph traversal, but has tended to focus on asymptotically inefficient algorithms that perform

poorly on graphs with nontrivial diameter.

We present a BFS parallelization focused on fine-grained task management constructed from efficient

prefix sum computations that achieves an asymptotically optimal O(|V|+|E|) gd work complexity. Our

implementation delivers excellent performance on diverse graphs, achieving traversal rates in excess of

3.3 billion and 8.3 billion traversed edges per second using single- and quad-GPU configurations, respec-

tively. This level of performance is several times faster than state-of-the-art implementations on both CPU

and GPU platforms.

Categories and Subject Descriptors: G.2.2 [Discrete Mathematics]: Graph Theory—Graph Algorithms;

D.1.3 [Programming Techniques]: Concurrent programming; F.2.2 [Analysis of Algorithms and

Problem Complexity]: Nonnumerical Algorithms and Problems—Computations on discrete structures,

Geometrical problems and computations

General Terms: Design, Algorithms, Performance

Additional Key Words and Phrases: Breadth-first search, GPU, graph algorithms, parallel algorithms, prefix

sum, graph traversal, sparse graphs

ACM Reference Format:

Merrill, D., Garland, M., and Grimshaw, A. 2015. High-performance and scalable GPU graph traversal. ACM

Trans. Parallel Comput. 1, 2, Article 14 (January 2015), 30 pages.

DOI:http://dx.doi.org/10.1145/2717511

1. INTRODUCTION

Algorithms for analyzing sparse relationships represented as graphs provide crucial

tools in many computational fields, ranging from genomics to electronic design au-

tomation to social network analysis. In this article, we explore the parallelization of

one fundamental graph algorithm on GPUs: breadth-first search (BFS). BFS is a com-

mon building block for more sophisticated graph algorithms, yet simple enough so

that we can analyze its behavior in depth. It is also used as a core computational ker-

nel in a number of benchmark suites, including Parboil [Stratton et al. 2012], Rodinia

[Che et al. 2009], and the emerging Graph500 supercomputer benchmark [Graph500

List 2011].

Contemporary processor architecture provides increasing parallelism in order to

deliver higher throughput while maintaining energy efficiency. Modern GPUs are at

the leading edge of this trend, provisioning tens of thousands of data-parallel threads.

D. Merrill was supported in part by a NVIDIA Graduate Fellowship.

Authors’ addresses: D. Merrill (corresponding author), M. Garland, NVIDIA Corporation; email:

duane.merrill@gmail.com; A. Grimshaw, University of Virginia, Charlottesville, VA.

Permission to make digital or hard copies of part or all of this work for personal or classroom use is granted

without fee provided that copies are not made or distributed for profit or commercial advantage and that

copies bear this notice and the full citation on the first page. Copyrights for third-party components of this

work must be honored. For all other uses, contact the Owner/Author.

2015 Copyright is held by the author/owner(s). 2329-4949/2015/01-ART14

DOI:http://dx.doi.org/10.1145/2717511

ACM Transactions on Parallel Computing, Vol. 1, No. 2, Article 14, Publication date: January 2015.

i

i

i

i

i

i

i

i

14:2 D. Merrill et al.

Despite their high computational throughput, GPUs might appear poorly suited for

sparse graph computation. In particular, BFS is representative of a class of algorithms

for which it is hard to obtain significantly better performance from parallelization.

Optimizing memory usage is nontrivial because memory access patterns are deter-

mined by the structure of the input graph, and parallelization f urther introduces

concerns of contention, load imbalance, and underutilization on multithreaded archi-

tectures [Agarwal et al. 2010; Leiserson and Schardl 2010; Xia and Prasanna 2009].

The wide data parallelism of GPUs can be particularly sensitive to these performance

issues.

Prior work on parallel graph algorithms has relied on two key architectural fea-

tures for performance: the first is multithreading and overlapped computation for

hiding memory latency, and the second is fine-grained synchronization, specifically

atomic read-modify-write operations. Atomic mechanisms are convenient for coor-

dinating the dynamic placement of data into shared data structures and for arbi-

trating contended status updates [Agarwal et al. 2010; Bader and Madduri 2006a;

Bader et al. 2005].

Modern GPU architectures provide both of these features, however, serializations

from atomic collisions are particularly expensive for GPUs in terms of efficiency and

performance. In general, mutual exclusion does not scale to thousands of parallel

processing elements. Furthermore, the cost of fine-grained and dynamic serialization

between threads within the same GPU SIMD unit is greater than that of more tra-

ditional, overlapped SMT threads. For example, all SIMD lanes may be made to wait

while the collisions of only a few are serialized.

For machines with wide data parallelism, we argue that software-based prefix sum

computations [Blelloch 1990; Hillis and Steele 1986] are a more suitable approach for

cooperative data placement. Prefix sum is a bulk-synchronous algorithmic primitive

that can be used to compute scatter offsets for concurrent threads, given their dynamic

allocation requirements. Efficient GPU prefix sums [Merrill and Grimshaw 2009] allow

to reorganize sparse and uneven workloads into dense and uniform ones in all phases

of graph traversal.

Our work as described in this article makes contributions in the following areas.

— Parallelization strategy. We present a GPU BFS parallelization that performs an

asymptotically optimal linear amount of work. It is the first to incorporate fine-

grained parallel adjacency list expansion. We also introduce local duplicate detection

techniques for avoiding race conditions that create redundant work. We demonstrate

that our approach delivers high performance on a broad spectrum of structurally

diverse graphs and, to our knowledge, we also describe the first design for multi-

GPU graph traversal.

— Empirical performance characterization. We present detailed analyses that isolate

and analyze the expansion and contraction aspects of BFS throughout the traversal

process. We reveal that serial and warp-centric expansion techniques described by

prior work significantly underutilize the GPU for important graph genres, and also

show that the fusion of neighbor expansion and inspection within the same kernel

often yields worse performance than performing them s eparately.

— High performance. We demonstrate that our methods deliver excellent performance

on a diverse body of real-world graphs. Our implementation achieves traversal rates

in excess of 3.3 billion and 8.3 billion traversed edges per second (TE/s) for single-

and quad-GPU configurations, respectively. In context, recent state-of-the-art paral-

lel implementations achieve 0.7 billion and 1.3 billion TE/s for similar datasets on

single- and quad-socket multicore processors [Agarwal et al. 2010]. Our implemen-

tations are publicly available via the B40C Project [Merrill 2011].

ACM Transactions on Parallel Computing, Vol. 1, No. 2, Article 14, Publication date: January 2015.

i

i

i

i

i

i

i

i

High-Performance and Scalable GPU Graph Traversal 14:3

2. BACKGROUND

Modern NVIDIA GPU processors consist of tens of multiprocessor cores, each manag-

ing on the order of a thousand hardware-scheduled threads. Each multiprocessor core

employs data-parallel SIMD (single instruction, multiple data) techniques in which a

single instruction stream is executed by a fixed-size grouping of threads called a warp.

A cooperative thread array (or CTA) is a group of threads that will be co-located on

the same multiprocessor and share a local scratch memory. Parallel threads are used

to execute a single program, or kernel. A sequence of kernel invocations is bulk syn-

chronous: each kernel is initially presented with a consistent view of the results from

the previous.

The efficiency of GPU architecture stems from the bulk-synchronous and SIMD as-

pects of the machine model. They facilitate excellent processor utilization on uniform

workloads having regularly structured computation. When the computation becomes

dynamic and varied, mismatches with the underlying architecture can result in signif-

icant performance penalties. For example, performance can be degraded by irregular

memory access patterns that cannot be coalesced, or that result in arbitrary mem-

ory bank conflicts, control-flow divergences between SIMD warp threads that result in

thread serialization, and load imbalances between barrier synchronization points that

result in resource underutilization [Owens et al. 2008]. I n this work, we make exten-

sive use of local prefix sum computation as a foundation for reorganizing sparse and

uneven workloads into dense and uniform ones.

2.1. Breadth-First Search

BFS is a graph traversal algorithm that systematically explores every reachable ver-

tex from a given source, where closer vertices are visited first. Fundamental uses of

BFS include: identifying all of the connected components within a graph, finding the

diameter of a tree, and testing a graph for bipartiteness [Cormen et al. 2001]. More so-

phisticated problems incorporating BFS include: identifying the reachable set of heap

items during garbage collection [Cheney 1970], belief propagation in statistical in-

ference [Gonzalez et al. 2009], finding community structure in networks [Newman

and Girvan 2004], and computing the maximum flow/minimum cut for a given graph

[Hussein et al. 2007].

We consider graphs of the form G = (V, E)withasetV of n vertices and a set E of

m directed edges. Given a source vertex v

s

, our goal is to traverse the vertices of G in

breadth-first order starting at v

s

. Each newly discovered vertex v

i

will be labeled by:

(a) its distance d

i

from v

s

and/or (b) the predecessor vertex p

i

immediately preceding

it on the shortest path to v

s

. For simplicity, we identify the vertices v

0

.. v

n−1

using

integer indices. The pair (v

i

, v

j

) indicates a directed edge in the graph from v

i

→ v

j

,

and the adjacency list A

i

=

v

j

|(v

i

, v

j

) 2 E

is the set of neighboring vertices incident

on vertex v

i

. We treat undirected graphs as symmetric directed graphs containing both

(v

i

, v

j

) and (v

j

, v

i

) for each undirected edge. In this article, all graph sizes and traversal

rates are measured in terms of directed edge counts.

We represent the graph using an adjacency matrix A whose rows are the adjacency

lists A

i

. The number of edges within sparse graphs is typically only a constant factor

larger than n. We use the well-known compressed sparse row (CSR) format to store

the graph in memory consisting of two arrays.

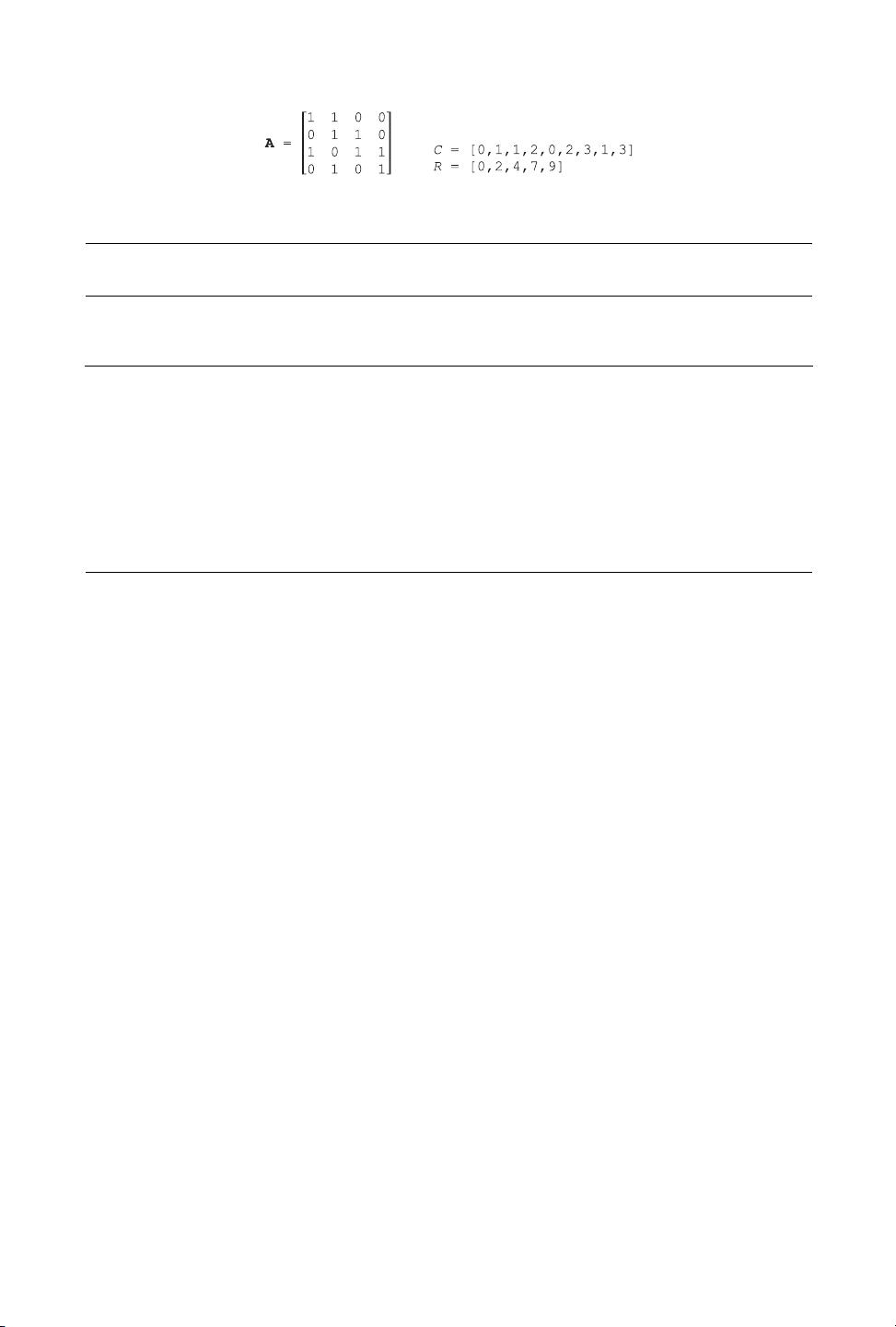

Figure 1 provides a simple example, where the column-indices array C is formed

from the set of the adjacency lists concatenated into a single array of m integers. The

row-offsets R array contains n + 1 integers, and entry R[ i] is the index in C of the

adjacency list A

i

.

ACM Transactions on Parallel Computing, Vol. 1, No. 2, Article 14, Publication date: January 2015.

i

i

i

i

i

i

i

i

14:4 D. Merrill et al.

Fig. 1. Example CSR representation: column-indices array C and row-offsets array R comprise the

adjacency matrix A.

ALGORITHM 1 The simple sequential breadth-first search algorithm for marking vertex distances from

the source s. Alternatively, a shortest-paths search tree can be constructed by marking i as j ’s predecessor

in line 11.

Input: Vertex set V, row-offsets array R, column-indices array C, source vertex s

Output: Array dist[0..n-1] with dist[ v] holding the distance from s to v

Functions: Enqueue(val) inserts val at the end of the queue instance. Dequeue() returns the front

element of the queue instance.

1 Q := {}

2 for i in V:

3 dist[i] :=

4 dist[s] := 0

5 Q.Enqueue(s)

6 while (Q != {}) :

7 i = Q.Dequeue()

8 for offset in R[i] .. R[i+1]-1 :

9 j := C[offset]

10 if (dist[j] == )

11 dist[j] := dist[i] + 1;

12 Q.Enqueue(j)

We store graph edges in the order they are defined. We do not perform any offline pre-

processing in order to improve locality of reference, improve load balance, or eliminate

sparse memory references. Such strategies might include sorting neighbors within

their adjacency lists, sorting vertices into a space-filling curve and remapping their

corresponding vertex identifiers, splitting up vertices having large adjacency lists, en-

coding adjacency row-offset and length information into vertex identifiers, removing

duplicate edges, singleton vertices, and self-loops, etc.

Algorithm 1 presents the standard sequential BFS method. It operates by circulat-

ing the vertices of the graph through a FIFO queue that is initialized with v

s

[Cormen

et al. 2001]. As vertices are dequeued, their neighbors are examined, and unvisited

neighbors labeled with their distance and/or predecessor and enqueued for later pro-

cessing. This algorithm performs linear O(m + n) work since each vertex is labeled

exactly once and each edge is traversed exactly once.

2.2. Parallel Breadth-First Search

The FIFO ordering of the sequential algorithm forces it to label vertices in increasing

order of depth, where each depth level is fully explored before the next. Most parallel

BFS algorithms are level-synchronous, that is, each level may be processed in parallel,

so as long as the sequential ordering of levels is preserved. An implicit race condition

can exist where multiple tasks may concurrently discover a vertex v

j

. This is generally

considered benign since all such contending tasks would apply the same d

j

andgivea

valid value of p

j

.

Structurally different methods may be more suitable for graphs with very large di-

ameters, such as, algorithms based on the method of Ullman and Yannakakis [1990];

such alternatives are beyond the scope of this article.

As illustrated in Figure 2, each iteration of a level-synchronous method identifies

both an edge and vertex frontier. The edge frontier is the set of all edges to be tra-

versed during this iteration or, equivalently, the set of all A

i

where v

i

was marked in

ACM Transactions on Parallel Computing, Vol. 1, No. 2, Article 14, Publication date: January 2015.

i

i

i

i

i

i

i

i

High-Performance and Scalable GPU Graph Traversal 14:5

Fig. 2. Example BFS frontier evolution from source vertex v

0

. For each vertex, the distance from the source

is the BFS iteration in which it appeared in the vertex frontier.

ALGORITHM 2 A simple quadratic work

vertex-oriented BFS parallelization.

Input:Vertex set V, row-offsets array R,

column-indices array C, source vertex s

Output:Array dist[0..n-1] with dist[ v]

holding the distance from s to v

1 parallel for (i in V):

2 dist[i] :=

3 dist[s] := 0

4 iteration := 0

5 do :

6 done := true

7 parallel for (i in V) :

8 if (dist[i] == iteration)

9 done := false

10 for (offset in R[i] .. R[i+1]-1) :

11 j := C[offset]

12 dist[j] = iteration + 1

13 iteration++

while (!done)

ALGORITHM 3 A linear work BFS paralleliza-

tion constructed using a global vertex-frontier

queue.

Input: Vertex set V, row-offsets array R,

column-indices array C, source vertex s,

queues

Output: Array dist[0..n-1] with dist[ v]

holding the distance from s to v

Functions: LockedEnqueue(val) safely

inserts val at the end of the queue instance

1 parallel for (i in V) :

2 dist[i] :=

3 dist[s] := 0

4 iteration := 0

5 inQ := {}

6 inQ.LockedEnqueue(s)

7 while (inQ != {}) :

8 outQ := {}

9 parallel for (i in inQ) :

10 for (offset in R[i] .. R[i+1]-1) :

11 j := C[offset]

12 if (dist[j] == )

13 dist[j] = iteration + 1

14 outQ.LockedEnqueue(j)

15 iteration++

16 inQ := outQ

the previous iteration. The vertex frontier, by contrast is t he unique subset of such

neighbors that are unmarked and which will be labeled and expanded for the next

iteration. Each BFS iteration comprises two logical phases that realize these frontiers.

(1) Neighbor expansion. The vertices in the vertex frontier are expanded into an edge

frontier of neighboring vertex identifiers.

(2) Status lookup and filtering. The neighbor identifiers in the edge frontier are con-

tracted into a vertex frontier of unvisited vertices.

Quadratic Work Parallelizations. The simplest parallel BFS algorithms inspect ev-

ery edge or, at a minimum, every vertex during every iteration. These methods per-

form a quadratic amount of work. A vertex v

j

is marked when a task discovers an edge

v

i

→ v

j

, where v

i

has been marked and v

j

has not. As Algorithm 2 illustrates, vertex-

oriented variants must subsequently expand and mark the neighbors of v

j

. Their work

complexity is O(n

2

+ m) as there may n BFS iterations in the worst case.

Quadratic parallelization strategies have been used by almost all prior GPU

implementations. The static assignment of tasks to vertices (or edges) trivially maps

to the data-parallel GPU machine model, and each thread’s computation is completely

ACM Transactions on Parallel Computing, Vol. 1, No. 2, Article 14, Publication date: January 2015.

剩余29页未读,继续阅读

资源评论

qq_30051413

- 粉丝: 0

- 资源: 6

上传资源 快速赚钱

我的内容管理

展开

我的内容管理

展开

我的资源

快来上传第一个资源

我的资源

快来上传第一个资源

我的收益 登录查看自己的收益

我的收益 登录查看自己的收益 我的积分

登录查看自己的积分

我的积分

登录查看自己的积分

我的C币

登录后查看C币余额

我的C币

登录后查看C币余额

我的收藏

我的收藏  我的下载

我的下载  下载帮助

下载帮助

前往需求广场,查看用户热搜

前往需求广场,查看用户热搜最新资源

- 基于Kotlin语言的Android开发工具类集合源码

- 零延迟 DirectX 11 扩展实用程序.zip

- 基于Java的语音识别系统设计源码

- 基于Java和HTML的yang_home766个人主页设计源码

- 基于Java与前端技术的全国实时疫情信息网站设计源码

- 基于鸿蒙系统的HarmonyHttpClient设计源码,纯Java实现类似OkHttp的HttpNet框架与优雅的Retrofit注解解析

- 基于HTML和JavaScript的廖振宇图书馆前端设计源码

- 基于Java的Android开发工具集合源码

- 通过 DirectX 12 Hook (kiero) 实现通用 ImGui.zip

- 基于Java开发的YY网盘个人网盘设计源码

资源上传下载、课程学习等过程中有任何疑问或建议,欢迎提出宝贵意见哦~我们会及时处理!

点击此处反馈

安全验证

文档复制为VIP权益,开通VIP直接复制

信息提交成功

信息提交成功