propose one way to address this limitation.

Specifically, we rely on graph convolutional networks (GCNs) (Duvenaud et al., 2015; Kipf and Welling, 2017; Kearnes et al., 2016), a recent class of multilayer neural networks operating on graphs. For every node in the graph (in our case, a word in a sentence), a GCN encodes relevant information about its neighborhood as a real-valued feature vector. GCNs have been studied largely in the context of undirected, unlabeled graphs. We introduce a version of GCNs suited to modeling syntactic dependency structures and, more generally, applicable to labeled directed graphs.

A one-layer GCN encodes only information about immediate neighbors, and K layers are needed to encode K-order neighborhoods (i.e., information about nodes at most K hops away). This contrasts with recurrent and recursive neural networks (Elman, 1990; Socher et al., 2013), which, at least in theory, can capture statistical dependencies across unbounded paths in a tree or in a sequence. However, as we will further discuss in Section 3.3, this is not a serious limitation when GCNs are used in combination with encoders based on recurrent networks (LSTMs). When we stack GCNs on top of LSTM layers, we obtain a substantial improvement over an already state-of-the-art LSTM SRL model, resulting in the best reported scores on the standard benchmark (CoNLL-2009) for both English and Chinese.¹

Interestingly, again unlike recursive neural networks, GCNs do not constrain the graph to be a tree. We believe that there are many applications in NLP where GCN-based encoders of sentences or even documents can be used to incorporate knowledge about linguistic structures (e.g., representations of syntax, semantics or discourse).

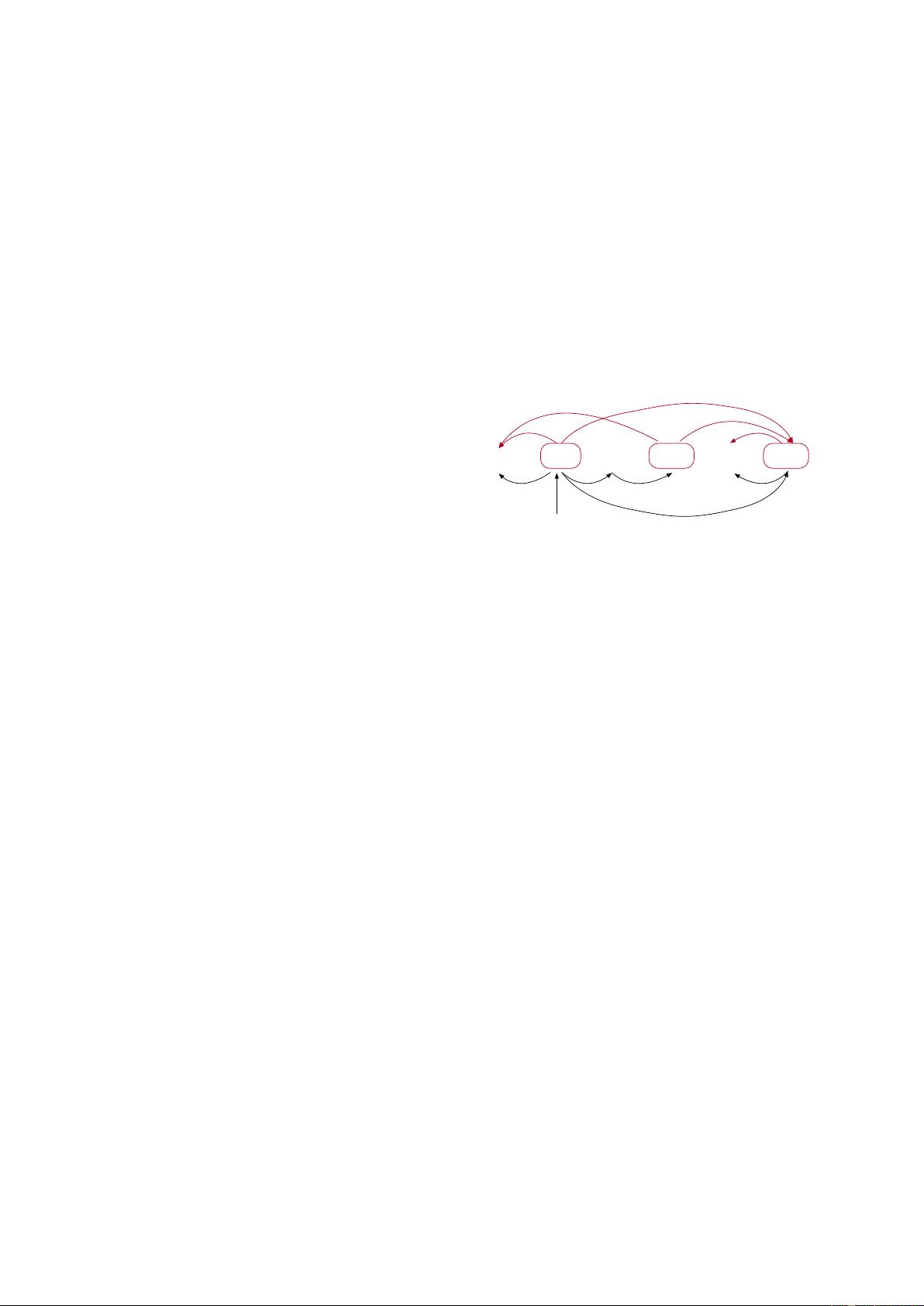

For example, GCNs can take as input combined syntactic-semantic graphs (e.g., the entire graph from Figure 1) and be used within downstream tasks such as machine translation or question answering. However, we leave this for future work and here focus solely on SRL.

The contributions of this paper can be summarized as follows:

• we are the first to show that GCNs are effective for NLP;

• we propose a generalization of GCNs suited to encoding syntactic information at the word level;

• we propose a GCN-based SRL model and obtain state-of-the-art results on the English and Chinese portions of the CoNLL-2009 dataset;

• we show that bidirectional LSTMs and syntax-based GCNs have complementary modeling power.

¹ The code is available at https://github.com/diegma/neural-dep-srl.

2 Graph Convolutional Networks

In this section, we describe the GCNs of Kipf and Welling (2017). Please refer to Gilmer et al. (2017) for a comprehensive overview of GCN variants.

GCNs are neural networks that operate on graphs and induce features of nodes (i.e., real-valued vectors or embeddings) based on properties of their neighborhoods. Kipf and Welling (2017) showed them to be very effective for node classification: the classifier was estimated jointly with a GCN, so that the induced node features were informative for the node classification problem. Depending on how many layers of convolution are used, GCNs can capture information only about immediate neighbors (with one layer of convolution) or about any nodes at most K hops away (if K layers are stacked on top of each other).
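To make the K-hop claim concrete, the sketch below tracks which nodes can influence a given node's representation as layers are stacked. It is our own illustration, not code from the paper: it assumes an undirected graph stored as an adjacency dictionary, adds self-loops as Kipf and Welling (2017) do, and the function names and the five-node chain are hypothetical.

```python
from typing import Dict, Set

# Undirected graph as an adjacency dictionary: node -> set of neighbors.
Graph = Dict[int, Set[int]]


def add_self_loops(graph: Graph) -> Graph:
    """Return a copy of `graph` in which every node also neighbors itself."""
    return {v: set(nbrs) | {v} for v, nbrs in graph.items()}


def receptive_field(graph: Graph, v: int, num_layers: int) -> Set[int]:
    """Nodes whose input features can influence h_v after `num_layers` layers.

    Each layer computes h_u from the previous-layer representations of N(u)
    (self-loop included), so the set of influencing nodes grows by one hop
    per layer: after k layers it is exactly the nodes at most k hops from v.
    """
    g = add_self_loops(graph)
    field = {v}
    for _ in range(num_layers):
        field = set().union(*(g[u] for u in field))
    return field


# Hypothetical toy graph: a five-node chain 0 - 1 - 2 - 3 - 4.
chain: Graph = {0: {1}, 1: {0, 2}, 2: {1, 3}, 3: {2, 4}, 4: {3}}

for k in range(4):
    print(k, sorted(receptive_field(chain, 0, k)))
```

Node 4 is four hops from node 0, so it enters node 0's receptive field only once four layers are stacked; with three layers, the loop above stops at `[0, 1, 2, 3]`.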

More formally, consider an undirected graph $G = (V, E)$, where $V$ ($|V| = n$) and $E$ are sets of nodes and edges, respectively. Kipf and Welling (2017) assume that the edges include all self-loops, i.e., $(v, v) \in E$ for any $v$. We can define a matrix $X \in \mathbb{R}^{m \times n}$ whose columns $x_v \in \mathbb{R}^m$ ($v \in V$) encode node features. These vectors can either encode genuine features (e.g., the vector can encode the title of a paper if citation graphs are considered) or be one-hot vectors. The node representation, encoding information about its immediate neighbors, is computed as

$$h_v = \mathrm{ReLU}\left( \sum_{u \in \mathcal{N}(v)} \left( W x_u + b \right) \right), \qquad (1)$$

where $W \in \mathbb{R}^{m \times m}$ and $b \in \mathbb{R}^m$ are a weight matrix and a bias, respectively; $\mathcal{N}(v)$ is the set of neighbors of $v$; and ReLU is the rectified linear unit activation function.² Note that $v \in \mathcal{N}(v)$ (because of self-loops), so the input feature representation of $v$ (i.e., $x_v$) affects its induced representation $h_v$.
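The following is a minimal pure-Python sketch of Eq. (1), written without NumPy so that it stays self-contained. It is an illustration under our own assumptions, not the released implementation: the three-node path graph, the one-hot features and the values of $W$ and $b$ are hypothetical, as are the helper names `neighborhoods`, `matvec` and `gcn_layer`. `X[v]` stores the column $x_v$ of $X$, and, as in Eq. (1), no normalization is applied.

```python
from typing import Dict, Iterable, List, Set, Tuple

Vector = List[float]
Matrix = List[List[float]]  # row-major: W[i][j]


def neighborhoods(n: int, edges: Iterable[Tuple[int, int]]) -> Dict[int, Set[int]]:
    """Build N(v) for an undirected graph, adding the self-loop (v, v) for every v."""
    nbrs: Dict[int, Set[int]] = {v: {v} for v in range(n)}
    for u, v in edges:
        nbrs[u].add(v)
        nbrs[v].add(u)
    return nbrs


def matvec(W: Matrix, x: Vector) -> Vector:
    """Matrix-vector product W x."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in W]


def gcn_layer(X: List[Vector], nbrs: Dict[int, Set[int]],
              W: Matrix, b: Vector) -> List[Vector]:
    """Eq. (1) without normalization: h_v = ReLU(sum_{u in N(v)} (W x_u + b)).

    X[v] holds the column x_v of the feature matrix X (length m).
    """
    H: List[Vector] = []
    for v in range(len(X)):
        pre = [0.0] * len(b)
        for u in nbrs[v]:
            wx = matvec(W, X[u])
            # b sits inside the sum, so it is added once per neighbor.
            pre = [p + wx_i + b_i for p, wx_i, b_i in zip(pre, wx, b)]
        H.append([max(0.0, p) for p in pre])  # element-wise ReLU
    return H


# Hypothetical example: the path 0 - 1 - 2 with one-hot features (m = n = 3).
X = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
W = [[1.0, 0.0, 0.0], [0.0, 2.0, 0.0], [0.0, 0.0, -1.0]]
b = [0.0, 0.0, 0.25]
H = gcn_layer(X, neighborhoods(3, [(0, 1), (1, 2)]), W, b)
print(H)  # [[1.0, 2.0, 0.5], [1.0, 2.0, 0.0], [0.0, 2.0, 0.0]]
```

Node 1 has three neighbors (itself included), so its third coordinate receives $3 \times 0.25$ from the bias but $-1$ from $W x_2$, and ReLU clips the negative sum to zero. Node 0 does not neighbor node 2, so its third coordinate keeps the positive contribution $2 \times 0.25$.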

² We dropped normalization factors used in Kipf and Welling (2017), as they are not used in our syntactic GCNs.
