
The original YOLOv1 paper, useful for understanding the YOLO framework.


You Only Look Once: Unified, Real-Time Object Detection

Joseph Redmon∗, Santosh Divvala∗†, Ross Girshick¶, Ali Farhadi∗†

University of Washington∗, Allen Institute for AI†, Facebook AI Research¶

http://pjreddie.com/yolo/

Abstract

We present YOLO, a new approach to object detection. Prior work on object detection repurposes classifiers to perform detection. Instead, we frame object detection as a regression problem to spatially separated bounding boxes and associated class probabilities. A single neural network predicts bounding boxes and class probabilities directly from full images in one evaluation. Since the whole detection pipeline is a single network, it can be optimized end-to-end directly on detection performance.
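To make "bounding boxes and class probabilities from full images in one evaluation" concrete, here is a minimal decoding sketch. It assumes the grid parameterization that the full paper introduces in a later section (an S × S grid, B boxes per cell, C classes, with S = 7, B = 2, C = 20 for PASCAL VOC) and a class-specific score equal to box confidence times conditional class probability. The per-cell memory layout and the `decode` helper are hypothetical, chosen for readability; keeping only the top class per cell is a simplification.

```python
from typing import List, Tuple

# Assumed sizes: the PASCAL VOC setting given later in the full paper.
S, B, C = 7, 2, 20

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) as image fractions
Detection = Tuple[Box, int, float]       # (box, class_id, class-specific score)


def decode(pred: List[List[List[float]]], s: int = S, b: int = B, c: int = C) -> List[Detection]:
    """Turn one s x s x (b*5 + c) prediction into (box, class_id, score) tuples.

    Hypothetical per-cell layout: b groups of (x, y, w, h, conf), then c class probabilities.
    """
    detections: List[Detection] = []
    for row in range(s):
        for col in range(s):
            cell = pred[row][col]
            class_probs = cell[b * 5 : b * 5 + c]
            # Simplification: keep only the most likely class for each cell.
            best = max(range(c), key=lambda k: class_probs[k])
            for j in range(b):
                x, y, w, h, conf = cell[j * 5 : j * 5 + 5]
                # (x, y) is the box centre relative to its cell; map it to image fractions.
                cx, cy = (col + x) / s, (row + y) / s
                box = (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)
                # Class-specific score = box confidence * conditional class probability.
                detections.append((box, best, conf * class_probs[best]))
    return detections


# Usage: an otherwise-empty prediction with one confident box in the centre cell.
pred = [[[0.0] * (B * 5 + C) for _ in range(S)] for _ in range(S)]
pred[3][3][0:5] = [0.5, 0.5, 0.2, 0.4, 0.9]  # x, y, w, h, conf of the first box
pred[3][3][B * 5 + 11] = 0.8                 # probability of class 11
top = max(decode(pred), key=lambda d: d[2])
```

Every cell always emits B boxes, so one forward pass yields a fixed S · S · B candidates; thresholding and duplicate removal happen afterwards.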

Our unified architecture is extremely fast. Our base YOLO model processes images in real-time at 45 frames per second. A smaller version of the network, Fast YOLO, processes an astounding 155 frames per second while still achieving double the mAP of other real-time detectors. Compared to state-of-the-art detection systems, YOLO makes more localization errors but is less likely to predict false positives on background. Finally, YOLO learns very general representations of objects. It outperforms other detection methods, including DPM and R-CNN, when generalizing from natural images to other domains like artwork.

1. Introduction

Humans glance at an image and instantly know what objects are in the image, where they are, and how they interact. The human visual system is fast and accurate, allowing us to perform complex tasks like driving with little conscious thought. Fast, accurate algorithms for object detection would allow computers to drive cars without specialized sensors, enable assistive devices to convey real-time scene information to human users, and unlock the potential for general purpose, responsive robotic systems.

Current detection systems repurpose classifiers to perform detection. To detect an object, these systems take a classifier for that object and evaluate it at various locations and scales in a test image. Systems like deformable parts models (DPM) use a sliding window approach where the classifier is run at evenly spaced locations over the entire image [10].
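The cost of the sliding-window approach comes from the number of windows the classifier must visit. The sketch below is a generic enumeration, not DPM's actual implementation (which works on a feature pyramid); the image size, window size, stride, and scales are hypothetical values chosen only to illustrate the count.

```python
from typing import Iterator, Sequence, Tuple


def sliding_windows(img_w: int, img_h: int, win: int, stride: int,
                    scales: Sequence[float]) -> Iterator[Tuple[int, int, int]]:
    """Yield (x, y, size) square windows at evenly spaced positions for each scale."""
    for scale in scales:
        size = int(win * scale)
        if size > img_w or size > img_h:
            continue  # window larger than the image at this scale: nothing to evaluate
        for y in range(0, img_h - size + 1, stride):
            for x in range(0, img_w - size + 1, stride):
                yield (x, y, size)


# Hypothetical setting: 448 x 448 image, 64-pixel base window, 16-pixel stride, two scales.
num_windows = sum(1 for _ in sliding_windows(448, 448, 64, 16, (1.0, 2.0)))
```

Here the classifier would run 25 × 25 + 21 × 21 = 1066 times per object class for a single image, which is the repeated per-window work that a single-pass detector avoids.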

More recent approaches like R-CNN use region proposal

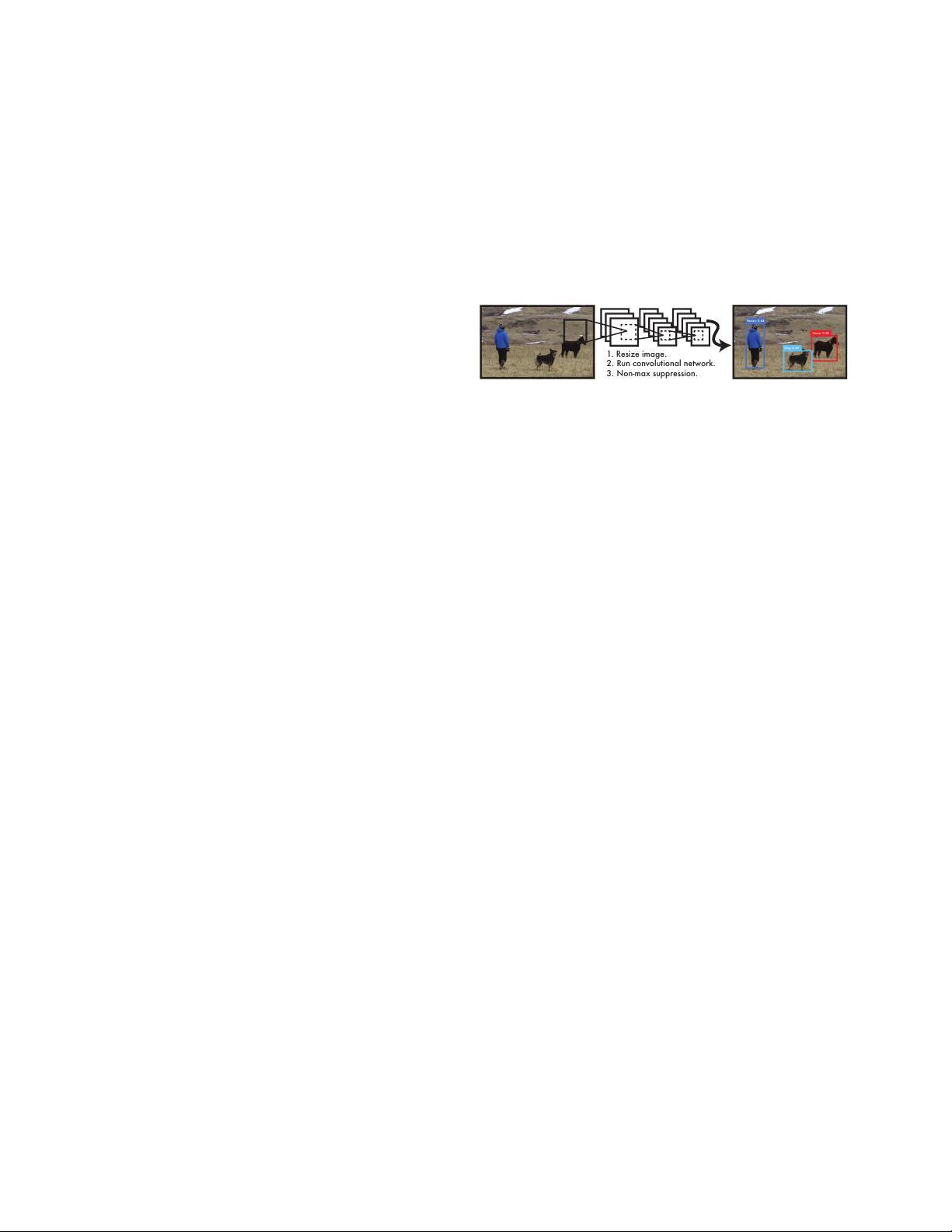

1. Resize image.

2. Run convolutional network.

3. Non-max suppression.

Dog: 0.30

Person: 0.64

Horse: 0.28

Figure 1: The YOLO Detection System. Processing images

with YOLO is simple and straightforward. Our system (1) resizes

the input image to 448 × 448, (2) runs a single convolutional net-

work on the image, and (3) thresholds the resulting detections by

the model’s confidence.

methods to first generate potential bounding boxes in an im-

age and then run a classifier on these proposed boxes. After

classification, post-processing is used to refine the bound-

ing boxes, eliminate duplicate detections, and rescore the

boxes based on other objects in the scene [13]. These com-

plex pipelines are slow and hard to optimize because each

individual component must be trained separately.
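Figure 1's final stage (thresholding by confidence, labelled non-max suppression in the figure) and the "eliminate duplicate detections" step of older pipelines can both be sketched as a confidence filter followed by greedy, per-class non-max suppression based on intersection over union (IoU). This is a common textbook variant, not necessarily the authors' exact post-processing; the threshold defaults and the `postprocess` helper are hypothetical.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)
Detection = Tuple[Box, int, float]       # (box, class_id, score)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def postprocess(dets: List[Detection], conf_threshold: float = 0.2,
                iou_threshold: float = 0.5) -> List[Detection]:
    """Drop low-confidence detections, then greedily suppress same-class duplicates."""
    candidates = sorted((d for d in dets if d[2] >= conf_threshold),
                        key=lambda d: d[2], reverse=True)
    kept: List[Detection] = []
    for det in candidates:
        # Keep det unless a higher-scoring kept box of the same class overlaps it heavily.
        if all(k[1] != det[1] or iou(k[0], det[0]) < iou_threshold for k in kept):
            kept.append(det)
    return kept
```

Suppression is done per class, so two heavily overlapping boxes survive when they carry different labels (for example a person riding a horse).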

We reframe object detection as a single regression problem, straight from image pixels to bounding box coordinates and class probabilities. Using our system, you only look once (YOLO) at an image to predict what objects are present and where they are.
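Framing detection as regression means each ground-truth box has to be turned into numeric targets. The sketch below follows the scheme the full paper describes later (the grid cell containing an object's centre is responsible for it; the centre is expressed relative to that cell, width and height relative to the whole image), with S = 7 assumed. The `encode_box` helper and its pixel-space input format are illustrative assumptions, and the paper's square-root treatment of width and height in the loss is deliberately omitted.

```python
from typing import Tuple


def encode_box(box: Tuple[float, float, float, float], img_w: float, img_h: float,
               s: int = 7) -> Tuple[int, int, float, float, float, float]:
    """Map a pixel-space box (x_min, y_min, x_max, y_max) to a grid regression target.

    Returns (row, col, x, y, w, h): the responsible grid cell, the box centre's offset
    inside that cell (0..1), and width/height as fractions of the image.
    """
    x_min, y_min, x_max, y_max = box
    cx = (x_min + x_max) / 2 / img_w  # centre as a fraction of image width
    cy = (y_min + y_max) / 2 / img_h  # centre as a fraction of image height
    # Clamp so a centre lying exactly on the right/bottom edge stays inside the grid.
    col = min(int(cx * s), s - 1)
    row = min(int(cy * s), s - 1)
    x, y = cx * s - col, cy * s - row
    w, h = (x_max - x_min) / img_w, (y_max - y_min) / img_h
    return row, col, x, y, w, h


# A box centred in a 448 x 448 input lands in the middle cell of a 7 x 7 grid.
target = encode_box((160, 96, 288, 352), 448, 448)
```

Decoding reverses these steps: the image-relative centre is recovered as (col + x) / S and (row + y) / S.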

YOLO is refreshingly simple: see Figure 1. A single convolutional network simultaneously predicts multiple bounding boxes and class probabilities for those boxes. YOLO trains on full images and directly optimizes detection performance. This unified model has several benefits over traditional methods of object detection.

First, YOLO is extremely fast. Since we frame detection as a regression problem we don’t need a complex pipeline. We simply run our neural network on a new image at test time to predict detections. Our base network runs at 45 frames per second with no batch processing on a Titan X GPU and a fast version runs at more than 150 fps. This means we can process streaming video in real-time with less than 25 milliseconds of latency. Furthermore, YOLO achieves more than twice the mean average precision of other real-time systems. For a demo of our system running in real-time on a webcam please see our project webpage: http://pjreddie.com/yolo/.
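The latency figure follows from the frame rates by simple arithmetic: with no batching, one image is processed at a time, so the per-frame time is 1000 / fps milliseconds. The sketch below checks this for the 45 fps base model and the 155 fps Fast YOLO quoted in the abstract; it ignores camera capture and pre-processing time, which real end-to-end latency would also include.

```python
def frame_time_ms(fps: float) -> float:
    """Per-frame processing time in milliseconds at a given unbatched throughput."""
    return 1000.0 / fps


for name, fps in [("YOLO", 45), ("Fast YOLO", 155)]:
    print(f"{name}: {fps} fps -> {frame_time_ms(fps):.1f} ms per frame")
```

At 45 fps each frame takes about 22.2 ms, which is consistent with the stated bound of less than 25 milliseconds; Fast YOLO needs about 6.5 ms per frame.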

Second, YOLO reasons globally about the image when


arXiv:1506.02640v5 [cs.CV] 9 May 2016
