Eötvös Loránd University

Faculty of Science

Institute of Mathematics

Neural network-based control of a non-linear dynamical system

Supervisor: Dr. Ferenc Izsák, Habil. Associate Professor

Author: Károly Csurilla, Postgraduate student

Budapest, 2023

Abstract

This thesis aims to be an entry point to reinforcement learning control. We apply the DDPG (Deep Deterministic Policy Gradient) algorithm to the cart pole system, a classical non-linear dynamical system often used for benchmarking control algorithms. The resulting MATLAB framework contains the end-to-end system specification, from the derivation of its differential equations (as a proxy for a "real" system) to the real-time interactive visualisation of its trajectories. To improve training efficiency, special attention was paid to the trajectory generation and the reward structure.


Contents

1 Introduction
2 Reinforcement learning foundations
2.1 Markov decision process
2.2 Value functions
2.3 Deterministic Gradient Policy Theorem
2.4 DDPG algorithm
3 Cart pole environment
3.1 Dynamical model
3.2 Trajectory
3.3 Environment signals
3.4 Reward
4 Agents
4.1 Agent architecture
4.2 Training process
4.3 Simulation results
4.3.1 Stable agent
4.3.2 Unstable agent
5 Summary
Bibliography

Chapter 1

Introduction

Control engineering is an engineering discipline that focuses on actuation methods that make a dynamical system converge to a desired state or track a prescribed trajectory. A basic requirement for such a procedure is robustness against environmental and modelling uncertainties. Since its formalisation in the late 19th century, control theory has become a melting pot of real and complex analysis, linear algebra and probability theory.

With the resurgence of neural networks in the 1990s, it was natural to use them as a substitute for controllers designed with classical methods. However, embedding them in a learning scheme was prone to the vanishing gradient problem, much as in the training of recurrent neural networks. It took recent breakthroughs in reinforcement learning to provide a stable training scheme. This scheme turned out to be especially effective for non-linear problems with high-dimensional outputs, where designs based on human intuition are infeasible.

Chapter 2 aims to give a concise but self-contained introduction to reinforcement learning. It covers the basic results necessary for understanding the Deep Deterministic Policy Gradient (DDPG) algorithm, one of the stepping stones in continuous control. Chapter 3 then describes the environment, discussing its details together with the trajectory and reward equations. Finally, Chapter 4 collects the training and controller performance results, and Chapter 5 closes with a few concluding remarks.


Chapter 2

Reinforcement learning foundations

Reinforcement learning can be viewed as a learning framework in which an agent continuously improves its behaviour by sequentially interacting with a stochastic environment [1]. As an area of machine learning, it focuses on exploring and exploiting the reward mechanism of the environment (i.e. maximising the cumulative reward). This contrasts with supervised learning, which mimics observation-action pairs produced by a supposedly optimal policy.
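The contrast with supervised learning can be made concrete with a minimal Python sketch. It is not part of the thesis's MATLAB framework. It assumes the simplest possible stochastic environment: a hypothetical three-armed bandit with Gaussian rewards. The arm means, the exploration rate `epsilon` and the function name `run_epsilon_greedy` are illustrative choices only.

The agent is never told which action is correct. With probability `epsilon` it explores a random arm; otherwise it exploits its current reward estimates, which it updates from the observed rewards alone.

```python
import random


def run_epsilon_greedy(true_means, steps=5000, epsilon=0.1, seed=0):
    """Epsilon-greedy agent on a hypothetical stochastic multi-armed bandit.

    The agent only observes noisy scalar rewards (no labelled 'correct'
    actions) and must balance exploring arms with exploiting its estimates.
    """
    rng = random.Random(seed)
    n_arms = len(true_means)
    estimates = [0.0] * n_arms  # running sample-average reward of each arm
    counts = [0] * n_arms       # how many times each arm was pulled
    total_reward = 0.0
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n_arms)                           # explore
        else:
            action = max(range(n_arms), key=lambda a: estimates[a])  # exploit
        # Stochastic environment: Gaussian reward around the arm's hidden mean.
        reward = rng.gauss(true_means[action], 1.0)
        counts[action] += 1
        # Incremental sample-average update: Q <- Q + (r - Q) / n
        estimates[action] += (reward - estimates[action]) / counts[action]
        total_reward += reward
    return estimates, counts, total_reward


if __name__ == "__main__":
    est, cnt, tot = run_epsilon_greedy([0.2, 0.5, 1.0])
    print("estimated arm values:", [round(q, 2) for q in est])
    print("pulls per arm:", cnt)
```

With enough interactions, the agent typically concentrates its pulls on the arm with the highest mean reward. Unlike the cart pole, this toy environment has no state. It therefore illustrates only the exploration-exploitation trade-off, not the sequential dynamics of a Markov decision process.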

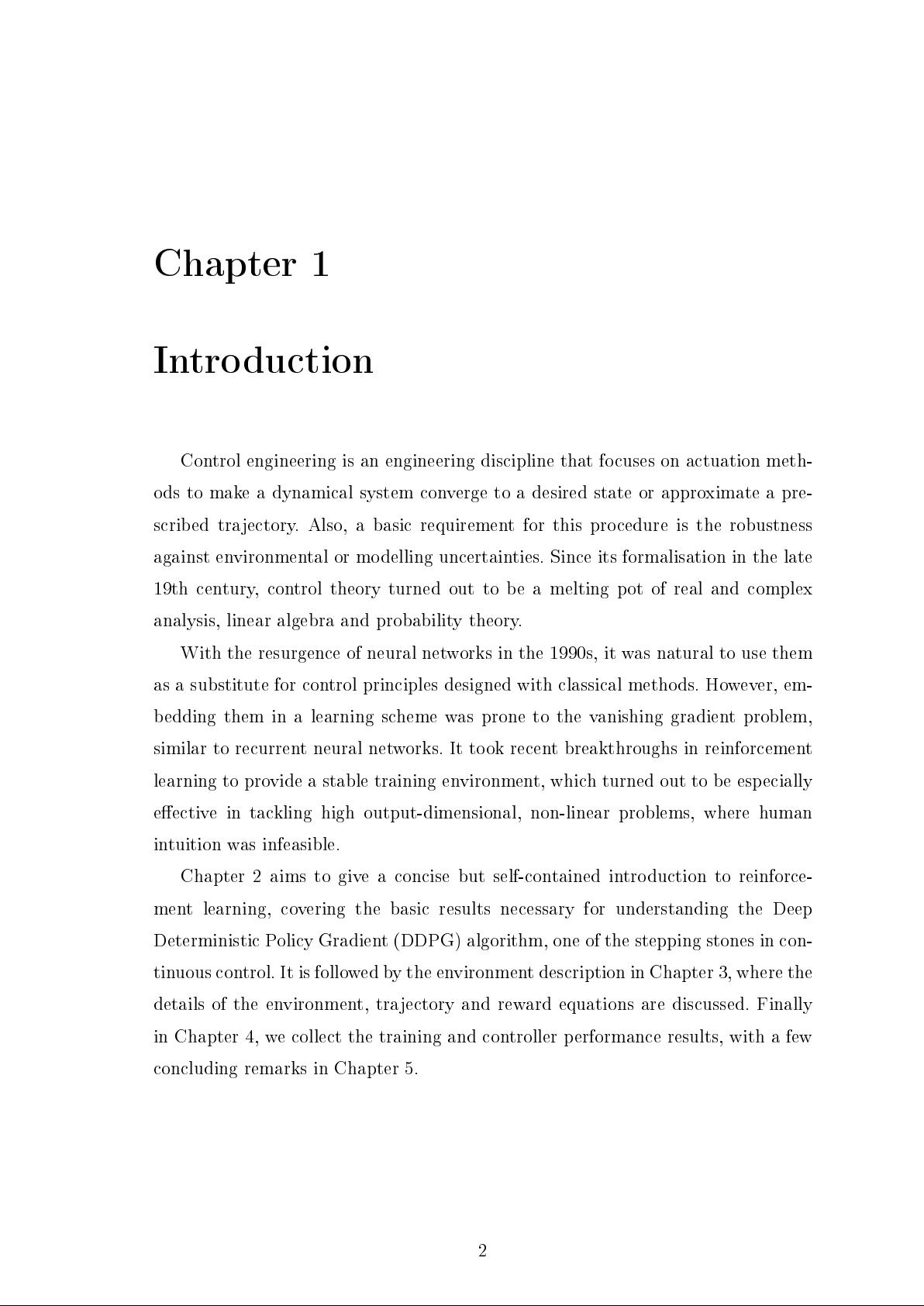

Reinforcement learning is such a vast area that it cannot be reasonably cov-

ered within the frames of this work (Figure 2.1). Instead, we are focusing on the

derivation of the DDPG algorithm, the central method that we are applying to

the cart-pole problem. As these reinforcement learning algorithms have numerous

hyperparameters, it is crucial to understand the theory behind them.

DDPG is a model-free, online, o-policy actor-critic reinforcement learning

Figure 2.1: Reinforcement learning methodology (based on [2]).

3