As another advantage, local optima avoidance is high due the

stochastic nature of evolutionary algorithms. If an evolutionary

algorithm is trapped in a local optimum, stochastic operator lead

to random changes in the solution and eventually escaping from

the local optimum. Although there is no guarantee for resolving this

issue completely, stochastic algorithms have much higher probabil-

ity to escape from local optima compared to deterministic methods.

A very accurate approximation of the global optimum is not guaran-

teed as well, but with running an evolutionary algorithm several

times the probability of obtaining a better solution is increased.

Last but not least, the simplicity is another characteristic of evo-

lutionary algorithms. Natural evolutionary concepts or collective

behaviours are the main inspirations for the majority of algorithms

in this field where they are so simple. In addition, evolutionary

algorithms follow a general and common framework, in which a

set of randomly created solutions is enhanced or evolved itera-

tively. What makes algorithms different in this field is the method

of improving this set.

Some of the most popular algorithms in this field are: Genetic

Algorithms (GA) [14,15], Particle Swarm Optimization (PSO) [16],

Ant Colony Optimization (ACO) [17], Differential Evolution (DE)

[18], Evolutionary Programming (EP) [19] [20]. Although these

algorithms are able to solve many real and challenging problems,

the so-called No Free Lunch theorem [21] allows researchers to

propose new algorithms. According to this theorem, all algorithms

perform equal when solving all optimization problems. Therefore,

one algorithm can be very effective in solving one set of problems

but in effective on a different set of problems. This is the founda-

tion of many works in this field. Some of the recent algorithms

are: Grey Wolf Optimizer (GWO) [22], Artificial Bee Colony (ABC)

algorithm [23], Firefly Algorithm (FA) [24,25], Cuckoo Search (CS)

algorithm [26,27], Cuckoo Optimization Algorithm (COA) [28],

Gravitational Search Algorithm (GSA) [29], Charged System Search

(CSS) [30–33], Magnetic Charged System Search [34,35], Ray Opti-

mization (RO) [36–38] algorithm, Colliding Bodies Optimization

(CBO) [39–44] algorithm, Hybrid Particle Swallow Swarm Optimi-

zation (HPSSO) [45], Democratic Particle Swarm Optimization

(DPSO) [46,47], Dolphin Echolocation (DE) [48,49], and Chaotic

Swarming of Particles (CSP) [50].

This paper also proposes a new algorithm called Ant Lion Opti-

mizer (ALO) as an alternative approach for solving optimization

problems. As its name implies, the ALO algorithm mimics the intel-

ligent behaviour of antlions in hunting ants in nature. The rest of

the paper is organized as follows:

Section 2 presents the main inspiration of this paper and pro-

poses the ALO algorithm. Experimental results of the test functions

are provided in Section 3. Sections 4 and 5 solve several real prob-

lems to demonstrate the applicability of the proposed algorithm.

Finally, Section 6 concludes the work and discusses possible future

research.

2. Ant Lion Optimizer

In this section the inspiration of the ALO algorithm is first pre-

sented. The mathematical model and the ALO algorithm are then

discussed in details.

2.1. Inspiration

Antlions (doodlebugs) belong to the Myrmeleontidae family

and Neuroptera order (net-winged insects). The lifecycle of ant-

lions includes two main phases: larvae and adult. A natural total

lifespan can take up to 3 years, which mostly occurs in larvae (only

3–5 weeks for adulthood). Antlions undergo metamorphosis in a

cocoon to become adult. They mostly hunt in larvae and the adult-

hood period is for reproduction.

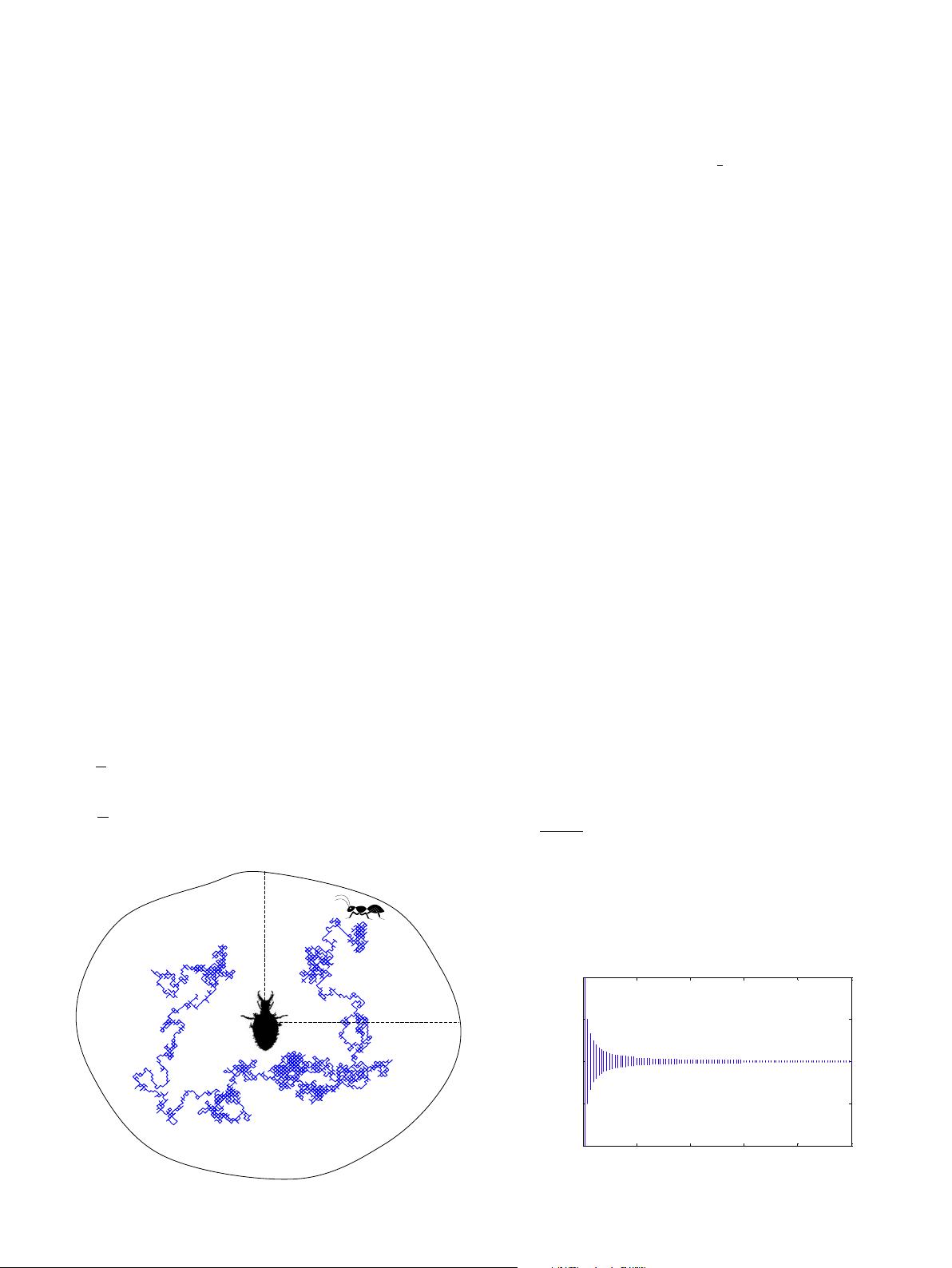

Their names originate from their unique hunting behaviour and

their favourite prey. An antlion larvae digs a cone-shaped pit in

sand by moving along a circular path and throwing out sands with

its massive jaw [51,52]. Fig. 1(a) shows several cone-shaped pits

with different sizes. After digging the trap, the larvae hides under-

neath the bottom of the cone (as a sit-and-wait predator [53]) and

waits for insects (preferably ant) to be trapped in the pit [53] as

illustrated in Fig. 1(b). The edge of the cone is sharp enough for

insects to fall to the bottom of the trap easily. Once the antlion

realizes that a prey is in the trap, it tries to catch it. However,

insects usually are not caught immediately and try to escape from

the trap. In this case, antlions intelligently throw sands towards to

edge of the pit to slide the prey into the bottom of the pit. When a

prey is caught into the jaw, it is pulled under the soil and con-

sumed. After consuming the prey, antlions throw the leftovers out-

side the pit and amend the pit for the next hunt.

Another interesting behaviour that has been observed in life

style of antlions is the relevancy of the size of the trap and two

things: level of hunger and shape of the moon. Antlions tend to

dig out larger traps as they become more hungry [54] and/or when

the moon is full [55]. They have been evolved and adapted this way

to improve their chance of survival. It also has been discovered that

an antlion does not directly observe the shape of the moon to

decide about the size of the trap, but it has an internal lunar clock

to make such decisions [55].

The main inspiration of the ALO algorithm comes from the for-

aging behaviour of antlion’s larvae. In the next subsection the

behaviour of antlions and their prey in nature is first modelled

mathematically. An optimization algorithm is then proposed based

on the mathematical model.

2.2. Operators of the ALO algorithm

The ALO algorithm mimics interaction between antlions and

ants in the trap. To model such interactions, ants are required to

move over the search space, and antlions are allowed to hunt them

and become fitter using traps. Since ants move stochastically in

nature when searching for food, a random walk is chosen for mod-

elling ants’ movement as follows:

XðtÞ¼½0; cumsumð2rðt

1

Þ1Þ; cumsumð2rðt

2

Þ1Þ;...; cumsumð2rðt

n

Þ1Þ

ð2:1Þ

where cumsum calculates the cumulative sum, n is the maximum

number of iteration, t shows the step of random walk (iteration in

this study), and r(t) is a stochastic function defined as follows:

rðtÞ¼

1 if rand > 0:5

0 if rand 6 0:5

ð2:2Þ

where t shows the step of random walk (iteration in this study) and

rand is a random number generated with uniform distribution in

the interval of [0,1].

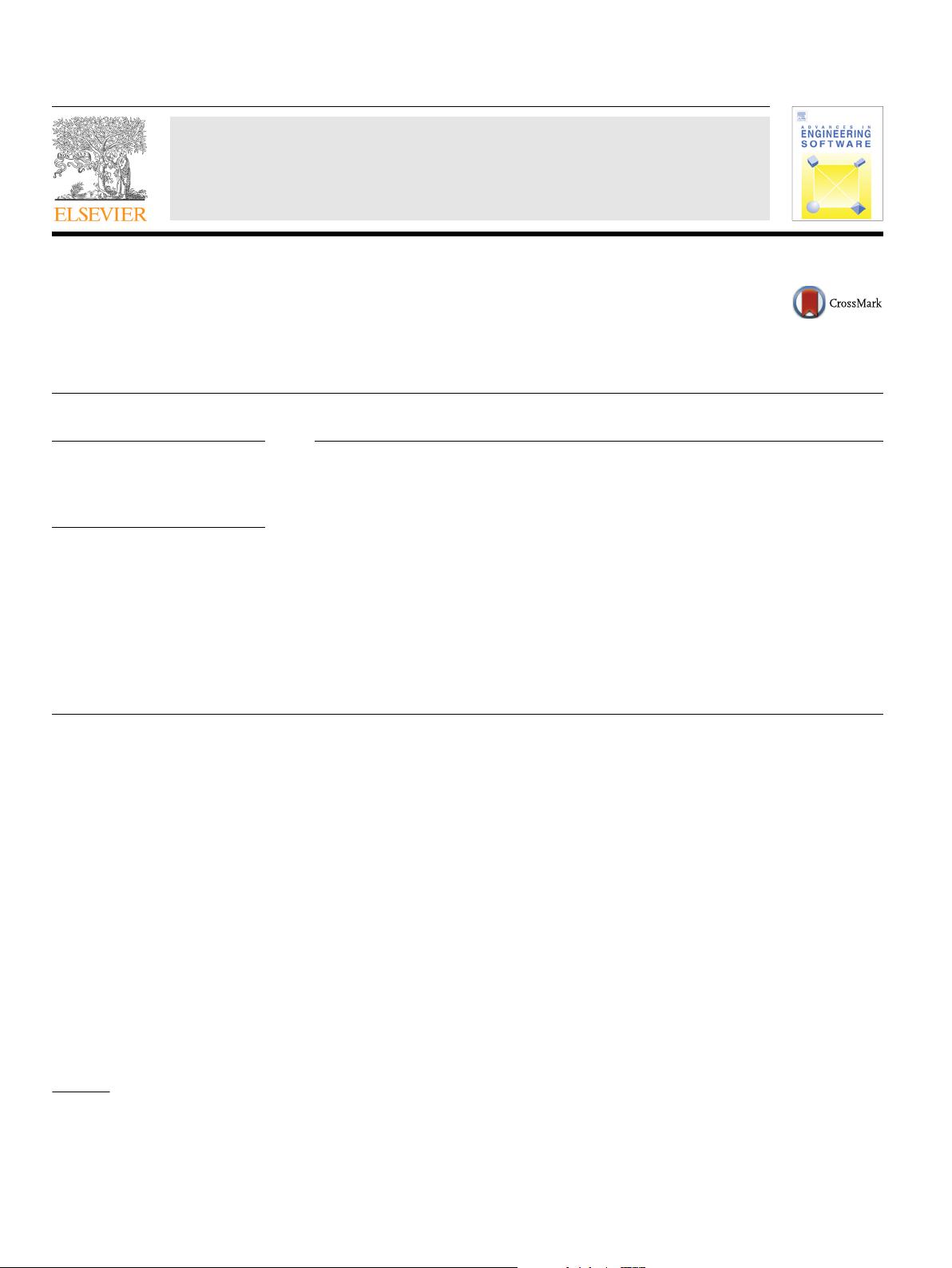

To have an image of this random walk, Fig. 2 is provided that

illustrates three random walks over 500 iterations. This figure

shows that the random walk utilized may fluctuate dramatically

around the origin (red

1

curve), have increasing trend (black curve),

or have descending behaviour (blue curve).

The position of ants are saved and utilized during optimization

in the following matrix:

M

Ant

¼

A

1;1

A

1;2

... ... A

1;d

A

2;1

A

2;2

... ... A

2;d

:::::

:::::

A

n;1

A

n;2

... ... A

n;d

2

6

6

6

6

6

4

3

7

7

7

7

7

5

ð2:3Þ

1

For interpretation of color in Fig. 2, the reader is referred to the web version of

this article.

S. Mirjalili / Advances in Engineering Software 83 (2015) 80–98

81

基于matlab实现蚁狮优化算法.zip (8个子文件)

基于matlab实现蚁狮优化算法.zip (8个子文件)  基于matlab实现蚁狮优化算法

基于matlab实现蚁狮优化算法  3038ALO(蚁狮优化算法)

3038ALO(蚁狮优化算法)  RouletteWheelSelection.m 2KB

RouletteWheelSelection.m 2KB initialization.m 2KB

initialization.m 2KB main.m 3KB

main.m 3KB ALO.m 6KB

ALO.m 6KB Random_walk_around_antlion.m 3KB

Random_walk_around_antlion.m 3KB Get_Functions_details.m 8KB

Get_Functions_details.m 8KB func_plot.m 4KB

func_plot.m 4KB Fardapaper-The-Ant-Lion-Optimizer.pdf 2.96MB

Fardapaper-The-Ant-Lion-Optimizer.pdf 2.96MB

我的内容管理

展开

我的内容管理

展开

我的资源

快来上传第一个资源

我的资源

快来上传第一个资源

我的收益 登录查看自己的收益

我的收益 登录查看自己的收益 我的积分

登录查看自己的积分

我的积分

登录查看自己的积分

我的C币

登录后查看C币余额

我的C币

登录后查看C币余额

我的收藏

我的收藏  我的下载

我的下载  下载帮助

下载帮助

前往需求广场,查看用户热搜

前往需求广场,查看用户热搜

信息提交成功

信息提交成功