The paper is organized as follows. After discussing related work in Section II, we introduce our border extraction method in Section III. We then describe the interest point extraction in Section IV and the NARF descriptor in Section V. Finally, we present experimental results in Sections VI and VII.

II. RELATED WORK

Two of the most popular systems for extracting interest points and creating stable descriptors in the area of 2D computer vision are SIFT (Scale Invariant Feature Transform) [6] and SURF (Speeded Up Robust Features) [2]. The interest point detection and the descriptors are based on local gradients, and a unique orientation for the image patch is extracted to achieve rotational invariance. Our approach operates on 3D data instead of monocular camera images. Compared to cameras, 3D sensors provide depth information and can be less sensitive to lighting conditions (e.g., laser sensors). In addition, scale information is directly available. A disadvantage, however, is that geometry alone is less expressive in terms of object uniqueness. While SIFT and SURF are not directly transferable to 3D scans, many of the general concepts, such as the use of gradients and the extraction of a unique orientation, are useful there.
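The idea of extracting a unique orientation from local gradients can be illustrated with a minimal sketch. It is not the SIFT or SURF implementation: the function name `dominant_orientation`, the 36-bin histogram, the central-difference gradients, and the toy patch are all assumptions chosen for illustration.

```python
import math

def dominant_orientation(patch, num_bins=36):
    """Return the center (in degrees) of the strongest gradient-orientation bin.

    `patch` is a list of rows of intensity values (hypothetical toy input).
    """
    rows, cols = len(patch), len(patch[0])
    hist = [0.0] * num_bins
    bin_width = 360.0 / num_bins
    # Central differences on interior pixels only.
    for y in range(1, rows - 1):
        for x in range(1, cols - 1):
            gx = (patch[y][x + 1] - patch[y][x - 1]) / 2.0
            gy = (patch[y + 1][x] - patch[y - 1][x]) / 2.0
            magnitude = math.hypot(gx, gy)
            if magnitude == 0.0:
                continue
            angle = math.degrees(math.atan2(gy, gx)) % 360.0
            # Each pixel votes for its orientation bin, weighted by magnitude.
            hist[int(angle / bin_width) % num_bins] += magnitude
    peak = max(range(num_bins), key=lambda b: hist[b])
    return (peak + 0.5) * bin_width

# Toy patch whose intensity increases along the diagonal (x + y).
patch = [[float(x + y) for x in range(5)] for y in range(5)]
angle = dominant_orientation(patch)
```

Expressing a descriptor relative to such a dominant angle is what makes it independent of in-plane rotation of the patch.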

One of the most popular descriptors for 3D data is the spin image presented by Johnson [5], which is a 2D representation of the surface surrounding a 3D point and is computed for every point in the scene. A variant of spin images, spherical spin images [9], improves the comparison by applying nearest neighbor search, using the linear correlation coefficient to define equivalence classes of spin images. To compare features efficiently, the authors furthermore compress the descriptor. In our previous work [14] we found that range value patches as features showed better reliability in an object recognition system than spin images. The features we propose in this paper build on those range value patches and show an improved matching capability (see Section VII). Spin images also do not explicitly take empty space (e.g., beyond object borders) into account. For example, for a square plane the spin images for points in the center and the corners would be identical, while the feature described in this paper is able to discriminate between such points.
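To make the "2D representation of the surface surrounding a 3D point" concrete, the following sketch maps neighbors of an oriented point to the usual spin-image coordinates: the radial distance from the normal line (alpha) and the signed height along the normal (beta). It is a simplified illustration, not the implementation from [5]: it assumes a unit-length normal, uses plain binning without interpolation, and the names `spin_coordinates`, `spin_image`, `bin_size`, and `image_width` are hypothetical.

```python
import math

def spin_coordinates(p, n, x):
    """Map point x to (alpha, beta) relative to the oriented point (p, n).

    n is assumed to be a unit-length normal.
    """
    d = [x[i] - p[i] for i in range(3)]
    beta = sum(n[i] * d[i] for i in range(3))        # height along the normal
    alpha_sq = sum(c * c for c in d) - beta * beta   # squared distance to the normal line
    return math.sqrt(max(alpha_sq, 0.0)), beta

def spin_image(p, n, points, bin_size, image_width):
    """Accumulate neighbors into an image_width x image_width count histogram."""
    image = [[0] * image_width for _ in range(image_width)]
    half_height = image_width * bin_size / 2.0
    for x in points:
        alpha, beta = spin_coordinates(p, n, x)
        col = math.floor(alpha / bin_size)
        row = math.floor((half_height - beta) / bin_size)
        # Points outside the supported region are ignored.
        if 0 <= row < image_width and 0 <= col < image_width:
            image[row][col] += 1
    return image

# Toy data: two neighbors that differ only by a rotation about the normal.
p, n = (0.0, 0.0, 0.0), (0.0, 0.0, 1.0)
neighbors = [(3.0, 4.0, 2.0), (5.0, 0.0, 2.0), (0.0, 0.0, 0.0)]
image = spin_image(p, n, neighbors, bin_size=1.0, image_width=8)
```

Because alpha discards the angle around the normal, the first two neighbors fall into the same cell. Empty cells carry no distinction between free space and unobserved space, which is the limitation noted above.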

An object detection approach based on silhouettes extracted from range images is presented in [15]. The features proposed are based on a fast Eigen-CSS method and a supervised learning algorithm. This is similar to our work in the sense that the authors also try to make explicit use of border information. By restricting the system to borders only, however, valuable information regarding the structure of the objects is not considered. Additionally, the extraction of a single descriptor for the complete silhouette makes the system less robust to occlusions.

Many approaches compute descriptors exhaustively in ev-

ery data point or use simple sampling methods ([5], [3], [7]),

thereby introducing an unnecessary overhead. In [4] the au-

thors present an approach to global registration using Integral

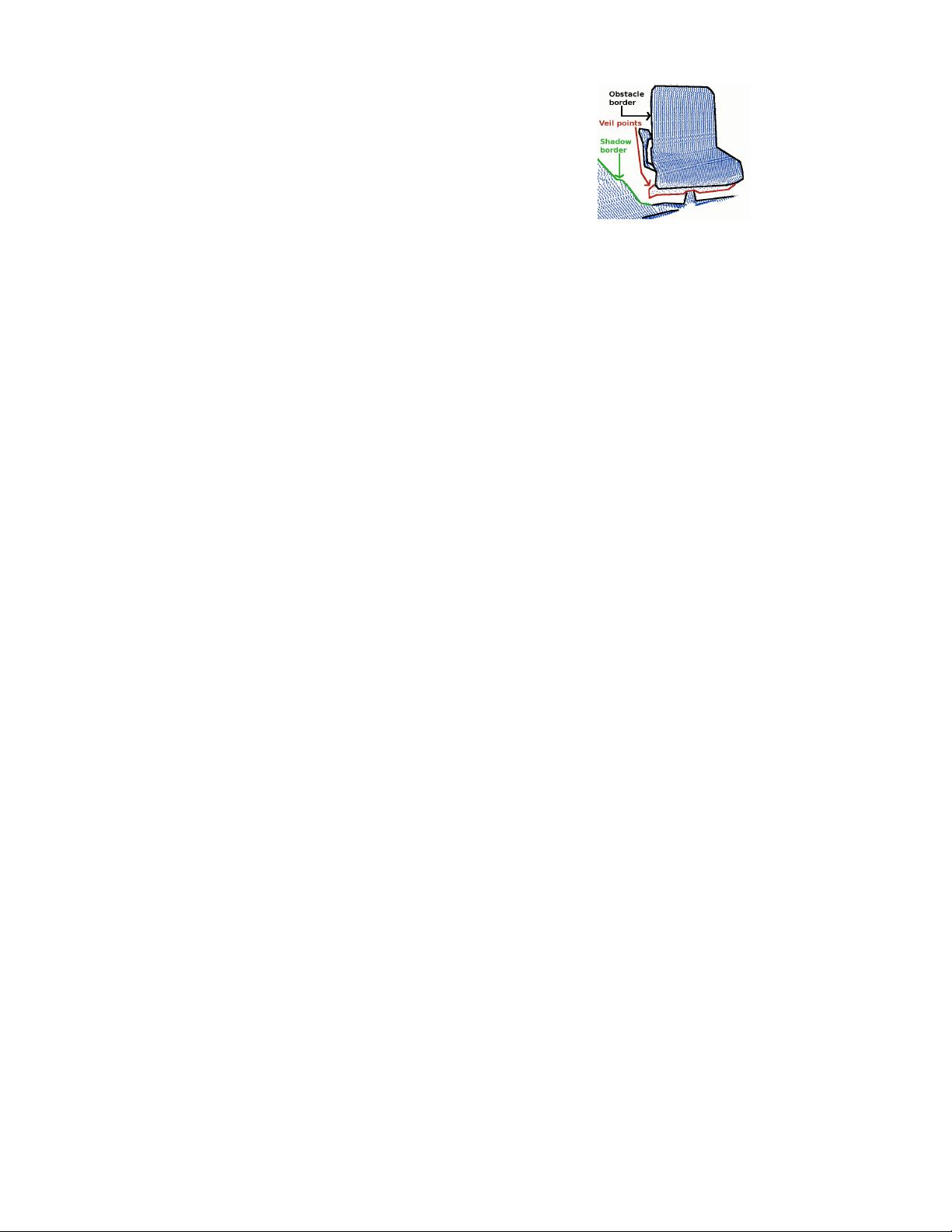

Fig. 2. Different kinds of border points.

Volume Descriptors (IVD) estimated at certain interest points

in the data. These interest points are extracted using a self

similarity approach in the IVD space, meaning the descriptor

of a point is compared to the descriptors of its neighbors to

determine areas where there is a significant change. While

this method for interest point extraction explicitly takes the

descriptor into account, it becomes impractical for more

complex descriptors, which are more expensive to extract.

Unnikrishnan [16] presented an interest point extraction

method with automatic scale detection in unorganized 3D

point clouds. This approach, however, does not consider any

view-point related information and does not attempt to place

interest points in stable positions. In [12] we presented a

method for selecting interest points based on their persistence

in growing point neighborhoods. Given a PFH (Point Feature

Histogram) space [11], multiple descriptors are estimated for

several different radii, and only the ones similar between r

i

and r

i+1

are kept. The method described in this paper is

by several orders of magnitude faster in the estimation of

interest points.
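The persistence criterion mentioned above can be sketched as a simple filter. This is only one possible reading of the description, not the method of [12]: it assumes a Euclidean distance between descriptors, a fixed threshold `max_distance`, and that a point must stay similar across every pair of consecutive radii. The function names and the toy two-bin descriptors are hypothetical.

```python
import math

def euclidean(a, b):
    """Euclidean distance between two descriptors of equal length."""
    return math.sqrt(sum((u - v) ** 2 for u, v in zip(a, b)))

def persistent_points(descriptors_by_radius, max_distance):
    """Return indices of points whose descriptors change little between radii.

    descriptors_by_radius[k][j] is the descriptor of point j at the k-th
    radius, with radii sorted in increasing order.
    """
    num_points = len(descriptors_by_radius[0])
    kept = []
    for j in range(num_points):
        # Compare the descriptor at radius r_i with the one at r_{i+1}.
        stable = all(
            euclidean(smaller[j], larger[j]) <= max_distance
            for smaller, larger in zip(descriptors_by_radius,
                                       descriptors_by_radius[1:])
        )
        if stable:
            kept.append(j)
    return kept

# Toy data: three radii, three points, two-bin descriptors.
descriptors = [
    [[0.5, 0.5], [0.9, 0.1], [0.2, 0.8]],     # radius r_1
    [[0.5, 0.5], [0.5, 0.5], [0.25, 0.75]],   # radius r_2
    [[0.55, 0.45], [0.1, 0.9], [0.2, 0.8]],   # radius r_3
]
kept = persistent_points(descriptors, max_distance=0.1)
```

Note that such a filter needs a descriptor for every point at every radius before any point can be discarded, which gives an intuition for the cost of descriptor-driven interest point selection.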

In our previous work we also used interest point extraction methods known from the 2D computer vision literature, such as the Harris Detector or Difference of Gaussians, adapted for range images [14], [13]. Although these methods turned out to be robust, they have several shortcomings. For example, the estimated keypoints tend to lie directly on the borders of the objects or at other positions that have a significant change in structure. While these areas are indeed interesting parts of the scene, placing the interest points directly there can lead to high inaccuracies in the descriptor calculation, since these are typically unstable areas, e.g., regarding normal estimation. The goal of our work described here is to find points that are in the vicinity of significant changes and at the same time lie on stable parts of the surface.

III. BORDER EXTRACTION

A. Motivation

One important requirement of our feature extraction procedure is the explicit handling of borders in the range data. Borders typically appear as non-continuous traversals from foreground to background. In this context there are mainly three different kinds of points that we are interested in detecting: object borders, which are the outermost visible points still belonging to an object; shadow borders, which are points in the background that adjoin occlusions; and veil points, which are interpolated points between the obstacle border and the shadow border. Veil points are a typical phenomenon in 3D range data obtained by lidars and treating
