
A Distributional Perspective on Reinforcement Learning

Marc G. Bellemare*¹, Will Dabney*¹, Rémi Munos¹

Abstract

In this paper we argue for the fundamental importance of the value distribution: the distribution of the random return received by a reinforcement learning agent. This is in contrast to the common approach to reinforcement learning which models the expectation of this return, or value. Although there is an established body of literature studying the value distribution, thus far it has always been used for a specific purpose such as implementing risk-aware behaviour. We begin with theoretical results in both the policy evaluation and control settings, exposing a significant distributional instability in the latter. We then use the distributional perspective to design a new algorithm which applies Bellman's equation to the learning of approximate value distributions. We evaluate our algorithm using the suite of games from the Arcade Learning Environment. We obtain both state-of-the-art results and anecdotal evidence demonstrating the importance of the value distribution in approximate reinforcement learning. Finally, we combine theoretical and empirical evidence to highlight the ways in which the value distribution impacts learning in the approximate setting.

1. Introduction

One of the major tenets of reinforcement learning states that, when not otherwise constrained in its behaviour, an agent should aim to maximize its expected utility $Q$, or value (Sutton & Barto, 1998). Bellman's equation succinctly describes this value in terms of the expected reward and expected outcome of the random transition $(x, a) \to (X', A')$:

$$Q(x, a) = \mathbb{E}\, R(x, a) + \gamma\, \mathbb{E}\, Q(X', A').$$

In this paper, we aim to go beyond the notion of value and argue in favour of a distributional perspective on reinforcement learning. Specifically, the main object of our study is the random return $Z$ whose expectation is the value $Q$. This random return is also described by a recursive equation, but one of a distributional nature:

$$Z(x, a) \overset{D}{=} R(x, a) + \gamma Z(X', A').$$

*Equal contribution. ¹DeepMind, London, UK. Correspondence to: Marc G. Bellemare <bellemare@google.com>. Proceedings of the 34th International Conference on Machine Learning, Sydney, Australia, PMLR 70, 2017. Copyright 2017 by the author(s).

The distributional Bellman equation states that the distribution of $Z$ is characterized by the interaction of three random variables: the reward $R$, the next state-action $(X', A')$, and its random return $Z(X', A')$. By analogy with the well-known case, we call this quantity the value distribution.

Although the distributional perspective is almost as old as Bellman's equation itself (Jaquette, 1973; Sobel, 1982; White, 1988), in reinforcement learning it has thus far been subordinated to specific purposes: to model parametric uncertainty (Dearden et al., 1998), to design risk-sensitive algorithms (Morimura et al., 2010b;a), or for theoretical analysis (Azar et al., 2012; Lattimore & Hutter, 2012). By contrast, we believe the value distribution has a central role to play in reinforcement learning.

Contraction of the policy evaluation Bellman operator. Building on results by Rösler (1992), we show that, for a fixed policy, the Bellman operator over value distributions is a contraction in a maximal form of the Wasserstein (also called Kantorovich or Mallows) metric. Our particular choice of metric matters: the same operator is not a contraction in total variation, Kullback-Leibler divergence, or Kolmogorov distance.

Instability in the control setting. We will demonstrate an instability in the distributional version of Bellman's optimality equation, in contrast to the policy evaluation case. Specifically, although the optimality operator is a contraction in expected value (matching the usual optimality result), it is not a contraction in any metric over distributions. These results provide evidence in favour of learning algorithms that model the effects of nonstationary policies.

Better approximations. From an algorithmic standpoint, there are many benefits to learning an approximate distribution rather than its approximate expectation. The distributional Bellman operator preserves multimodality in value distributions, which we believe leads to more stable learning. Approximating the full distribution also mitigates the effects of learning from a nonstationary policy. As a whole, we argue that this approach makes approximate reinforcement learning significantly better behaved.

arXiv:1707.06887v1 [cs.LG] 21 Jul 2017

We will illustrate the practical benefits of the distributional perspective in the context of the Arcade Learning Environment (Bellemare et al., 2013). By modelling the value distribution within a DQN agent (Mnih et al., 2015), we obtain considerably increased performance across the gamut of benchmark Atari 2600 games, and in fact achieve state-of-the-art performance on a number of games. Our results echo those of Veness et al. (2015), who obtained extremely fast learning by predicting Monte Carlo returns.

From a supervised learning perspective, learning the full value distribution might seem obvious: why restrict ourselves to the mean? The main distinction, of course, is that in our setting there are no given targets. Instead, we use Bellman's equation to make the learning process tractable; we must, as Sutton & Barto (1998) put it, “learn a guess from a guess”. It is our belief that this guesswork ultimately carries more benefits than costs.

2. Setting

We consider an agent interacting with an environment in the standard fashion: at each step, the agent selects an action based on its current state, to which the environment responds with a reward and the next state. We model this interaction as a time-homogeneous Markov Decision Process $(\mathcal{X}, \mathcal{A}, R, P, \gamma)$. As usual, $\mathcal{X}$ and $\mathcal{A}$ are respectively the state and action spaces, $P$ is the transition kernel $P(\cdot \mid x, a)$, $\gamma \in [0, 1]$ is the discount factor, and $R$ is the reward function, which in this work we explicitly treat as a random variable. A stationary policy $\pi$ maps each state $x \in \mathcal{X}$ to a probability distribution over the action space $\mathcal{A}$.

2.1. Bellman’s Equations

The return $Z^\pi$ is the sum of discounted rewards along the agent's trajectory of interactions with the environment. The value function $Q^\pi$ of a policy $\pi$ describes the expected return from taking action $a \in \mathcal{A}$ from state $x \in \mathcal{X}$, then acting according to $\pi$:

$$Q^\pi(x, a) := \mathbb{E}\, Z^\pi(x, a) = \mathbb{E}\left[\sum_{t=0}^{\infty} \gamma^t R(x_t, a_t)\right], \qquad (1)$$

$$x_t \sim P(\cdot \mid x_{t-1}, a_{t-1}), \quad a_t \sim \pi(\cdot \mid x_t), \quad x_0 = x, \quad a_0 = a.$$
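To make the distinction between the random return $Z^\pi$ and its expectation $Q^\pi$ concrete, here is a minimal sketch that draws Monte Carlo samples of the return in (1), truncated after a finite horizon, and averages them. The one-state, two-action MDP, its reward tables, the uniform policy, and the helper names `sample` and `sample_return` are all hypothetical, chosen only so that $Q^\pi$ is easy to compute by hand: both actions have expected reward 1, so $Q^\pi(0, 0) = 1/(1-\gamma) = 10$ for $\gamma = 0.9$, even though individual returns vary from sample to sample.

```python
import random

# Hypothetical toy MDP (not from the paper). Every table maps to a list of
# (probability, outcome) pairs, so rewards, transitions and the policy are random.
P = {0: {0: [(1.0, 0)], 1: [(1.0, 0)]}}                  # P[x][a] -> next state
R = {0: {0: [(1.0, 1.0)], 1: [(0.5, 0.0), (0.5, 2.0)]}}  # R[x][a] -> reward
PI = {0: [(0.5, 0), (0.5, 1)]}                           # pi(.|x) -> action


def sample(pairs, rng):
    """Draw one outcome from a list of (probability, outcome) pairs."""
    u, acc = rng.random(), 0.0
    for p, outcome in pairs:
        acc += p
        if u < acc:
            return outcome
    return pairs[-1][1]  # guard against floating-point round-off


def sample_return(x, a, gamma, horizon, rng):
    """One sample of Z^pi(x, a), truncated after `horizon` steps."""
    z = 0.0
    for t in range(horizon):
        z += gamma ** t * sample(R[x][a], rng)
        x = sample(P[x][a], rng)   # X' ~ P(.|x, a)
        a = sample(PI[x], rng)     # A' ~ pi(.|X')
    return z


rng = random.Random(0)
samples = [sample_return(0, 0, 0.9, 100, rng) for _ in range(4000)]
q_estimate = sum(samples) / len(samples)
print(round(q_estimate, 2))  # should be close to Q^pi(0, 0) = 10
```

The samples themselves form an empirical approximation of the value distribution $Z^\pi(0, 0)$; averaging them discards everything except the mean. Truncating at horizon $H$ biases each sample by at most $\gamma^H r_{\max}/(1-\gamma)$, roughly $5 \times 10^{-4}$ here.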

Fundamental to reinforcement learning is the use of Bellman's equation (Bellman, 1957) to describe the value function:

$$Q^\pi(x, a) = \mathbb{E}\, R(x, a) + \gamma\, \mathbb{E}_{P,\pi}\, Q^\pi(x', a').$$

In reinforcement learning we are typically interested in act-

ing so as to maximize the return. The most common ap-

P

⇡

Z

R+

P

⇡

Z

Z

P

⇡

(a) (b)

(c) (d)

T

⇡

Z

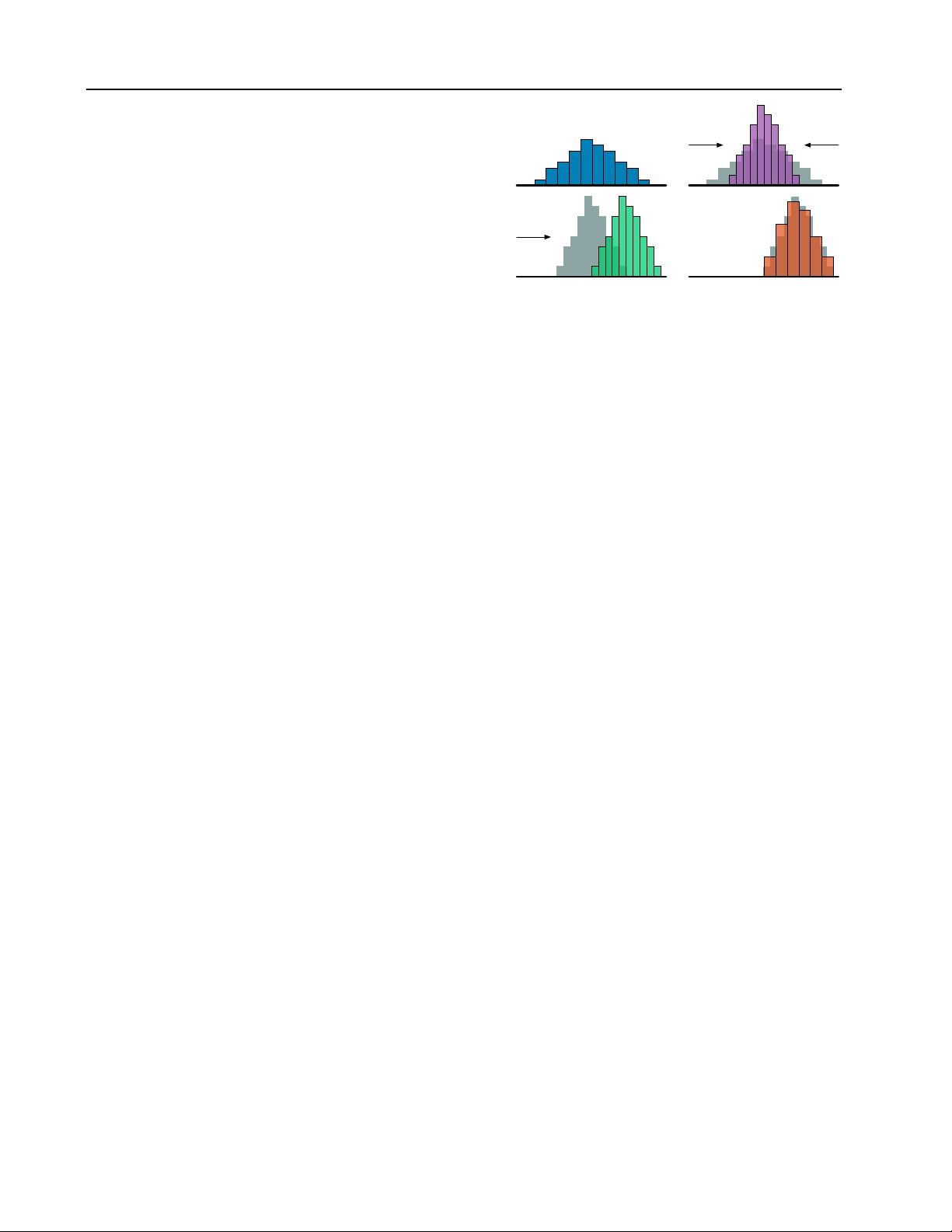

Figure 1. A distributional Bellman operator with a deterministic

reward function: (a) Next state distribution under policy π, (b)

Discounting shrinks the distribution towards 0, (c) The reward

shifts it, and (d) Projection step (Section 4).

proach for doing so involves the optimality equation

Q

∗

(x, a) = E R(x, a) + γ E

P

max

a

0

∈A

Q

∗

(x

0

, a

0

).

This equation has a unique fixed point $Q^*$, the optimal value function, corresponding to the set of optimal policies $\Pi^*$ ($\pi^*$ is optimal if $\mathbb{E}_{a \sim \pi^*} Q^*(x, a) = \max_a Q^*(x, a)$).

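We view value functions as vectors in $\mathbb{R}^{\mathcal{X} \times \mathcal{A}}$, and the expected reward function as one such vector. In this context, the Bellman operator $\mathcal{T}^\pi$ and optimality operator $\mathcal{T}$ are

$$\mathcal{T}^\pi Q(x, a) := \mathbb{E}\, R(x, a) + \gamma\, \mathbb{E}_{P,\pi}\, Q(x', a') \qquad (2)$$

$$\mathcal{T} Q(x, a) := \mathbb{E}\, R(x, a) + \gamma\, \mathbb{E}_P \max_{a' \in \mathcal{A}} Q(x', a'). \qquad (3)$$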

These operators are useful as they describe the expected behaviour of popular learning algorithms such as SARSA and Q-Learning. In particular they are both contraction mappings, and their repeated application to some initial $Q_0$ converges exponentially to $Q^\pi$ or $Q^*$, respectively (Bertsekas & Tsitsiklis, 1996).
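The contraction property can be checked numerically. The sketch below uses a hypothetical two-state, two-action MDP whose expected rewards `r` and transition probabilities `p` are made up for illustration. It applies the optimality operator of (3) to two arbitrary initial tables and asserts, at every step, that their sup-norm distance shrinks by at least a factor $\gamma$, which is what being a $\gamma$-contraction in $\|\cdot\|_\infty$ means.

```python
# Hypothetical 2-state, 2-action MDP: expected rewards r[x][a] and
# transition probabilities p[x][a][x'] (illustrative values only).
GAMMA = 0.9
r = [[1.0, 0.0], [0.0, 2.0]]
p = [[[0.9, 0.1], [0.2, 0.8]],
     [[0.5, 0.5], [0.0, 1.0]]]
STATES, ACTIONS = range(2), range(2)


def optimality_operator(q):
    """(T Q)(x, a) = E R(x, a) + gamma * E_P max_a' Q(x', a'), as in (3)."""
    return [[r[x][a] + GAMMA * sum(p[x][a][y] * max(q[y]) for y in STATES)
             for a in ACTIONS] for x in STATES]


def sup_dist(q1, q2):
    """Sup-norm distance between two Q tables."""
    return max(abs(q1[x][a] - q2[x][a]) for x in STATES for a in ACTIONS)


q1 = [[0.0, 0.0], [0.0, 0.0]]
q2 = [[5.0, -3.0], [1.0, 4.0]]
for _ in range(200):
    t1, t2 = optimality_operator(q1), optimality_operator(q2)
    # Contraction: one application shrinks the distance by at least gamma.
    assert sup_dist(t1, t2) <= GAMMA * sup_dist(q1, q2) + 1e-12
    q1, q2 = t1, t2

print(sup_dist(q1, q2), q1[1][1])  # tiny distance; both approach the same Q*
```

Because action 1 in state 1 keeps the agent in state 1 with reward 2, and no other choice can do better there, $Q^*(1, 1) = 2/(1-\gamma) = 20$, which both iterates reach to within about $\gamma^{200}$ times their initial error.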

3. The Distributional Bellman Operators

In this paper we take away the expectations inside Bellman's equations and consider instead the full distribution of the random variable $Z^\pi$. From here on, we will view $Z^\pi$ as a mapping from state-action pairs to distributions over returns, and call it the value distribution.

Our first aim is to gain an understanding of the theoretical behaviour of the distributional analogues of the Bellman operators, in particular in the less well-understood control setting. The reader strictly interested in the algorithmic contribution may choose to skip this section.

3.1. Distributional Equations

It will sometimes be convenient to make use of the probability space $(\Omega, \mathcal{F}, \Pr)$. The reader unfamiliar with measure theory may think of $\Omega$ as the space of all possible outcomes of an experiment (Billingsley, 1995). We will write $\|u\|_p$ to denote the $L_p$ norm of a vector $u \in \mathbb{R}^{\mathcal{X}}$ for $1 \le p \le \infty$; the same applies to vectors in $\mathbb{R}^{\mathcal{X} \times \mathcal{A}}$. The $L_p$ norm of a random vector $U : \Omega \to \mathbb{R}^{\mathcal{X}}$ (or $\mathbb{R}^{\mathcal{X} \times \mathcal{A}}$) is then $\|U\|_p := \left[\mathbb{E}\, \|U(\omega)\|_p^p\right]^{1/p}$, and for $p = \infty$ we have $\|U\|_\infty = \operatorname{ess\,sup} \|U(\omega)\|_\infty$ (we will omit the dependency on $\omega \in \Omega$ whenever unambiguous). We will denote the c.d.f. of a random variable $U$ by $F_U(y) := \Pr\{U \le y\}$, and its inverse c.d.f. by $F_U^{-1}(q) := \inf\{y : F_U(y) \ge q\}$.
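For a discrete random variable, the c.d.f. and its inverse (the quantile function) reduce to cumulative sums. The sketch below is one way to compute them, assuming the distribution is given as a sorted list of atoms `support` with matching probabilities `probs`; the function names are illustrative. Because of the infimum in the definition, when $F_U(y) = q$ exactly, $F_U^{-1}(q)$ returns that $y$ rather than the next atom.

```python
def cdf(support, probs, y):
    """F_U(y) = Pr{U <= y} for a discrete U with atoms `support`, weights `probs`."""
    return sum(p for z, p in zip(support, probs) if z <= y)


def inverse_cdf(support, probs, q):
    """F_U^{-1}(q) = inf{y : F_U(y) >= q} for 0 < q <= 1; `support` must be sorted."""
    if not 0.0 < q <= 1.0:
        raise ValueError("q must lie in (0, 1]")
    acc = 0.0
    for z, p in zip(support, probs):
        acc += p            # acc = F_U(z)
        if acc >= q:
            return z        # first atom at which the c.d.f. reaches q
    return support[-1]      # guard against probabilities summing to slightly < 1


support, probs = [0.0, 1.0, 2.0], [0.25, 0.5, 0.25]
print(cdf(support, probs, 1.0))           # 0.75
print(inverse_cdf(support, probs, 0.75))  # 1.0 (F_U reaches 0.75 exactly at y = 1)
print(inverse_cdf(support, probs, 0.8))   # 2.0
```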

A distributional equation $U \overset{D}{:=} V$ indicates that the random variable $U$ is distributed according to the same law as $V$. Without loss of generality, the reader can understand the two sides of a distributional equation as relating the distributions of two independent random variables. Distributional equations have been used in reinforcement learning by Engel et al. (2005); Morimura et al. (2010a) among others, and in operations research by White (1988).

3.2. The Wasserstein Metric

The main tool for our analysis is the Wasserstein metric $d_p$ between cumulative distribution functions (see e.g. Bickel & Freedman, 1981, where it is called the Mallows metric). For $F, G$ two c.d.fs over the reals, it is defined as

$$d_p(F, G) := \inf_{U,V} \|U - V\|_p,$$

where the infimum is taken over all pairs of random variables $(U, V)$ with respective cumulative distributions $F$ and $G$. The infimum is attained by the inverse c.d.f. transform of a random variable $\mathcal{U}$ uniformly distributed on $[0, 1]$:

$$d_p(F, G) = \|F^{-1}(\mathcal{U}) - G^{-1}(\mathcal{U})\|_p.$$

For $p < \infty$ this is more explicitly written as

$$d_p(F, G) = \left(\int_0^1 \left|F^{-1}(u) - G^{-1}(u)\right|^p du\right)^{1/p}.$$

Given two random variables $U, V$ with c.d.fs $F_U, F_V$, we will write $d_p(U, V) := d_p(F_U, F_V)$. We will find it convenient to conflate the random variables under consideration with their versions under the inf, writing

$$d_p(U, V) = \inf_{U,V} \|U - V\|_p,$$

whenever unambiguous; we believe the greater legibility justifies the technical inaccuracy. Finally, we extend this metric to vectors of random variables, such as value distributions, using the corresponding $L_p$ norm.
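Because the infimum is attained by the quantile coupling, $d_p$ between two discrete distributions on the real line can be computed exactly: both inverse c.d.f.s are step functions on $[0, 1]$, so the integral above becomes a finite sum over the merged breakpoints of the two cumulative distributions. The following is a minimal sketch under that assumption (finite supports, atoms sorted in increasing order); `wasserstein` is a hypothetical helper name, and $p = \infty$ is handled as the largest gap between the two quantile functions over intervals of positive length.

```python
import math


def wasserstein(xs, px, ys, py, p=1.0):
    """d_p between two discrete distributions (atoms xs, ys sorted ascending;
    weights px, py), via the quantile coupling F^{-1}(U), G^{-1}(U)."""

    def cumulative(weights):
        acc, out = 0.0, []
        for w in weights:
            acc += w
            out.append(acc)
        out[-1] = 1.0  # absorb floating-point round-off in the total mass
        return out

    cx, cy = cumulative(px), cumulative(py)
    i = j = 0
    u, total, worst = 0.0, 0.0, 0.0
    while i < len(cx) and j < len(cy):
        nxt = min(cx[i], cy[j])
        if nxt > u:  # on (u, nxt] both quantile functions are constant
            gap = abs(xs[i] - ys[j])
            worst = max(worst, gap)
            if p != math.inf:
                total += (nxt - u) * gap ** p
        u = nxt
        if cx[i] == nxt:
            i += 1
        if cy[j] == nxt:
            j += 1
    return worst if p == math.inf else total ** (1.0 / p)


# Two-point distributions whose quantile functions differ only on (0.25, 0.5].
print(wasserstein([0.0, 1.0], [0.5, 0.5], [0.0, 1.0], [0.25, 0.75], p=1))  # 0.25
print(wasserstein([0.0, 1.0], [0.5, 0.5], [0.0, 1.0], [0.25, 0.75], p=2))  # 0.5
```

The example also shows that the choice of $p$ matters: the same pair of distributions is at distance 0.25 under $d_1$ but 0.5 under $d_2$.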

Consider a scalar $a$ and a random variable $A$ independent of $U, V$. The metric $d_p$ has the following properties:

$$d_p(aU, aV) \le |a|\, d_p(U, V) \qquad \text{(P1)}$$

$$d_p(A + U, A + V) \le d_p(U, V) \qquad \text{(P2)}$$

$$d_p(AU, AV) \le \|A\|_p\, d_p(U, V). \qquad \text{(P3)}$$
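Properties (P1) and (P2) can be sanity-checked on samples. For two equal-size samples with equal weights, the quantile coupling simply pairs the $i$-th smallest values, so $d_p$ between the empirical distributions reduces to sorting. The sketch below uses hypothetical Gaussian choices $U \sim \mathcal{N}(0, 1)$, $V \sim \mathcal{N}(0, 2^2)$ and an independent $A \sim \mathcal{N}(0, 1)$; the helper name `empirical_wasserstein` is illustrative. It shows that scaling by $a = -3$ multiplies the empirical distance by $|a|$ (for a scalar, the inequality in (P1) in fact holds with equality) and that adding $A$ to both variables reduces it, consistent with (P2). For these Gaussians the population values of $d_1$ are about 0.80 and 0.66 respectively.

```python
import random


def empirical_wasserstein(us, vs, p=1.0):
    """d_p between the empirical distributions of two equal-size samples.
    With equal weights the quantile coupling pairs the i-th smallest values."""
    if len(us) != len(vs):
        raise ValueError("samples must have the same size")
    pairs = zip(sorted(us), sorted(vs))
    return (sum(abs(u - v) ** p for u, v in pairs) / len(us)) ** (1.0 / p)


rng = random.Random(0)
n = 20000
us = [rng.gauss(0.0, 1.0) for _ in range(n)]         # U ~ N(0, 1)
vs = [rng.gauss(0.0, 2.0) for _ in range(n)]         # V ~ N(0, 4)
a_samples = [rng.gauss(0.0, 1.0) for _ in range(n)]  # A, independent of U and V

d_uv = empirical_wasserstein(us, vs)
# (P1): scaling both variables by the scalar a = -3 scales the distance by |a|.
d_scaled = empirical_wasserstein([-3.0 * u for u in us], [-3.0 * v for v in vs])
# (P2): adding the same independent A to both cannot increase the distance.
d_shifted = empirical_wasserstein([a + u for a, u in zip(a_samples, us)],
                                  [a + v for a, v in zip(a_samples, vs)])
print(d_uv, d_scaled, d_shifted)
```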

We will need the following additional property, which makes no independence assumptions on its variables. Its proof, and that of later results, is given in the appendix.

Lemma 1 (Partition lemma). Let $A_1, A_2, \ldots$ be a set of random variables describing a partition of $\Omega$, i.e. $A_i(\omega) \in \{0, 1\}$ and for any $\omega$ there is exactly one $A_i$ with $A_i(\omega) = 1$. Let $U, V$ be two random variables. Then

$$d_p(U, V) \le \sum_i d_p(A_i U, A_i V).$$

Let $\mathcal{Z}$ denote the space of value distributions with bounded moments. For two value distributions $Z_1, Z_2 \in \mathcal{Z}$ we will make use of a maximal form of the Wasserstein metric:

$$\bar{d}_p(Z_1, Z_2) := \sup_{x,a} d_p(Z_1(x, a), Z_2(x, a)).$$

We will use $\bar{d}_p$ to establish the convergence of the distributional Bellman operators.

Lemma 2. $\bar{d}_p$ is a metric over value distributions.
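In a finite MDP the supremum in $\bar{d}_p$ is a maximum over state-action pairs. The sketch below assumes, purely as a simplifying representation for this example, that each $Z(x, a)$ is stored as the same number of equally weighted atoms, so each $d_p$ term again reduces to sorting. The dictionary layout keyed by `(state, action)` and the function names are hypothetical.

```python
def wasserstein_atoms(atoms1, atoms2, p=1.0):
    """d_p between two distributions given as equally many, equally weighted atoms."""
    if len(atoms1) != len(atoms2):
        raise ValueError("this sketch assumes the same number of atoms")
    pairs = zip(sorted(atoms1), sorted(atoms2))
    return (sum(abs(u - v) ** p for u, v in pairs) / len(atoms1)) ** (1.0 / p)


def max_wasserstein(z1, z2, p=1.0):
    """bar d_p(Z1, Z2): the largest d_p(Z1(x, a), Z2(x, a)) over all (x, a)."""
    if z1.keys() != z2.keys():
        raise ValueError("value distributions must share state-action pairs")
    return max(wasserstein_atoms(z1[xa], z2[xa], p) for xa in z1)


# Hypothetical value distributions keyed by (state, action).
z1 = {(0, 0): [0.0, 1.0], (0, 1): [2.0, 2.0]}
z2 = {(0, 0): [1.0, 0.0], (0, 1): [0.0, 4.0]}
print(max_wasserstein(z1, z2))       # 2.0, attained at (0, 1)
print(max_wasserstein(z1, z2, p=2))  # 2.0
```

Note that $Z_1(0, 0)$ and $Z_2(0, 0)$ list the same atoms in a different order, so they are the same distribution and contribute 0; the maximum is driven entirely by the pair $(0, 1)$.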

3.3. Policy Evaluation

In the policy evaluation setting (Sutton & Barto, 1998) we are interested in the value function $V^\pi$ associated with a given policy $\pi$. The analogue here is the value distribution $Z^\pi$. In this section we characterize $Z^\pi$ and study the behaviour of the policy evaluation operator $\mathcal{T}^\pi$. We emphasize that $Z^\pi$ describes the intrinsic randomness of the agent's interactions with its environment, rather than some measure of uncertainty about the environment itself.

We view the reward function as a random vector $R \in \mathcal{Z}$, and define the transition operator $P^\pi : \mathcal{Z} \to \mathcal{Z}$

$$P^\pi Z(x, a) \overset{D}{:=} Z(X', A') \qquad (4)$$

$$X' \sim P(\cdot \mid x, a), \quad A' \sim \pi(\cdot \mid X'),$$

where we use capital letters to emphasize the random nature of the next state-action pair $(X', A')$. We define the distributional Bellman operator $\mathcal{T}^\pi : \mathcal{Z} \to \mathcal{Z}$ as

$$\mathcal{T}^\pi Z(x, a) \overset{D}{:=} R(x, a) + \gamma P^\pi Z(x, a). \qquad (5)$$
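For a finite MDP in which every distribution involved has finitely many atoms, (5) can be applied exactly by enumerating the reward, the next state $X'$, the next action $A'$ and the return $Z(X', A')$. The sketch below does so under the assumption that these sources of randomness are independent given $(x, a)$, apart from $A'$ depending on $X'$ through $\pi$. The dictionary-based layout and the name `distributional_bellman` are hypothetical. The number of atoms can grow multiplicatively with each application, which is one reason an approximate algorithm needs a projection step such as the one in Figure 1(d).

```python
from collections import defaultdict


def distributional_bellman(z, reward, trans, policy, gamma):
    """Apply T^pi Z(x, a) =D R(x, a) + gamma * Z(X', A') exactly.

    z[(x, a)]      -> {return: probability}, the current value distribution
    reward[(x, a)] -> {reward: probability}, the random reward R(x, a)
    trans[(x, a)]  -> {next state: probability}, P(. | x, a)
    policy[x]      -> {action: probability}, pi(. | x)
    """
    new_z = {}
    for (x, a) in z:
        out = defaultdict(float)
        for r, p_r in reward[(x, a)].items():
            for x2, p_x in trans[(x, a)].items():
                for a2, p_a in policy[x2].items():
                    for g, p_g in z[(x2, a2)].items():
                        # One atom of the compound distribution; equal values merge.
                        out[r + gamma * g] += p_r * p_x * p_a * p_g
        new_z[(x, a)] = dict(out)
    return new_z


# Hypothetical one-state, one-action chain with a fair-coin reward in {0, 1}.
reward = {(0, 0): {0.0: 0.5, 1.0: 0.5}}
trans = {(0, 0): {0: 1.0}}
policy = {0: {0: 1.0}}
z = {(0, 0): {0.0: 1.0}}  # start from a point mass at 0
for _ in range(2):
    z = distributional_bellman(z, reward, trans, policy, gamma=0.5)
print(sorted(z[(0, 0)].items()))  # [(0.0, 0.25), (0.5, 0.25), (1.0, 0.25), (1.5, 0.25)]
```

Taking expectations of both sides recovers the usual operator (2): the mean of the result is $\mathbb{E} R + \gamma\, \mathbb{E} Z$, here $0.5 + 0.5 \cdot 0.5 = 0.75$, while the full distribution keeps four equally likely outcomes that the expectation alone would not reveal.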

While $\mathcal{T}^\pi$ bears a surface resemblance to the usual Bellman operator (2), it is fundamentally different. In particular, three sources of randomness define the compound distribution $\mathcal{T}^\pi Z$:
