Unstructured Lumigraph Rendering

Chris Buehler Michael Bosse Leonard McMillan Steven Gortler Michael Cohen

MIT Laboratory for Computer Science Harvard University Microsoft Research

Abstract

We describe an image-based rendering approach that generalizes

many current image-based rendering algorithms, including light

field rendering and view-dependent texture mapping. In particular,

it allows for lumigraph-style rendering from a set of input cameras

in arbitrary configurations (i.e., not restricted to a plane or to any

specific manifold). In the case of regular and planar input camera

positions, our algorithm reduces to a typical lumigraph approach.

When presented with fewer cameras and good approximate geom-

etry, our algorithm behaves like view-dependent texture mapping.

The algorithm achieves this flexibility because it is designed to meet

a set of specific goals that we describe. We demonstrate this flexi-

bility with a variety of examples.

Keywords: Image-Based Rendering

1 Introduction

Image-based rendering (IBR) has become a popular alternative to

traditional three-dimensional graphics. Two effective IBR meth-

ods are view-dependent texture mapping (VDTM) [3] and the light

field/lumigraph [10, 5] approaches. The light field and VDTM algo-

rithms are in many ways quite different in their assumptions and in-

put. Light field rendering requires a large collection of images from

cameras whose centers lie on a regularly sampled two-dimensional

patch, but it makes few if any assumptions about the geometry of

the scene. In contrast, VDTM assumes a relatively accurate ge-

ometric model, but requires only a small number of images from

input cameras that can be in general positions. These images are

then “projected” onto the geometry for rendering.

We suggest that, at their core, these two approaches are quite

similar. Both are methods for interpolating color values for a de-

sired ray as some combination of input rays. In VDTM this inter-

polation is performed using a geometric model to determine which

pixel from each input image “corresponds” to the desired ray in the

output image. Of these corresponding rays, those that are closest in

angle to the desired ray are weighted to make the greatest contribu-

tion to the interpolated result.
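The angle-based weighting described above can be sketched as follows. This is a minimal illustration, not the weighting used by any particular VDTM system: the function names, the inverse-angle weight, and the `eps` regularizer are all assumptions introduced here.

```python
import math

def angle_between(a, b):
    """Angle in radians between two 3D direction vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    # Clamp to guard against rounding just outside [-1, 1].
    return math.acos(max(-1.0, min(1.0, dot / (norm_a * norm_b))))

def blend_by_angle(desired_dir, source_dirs, source_colors, eps=1e-6):
    """Blend source colors, favoring rays closest in angle to the desired ray.

    The inverse-angle weight and eps are illustrative assumptions.
    """
    weights = [1.0 / (angle_between(desired_dir, d) + eps) for d in source_dirs]
    total = sum(weights)
    channels = len(source_colors[0])
    # Normalized weighted sum, one color channel at a time.
    return tuple(
        sum(w * c[i] for w, c in zip(weights, source_colors)) / total
        for i in range(channels)
    )
```

Two source rays at equal angles to the desired ray contribute equally, while a source ray that coincides with the desired ray dominates the result.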

Light field rendering can be similarly interpreted. For each de-

sired ray (s, t, u, v), one searches the image database for rays that

intersect near some (u, v) point on a “focal plane” and have a simi-

lar angle to the desired ray, as measured by the ray’s intersection on

the “camera plane” (s, t). In a depth-corrected lumigraph, the focal

plane is effectively replaced with an approximate geometric model,

making this approach even more similar to view-dependent texture

mapping.
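To make the (s, t, u, v) parameterization concrete, the sketch below maps an arbitrary ray to its two-plane coordinates. The placement of the camera plane at z = 0 and the focal plane at z = `focal_z`, as well as the function name, are assumptions chosen for illustration.

```python
def ray_to_stuv(origin, direction, focal_z=1.0):
    """Map a ray to (s, t, u, v).

    (s, t) is where the ray crosses the camera plane z = 0 and (u, v) is
    where it crosses the focal plane z = focal_z. Both plane positions are
    illustrative assumptions.
    """
    ox, oy, oz = origin
    dx, dy, dz = direction
    if dz == 0:
        raise ValueError("ray is parallel to both planes")
    a = (0.0 - oz) / dz        # ray parameter at the camera plane
    b = (focal_z - oz) / dz    # ray parameter at the focal plane
    s, t = ox + a * dx, oy + a * dy
    u, v = ox + b * dx, oy + b * dy
    return s, t, u, v
```

A light field renderer would then look up stored rays whose (s, t) and (u, v) samples are nearest to these coordinates and interpolate among them.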

Given these related IBR approaches, we attempt to address the

following questions: Is there a generalized rendering framework

that spans all of these image-based rendering algorithms, having

VDTM and lumigraph/light fields as extremes? Might such an al-

gorithm adapt well to various numbers of input images from cam-

eras in general configurations while also permitting various levels

of geometric accuracy?

In this paper we approach the problem by suggesting a set of

goals that any image-based rendering algorithm should have. We

find that no previous IBR algorithm simultaneously satisfies all of

these goals. Therefore, these algorithms behave quite well under

appropriate assumptions on their input, but may produce unnecessarily

poor renderings when these assumptions are violated.

We then describe an algorithm for “unstructured lumigraph ren-

dering” (ULR), that generalizes both lumigraph and VDTM render-

ing. Our algorithm is designed specifically with the stated goals in

mind. As a result, our renderer behaves well with a wide variety

of inputs. These include source cameras that are not on a com-

mon plane, such as source images taken by moving forward into a

scene, a configuration that would be problematic for previous IBR

approaches.

It should be no surprise that our algorithm bears many resem-

blances to earlier approaches. The main contribution of our algo-

rithm is that, unlike previously published methods, it is designed to

meet a set of listed goals. Thus, it works well on a wide range of

differing inputs, from few images with an accurate geometric model

to many images with minimal geometric information.

2 Previous Work

The basic approach to view-dependent texture mapping (VDTM) is

put forth by Debevec et al. [3] in their Façade image-based modeling

and rendering system. Façade is designed to estimate geometric

models consistent with a small set of source images. As part of this

system, a rendering algorithm was developed where pixels from all

relevant cameras were combined and weighted to determine a view-

dependent texture for the derived geometric models. In later work,

Debevec et al. [4] describe a real-time VDTM algorithm. In this

algorithm, each polygon in the geometric model maintains a “view

map” data structure that is used to quickly determine a set of three

input cameras that should be used to texture it. Like most real-time

VDTM algorithms, this algorithm uses hardware supported projec-

tive texture mapping [6] for efficiency.

At the other extreme, Levoy and Hanrahan [10] describe the light

field rendering algorithm, in which a large collection of images are

used to render novel views of a scene. This collection of images

is captured from cameras whose centers lie on a regularly sampled

two-dimensional plane. Light fields otherwise make few assump-

tions about the geometry of the scene. Gortler et al. [5] describe

a similar rendering algorithm called the lumigraph. In addition,

the authors of the lumigraph paper suggest many workarounds to

overcome limitations of the basic approach, including a “rebinning”

process to handle source images acquired from general camera po-

sitions and a “depth-correction” extension to allow for more ac-

curate ray reconstructions from an insufficient number of source

cameras.

Many extensions, enhancements, alternatives, and variations

to these basic algorithms have since been suggested. These in-

clude techniques for rendering digitized three-dimensional models

in combination with acquired images such as Pulli et al. [13] and

Wood et al. [18]. Shum et al. [17] suggest alternate lower-dimensional

lumigraph approximations that use approximate depth

correction. Heigl et al. [7] describe an algorithm to perform IBR

from an unstructured set of data cameras, in which the source

cameras’ centers are projected into the desired image plane,

triangulated, and used to reconstruct the interior pixels.

Isaksen et al. [9] show how the common “image-space” coordinate

frames used in light field rendering can be viewed as a focal plane

for dynamically generating alternative ray reconstructions. A formal

analysis of the trade-off between the number of cameras and

the fidelity of geometry is presented in [1].

3 Goals

We begin by presenting a list of desirable properties that we feel an

ideal image-based rendering algorithm should have. No previously

published method satisfies all of these goals. In the following sec-

tion we describe a new algorithm that attempts to meet these goals

while maintaining interactive rendering rates.

Use of geometric proxies: When geometric knowledge is

present, it should be used to assist in the reconstruction of a desired

ray (see Figure 1). We refer to such approximate geometric infor-

mation as a proxy. The combination of accurate geometric proxies

with nearly Lambertian surface properties allows for high quality

reconstructions from relatively few source images. The reconstruc-

tion process merely entails looking for rays from source cameras

that see the “same” point. This idea is central to all VDTM algo-

rithms. It is also the distinguishing factor in geometry-corrected lu-

migraphs and surface light field algorithms. Approximate proxies,

such as the focal-plane abstraction used by Isaksen [9], allow for

the accurate reconstruction of rays at specific depths from standard

light fields.
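The idea of looking for source rays that see the "same" point can be sketched with the simplest possible proxy, a single plane. In this illustration the planar proxy at z = `plane_z`, the axis-aligned pinhole camera looking down +z, and all function names are assumptions; a real system would use full camera calibration and a richer proxy.

```python
def intersect_plane(origin, direction, plane_z):
    """Point where the ray meets the proxy plane z = plane_z, or None."""
    if direction[2] == 0:
        return None                      # ray is parallel to the plane
    t = (plane_z - origin[2]) / direction[2]
    if t <= 0:
        return None                      # plane is behind the ray origin
    return tuple(o + t * d for o, d in zip(origin, direction))

def project(point, cam_center, focal=1.0):
    """Project a 3D point into an axis-aligned pinhole camera looking down +z."""
    x, y, z = (p - c for p, c in zip(point, cam_center))
    if z <= 0:
        return None                      # point is behind the camera
    return (focal * x / z, focal * y / z)

def source_pixel_for_ray(origin, direction, plane_z, cam_center, focal=1.0):
    """Image coordinates in a source camera that see the same proxy point."""
    hit = intersect_plane(origin, direction, plane_z)
    return None if hit is None else project(hit, cam_center, focal)
```

The desired ray is first intersected with the proxy, and the hit point is then projected into each source camera to find the corresponding source ray.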

With a highly accurate geometric model, the visibility of any

surface point relative to a particular source camera can also be de-

termined. If a camera’s view of the point is occluded by some other

point on the geometric model, then that camera should not be used

in the reconstruction of the desired ray. When possible, image-

based algorithms should consider visibility in their reconstruction.
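A visibility test of this kind can be sketched as a line-of-sight check between each source camera and the surface point. Here the occluding geometry is assumed, purely for illustration, to be a list of spheres given as `(center, radius)` pairs that do not contain the surface point itself; the function names are likewise hypothetical.

```python
def segment_hits_sphere(p, q, center, radius):
    """True if the segment from p to q passes through the sphere's interior."""
    d = [b - a for a, b in zip(p, q)]
    m = [a - c for a, c in zip(p, center)]
    dd = sum(x * x for x in d)
    if dd == 0:
        t = 0.0                          # degenerate segment: p == q
    else:
        # Parameter of the point on the segment closest to the sphere center.
        t = max(0.0, min(1.0, -sum(x * y for x, y in zip(m, d)) / dd))
    closest = [a + t * x for a, x in zip(p, d)]
    dist2 = sum((a - c) ** 2 for a, c in zip(closest, center))
    return dist2 < radius * radius

def visible_cameras(point, cam_centers, occluders):
    """Indices of cameras with an unobstructed line of sight to point."""
    return [
        i for i, cam in enumerate(cam_centers)
        if not any(segment_hits_sphere(cam, point, c, r) for c, r in occluders)
    ]
```

Only the cameras returned by `visible_cameras` would then be considered when reconstructing the desired ray through that surface point.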

Figure 1: When available, approximate geometric information should be used to determine which source rays correspond well to a desired ray. Here C_x denotes the position of a reference camera, and D is the desired novel viewpoint.

Unstructured input: It is also desirable for an image-based

rendering algorithm to accept input images from cameras in gen-

eral position. The original light field method assumes that the cam-

eras are arranged at evenly spaced positions on a single plane. This

limits the applicability of this method since it requires a special

capture gantry that is both expensive and difficult to use in many

settings [11].

The lumigraph paper describes an acquisition system that uses a

hand-held video camera to acquire input images [5]. They apply a

preprocessing step, called rebinning, that resamples the input im-

ages from virtual source cameras situated on a regular grid. This

rebinning process adds an additional reconstruction and sampling

step to lumigraph creation. This extra step tends to degrade the

overall quality of the representation. This can be demonstrated by

noting that a rebinned lumigraph cannot, in general, reproduce its

input images. The surface light field algorithm suffers from essen-

tially the same resampling problem.

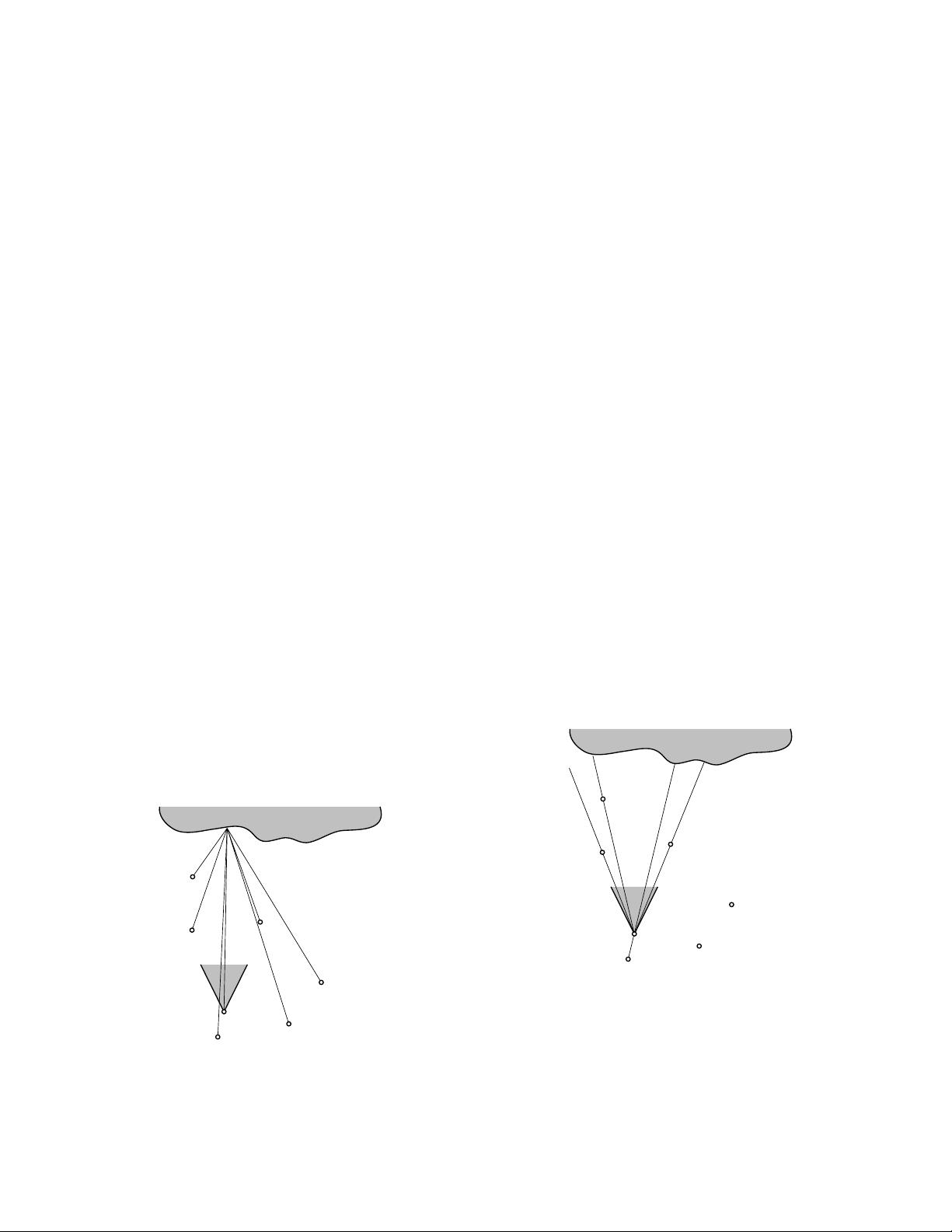

Epipole consistency: When a desired ray passes through the

center of projection of a source camera it can be trivially re-

constructed from the ray database (assuming a sufficiently high-

resolution input image and the ray falls within the camera’s field-

of-view) (see Figure 2). In this case, an ideal algorithm should

return a ray from the source image. An algorithm with epipole

consistency will reconstruct this ray correctly without any geomet-

ric information. With large numbers of source cameras, algorithms

with epipole consistency can create accurate reconstructions with

essentially no geometric information. Light field and lumigraph al-

gorithms are designed specifically to maintain this property.
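The epipole case can be detected without any scene geometry by testing whether a source camera center lies on the line of the desired ray. The sketch below is an illustration under assumed names and an assumed tolerance `tol`; it does not check image resolution or field of view.

```python
import math

def distance_to_line(point, origin, direction):
    """Perpendicular distance from point to the line through origin along direction."""
    length = math.sqrt(sum(x * x for x in direction))
    unit = [x / length for x in direction]
    w = [p - o for p, o in zip(point, origin)]
    t = sum(a * b for a, b in zip(w, unit))      # projection of w onto the line
    return math.sqrt(sum((a - t * b) ** 2 for a, b in zip(w, unit)))

def cameras_on_ray(origin, direction, cam_centers, tol=1e-9):
    """Indices of source cameras whose centers lie on the desired ray's line."""
    return [
        i for i, c in enumerate(cam_centers)
        if distance_to_line(c, origin, direction) <= tol
    ]
```

For any camera returned by `cameras_on_ray`, an epipole-consistent renderer would take the color directly from that camera's image along the shared ray.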

Surprisingly, many real-time VDTM algorithms do not ensure

this property, even approximately, and therefore, will not work

properly when given poor geometry. The algorithms described

in [13, 2] reconstruct all of the rays in a fixed desired view using

a fixed selection of three source images but, as shown by the origi-

nal light field paper, proper reconstruction of a desired image may

involve using some rays from each of the source images. The algo-

rithm described in [4] always uses three source cameras to recon-

struct all of the desired pixels on a polygon of the geometry proxy.

This departs from epipole consistency if the proxy is coarse. The

algorithm of Heigl et al. [7] is a notable exception that, like a light

field or lumigraph, maintains epipole consistency.

Figure 2: When a desired ray passes through a source camera center, that source camera should be emphasized most in the reconstruction. Here this case occurs for cameras C_1, C_2, C_3, and C_6.

Minimal angular deviation: In general, the choice of which

input images are used to reconstruct a desired ray should be based

on a natural and consistent measure of closeness (see Figure 3). In

particular, source image rays with similar angles to the desired ray

should be used when possible.
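One way to realize such a measure of closeness is to rank source cameras by the angle, measured at a point on the proxy, between the direction to the desired viewpoint and the direction to each camera. The sketch below assumes this particular measure and a fixed count `k` of cameras to keep; the function names are hypothetical.

```python
import math

def angular_deviation(point, desired_center, cam_center):
    """Angle at point between the directions to the desired view and to a camera."""
    a = [d - p for d, p in zip(desired_center, point)]
    b = [c - p for c, p in zip(cam_center, point)]
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    # Clamp to guard against rounding just outside [-1, 1].
    return math.acos(max(-1.0, min(1.0, dot / (norm_a * norm_b))))

def k_closest_cameras(point, desired_center, cam_centers, k):
    """Indices of the k cameras with the smallest angular deviation at point."""
    ranked = sorted(
        range(len(cam_centers)),
        key=lambda i: angular_deviation(point, desired_center, cam_centers[i]),
    )
    return ranked[:k]
```

With the proxy point at the origin and the desired viewpoint on the z axis, a camera on the same axis ranks first, followed by cameras at progressively larger angles.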
