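DPM (Deformable Part Model) is a highly successful object detection algorithm that won the VOC (Visual Object Classes) detection challenge three years in a row, in 2007, 2008 and 2009. It has since become an important component of many classifiers and of segmentation, human pose estimation and action classification systems. In 2010 VOC presented Pedro Felzenszwalb with a "lifetime achievement" award.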

A Discriminatively Trained, Multiscale, Deformable Part Model

Pedro Felzenszwalb

University of Chicago

pff@cs.uchicago.edu

David McAllester

Toyota Technological Institute at Chicago

mcallester@tti-c.org

Deva Ramanan

TTI-C and UC Irvine

dramanan@ics.uci.edu

Abstract

This paper describes a discriminatively trained, multiscale, deformable part model for object detection. Our system achieves a two-fold improvement in average precision over the best performance in the 2006 PASCAL person detection challenge. It also outperforms the best results in the 2007 challenge in ten out of twenty categories. The system relies heavily on deformable parts. While deformable part models have become quite popular, their value had not been demonstrated on difficult benchmarks such as the PASCAL challenge. Our system also relies heavily on new methods for discriminative training. We combine a margin-sensitive approach for data mining hard negative examples with a formalism we call latent SVM. A latent SVM, like a hidden CRF, leads to a non-convex training problem. However, a latent SVM is semi-convex and the training problem becomes convex once latent information is specified for the positive examples. We believe that our training methods will eventually make possible the effective use of more latent information such as hierarchical (grammar) models and models involving latent three dimensional pose.

1. Introduction

We consider the problem of detecting and localizing objects of a generic category, such as people or cars, in static images. We have developed a new multiscale deformable part model for solving this problem. The models are trained using a discriminative procedure that only requires bounding box labels for the positive examples. Using these models we implemented a detection system that is both highly efficient and accurate, processing an image in about 2 seconds and achieving recognition rates that are significantly better than previous systems.

Our system achieves a two-fold improvement in average

precision over the winning system [5] in the 2006 PASCAL

person detection challenge. The system also outperforms

the best results in the 2007 challenge in ten out of twenty

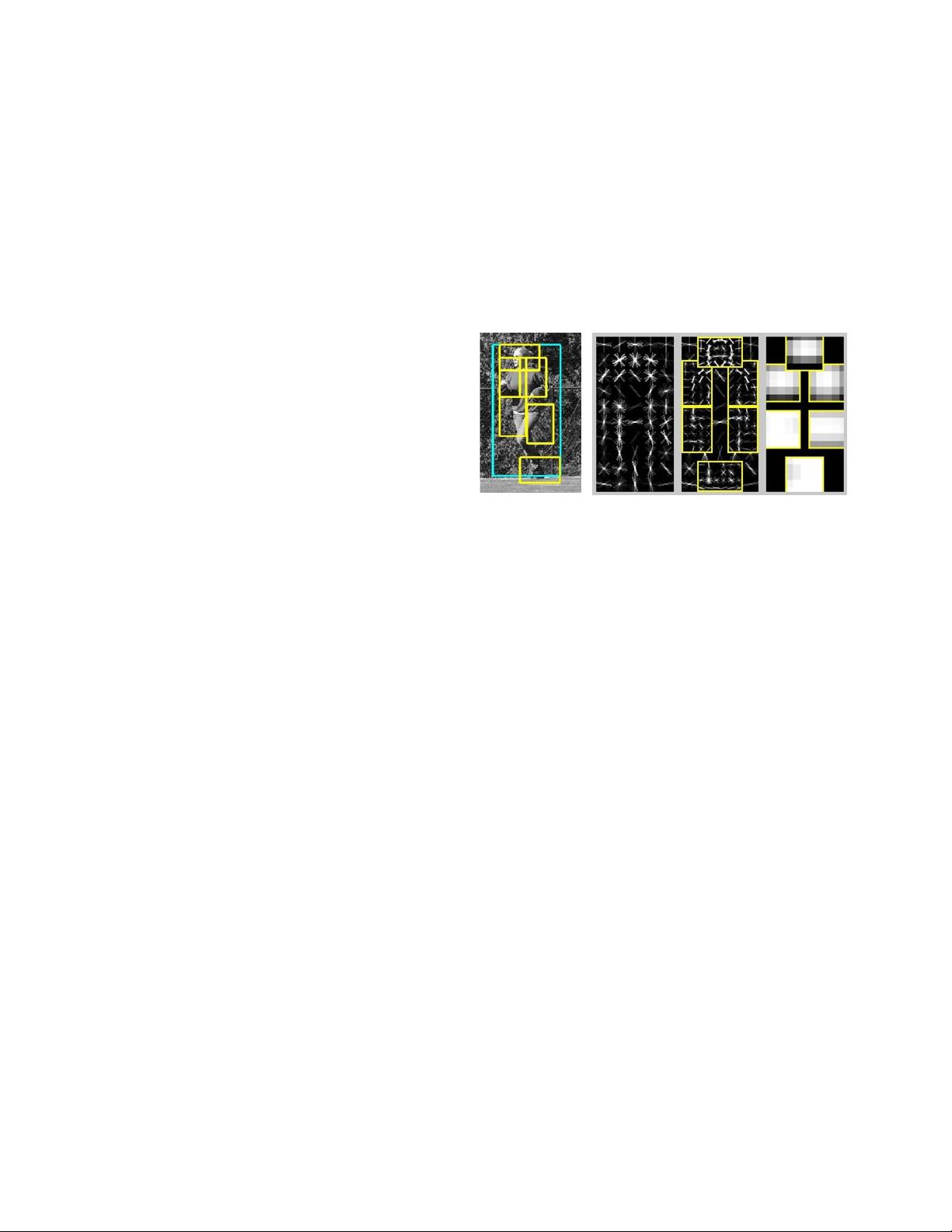

object categories. Figure 1 shows an example detection ob-

tained with our person model.

Figure 1. Example detection obtained with the person model. The

model is defined by a coarse template, several higher resolution

part templates and a spatial model for the location of each part.

The notion that objects can be modeled by parts in a deformable configuration provides an elegant framework for representing object categories [1–3, 6, 10, 12, 13, 15, 16, 22]. While these models are appealing from a conceptual point of view, it has been difficult to establish their value in practice. On difficult datasets, deformable models are often outperformed by “conceptually weaker” models such as rigid templates [5] or bag-of-features [23]. One of our main goals is to address this performance gap.

Our models include both a coarse global template covering an entire object and higher resolution part templates. The templates represent histogram of gradient features [5]. As in [14, 19, 21], we train models discriminatively. However, our system is semi-supervised, trained with a max-margin framework, and does not rely on feature detection. We also describe a simple and effective strategy for learning parts from weakly-labeled data. In contrast to computationally demanding approaches such as [4], we can learn a model in 3 hours on a single CPU.

Another contribution of our work is a new methodology for discriminative training. We generalize SVMs for handling latent variables such as part positions, and introduce a new method for data mining “hard negative” examples during training. We believe that handling partially labeled data is a significant issue in machine learning for computer vision. For example, the PASCAL dataset only specifies a bounding box for each positive example of an object. We treat the position of each object part as a latent variable. We also treat the exact location of the object as a latent variable, requiring only that our classifier select a window that has large overlap with the labeled bounding box.
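The overlap requirement can be illustrated with a short Python sketch. The text above does not say how overlap is measured, so the intersection-over-union ratio, the 0.5 threshold, and the function names `overlap_ratio` and `admissible_windows` are all assumptions made for illustration rather than the system's actual criterion.

```python
def overlap_ratio(box_a, box_b):
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2).

    Assumption: overlap is measured as intersection area over union area.
    """
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def admissible_windows(candidates, labeled_box, min_overlap=0.5):
    """Keep the candidate windows that overlap the labeled box enough.

    min_overlap=0.5 is an illustrative threshold, not a value from the text.
    """
    return [w for w in candidates if overlap_ratio(w, labeled_box) >= min_overlap]
```

Under these assumptions, the latent object location for a positive example would range over the windows returned by `admissible_windows`, rather than being pinned to the labeled box itself.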

A latent SVM, like a hidden CRF [19], leads to a non-convex training problem. However, unlike a hidden CRF, a latent SVM is semi-convex and the training problem becomes convex once latent information is specified for the positive training examples. This leads to a general coordinate descent algorithm for latent SVMs.

System Overview. Our system uses a scanning window approach. A model for an object consists of a global “root” filter and several part models. Each part model specifies a spatial model and a part filter. The spatial model defines a set of allowed placements for a part relative to a detection window, and a deformation cost for each placement.

The score of a detection window is the score of the root filter on the window plus the sum over parts, of the maximum over placements of that part, of the part filter score on the resulting subwindow minus the deformation cost. This is similar to classical part-based models [10, 13]. Both root and part filters are scored by computing the dot product between a set of weights and histogram of gradient (HOG) features within a window. The root filter is equivalent to a Dalal-Triggs model [5]. The features for the part filters are computed at twice the spatial resolution of the root filter. Our model is defined at a fixed scale, and we detect objects by searching over an image pyramid.
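The scoring rule described above can be sketched in a few lines of Python with NumPy. This is a minimal illustration, assuming that the part filter responses and deformation costs have already been evaluated at each allowed placement and are passed in as flat arrays; the names `filter_response` and `window_score` are hypothetical.

```python
import numpy as np


def filter_response(weights, features):
    """Dot product between filter weights and the HOG features in a window."""
    return float(np.sum(np.asarray(weights, dtype=float) *
                        np.asarray(features, dtype=float)))


def window_score(root_score, part_scores, deformation_costs):
    """Root score plus, for each part, the best (score - cost) over placements.

    part_scores[p][k] is the response of part filter p at allowed placement k,
    and deformation_costs[p][k] is the cost of that placement (assumed inputs).
    Returns the total score and the index of the chosen placement per part.
    """
    total = float(root_score)
    best_placements = []
    for scores, costs in zip(part_scores, deformation_costs):
        net = np.asarray(scores, dtype=float) - np.asarray(costs, dtype=float)
        best = int(np.argmax(net))
        best_placements.append(best)
        total += float(net[best])
    return total, best_placements
```

Because each part is maximized independently given the detection window, the cost of scoring a window grows linearly with the number of parts and placements.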

In training we are given a set of images annotated with bounding boxes around each instance of an object. We reduce the detection problem to a binary classification problem. Each example x is scored by a function of the form, $f_\beta(x) = \max_z \beta \cdot \Phi(x, z)$. Here β is a vector of model parameters and z are latent values (e.g. the part placements). To learn a model we define a generalization of SVMs that we call latent variable SVM (LSVM). An important property of LSVMs is that the training problem becomes convex if we fix the latent values for positive examples. This can be used in a coordinate descent algorithm.
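The latent scoring function can be written out directly. The sketch below assumes that the feature vectors Φ(x, z) for all candidate latent values z of one example have been stacked as the rows of an array; that layout and the name `latent_score` are illustrative choices, not part of the paper.

```python
import numpy as np


def latent_score(beta, candidates):
    """Evaluate f_beta(x) = max_z beta . Phi(x, z) for one example.

    candidates has shape (num_latent_values, dim); row k holds Phi(x, z_k).
    Returns the maximum score and the index of the maximizing latent value.
    """
    scores = np.asarray(candidates, dtype=float) @ np.asarray(beta, dtype=float)
    best_z = int(np.argmax(scores))
    return float(scores[best_z]), best_z
```

Since the score is a maximum of functions that are linear in β, it is convex in β. Once z is fixed, the score reduces to a single linear function, which is the setting of an ordinary linear SVM.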

In practice we iteratively apply classical SVM training to triples $(\langle x_1, z_1, y_1 \rangle, \ldots, \langle x_n, z_n, y_n \rangle)$ where $z_i$ is selected to be the best scoring latent label for $x_i$ under the model learned in the previous iteration. An initial root filter is generated from the bounding boxes in the PASCAL dataset. The parts are initialized from this root filter.
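The alternation just described can be sketched as follows. This is a toy illustration under several assumptions: `train_linear_svm` is a simple subgradient solver standing in for whatever classical SVM trainer is actually used, the regularization and step-size values are arbitrary, and the initialization from a root filter is replaced by a caller-supplied `init_beta`. All function names are hypothetical.

```python
import numpy as np


def train_linear_svm(features, labels, reg=0.01, epochs=200, lr=0.1):
    """Stand-in SVM solver: subgradient descent on the regularized hinge loss."""
    beta = np.zeros(features.shape[1])
    for _ in range(epochs):
        margins = labels * (features @ beta)
        violators = margins < 1
        hinge_grad = (labels[violators][:, None] * features[violators]).sum(axis=0)
        beta -= lr * (reg * beta - hinge_grad / len(labels))
    return beta


def latent_svm_coordinate_descent(candidates, labels, init_beta, rounds=5):
    """Alternate between choosing latent values and classical SVM training.

    candidates[i] has shape (num_z_i, dim); its rows are Phi(x_i, z).
    labels[i] is +1 or -1.
    """
    beta = np.asarray(init_beta, dtype=float)
    for _ in range(rounds):
        # Step 1: pick the best scoring latent value for each example
        # under the model from the previous iteration.
        chosen = np.stack([c[np.argmax(c @ beta)] for c in candidates])
        # Step 2: with latent values fixed, train an ordinary linear SVM.
        beta = train_linear_svm(chosen, labels)
    return beta
```

With the latent values held fixed in step 2, each round solves a convex problem, which is what makes the alternation practical even though the joint problem is not convex.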

2. Model

The underlying building blocks for our models are the Histogram of Oriented Gradient (HOG) features from [5]. We represent HOG features at two different scales. Coarse features are captured by a rigid template covering an entire detection window. Finer scale features are captured by part templates that can be moved with respect to the detection window. The spatial model for the part locations is equivalent to a star graph or 1-fan [3] where the coarse template serves as a reference position.

[Figure 2 panels: image pyramid; HOG feature pyramid]

Figure 2. The HOG feature pyramid and an object hypothesis defined in terms of a placement of the root filter (near the top of the pyramid) and the part filters (near the bottom of the pyramid).

2.1. HOG Representation

We follow the construction in [5] to define a dense representation of an image at a particular resolution. The image is first divided into 8x8 non-overlapping pixel regions, or cells. For each cell we accumulate a 1D histogram of gradient orientations over pixels in that cell. These histograms capture local shape properties but are also somewhat invariant to small deformations.

The gradient at each pixel is discretized into one of nine orientation bins, and each pixel “votes” for the orientation of its gradient, with a strength that depends on the gradient magnitude at that pixel. For color images, we compute the gradient of each color channel and pick the channel with highest gradient magnitude at each pixel. Finally, the histogram of each cell is normalized with respect to the gradient energy in a neighborhood around it. We look at the four 2 × 2 blocks of cells that contain a particular cell and normalize the histogram of the given cell with respect to the total energy in each of these blocks. This leads to a 9 × 4 dimensional vector representing the local gradient information inside a cell.
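The cell histograms and block normalization can be illustrated with a simplified NumPy sketch. Several details here are assumptions not fixed by the text above: the input is a single-channel image, orientations are treated as unsigned (folded into [0, π)), each pixel votes into a single bin with its gradient magnitude, the block energy is the L2 norm of the four cell histograms in the block, and only interior cells (those with all four surrounding blocks) are returned. The names `hog_cells` and `normalize_cells` are hypothetical.

```python
import numpy as np


def hog_cells(image, cell=8, bins=9):
    """Per-cell orientation histograms for a 2-D grayscale image."""
    gy, gx = np.gradient(image.astype(float))
    magnitude = np.hypot(gx, gy)
    # Assumption: unsigned orientation, folded into [0, pi).
    angle = np.arctan2(gy, gx) % np.pi
    bin_idx = np.minimum((angle / np.pi * bins).astype(int), bins - 1)
    rows, cols = image.shape[0] // cell, image.shape[1] // cell
    hist = np.zeros((rows, cols, bins))
    for r in range(rows):
        for c in range(cols):
            sl = (slice(r * cell, (r + 1) * cell), slice(c * cell, (c + 1) * cell))
            # Each pixel votes for its orientation bin, weighted by magnitude.
            hist[r, c] = np.bincount(bin_idx[sl].ravel(),
                                     weights=magnitude[sl].ravel(),
                                     minlength=bins)
    return hist


def normalize_cells(hist, eps=1e-6):
    """Normalize each interior cell by the four 2x2 blocks that contain it."""
    energy = (hist ** 2).sum(axis=2)
    # block[r, c] is the energy of the 2x2 block whose top-left cell is (r, c).
    block = energy[:-1, :-1] + energy[1:, :-1] + energy[:-1, 1:] + energy[1:, 1:]
    rows, cols, bins = hist.shape
    out = np.zeros((rows - 2, cols - 2, 4 * bins))
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            parts = [hist[r, c] / np.sqrt(block[br, bc] + eps)
                     for br in (r - 1, r) for bc in (c - 1, c)]
            out[r - 1, c - 1] = np.concatenate(parts)
    return out
```

With nine bins, each interior cell ends up with 4 × 9 = 36 values, one normalized copy of its histogram per surrounding block.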

We define a HOG feature pyramid by computing HOG features of each level of a standard image pyramid (see Figure 2). Features at the top of this pyramid capture coarse gradients histogrammed over fairly large areas of the input image while features at the bottom of the pyramid capture finer gradients histogrammed over small areas.
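A feature pyramid of this kind can be sketched as follows. The text above does not specify the scale step between levels or how the image is resampled, so halving the resolution with 2 × 2 averaging and stopping at a minimum side length of 16 pixels are assumptions made for brevity. The feature extractor is passed in as `feature_fn`, and all names are hypothetical.

```python
import numpy as np


def downsample_by_two(image):
    """Halve the resolution of a 2-D image by averaging 2x2 pixel blocks."""
    rows, cols = image.shape[0] // 2 * 2, image.shape[1] // 2 * 2
    trimmed = image[:rows, :cols].astype(float)
    return trimmed.reshape(rows // 2, 2, cols // 2, 2).mean(axis=(1, 3))


def feature_pyramid(image, feature_fn, min_size=16):
    """Apply feature_fn to every level of an image pyramid.

    The first entry corresponds to the bottom (finest) level and the last
    entry to the top (coarsest) level.
    """
    levels = []
    current = image.astype(float)
    while min(current.shape) >= min_size:
        levels.append(feature_fn(current))
        current = downsample_by_two(current)
    return levels
```

Because the cell size stays fixed while the image shrinks, a cell at a higher level covers a larger area of the original image, which matches the coarse-to-fine description above.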
