may be affected by some competing workload on the host

computer. Hence a better understanding of data transfers as-

sociated with GPU computing is an essential piece of work to

support latency-critical real-time systems. Unfortunately, prior

work [3], [9] did not provide performance characterization in

the context of latency and concurrent workload; they focused

on a basic comparison of DMA and direct I/O access.

Contribution: In this paper, we clarify the performance

characteristics of currently-achievable data transfer methods

for GPU computing while unveiling several new data transfer

methods other than the well-known DMA and I/O read-and-

write access. We quantify the advantages and disadvantages of

these methods, leading to the conclusion that

the typical DMA and I/O read-and-write methods are the

most effective in terms of latency even in the presence of competing

workload, whereas concurrent data streams from multiple

different contexts can benefit from the capability of on-chip

microcontrollers integrated in the GPU. To the best of our

knowledge, this is the first evidence on data transfers for

GPU computing that goes beyond intuitive expectation, allowing

system designers to choose appropriate data transfer methods

depending on the requirement of their latency-sensitive GPU

applications. Without our findings, no one can reason about

how to use GPUs while minimizing data transfer latency and

performance interference. These findings are also applicable

to many PCIe compute devices, not only to a specific GPU.

We believe that the contributions of this paper are useful for

low-latency GPU computing.

Organization: The rest of this paper is organized as fol-

lows. Section II presents the assumption and terminology

behind this paper. Section III provides an open investigation

of data transfer methods for GPU computing. Section IV

compares the performance of the investigated data transfer

methods. Related work is discussed in Section V. We provide

our concluding remarks in Section VI.

II. ASSUMPTION AND TERMINOLOGY

We assume that the Compute Unified Device Architecture

(CUDA) is used for GPU programming [11]. A unit of code

that is individually launched on the GPU is called a kernel.

The kernel is composed of multiple threads that execute the

code in parallel.

CUDA provides a set of application programming interface

(API) functions to manage the GPU. A typical CUDA program

takes the following steps: (i) allocate space in the device

memory, (ii) copy input data to the allocated device memory

space, (iii) launch the program on the GPU, (iv) copy output

data back to the host memory, and (v) free the allocated

device memory space. This paper is concerned with

(ii) and (iv). In particular, we use the cuMemcpyHtoD() and

cuMemcpyDtoH() functions provided by the CUDA

Driver API, which correspond to (ii) and (iv), respectively.

Since an open-source implementation of these functions is

available [9], we modify them to accommodate various data

transfer methods investigated in this paper. While they are

synchronous data transfer functions, CUDA also provides

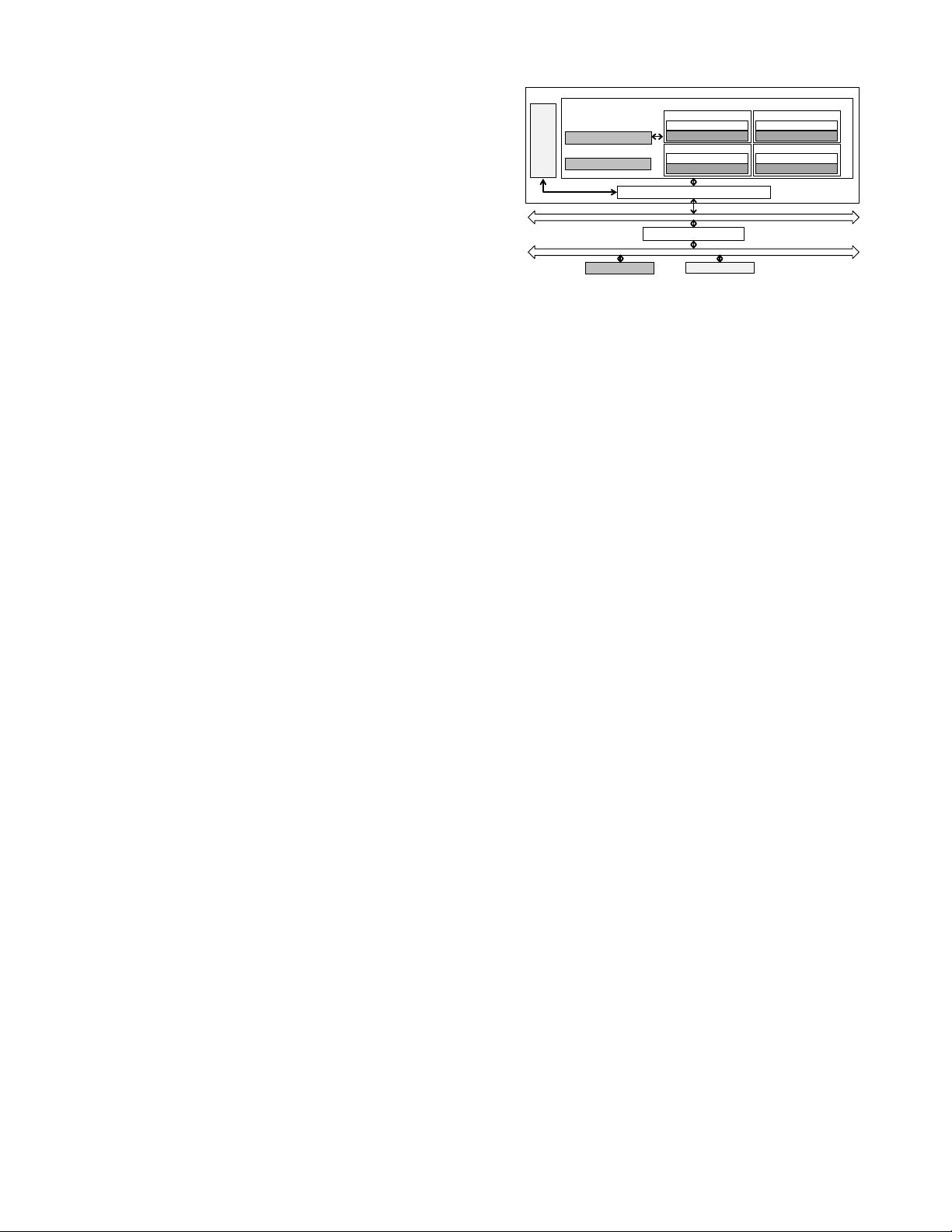

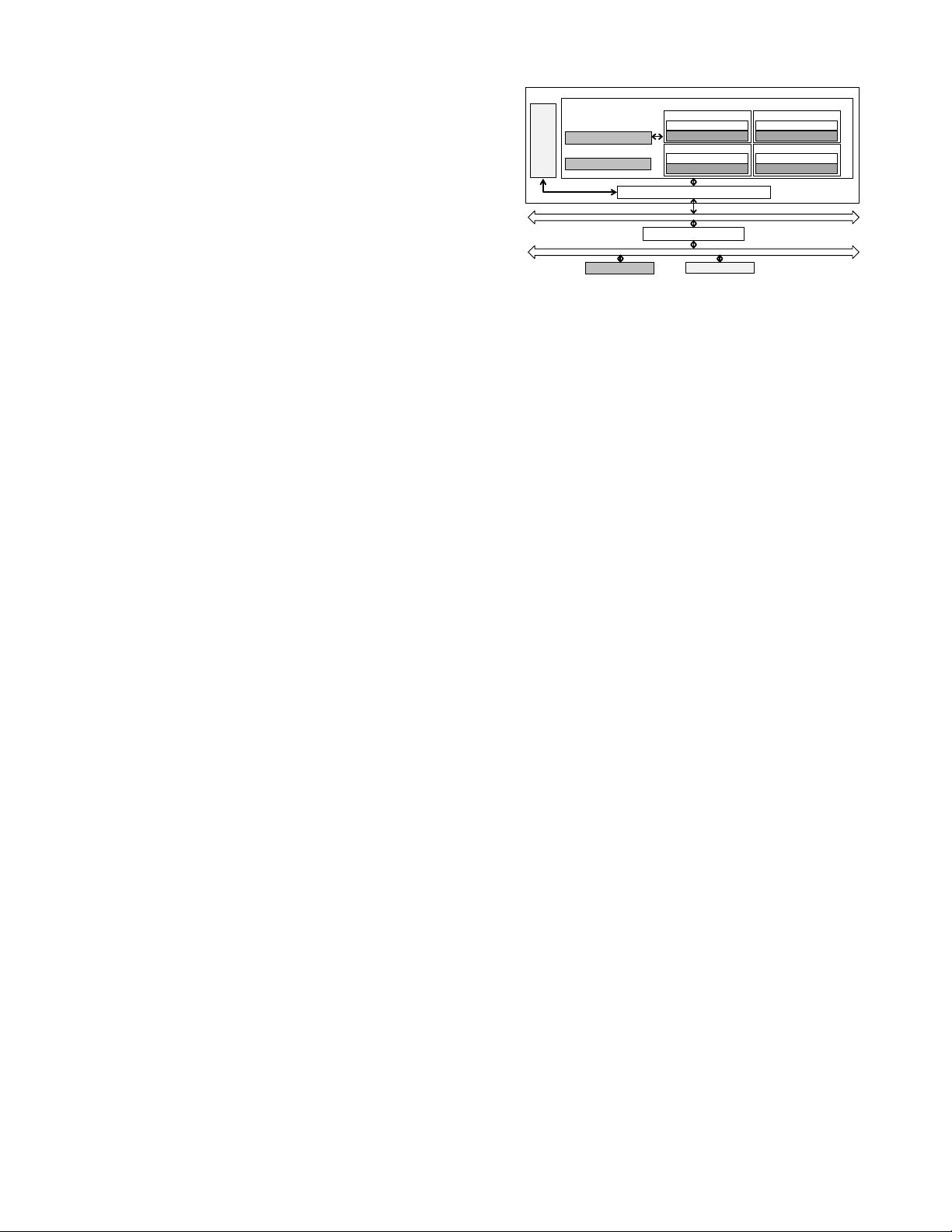

Fig. 2. Block diagram of the target system. Labels recovered from the figure: CPU, Host Memory, PCI Bridge, GPU Board, Host Interface, GPU Chip, Device Memory, Microcontrollers, DMA Engines, and four GPCs, each with CUDA Cores and a Microcontroller.

asynchronous data transfer functions. In this paper, we restrict

our attention to the synchronous data transfer functions for

simplicity of description, but similar performance characteristics

also apply in part to the asynchronous ones. This

is because both use the same data transfer

method; the only difference is the timing of synchronization.
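
The five steps listed above, and the relation between the synchronous and asynchronous copies, can be sketched with the CUDA Driver API as follows. This is a minimal illustration under stated assumptions, not the modified open-source implementation used in this paper: the names `CHECK` and `roundtrip`, the fill pattern, and the choice of device 0 are hypothetical, and step (iii) is left as a comment because launching a kernel would require a compiled module. The copy functions appear under their Driver API spellings, cuMemcpyHtoD() and cuMemcpyDtoH().

```cuda
#include <cuda.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical helper: report a driver error and return -1 from the caller.
   For brevity it does not release resources on the error path. */
#define CHECK(call)                                              \
    do {                                                         \
        CUresult r_ = (call);                                    \
        if (r_ != CUDA_SUCCESS) {                                \
            fprintf(stderr, "%s failed (%d)\n", #call, (int)r_); \
            return -1;                                           \
        }                                                        \
    } while (0)

/* Round-trips `size` bytes (size > 0) through device memory.
   Returns 0 if the data come back intact, 1 on mismatch, -1 on error. */
int roundtrip(size_t size, int use_async)
{
    CUdevice dev;
    CUcontext ctx;
    CUstream stream;
    CUdeviceptr d_buf;
    int mismatch;
    unsigned char *h_in = (unsigned char *)malloc(size);
    unsigned char *h_out = (unsigned char *)malloc(size);

    if (h_in == NULL || h_out == NULL) {
        free(h_in);
        free(h_out);
        return -1;
    }
    memset(h_in, 0xA5, size);
    memset(h_out, 0, size);

    CHECK(cuInit(0));
    CHECK(cuDeviceGet(&dev, 0));                /* assumption: device 0 */
    CHECK(cuDevicePrimaryCtxRetain(&ctx, dev));
    CHECK(cuCtxSetCurrent(ctx));
    CHECK(cuStreamCreate(&stream, CU_STREAM_DEFAULT));

    CHECK(cuMemAlloc(&d_buf, size));                           /* (i)   */
    if (use_async) {
        /* Same copies, but the host waits only at the explicit sync. */
        CHECK(cuMemcpyHtoDAsync(d_buf, h_in, size, stream));   /* (ii)  */
        /* (iii) kernel launch omitted in this sketch */
        CHECK(cuMemcpyDtoHAsync(h_out, d_buf, size, stream));  /* (iv)  */
        CHECK(cuStreamSynchronize(stream));
    } else {
        /* Synchronous variant, as studied in this paper. */
        CHECK(cuMemcpyHtoD(d_buf, h_in, size));                /* (ii)  */
        /* (iii) kernel launch omitted in this sketch */
        CHECK(cuMemcpyDtoH(h_out, d_buf, size));               /* (iv)  */
    }
    CHECK(cuMemFree(d_buf));                                   /* (v)   */

    CHECK(cuStreamDestroy(stream));
    CHECK(cuDevicePrimaryCtxRelease(dev));

    mismatch = (memcmp(h_in, h_out, size) != 0);
    free(h_in);
    free(h_out);
    return mismatch;
}
```

Calling `roundtrip(1 << 20, 0)` exercises the synchronous path and `roundtrip(1 << 20, 1)` the asynchronous one; the two paths issue the same copies and differ only in where the host waits for completion.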

In order to focus on the performance of data transfers

between the host and the device memory, we allocate a data

buffer in pinned host memory rather than on the typical heap

allocated by malloc(). This pinned host memory space is

mapped to the PCIe address space and is never swapped out. It is

also accessible to the GPU directly.
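
As a minimal sketch of the buffer choice described above, the following code allocates the host buffer either with malloc() or as pinned memory with cuMemAllocHost(), and times one synchronous host-to-device copy from it. The names `CHECK` and `time_htod_us`, the use of device 0, and the POSIX clock_gettime() timer are assumptions made for illustration; this is not the measurement harness of this paper.

```cuda
#include <cuda.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>
#include <time.h>

/* Hypothetical helper: report a driver error and return -1.0 from the
   caller. For brevity it does not release resources on the error path. */
#define CHECK(call)                                              \
    do {                                                         \
        CUresult r_ = (call);                                    \
        if (r_ != CUDA_SUCCESS) {                                \
            fprintf(stderr, "%s failed (%d)\n", #call, (int)r_); \
            return -1.0;                                         \
        }                                                        \
    } while (0)

/* Times one host-to-device copy of `size` bytes (size > 0).
   pinned != 0: page-locked host buffer from cuMemAllocHost();
   pinned == 0: pageable host buffer from malloc().
   Returns elapsed microseconds, or a negative value on error. */
double time_htod_us(size_t size, int pinned)
{
    CUdevice dev;
    CUcontext ctx;
    CUdeviceptr d_buf;
    void *h_buf = NULL;
    struct timespec t0, t1;

    CHECK(cuInit(0));
    CHECK(cuDeviceGet(&dev, 0));                /* assumption: device 0 */
    CHECK(cuDevicePrimaryCtxRetain(&ctx, dev));
    CHECK(cuCtxSetCurrent(ctx));

    if (pinned) {
        CHECK(cuMemAllocHost(&h_buf, size));    /* never swapped out */
    } else {
        h_buf = malloc(size);                   /* ordinary pageable heap */
        if (h_buf == NULL)
            return -1.0;
    }
    memset(h_buf, 0x5A, size);
    CHECK(cuMemAlloc(&d_buf, size));

    clock_gettime(CLOCK_MONOTONIC, &t0);
    CHECK(cuMemcpyHtoD(d_buf, h_buf, size));
    CHECK(cuCtxSynchronize());   /* make sure any staged copy has finished */
    clock_gettime(CLOCK_MONOTONIC, &t1);

    CHECK(cuMemFree(d_buf));
    if (pinned) {
        CHECK(cuMemFreeHost(h_buf));
    } else {
        free(h_buf);
    }
    CHECK(cuDevicePrimaryCtxRelease(dev));

    return (double)(t1.tv_sec - t0.tv_sec) * 1e6 +
           (double)(t1.tv_nsec - t0.tv_nsec) / 1e3;
}
```

Comparing `time_htod_us(n, 1)` with `time_htod_us(n, 0)` for the same `n` gives a rough view of how the allocation choice affects a single copy; a single sample is noisy, so repeated runs would be needed before drawing any conclusion.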

Our computing platform contains a single CPU

and a single GPU. Although we restrict our attention to CUDA and

the GPU, the investigated data transfer methods are conceptually

applicable to other heterogeneous compute devices. GPUs

are currently a well-recognized form of heterogeneous

compute device, but emerging alternatives include the Intel

Many Integrated Core (MIC) and the AMD Fusion technology.

The programming models of these different platforms are

almost identical in that the CPU controls the compute devices.

Our future work includes an integrated investigation of these

different platforms.

Figure 2 shows a summarized block diagram of the target

system. The host computer consists of the CPU and the host

memory communicating on the system I/O bus. They are

connected to the PCIe bus to which the GPU board is also

connected. This means that the GPU is visible to the CPU

as a PCIe device. The GPU is a complex compute device

integrating many hardware functional units on a chip. This

paper focuses only on the CUDA-related units. The

device memory and the GPU chip are connected through a

high-bandwidth memory bus. The GPU chip contains graphics

processing clusters (GPCs), each of which integrates hundreds

of processing cores, a.k.a. CUDA cores. The number of GPCs

and CUDA cores is architecture-specific. For example, GPUs

based on the NVIDIA GeForce Fermi architecture [12] used

in this paper support at most 4 GPCs and 512 CUDA cores.

Each GPC is configured by an on-chip microcontroller. This

microcontroller is wimpy but is capable of executing firmware

code with its own instruction set. There is also a special

hub microcontroller, which broadcasts the operations to all

the GPC-dedicated microcontrollers. In addition to hardware
