LLM Prerequisites: Attention Is All You Need

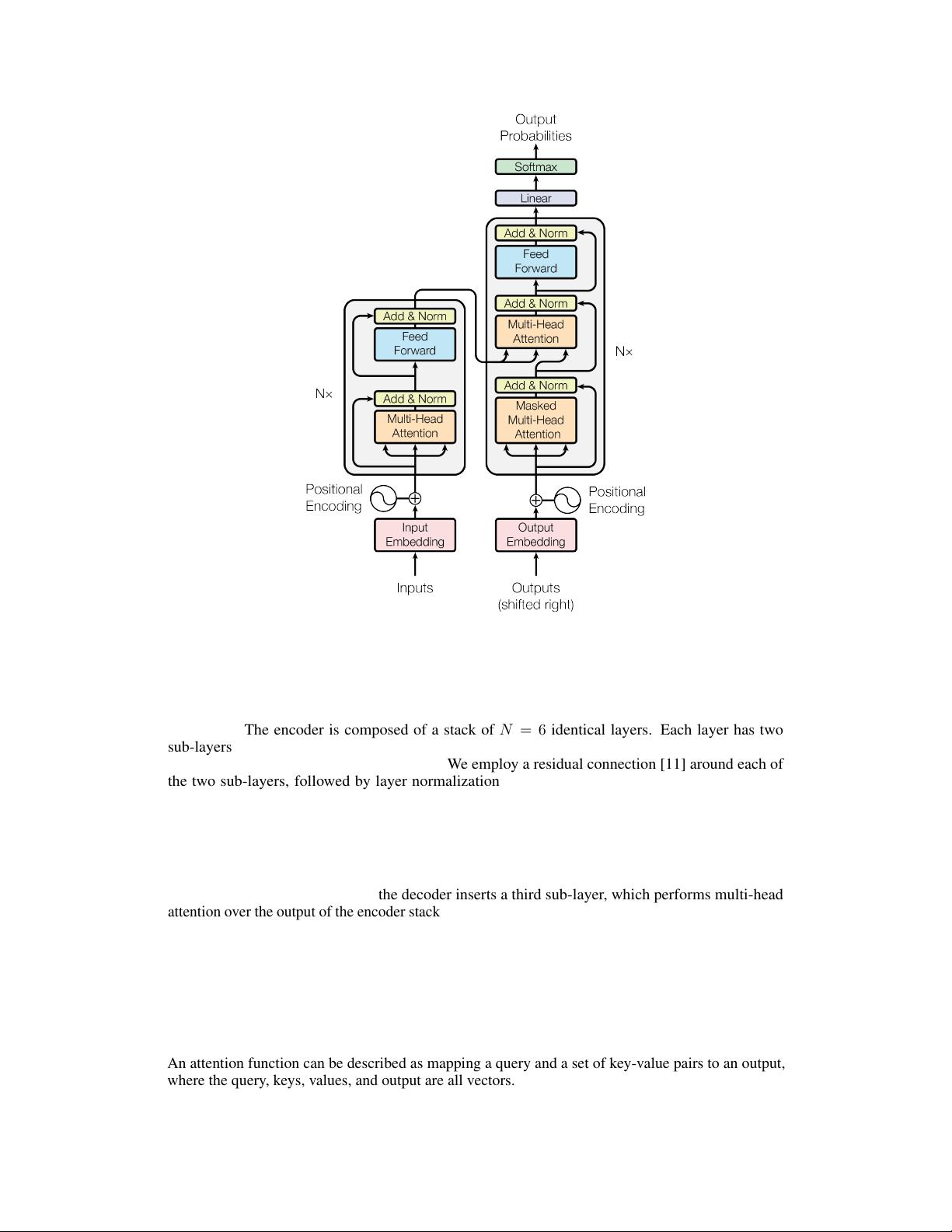

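Design Rationale and Advantages of the Transformer Architecture

This article introduces the design rationale and advantages of the Transformer architecture. The Transformer is a sequence transduction model built on self-attention. It dispenses with the recurrent neural networks (RNNs) and convolutional neural networks (CNNs) used by earlier models, and it demonstrated superior results on machine translation.

I. Design rationale of the Transformer architecture

Earlier sequence transduction models were built on RNNs or CNNs, and these have drawbacks: computation is costly and hard to parallelize, because a recurrent network has to process a sequence one position at a time. The Transformer is instead built on self-attention. It drops recurrence and convolution and uses self-attention to model the dependencies between positions in a sequence.

The architecture consists of two parts, an encoder and a decoder. The encoder maps the input sequence to a sequence of continuous representations, and the decoder turns those representations into the output sequence. Self-attention is the core component of the architecture and is what models the dependencies within a sequence.

II. Advantages of the Transformer architecture

The Transformer architecture has several advantages:

1. Strong parallelism. All positions in a layer are computed at once, so training parallelizes well and is more efficient. At inference time the encoder is equally parallel, but the decoder is autoregressive and still generates output tokens one at a time.
2. Low computational cost. Dropping recurrence and convolution reduces the per-layer cost: a self-attention layer costs O(n²·d) for sequence length n and representation dimension d, which is cheaper than a recurrent layer's O(n·d²) whenever n is smaller than d.
3. Long sequences. Self-attention connects any two positions directly, so it can model dependencies across a long sequence.
4. Parallel processing of multiple inputs. Multiple translation inputs can be processed in parallel, which improves throughput.

III. The Transformer architecture on machine translation

The Transformer performs very well on machine translation. On the WMT 2014 English-to-German task it reaches a BLEU score of 28.4, surpassing the previous best results. On the WMT 2014 English-to-French task it reaches a BLEU score of 41.8, a new single-model state of the art.

IV. Generalization of the Transformer architecture

The Transformer is not limited to machine translation. It also applies to other natural language processing tasks such as English constituency parsing, where it achieves good results, which demonstrates that it generalizes.

In summary, the Transformer is a sequence transduction model built on self-attention. Its advantages include strong parallelism, low computational cost, and the ability to handle long sequences, and it applies to a range of natural language processing tasks.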
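The summary above describes self-attention only in words. As a rough illustration, the sketch below computes scaled dot-product attention, softmax(QKᵀ / √d_k)·V, which is the form used in the original paper. It is a minimal sketch under simplifying assumptions, not the paper's implementation: it uses plain Python lists, a single head, no masking, and it feeds the same toy vectors in as queries, keys, and values instead of applying learned projections. The function names `softmax` and `scaled_dot_product_attention` and the input `x` are made up for this example.

```python
import math


def softmax(row):
    """Numerically stable softmax over one list of scores."""
    m = max(row)
    exps = [math.exp(s - m) for s in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Return (outputs, weights) for softmax(Q K^T / sqrt(d_k)) V.

    queries: n_q vectors of length d_k
    keys:    n_k vectors of length d_k
    values:  n_k vectors of length d_v
    """
    d_k = len(keys[0])
    scale = 1.0 / math.sqrt(d_k)
    outputs, weights = [], []
    # Each query is handled independently of the others, which is why
    # all positions of a layer can be computed in parallel.
    for q in queries:
        # Similarity of this query to every key, scaled by 1/sqrt(d_k).
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in keys]
        w = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        out = [
            sum(wj * v[c] for wj, v in zip(w, values))
            for c in range(len(values[0]))
        ]
        weights.append(w)
        outputs.append(out)
    return outputs, weights


# Toy "sequence" of three 2-dimensional token vectors (illustrative only).
# Assumption: Q = K = V = x, i.e. the learned projections are omitted.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = scaled_dot_product_attention(x, x, x)

for position, row in enumerate(weights):
    print(position, [round(w, 3) for w in row])
```

Each row of `weights` sums to 1 and says how strongly one position attends to every other position, regardless of how far apart they are. That direct connection between any two positions is what advantage 3 refers to, and the n × n weight matrix is where the O(n²·d) cost comes from.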
