42 IEEE JOURNAL ON SELECTED AREAS IN COMMUNICATIONS, VOL. 22, NO. 1, JANUARY 2004

MojoNation, and Freenet. Napster uses central directory

servers to locate files. Gnutella provides a similar, but dis-

tributed service using scoped broadcast queries, limiting

scalability. MojoNation [12] uses an online economic model to

encourage sharing of resources. Freenet [13] is a file-sharing

network designed to resist censorship. Neither Gnutella nor

Freenet guarantee that files can be located—even in a func-

tioning network.

The second generation of P2P systems are structured P2P

overlay networks, including Tapestry [1], [2], Chord [8], Pastry

[7], and CAN [6]. These overlays implement a basic key-based

routing (KBR) interface, that supports deterministic routing of

messages to a live node that has responsibility for the destina-

tion key. They can also support higher level interfaces such as

a distributed hash table (DHT) or a DOLR layer [3]. These sys-

tems scale well and guarantee that queries find existing objects

under nonfailure conditions.

One differentiating property between these systems is that

neither CAN nor Chord take network distances into account

when constructing their routing overlay; thus, a given overlay

hop may span the diameter of the network. Both protocols route

on the shortest overlay hops available and use runtime heuris-

tics to assist. In contrast, Tapestry and Pastry construct locally

optimal routing tables from initialization and maintain them in

order to reduce routing stretch.

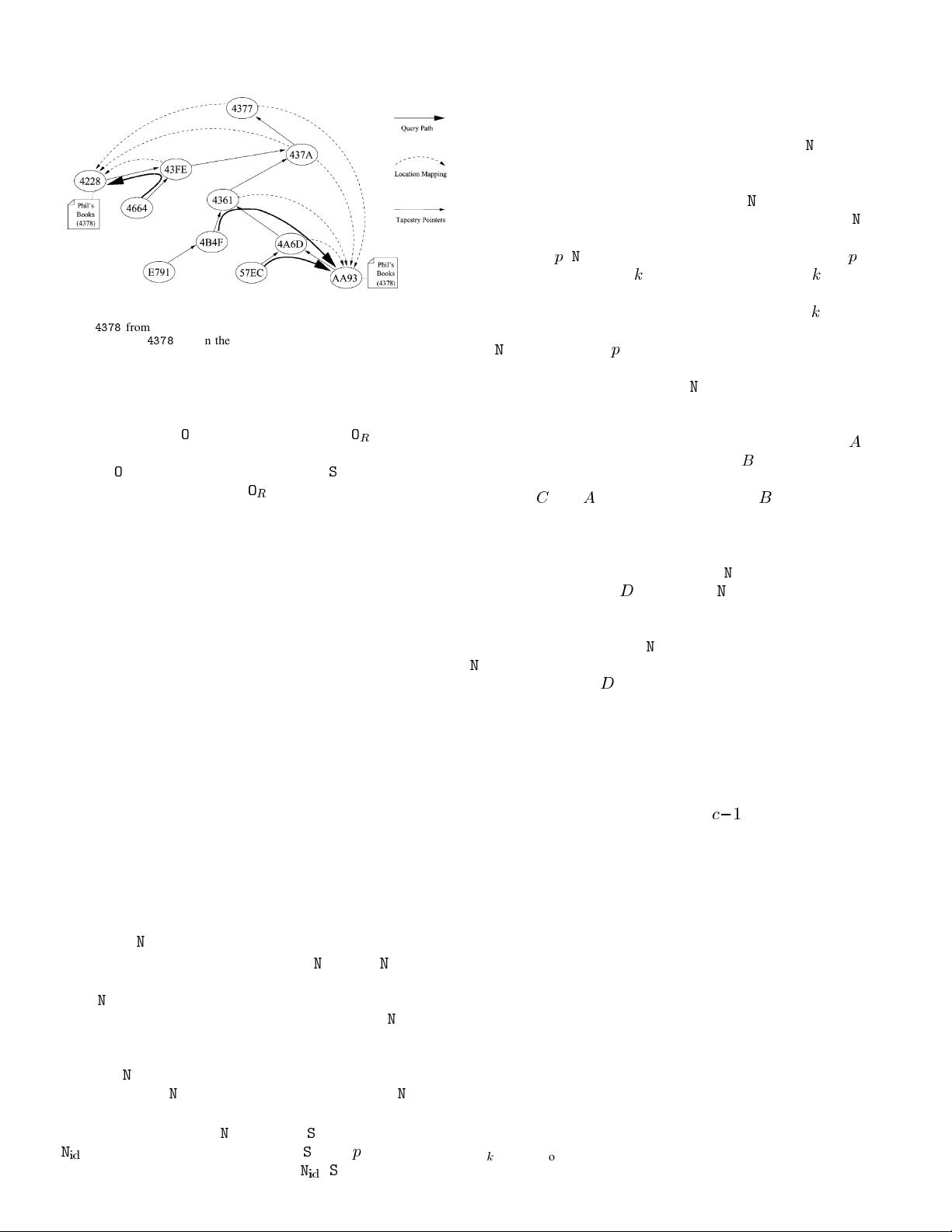

While some systems fix the number and location of object

replicas by providing a DHT interface, Tapestry allows ap-

plications to place objects according to their needs. Tapestry

“publishes” location pointers throughout the network to facili-

tate efficient routing to those objects with low network stretch.

This technique makes Tapestry locality-aware [14]: queries for

nearby objects are generally satisfied in time proportional to the

distance between the query source and a nearby object replica.

Both Pastry and Tapestry share similarities to the work

of Plaxton

et al. [15] for a static network. Others [16], [17]

explore distributed object location schemes with provably

low search overhead, but they require precomputation, and so

are not suitable for dynamic networks. Recent works include

systems such as Kademlia [9], which uses XOR for overlay

routing, and Viceroy [10], which provides logarithmic hops

through nodes with constant degree routing tables. SkipNet

[11] uses a multidimensional skip-list data structure to support

overlay routing, maintaining both a DNS-based namespace for

operational locality and a randomized namespace for network

locality. Other overlay proposals [18], [19] attain lower bounds

on local routing state. Finally, proposals such as Brocade [20]

differentiate between local and interdomain routing to reduce

wide-area traffic and routing latency.

A new generation of applications have been proposed on top

of these P2P systems, validating them as novel application in-

frastructures. Several systems have application level multicast:

CAN-MC [21] (CAN), Scribe [22] (Pastry), and Bayeux [5]

(Tapestry). In addition, several decentralized file systems have

been proposed: CFS [23] (Chord), Mnemosyne [24] (Chord,

Tapestry), OceanStore [4] (Tapestry), and PAST [25] (Pastry).

Structured P2P overlays also support novel applications (e.g.,

attack resistant networks [26], network indirection layers [27],

and similarity searching [28]).

III. T

APESTRY

ALGORITHMS

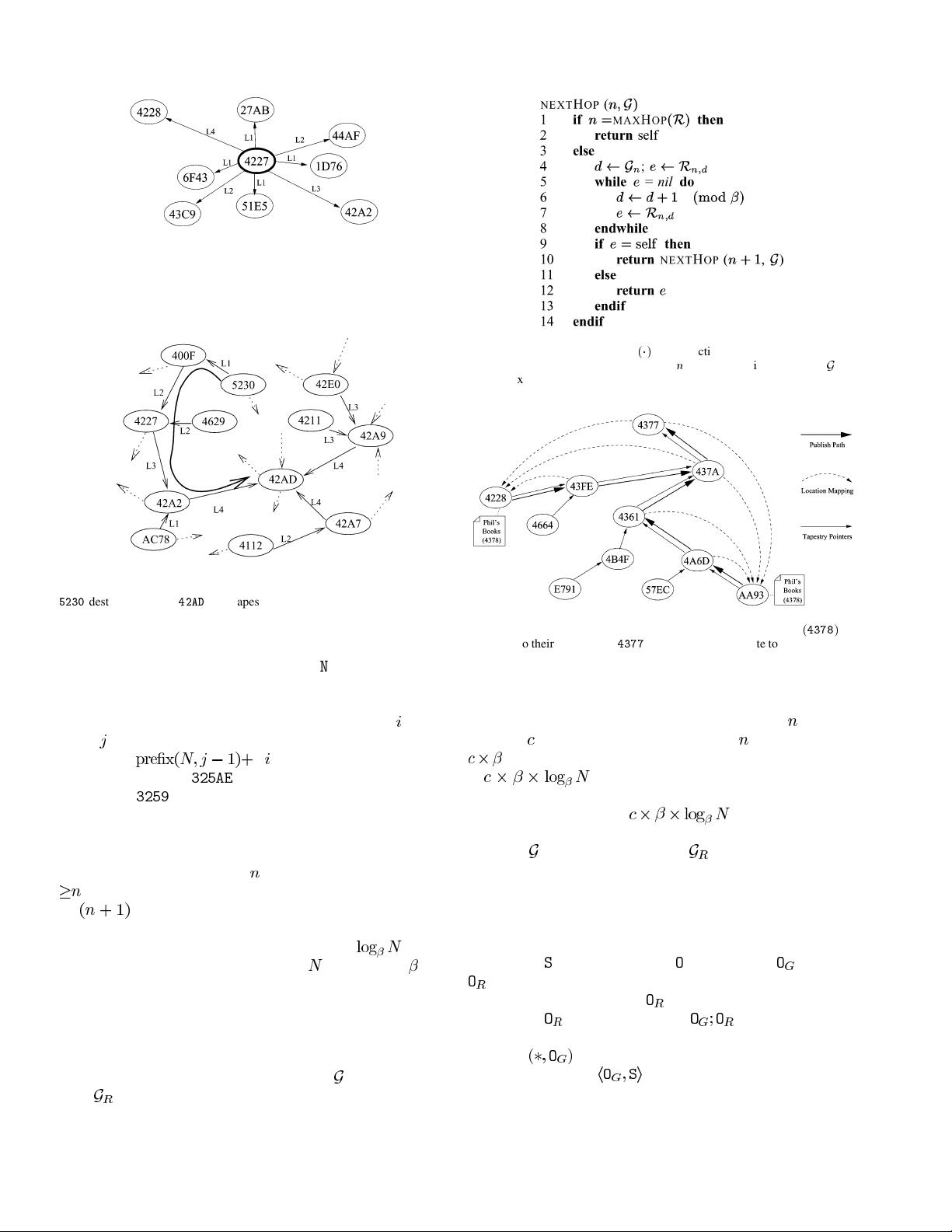

This section details Tapestry’s algorithms for routing and ob-

ject location and describes how network integrity is maintained

under dynamic network conditions.

A. DOLR Networking API

Tapestry provides a datagram-like communications interface,

with additional mechanisms for manipulating the locations of

objects. Before describing the API, we start with a couple of

definitions.

Tapestry nodes participate in the overlay and are assigned

nodeIDs uniformly at random from a large identifier space.

More than one node may be hosted by one physical host.

Application-specific endpoints are assigned globally unique

identifiers (GUIDs), selected from the same identifier space.

Tapestry currently uses an identifier space of 160-bit values

with a globally defined radix (e.g., hexadecimal, yielding

40-digit identifiers). Tapestry assumes nodeIDs and GUIDs

are roughly evenly distributed in the namespace, which can

be achieved by using a secure hashing algorithm like SHA-1

[29]. We say that node

has nodeID , and an object has

GUID

.

Since the efficiency of Tapestry generally improves with net-

work size, it is advantageous for multiple applications to share a

single large Tapestry overlay network. To enable application co-

existence, every message contains an application-specific iden-

tifier

, which is used to select a process, or application for

message delivery at the destination [similar to the role of a port

in transmission control protocol/Internet protocol (TCP/IP)], or

an upcall handler where appropriate.

Given the above definitions, we state the four-part DOLR net-

working API as follows.

1) P

UBLISHOBJECT

: Publish, or make available, ob-

ject

on the local node. This call is best effort, and re-

ceives no confirmation.

2) U

NPUBLISHOBJECT

: Best-effort attempt to

remove location mappings for

.

3) R

OUTETOOBJECT

: Routes message to location

of an object with GUID

.

4) R

OUTETONODE( , Exact): Route message to applica-

tion

on node . “Exact” specifies whether destination

ID needs to be matched exactly to deliver payload.

B. Routing and Object Location

Tapestry dynamically maps each identifier

to a unique live

node called the identifier’s root or

. If a node exists with

, then this node is the root of . To deliver messages,

each node maintains a routing table consisting of nodeIDs and

IP addresses of the nodes with which it communicates. We refer

to these nodes as neighbors of the local node. When routing

toward

, messages are forwarded across neighbor links to

nodes whose nodeIDs are progressively closer (i.e., matching

larger prefixes) to

in the ID space.

1) Routing Mesh: Tapestry uses local tables at each node,

called neighbor maps, to route overlay messages to the des-

tination ID digit by digit (e.g.,

, where ’s represent wildcards). This approach is similar

21840300DHT.zip (17个子文件)

21840300DHT.zip (17个子文件)  Distributed_hash_table.htm 49KB

Distributed_hash_table.htm 49KB Tapestry_(DHT).htm 33KB

Tapestry_(DHT).htm 33KB Tapestry__ A Resilient Global-Scale Overlay for Service Deployment JSAC03.pdf 422KB

Tapestry__ A Resilient Global-Scale Overlay for Service Deployment JSAC03.pdf 422KB www.pudn.com.txt 218B

www.pudn.com.txt 218B Distributed_hash_table_files

Distributed_hash_table_files  wikimedia-button.png 890B

wikimedia-button.png 890B index.php 11B

index.php 11B wikibits.js 40KB

wikibits.js 40KB injection_graph_func.js 15KB

injection_graph_func.js 15KB ajax.js 4KB

ajax.js 4KB poweredby_mediawiki_88x31.png 2KB

poweredby_mediawiki_88x31.png 2KB people-meter-ltr.png 9KB

people-meter-ltr.png 9KB index_004.php 52KB

index_004.php 52KB meter-en.png 2KB

meter-en.png 2KB index_005.php 11KB

index_005.php 11KB index_002.php 34KB

index_002.php 34KB commonPrint.css 5KB

commonPrint.css 5KB index_003.php 193B

index_003.php 193B

我的内容管理

展开

我的内容管理

展开

我的资源

快来上传第一个资源

我的资源

快来上传第一个资源

我的收益 登录查看自己的收益

我的收益 登录查看自己的收益 我的积分

登录查看自己的积分

我的积分

登录查看自己的积分

我的C币

登录后查看C币余额

我的C币

登录后查看C币余额

我的收藏

我的收藏  我的下载

我的下载  下载帮助

下载帮助

前往需求广场,查看用户热搜

前往需求广场,查看用户热搜

信息提交成功

信息提交成功

- 1

- 2

前往页