186 C. Rascon, I. Meza / Robotics and Autonomous Systems 96 (2017) 184–210

means that there are no objects between the sources and the microphones, nor between the microphones themselves. In addition, it is assumed that there are no reflections from the environment (i.e., no reverberation).

• Far field: The distance from the sound source to the microphone array is large enough, relative to the inter-microphone distance, that the sound wave can be considered planar.

The second assumption greatly simplifies the mapping procedure between feature and location, as discussed in Section 4.1.
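To make the far-field simplification concrete, the following is a minimal sketch of the feature-to-location mapping for a single microphone pair. It assumes the standard plane-wave relation $\tau = d\cos\theta / c$, with $\theta$ measured from the line joining the two microphones, $d$ the inter-microphone distance, and an assumed speed of sound $c$ of 343 m/s. The function names are hypothetical, and this is an illustration rather than the procedure of Section 4.1.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s; assumed value for air at about 20 °C


def far_field_tdoa(theta_rad: float, mic_distance: float, c: float = SPEED_OF_SOUND) -> float:
    """TDOA (s) of a planar wave arriving at angle theta from the microphone-pair axis."""
    return mic_distance * math.cos(theta_rad) / c


def far_field_doa(tdoa: float, mic_distance: float, c: float = SPEED_OF_SOUND) -> float:
    """Invert the far-field model; returns an angle in [0, pi] from the pair axis."""
    ratio = c * tdoa / mic_distance
    # Noisy TDOA estimates can push |ratio| slightly past 1; clamp so acos is defined.
    ratio = max(-1.0, min(1.0, ratio))
    return math.acos(ratio)


if __name__ == "__main__":
    d = 0.2  # hypothetical 20 cm inter-microphone distance
    tau = far_field_tdoa(math.radians(60.0), d)
    print(f"TDOA: {tau * 1e6:.1f} us")
    print(f"recovered angle: {math.degrees(far_field_doa(tau, d)):.1f} deg")
```

A single pair only yields an angle in [0°, 180°]: sources mirrored about the array axis produce the same TDOA, which is one reason larger arrays are used.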

There are other types of propagation models that are relevant to SSL in robotics. The Woodworth–Schlosberg spherical head model [43, pp. 349–361] has been used extensively in binaural arrays placed on robotic heads [23,44] and is explained in Section 4.2. The near-field model [45] assumes that the user can be close to the microphone array, which requires the sound wave to be considered circular rather than planar. A few robotic applications use the near-field model, such as [46]; however, it is not as commonly used as the far-field model. In fact, some approaches successfully use a modified far-field model in near-field circumstances [47], while others modify the methodology design to consider the near-field case [48]. Nevertheless, as presented in [48], a far-field model used directly in near-field circumstances can decrease SSL performance considerably. In addition, there are cases in which the propagation model is learned: by neural networks [49,50], by manifold learning [33,51], by linear regression [52], or as part of a multi-modal fusion [11,21].
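The performance loss of a far-field model applied in near-field circumstances can be illustrated numerically. The sketch below computes the exact TDOA of a point source whose wavefront is circular in the plane, then inverts it with the far-field relation. The two-microphone geometry, the source ranges, the speed of sound, and the function names are all assumptions chosen for illustration.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s; assumed


def near_field_tdoa(theta_rad, source_range, mic_distance, c=SPEED_OF_SOUND):
    """Exact TDOA (s) for a point source whose wavefront is circular in the plane.

    Microphone 1 sits at (+d/2, 0) and microphone 2 at (-d/2, 0); the source is
    at `source_range` metres from the array centre, at angle theta from the
    array axis. Positive TDOA means the sound reaches microphone 2 later.
    """
    sx = source_range * math.cos(theta_rad)
    sy = source_range * math.sin(theta_rad)
    half = mic_distance / 2.0
    dist_mic1 = math.hypot(sx - half, sy)
    dist_mic2 = math.hypot(sx + half, sy)
    return (dist_mic2 - dist_mic1) / c


def far_field_doa(tdoa, mic_distance, c=SPEED_OF_SOUND):
    """Planar-wave inversion: angle in [0, pi] from the array axis."""
    ratio = max(-1.0, min(1.0, c * tdoa / mic_distance))
    return math.acos(ratio)


if __name__ == "__main__":
    d = 0.2          # hypothetical inter-microphone distance (m)
    true_deg = 45.0
    for r in (0.3, 1.0, 3.0, 10.0):  # hypothetical source ranges (m)
        tau = near_field_tdoa(math.radians(true_deg), r, d)
        est_deg = math.degrees(far_field_doa(tau, d))
        print(f"range {r:4.1f} m: far-field estimate {est_deg:6.2f} deg (true {true_deg} deg)")
```

With these assumed values the far-field estimate is off by more than a degree at 0.3 m, while the error becomes negligible at 10 m, where the source range is large compared with the inter-microphone distance.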

3.2. Features

There are several acoustic features used throughout the reviewed methodologies. In this section, we provide a brief overview of the most popular ones:

Time difference of arrival (TDOA). It is the time difference between two captured signals. In 2-microphone arrays (binaural arrays) that use external pinnae, this feature is also sometimes called the inter-aural time difference (ITD). There are several ways of calculating it, such as measuring the time difference between the zero-level crossings of the signals [18] or between the onset times calculated from each signal [6,7,14,17].
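The onset-based variant can be sketched as follows. The onset detector here is deliberately simple and is an assumption for illustration: the onset is taken as the first sample whose magnitude reaches a fixed fraction of the signal peak, and the TDOA is the difference between the two onset indices divided by the sample rate. The function names and the threshold value are hypothetical.

```python
import math


def onset_index(signal, threshold_ratio=0.5):
    """Index of the first sample whose magnitude reaches threshold_ratio * peak.

    A deliberately simple onset detector, assumed for illustration; practical
    systems typically use energy envelopes or spectral-flux detectors.
    """
    if not 0.0 < threshold_ratio <= 1.0:
        raise ValueError("threshold_ratio must be in (0, 1]")
    peak = max(abs(x) for x in signal)
    if peak == 0.0:
        raise ValueError("silent signal: no onset to detect")
    threshold = threshold_ratio * peak
    # next() always finds a sample, because the peak itself meets the threshold.
    return next(i for i, x in enumerate(signal) if abs(x) >= threshold)


def onset_tdoa(sig_a, sig_b, sample_rate, threshold_ratio=0.5):
    """TDOA (s) from onset times; positive when the sound reaches mic b later."""
    delay_samples = onset_index(sig_b, threshold_ratio) - onset_index(sig_a, threshold_ratio)
    return delay_samples / sample_rate


if __name__ == "__main__":
    fs = 16000  # hypothetical sample rate (Hz)
    burst = [math.sin(2 * math.pi * 500 * n / fs) for n in range(200)]
    mic_a = [0.0] * 100 + burst + [0.0] * 50
    mic_b = [0.0] * 108 + burst + [0.0] * 42  # same burst, 8 samples later
    print(onset_tdoa(mic_a, mic_b, fs))  # 8 samples / 16000 Hz = 0.0005 s
```

The resolution of this approach is limited to one sample period unless the onset times are interpolated.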

Another way to calculate the TDOA is by assuming that the sound source signal is narrowband. Let us denote the phase difference of two signals at frequency $f$ as $\Delta\phi_f$. If $f_m$ is the frequency with the highest energy, the TDOA for narrowband signals (which, for a narrowband signal, carries the same information as the inter-microphone phase difference, or IPD) can be obtained as $\Delta\phi_{f_m} / (2\pi f_m)$ [23]. However, as of this writing, the most popular way of calculating the TDOA is based on cross-correlation techniques, which are explained in detail in Section 4.1.
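The narrowband formula can be sketched as follows. This example takes $f_m$ to be the DFT bin with the largest combined energy in both channels, defines $\Delta\phi_{f_m}$ as the phase of channel a minus that of channel b (a sign convention assumed here), and uses a naive DFT so that it has no dependencies. The result is only unambiguous while $|\Delta\phi_{f_m}| < \pi$, i.e., while the TDOA magnitude stays below $1/(2 f_m)$. The function names are hypothetical.

```python
import cmath
import math


def dft(x):
    """Naive O(N^2) discrete Fourier transform; adequate for short illustrative frames."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)) for k in range(n)]


def narrowband_tdoa(sig_a, sig_b, sample_rate):
    """TDOA (s) from the phase difference at the highest-energy frequency f_m.

    Positive result: the wave reaches microphone b later than microphone a.
    Only unambiguous while |2*pi*f_m*TDOA| < pi; beyond that the phase wraps.
    """
    n = len(sig_a)
    spec_a, spec_b = dft(sig_a), dft(sig_b)
    # Pick f_m among positive-frequency bins (DC and Nyquist excluded).
    k_m = max(range(1, n // 2), key=lambda k: abs(spec_a[k]) ** 2 + abs(spec_b[k]) ** 2)
    f_m = k_m * sample_rate / n
    # Delta-phi = phase(a) - phase(b), wrapped to [-pi, pi]; a delay at b lowers b's phase.
    delta_phi = cmath.phase(spec_a[k_m] * spec_b[k_m].conjugate())
    return delta_phi / (2 * math.pi * f_m)


if __name__ == "__main__":
    fs, f, tau = 16000, 1000.0, 0.0002  # hypothetical rate (Hz), tone (Hz), delay (s)
    a = [math.sin(2 * math.pi * f * t / fs) for t in range(64)]
    b = [math.sin(2 * math.pi * f * (t / fs - tau)) for t in range(64)]
    print(narrowband_tdoa(a, b, fs))  # close to 0.0002
```

Unlike the onset-based sketch, this estimate is not limited to whole samples, but it relies on the signal really being dominated by a single frequency.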

Inter-microphone intensity difference (IID). It is the difference in energy between two signals at a given time. When extracted from time-domain signals, this feature can be useful to determine whether the source is to the right, to the left, or in front of a 2-microphone array. To provide greater resolution, either an array with more microphones is required [53] or a learning-based mapping procedure can be used [10]. The frequency-domain version of the IID is the inter-microphone level difference (ILD), which is provided as the difference spectrum between the two captured signals after a short-time frequency transform. This feature is also often used in conjunction with a learning-based mapping procedure [35].
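Both features can be sketched for a single frame. In this sketch the IID is the frame-energy ratio in dB, so its sign indicates which microphone receives more energy, and the ILD is the per-bin log-magnitude difference. A naive, unwindowed DFT stands in for one frame of the short-time transform, and expressing both quantities in dB is an assumed convention; the function names are hypothetical.

```python
import cmath
import math

EPS = 1e-12  # guards against log(0) for silent frames or empty bins


def iid_db(frame_a, frame_b):
    """Time-domain IID: frame energy ratio in dB (positive when mic a is louder)."""
    energy_a = sum(x * x for x in frame_a)
    energy_b = sum(x * x for x in frame_b)
    return 10.0 * math.log10((energy_a + EPS) / (energy_b + EPS))


def dft(x):
    """Naive O(N^2) DFT, standing in for one frame of a short-time transform."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)) for k in range(n)]


def ild_db(frame_a, frame_b):
    """Frequency-domain ILD: per-bin log-magnitude difference for bins 0..N/2."""
    spec_a, spec_b = dft(frame_a), dft(frame_b)
    num_bins = len(frame_a) // 2 + 1
    return [20.0 * math.log10((abs(spec_a[k]) + EPS) / (abs(spec_b[k]) + EPS))
            for k in range(num_bins)]


if __name__ == "__main__":
    a = [math.sin(2 * math.pi * 4 * t / 64) for t in range(64)]  # tone in bin 4
    b = [0.5 * x for x in a]  # hypothetical: same tone, half the amplitude at mic b
    print(round(iid_db(a, b), 2), round(ild_db(a, b)[4], 2))  # 6.02 6.02
```

The IID is a single number per frame, whereas the ILD yields N/2 + 1 values, which is why the latter is usually paired with a learned mapping.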

A feature similar to the ILD is the set of differences between the outputs of a set of filters spaced logarithmically in the frequency domain (known as a filter bank). This feature set has shown more robustness against noise than the IID [9], while employing a feature vector with fewer dimensions than the ILD.
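The filter-bank feature can be sketched under a strong simplifying assumption: each filter is approximated by summing DFT power between two logarithmically spaced band edges, instead of using shaped, overlapping filters. The band limits, the number of bands, and the function names are hypothetical. With eight bands the feature vector has 8 entries, compared with N/2 + 1 for a per-bin ILD.

```python
import cmath
import math

EPS = 1e-12  # guards against log(0) in bands with no energy


def dft(x):
    """Naive O(N^2) DFT for a single frame."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)) for k in range(n)]


def log_band_edges(f_low, f_high, num_bands):
    """num_bands + 1 edges spaced geometrically (logarithmically) from f_low to f_high."""
    ratio = (f_high / f_low) ** (1.0 / num_bands)
    return [f_low * ratio ** i for i in range(num_bands + 1)]


def band_level_differences(frame_a, frame_b, sample_rate,
                           f_low=100.0, f_high=4000.0, num_bands=8):
    """Per-band level difference (dB) between two microphones.

    Each filter is approximated by summing DFT power between two band edges
    (an assumption; real filter banks use overlapping, shaped filters).
    Bands that contain no DFT bin yield 0 dB.
    """
    n = len(frame_a)
    spec_a, spec_b = dft(frame_a), dft(frame_b)
    edges = log_band_edges(f_low, f_high, num_bands)
    diffs = []
    for lo, hi in zip(edges[:-1], edges[1:]):
        bins = [k for k in range(n // 2 + 1) if lo <= k * sample_rate / n < hi]
        power_a = sum(abs(spec_a[k]) ** 2 for k in bins)
        power_b = sum(abs(spec_b[k]) ** 2 for k in bins)
        diffs.append(10.0 * math.log10((power_a + EPS) / (power_b + EPS)))
    return diffs
```

Summing power over a band averages out bin-level fluctuations, which is one plausible reason such features cope better with noise than a raw per-bin difference.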

In [54], the ILD is calculated in the overtone domain. A frequency $f_o$ is an overtone of another frequency $f$ when $f_o = rf$ (with $r \in \{2, 3, 4, \ldots\}$) and their magnitudes are highly correlated through time. This approach has the potential of being more robust against interference, since the correlation between the frequencies implies that they belong to the same source.
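The two conditions in the overtone definition (an integer ratio $r \geq 2$ and highly correlated magnitudes through time) can be checked as sketched below. The sketch assumes the overtone falls exactly on an integer multiple of the base bin, whereas real spectra would need a tolerance. The spectrogram layout, the correlation threshold, and the function names are hypothetical.

```python
import math


def pearson(x, y):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    norm_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    norm_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    if norm_x == 0.0 or norm_y == 0.0:
        return 0.0
    return cov / (norm_x * norm_y)


def is_overtone(spectrogram, base_bin, cand_bin, min_corr=0.9):
    """True if cand_bin is an integer multiple r >= 2 of base_bin and the two
    magnitude tracks are highly correlated through time.

    spectrogram[t][k] holds the magnitude of bin k in frame t (a hypothetical
    layout); min_corr is an arbitrary illustrative threshold.
    """
    if base_bin <= 0 or cand_bin % base_bin != 0 or cand_bin // base_bin < 2:
        return False
    base_track = [frame[base_bin] for frame in spectrogram]
    cand_track = [frame[cand_bin] for frame in spectrogram]
    return pearson(base_track, cand_track) >= min_corr
```

A bin at an integer multiple whose magnitude evolves independently (for example, because it belongs to an interfering source) fails the correlation test and would not be grouped with the base frequency.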

Spectral notches. When using external pinnae¹ or inner-ear canals, there is a slight asymmetry between the microphone signals. Because of this, the result of their subtraction presents reductions or amplifications at certain frequencies that depend on the direction of the sound source. These notches can be mapped against the direction of the sound source by experimentation [52]. However, because small changes to the external pinnae may degrade mappings obtained from these observations, it is advisable to use learning-based mapping with these features [49].

Binaural/spectral cues. This is a popular term for the feature set composed of the IPD and the ILD together. This feature set is often used with learning-based mapping [50,51], and it is often extracted at sound onsets to reduce the effect of reverberation [55]. It has been shown in practice that temporal smoothing of this feature set makes the resulting mapping more robust against moderate reverberation [56].
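One simple form of temporal smoothing is sketched below. Instead of computing the IPD and ILD per frame, the cross-power and auto-power spectra are averaged over all given frames before the cues are extracted. This particular smoothing scheme, the input layout, and the function name are assumptions for illustration, not necessarily what the cited works use. The IPD sign convention (phase of a minus phase of b) is also assumed.

```python
import cmath
import math

EPS = 1e-12  # guards against log(0) in silent bins


def smoothed_binaural_cues(stft_a, stft_b):
    """Temporally smoothed (IPD in rad, ILD in dB) pairs, one per frequency bin.

    stft_a[t][k] and stft_b[t][k] are complex STFT coefficients of frame t, bin k
    (a hypothetical input layout). Smoothing here means averaging the cross- and
    auto-power spectra over all frames before extracting the cues.
    """
    num_frames, num_bins = len(stft_a), len(stft_a[0])
    cues = []
    for k in range(num_bins):
        cross = sum(stft_a[t][k] * stft_b[t][k].conjugate() for t in range(num_frames)) / num_frames
        power_a = sum(abs(stft_a[t][k]) ** 2 for t in range(num_frames)) / num_frames
        power_b = sum(abs(stft_b[t][k]) ** 2 for t in range(num_frames)) / num_frames
        # Averaging complex cross-spectra (not raw phases) avoids phase-wrapping artefacts.
        ipd = cmath.phase(cross)
        ild = 10.0 * math.log10((power_a + EPS) / (power_b + EPS))
        cues.append((ipd, ild))
    return cues
```

Passing in only the frames that follow a detected onset would combine this smoothing with the onset-based extraction mentioned above.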

Besides these features, there are others that are also widely used, such as the MUSIC pseudo-spectrum and the beamformer steered response. However, their application is bound to specific end-to-end methodologies, so they are explained in detail in Section 4.

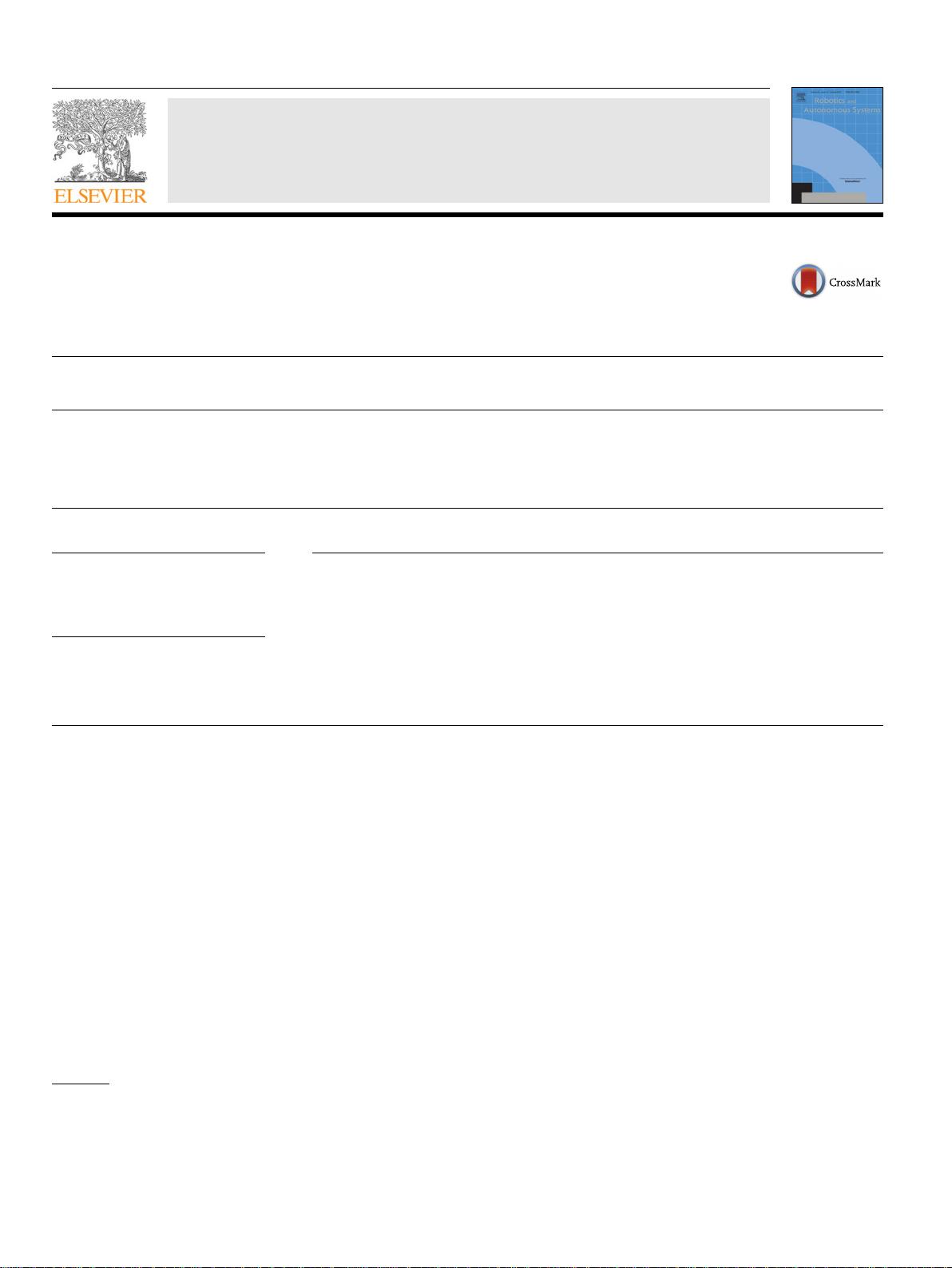

3.3. Mapping procedures

A mapping procedure for SSL is expected to map a given extracted feature to a location. A typical way to carry this out is to apply the propagation model directly, such as the free-field/far-field model or the Woodworth–Schlosberg spherical head model, both discussed in Section 3.1. However, some types of features (especially those used for multiple-source-location estimation) require an exploration or optimization of the SSL solution space. A common approach is to carry out a grid search, in which a mapping function is applied throughout the SSL space and the function output is recorded for each tested sound source location. This produces a solution spectrum in which peaks (or local maxima) are regarded as the SSL solutions. This is the most used type of mapping procedure for multiple-source-location estimation. Two important examples are the subspace-orthogonality feature of MUSIC and the steered response of a delay-and-sum beamformer, which are detailed further in Section 4.3.
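A grid search with a delay-and-sum steered response can be sketched as follows. For every candidate far-field angle on a 1° grid, each microphone spectrum is phase-shifted to cancel the lead that a plane wave from that angle would produce, the shifted spectra are summed, and the summed power is recorded; local maxima of the resulting solution spectrum are reported as source directions. The linear array geometry, the synthetic test signal, the peak threshold, and the function names are hypothetical.

```python
import cmath
import math

SPEED_OF_SOUND = 343.0  # m/s; assumed


def dft(x):
    """Naive O(N^2) DFT; adequate for a short illustrative frame."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)) for k in range(n)]


def steered_response(signals, mic_positions, sample_rate, angles_deg, c=SPEED_OF_SOUND):
    """Delay-and-sum steered-response power for each far-field angle in the grid.

    signals[m] holds the samples of microphone m and mic_positions[m] its
    coordinate (m) along a linear array axis; angles are measured from that axis.
    """
    n = len(signals[0])
    spectra = [dft(s) for s in signals]
    response = []
    for angle in angles_deg:
        cos_a = math.cos(math.radians(angle))
        power = 0.0
        for k in range(1, n // 2):  # positive frequencies, DC excluded
            f = k * sample_rate / n
            # Cancel the phase lead a plane wave from `angle` would give mic m, then sum.
            beam = sum(spec[k] * cmath.exp(-2j * math.pi * f * x * cos_a / c)
                       for spec, x in zip(spectra, mic_positions))
            power += abs(beam) ** 2
        response.append(power)
    return response


def local_maxima(values, min_ratio=0.5):
    """Interior grid indices that are local maxima and reach min_ratio * global max."""
    top = max(values)
    return [i for i in range(1, len(values) - 1)
            if values[i] > values[i - 1] and values[i] >= values[i + 1]
            and values[i] >= min_ratio * top]


if __name__ == "__main__":
    fs, n = 16000, 64                 # hypothetical sample rate and frame length
    mics = [0.0, 0.05, 0.10, 0.15]    # hypothetical 4-mic linear array (m)
    true_cos = math.cos(math.radians(60.0))
    # Synthesize a 3-tone plane wave from 60 degrees: mic m leads by x_m*cos/c.
    sigs = [[sum(math.sin(2 * math.pi * f * (t / fs + x * true_cos / SPEED_OF_SOUND))
                 for f in (1000.0, 2000.0, 3000.0)) for t in range(n)] for x in mics]
    grid = list(range(181))           # candidate angles: 0..180 degrees, 1-degree steps
    resp = steered_response(sigs, mics, fs, grid)
    print([grid[i] for i in local_maxima(resp)])  # [60]
```

With several simultaneous sources, each would ideally appear as its own local maximum, which is why peak picking rather than a single arg-max suits multiple-source-location estimation.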

There are also mapping procedures other than grid search, whose main focus is to train the mapping function on recorded data of sources with known locations. As a result, the learned mapping function implicitly encodes the propagation model. In this survey, this type of mapping procedure is referred to as learning-based mapping. Such procedures are based on different training methodologies, such as neural networks [11,21,49], locally-linear regression [57], and manifold learning [33,51]. Further details of each mapping procedure are given in the relevant branches of the methodology classification presented in Section 4.
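The idea of training a mapping from recorded data of sources at known locations can be illustrated with k-nearest-neighbour regression. This is a simple stand-in chosen for the sketch, not one of the methods cited above. The calibration set here is simulated with the far-field model instead of being recorded, and the function name and parameters are hypothetical.

```python
import math


def knn_regress(train_features, train_angles, query, k=3):
    """Predict a source angle as the mean angle of the k nearest training samples.

    train_features[i] is a feature vector recorded with a source at the known
    angle train_angles[i]. Averaging angles assumes a range without wrap-around
    (e.g., 0..180 degrees for a microphone pair).
    """
    if not 1 <= k <= len(train_features):
        raise ValueError("k must be between 1 and the number of training samples")
    ranked = sorted(range(len(train_features)),
                    key=lambda i: math.dist(train_features[i], query))
    return sum(train_angles[i] for i in ranked[:k]) / k


if __name__ == "__main__":
    # Hypothetical calibration set: 1-D TDOA features simulated with the far-field
    # model (d = 0.2 m, c = 343 m/s) in place of real recordings.
    angles = list(range(0, 181, 10))
    feats = [[0.2 * math.cos(math.radians(a)) / 343.0] for a in angles]
    query = [0.2 * math.cos(math.radians(47.0)) / 343.0]
    print(knn_regress(feats, angles, query, k=2))  # 45.0: mean of the 40 and 50 degree samples
```

No propagation model appears in `knn_regress` itself; whatever relation links features to angles is captured only through the training pairs, which is the sense in which a learned mapping encodes the model implicitly.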

¹ External ears.
