RDMA over Commodity Ethernet at Scale

Chuanxiong Guo, Haitao Wu, Zhong Deng, Gaurav Soni, Jianxi Ye, Jitendra Padhye, Marina Lipshteyn

Microsoft

{chguo, hwu, zdeng, gasoni, jiye, padhye, malipsht}@microsoft.com

ABSTRACT

Over the past one and half years, we have been using

RDMA over commodity Ethernet (RoCEv2) to support

some of Microsoft’s highly-reliable, latency-sensitive ser-

vices. This paper describes the challenges we encoun-

tered during the process and the solutions we devised to

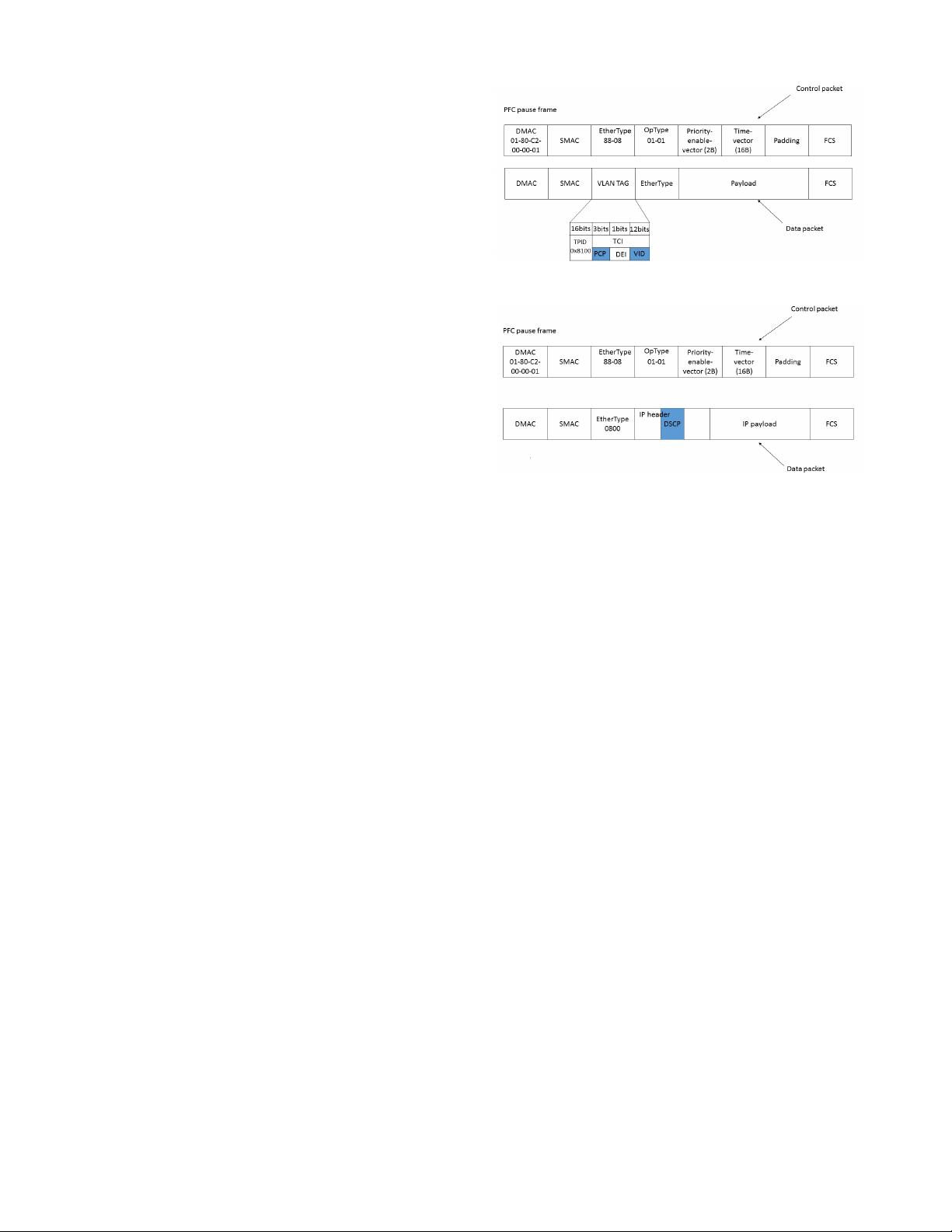

address them. In order to scale RoCEv2 beyond VLAN,

we have designed a DSCP-based priority flow-control

(PFC) mechanism to ensure large-scale deployment. We

have addressed the safety challenges brought by PFC-

induced deadlock (yes, it happened!), RDMA transport

livelock, and the NIC PFC pause frame storm problem.

We have also built the monitoring and management

systems to make sure RDMA works as expected. Our

experiences show that the safety and scalability issues

of running RoCEv2 at scale can all be addressed, and

RDMA can replace TCP for intra data center commu-

nications and achieve low latency, low CPU overhead,

and high throughput.

CCS Concepts

•Networks → Network protocol design; Network experimentation; Data center networks;

Keywords

RDMA; RoCEv2; PFC; PFC propagation; Deadlock

1. INTRODUCTION

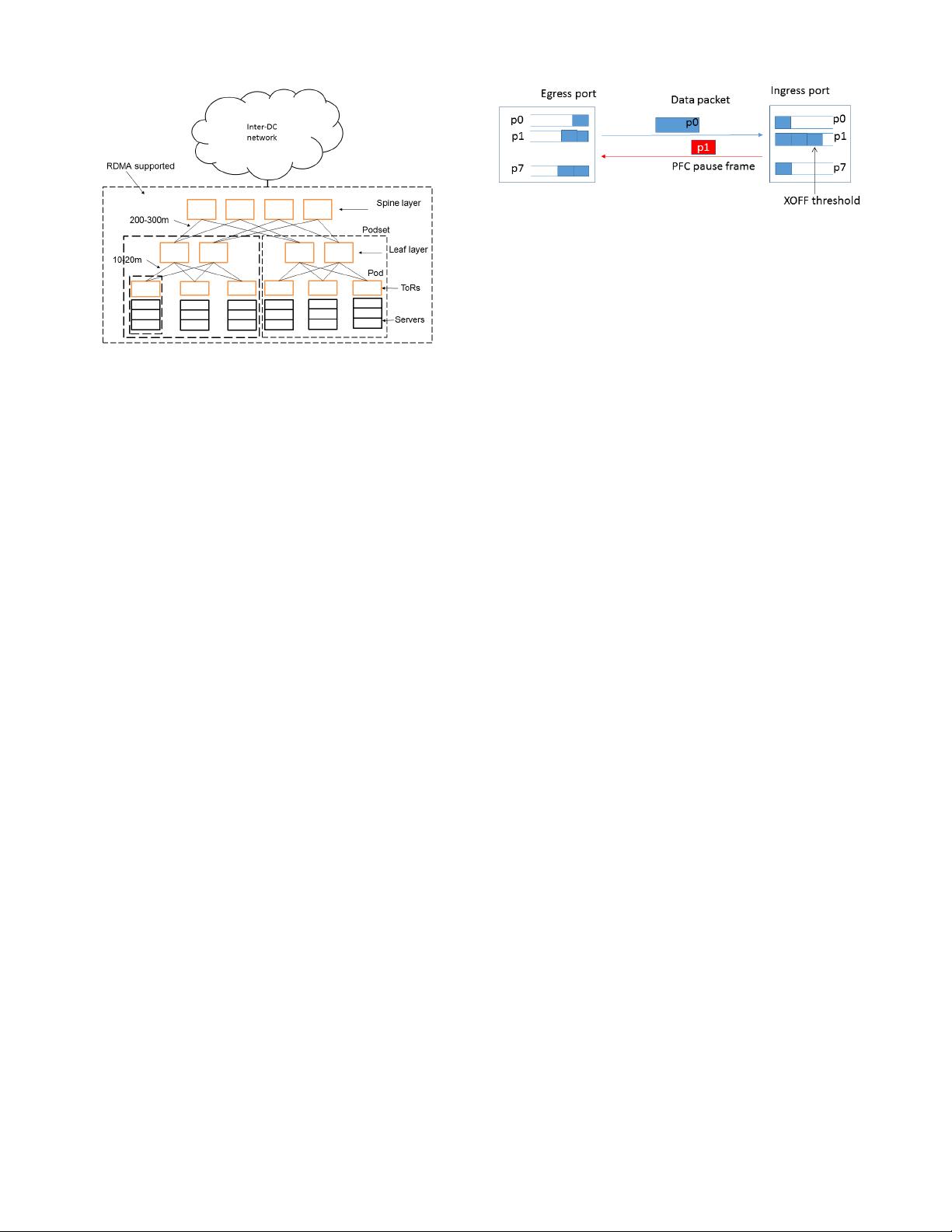

With the rapid growth of online services and cloud computing, large-scale data centers (DCs) are being built around the world. High-speed, scalable data center networks (DCNs) [1, 3, 19, 31] are needed to connect the servers in a DC. DCNs are built from commodity Ethernet switches and network interface cards (NICs). A state-of-the-art DCN must support several Gb/s or higher throughput between any two servers in a DC.

Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. Copyrights for components of this work owned by others than the author(s) must be honored. Abstracting with credit is permitted. To copy otherwise, or republish, to post on servers or to redistribute to lists, requires prior specific permission and/or a fee. Request permissions from permissions@acm.org.

SIGCOMM ’16, August 22 - 26, 2016, Florianopolis, Brazil

© 2016 Copyright held by the owner/author(s). Publication rights licensed to ACM. ISBN 978-1-4503-4193-6/16/08. . . $15.00

DOI: http://dx.doi.org/10.1145/2934872.2934908

TCP/IP is still the dominant transport/network stack in today's data center networks. However, it is increasingly clear that the traditional TCP/IP stack cannot meet the demands of the new generation of DC workloads [4, 9, 16, 40], for two reasons.

First, the CPU overhead of handling packets in the OS kernel remains high, despite enabling numerous hardware and software optimizations such as checksum offloading, large segment offload (LSO), receive-side scaling (RSS) and interrupt moderation. Measurements in our data centers show that sending at 40Gb/s using 8 TCP connections chews up 6% aggregate CPU time on a 32-core Intel Xeon E5-2690 Windows 2012R2 server. Receiving at 40Gb/s using 8 connections requires 12% aggregate CPU time. This high CPU overhead is unacceptable in modern data centers.
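As a back-of-the-envelope check, the percentages above can be converted into "cores' worth" of CPU. This sketch assumes that "aggregate CPU time" means a fraction of the total capacity of all 32 cores. The helper names `cores_consumed` and `cores_per_gbps` are hypothetical.

```python
# Back-of-the-envelope conversion of the measured TCP CPU overhead into
# "cores' worth" of work. Assumption (not stated in the paper): "aggregate
# CPU time" is a fraction of the total capacity of all 32 cores.

TOTAL_CORES = 32       # cores on the measured server, per the text
LINE_RATE_GBPS = 40    # throughput in the measurement, per the text

# Measured aggregate CPU utilization with 8 TCP connections, per the text.
MEASURED_FRACTION = {"send": 0.06, "receive": 0.12}


def cores_consumed(fraction: float, total_cores: int = TOTAL_CORES) -> float:
    """Fully busy cores equivalent to an aggregate utilization fraction."""
    return fraction * total_cores


def cores_per_gbps(fraction: float, rate_gbps: float = LINE_RATE_GBPS) -> float:
    """Cores' worth of CPU spent per Gb/s of TCP throughput."""
    return cores_consumed(fraction) / rate_gbps


if __name__ == "__main__":
    for direction, fraction in MEASURED_FRACTION.items():
        print(f"{direction}: {cores_consumed(fraction):.2f} cores, "
              f"{cores_per_gbps(fraction):.3f} cores per Gb/s")
```

Under that assumption, sending costs about 1.92 cores and receiving about 3.84 cores. That works out to roughly 0.05 and 0.1 cores per Gb/s respectively, and none of that CPU is available to the applications on the server.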

Second, many modern DC applications like Search are highly latency-sensitive [7, 15, 41]. TCP, however, cannot provide the needed low latency even when the average traffic load is moderate, for two reasons. First, the kernel software introduces latency that can be as high as tens of milliseconds [21]. Second, packet drops due to congestion, while rare, are not entirely absent in our data centers. This occurs because data center traffic is inherently bursty. TCP must recover from the losses via timeouts or fast retransmissions, and in both cases, application latency takes a hit.
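A toy drop-tail queue shows why burstiness, and not average load, causes these drops. In the model, two traffic patterns carry the same number of packets and therefore the same average load, but only the bursty pattern overflows the buffer. This is a minimal sketch under hypothetical parameters: a 20-packet buffer, a drain rate of 4 packets per tick, and bursts of 30 packets. None of these numbers come from the paper, and the model ignores shared buffering and everything else a real switch does.

```python
from dataclasses import dataclass


@dataclass
class PortStats:
    ticks: int = 0     # simulated time steps
    offered: int = 0   # packets that arrived at the port
    dropped: int = 0   # packets lost because the buffer was full


def simulate_drop_tail(arrivals, buffer_pkts, drain_per_tick):
    """Simulate one drop-tail switch egress queue (hypothetical model).

    arrivals: number of packets arriving in each tick.
    buffer_pkts: queue capacity, in packets.
    drain_per_tick: packets the egress link sends per tick.
    In each tick, arrivals are admitted first, then the link drains.
    """
    stats = PortStats()
    queue = 0
    for arriving in arrivals:
        stats.ticks += 1
        stats.offered += arriving
        accepted = min(arriving, buffer_pkts - queue)
        stats.dropped += arriving - accepted   # overflow is simply lost
        queue = max(0, queue + accepted - drain_per_tick)
    return stats


def average_load(stats, drain_per_tick):
    """Offered load as a fraction of link capacity over the whole run."""
    return stats.offered / (stats.ticks * drain_per_tick)


if __name__ == "__main__":
    BUFFER, DRAIN, TICKS = 20, 4, 100
    # Same 150 packets in total, delivered smoothly or in 5 bursts of 30.
    smooth = [2 if t % 2 == 0 else 1 for t in range(TICKS)]
    bursty = [30 if t % 20 == 0 else 0 for t in range(TICKS)]
    for name, pattern in (("smooth", smooth), ("bursty", bursty)):
        s = simulate_drop_tail(pattern, BUFFER, DRAIN)
        print(f"{name}: load={average_load(s, DRAIN):.3f}, "
              f"dropped={s.dropped}/{s.offered}")
```

In this model the smooth pattern never drops a packet. Each 30-packet burst, however, loses the 10 packets that do not fit in the buffer, even though the average load is only 37.5% of link capacity. TCP would then have to recover each of those losses.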

In this paper we summarize our experience in deploying RoCEv2 (RDMA over Converged Ethernet v2) [5], an RDMA (Remote Direct Memory Access) technology [6], to address the above-mentioned issues in Microsoft's data centers. RDMA is a method of accessing memory on a remote system without interrupting the processing of the CPU(s) on that system. RDMA is widely used in high-performance computing with Infiniband [6] as the infrastructure. RoCEv2 supports RDMA over Ethernet instead of Infiniband.
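To make "RDMA over Ethernet" concrete: RoCEv2 carries the InfiniBand transport headers inside ordinary UDP/IP packets (UDP destination port 4791), so commodity Ethernet switches can forward them. The sketch below packs a simplified 12-byte InfiniBand Base Transport Header (BTH) and totals the per-packet header overhead for an untagged IPv4 frame. The field layout follows our reading of the InfiniBand specification. The functions `pack_bth` and `rocev2_overhead_bytes` are hypothetical, as are the simplifications (migration, pad-count, and version bits left at zero). None of this is code from the paper.

```python
import struct

ROCEV2_UDP_DPORT = 4791  # IANA-registered UDP destination port for RoCEv2
RC_SEND_ONLY = 0x04      # BTH opcode: Reliable Connection, SEND Only

# Header/trailer sizes in bytes for an untagged Ethernet frame carrying IPv4
# without options (preamble and inter-frame gap are not counted).
ETH_HDR_LEN = 14
IPV4_HDR_LEN = 20
UDP_HDR_LEN = 8
BTH_LEN = 12       # InfiniBand Base Transport Header
ICRC_LEN = 4       # invariant CRC after the RDMA payload
ETH_FCS_LEN = 4


def pack_bth(opcode: int, dest_qp: int, psn: int, pkey: int = 0xFFFF,
             solicited: bool = False, ack_req: bool = False) -> bytes:
    """Pack a simplified BTH in network byte order (hypothetical helper).

    Migration, pad-count and transport-version bits, and the reserved
    byte, are left at zero in this sketch.
    """
    if not (0 <= dest_qp < 1 << 24 and 0 <= psn < 1 << 24):
        raise ValueError("dest_qp and psn are 24-bit fields")
    flags = 0x80 if solicited else 0   # SE is the top bit of byte 1
    ack = 0x80 if ack_req else 0       # AckReq is the top bit of byte 8
    header = struct.pack(
        "!BBHB3sB3s",
        opcode,
        flags,
        pkey,
        0,                             # reserved byte
        dest_qp.to_bytes(3, "big"),    # 24-bit destination queue pair
        ack,
        psn.to_bytes(3, "big"),        # 24-bit packet sequence number
    )
    assert len(header) == BTH_LEN
    return header


def rocev2_overhead_bytes() -> int:
    """Bytes added around each RDMA payload on the wire (IPv4, no VLAN)."""
    return (ETH_HDR_LEN + IPV4_HDR_LEN + UDP_HDR_LEN
            + BTH_LEN + ICRC_LEN + ETH_FCS_LEN)


if __name__ == "__main__":
    bth = pack_bth(RC_SEND_ONLY, dest_qp=0x12, psn=7, ack_req=True)
    print(bth.hex(), rocev2_overhead_bytes())
```

The outer Ethernet, IP, and UDP headers are standard. This is why switches can treat RoCEv2 packets like any other UDP traffic, while the BTH carries the RDMA transport state (queue pair, sequence number) that the NICs act on.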

Unlike TCP, RDMA needs a lossless network; i.e., there must be no packet loss due to buffer overflow at the switches. RoCEv2 uses PFC (Priority-based Flow Control) [14] for this purpose. PFC prevents buffer
