implementation, and operator error. We had to engineer the software and design operational proce dures

to robustly handle this wider set of failure modes.

• A real system is rarely specified precise ly. Even worse, the specification may change during the im-

plementation phase. Consequently, an implementation should be malleable. Finally, a system might

“fail” due to a misunderstanding that occurre d during its specification phase.

This paper discusses a selection of the algorithmic and engineering challenges we encountered in moving

Paxos from theo ry to practice. This exercise took more R&D efforts than a straightforward translation of

pseudo-code to C++ might suggest.

The rest of this paper is organized as follows. The next two sections expand on the motiva tio n for this

project and describe the general environment into which our system was built. We then provide a quick

refresher on Paxos. We divide our expe riences into three categorie s and discuss each in turn: algorithmic gaps

in the literature, software engineering challenges, a nd unexpected failures. We co nclude with meas urements

of our system, and some broader observations on the state of the art in our field.

2 Background

Chubby [1] is a fault-tolerant system a t Google that provides a distributed locking mechanism and stores

small files. Typically there is one Chubby instance, or “cell”, per data center. Several Google systems – such

as the Google Filesystem (GFS) [4] and Bigtable [2] – use Chubby for distributed coordinatio n a nd to store

a small amount of metadata.

Chubby achieves fa ult-to lerance through replication. A typical Chubby cell consists of five replicas,

running the same code, each running on a dedicated machine. Every Chubby object (e.g., a Chubby lock,

or file) is stored as an entry in a database. It is this database that is replicated. At any one time, one of

these replicas is considered to be the “ma ster”.

Chubby clients (such as GFS and Bigtable) contact a Chubby cell for service. The master replica serves

all Chubby requests. If a Chubby client contacts a replica that is not the master, the replica replies with

the master’s network addre ss. The Chubby client may then contact the master. If the mas ter fails, a new

master is automatically elected, which will then continue to serve tra ffic based on the contents of its local

copy of the replicated database. Thus, the replicated database ensures continuity of Chubby state ac ross

master failover.

The first version of Chubby was based on a commerc ial, third-party, fault-toler ant databas e; we will

refer to this databa se as “3DB” for the rest of this pap er. This database had a history of bugs related to

replication. In fact, as far as we know, the replication mechanism was not based on a proven replication

algorithm and we do not know if it is correct. Given the history of problems associated with tha t product

and the importance of Chubby, we eventually decided to replace 3DB with our own solution based on the

Paxos algorithm.

3 Architecture outline

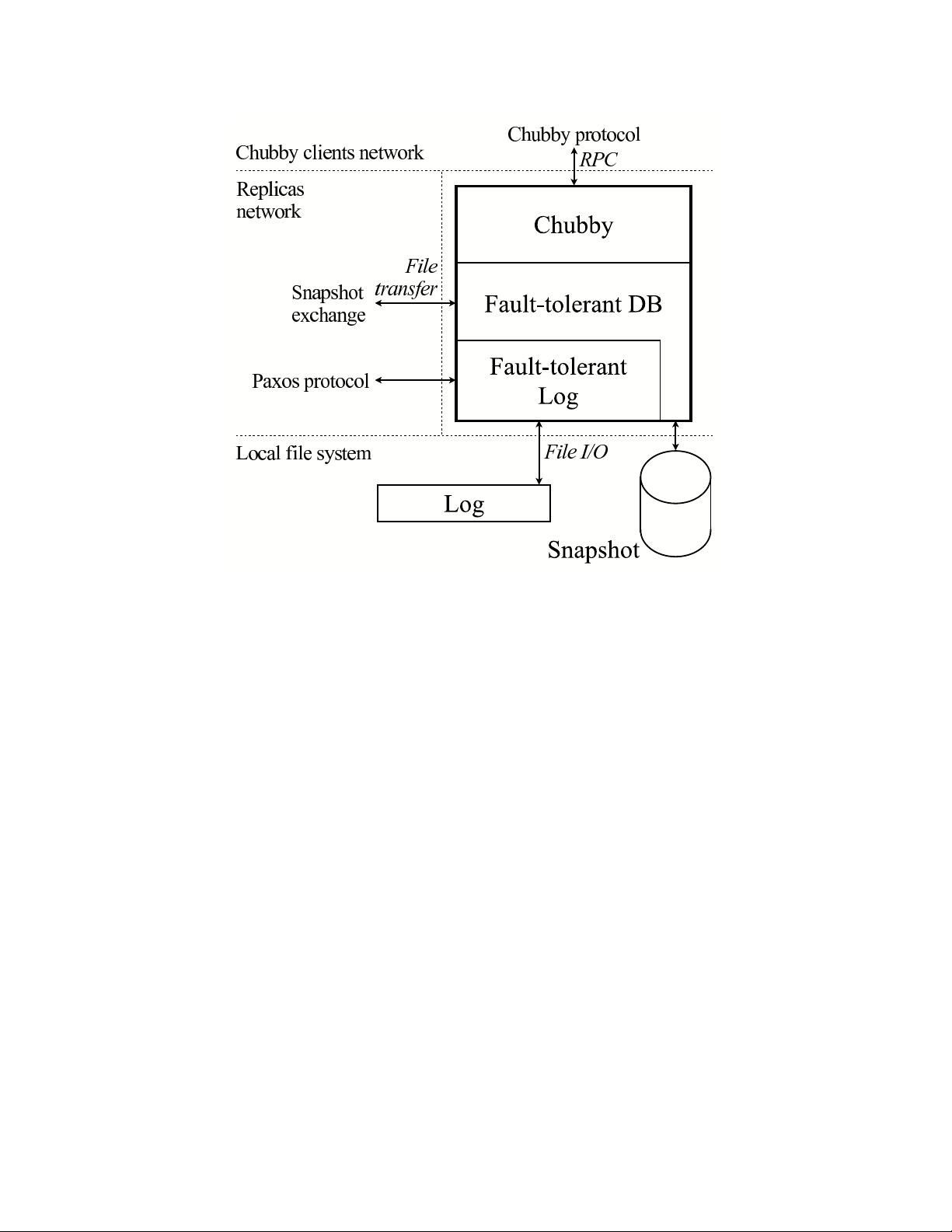

Figure 1 illustrates the architecture of a single Chubby replica. A fault-tolerant replicated log based on the

Paxos algorithm sits at the bottom of the protocol s tack. Each replica maintains a local copy of the log. The

Paxos a lgorithm is r un repeatedly as requir e d to ensure that all replicas have identical sequences of entries

in their local logs. Replicas communicate with each other through a Paxos-specific protocol.

The nex t layer is a fault-tolerant replicated database which includes a local copy of the databas e at each

replica. The database consists of a local snapshot and a replay-log of database operations. New database

operations are submitted to the replicated log. When a database operation appears at a replica, it is applied

on tha t replica’s local database copy.

Finally, Chubby uses the fault-tolerant database to store its sta te. Chubby clients communicate with a

single Chubby re plica through a Chubby-specific protocol.

2

cisco_vpn2014-08-26又是一篇关于paxos算法的经典论文,正在学习中,很不错。

cisco_vpn2014-08-26又是一篇关于paxos算法的经典论文,正在学习中,很不错。 baidu_225254132016-07-19不错,很好用,很经典!

baidu_225254132016-07-19不错,很好用,很经典! 我的内容管理

展开

我的内容管理

展开

我的资源

快来上传第一个资源

我的资源

快来上传第一个资源

我的收益 登录查看自己的收益

我的收益 登录查看自己的收益 我的积分

登录查看自己的积分

我的积分

登录查看自己的积分

我的C币

登录后查看C币余额

我的C币

登录后查看C币余额

我的收藏

我的收藏  我的下载

我的下载  下载帮助

下载帮助

前往需求广场,查看用户热搜

前往需求广场,查看用户热搜

信息提交成功

信息提交成功