A Self-Shadowing Algorithm for Dynamic Hair

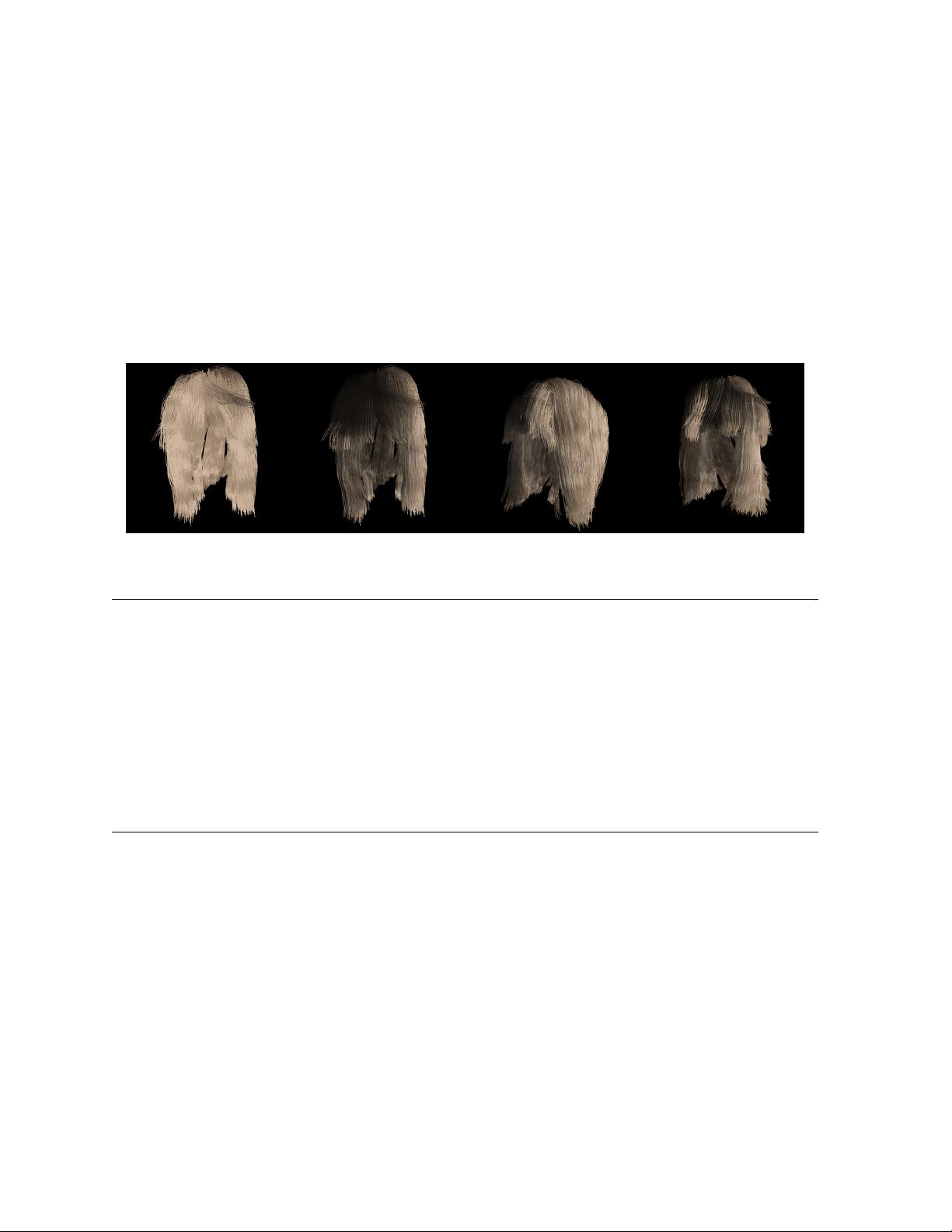

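### Key Techniques and Principles of the Self-Shadowing Algorithm for Dynamic Hair

#### Overview

This article describes a rendering algorithm that uses graphics hardware to compute realistic self-shadowing for dynamic hair in real time. Self-shadowing is one of the most important factors in the appearance of hair and fur. The authors propose a method that computes shadowed hair accurately at interactive rates, places no restriction on hairstyle, and allows the hair geometry to change over time.

#### Key Techniques and Principles

##### 1. Density Field and Density Clustering

The **density field** is the central concept. Unlike earlier methods, the algorithm treats hair geometry as a three-dimensional density field, which lets it sample hair density dynamically during rendering. The density field is sampled in real time by simple rasterization.

**Density clustering** makes the algorithm more efficient. The fragments produced by rasterization are clustered, and the clusters are used to estimate how hair density is distributed along each light ray. Clustering simplifies the later processing steps and keeps performance acceptable even with large amounts of dynamic hair.

##### 2. Building the Visibility Function

The visibility function is a core component of the algorithm. For each ray seen from the light source, the algorithm constructs a one-dimensional visibility function that describes how much of the light is blocked as it passes through the hair. This is what produces the self-shadowing effect.

Unlike traditional depth-map methods, the algorithm does not store a single depth value per ray. It stores a fractional visibility function instead, which captures the variation inside the hair volume in finer detail and yields more convincing shadows.

##### 3. Real-Time Rendering

A notable property of the algorithm is that it runs at interactive rates on consumer graphics hardware. Users can therefore watch self-shadowing update as the hair moves without sacrificing image quality, which matters for game development, virtual reality applications, and similar fields.

#### Example

The paper gives a concrete example: a hair model containing 100,000 line segments, rendered without and with self-shadowing (see Figure 1). With self-shadowing enabled, the hair looks more natural and shows more detail.

#### Conclusion

The self-shadowing algorithm offers an efficient and realistic solution for real-time rendering of dynamic hair. By modeling hair geometry as a three-dimensional density field, clustering the sampled density, and building a fractional visibility function per light ray, it achieves high-quality rendering at interactive rates on consumer graphics hardware. The result improves the realism of hair in virtual environments and is relevant to game development and film production.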
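To make the density clustering and visibility function steps more concrete, here is a minimal CPU-side sketch for a single light ray. It is not the authors' GPU implementation. Several things are assumptions made for illustration: the use of 1D k-means as the clustering method, the helper names `cluster_depths`, `build_visibility`, and `visibility`, the constant `opacity_per_fragment`, the uniform density inside each cluster, and the exponential falloff of light with accumulated density.

```python
import math


def cluster_depths(depths, k, iterations=10):
    """Group fragment depths along one light ray with 1D k-means (an assumed stand-in)."""
    if not depths:
        return []
    depths = sorted(depths)
    lo, hi = depths[0], depths[-1]
    # Start with centres spread evenly over the depth range.
    centres = [lo + (hi - lo) * (i + 0.5) / k for i in range(k)]
    clusters = []
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for d in depths:
            nearest = min(range(k), key=lambda i: abs(d - centres[i]))
            clusters[nearest].append(d)
        # Move each centre to the mean of its cluster; empty clusters keep their centre.
        centres = [sum(c) / len(c) if c else centres[i] for i, c in enumerate(clusters)]
    # Drop empty clusters and order the rest front to back.
    return sorted((c for c in clusters if c), key=min)


def build_visibility(depths, k=4, opacity_per_fragment=0.05):
    """Return (z_start, z_end, optical_depth) segments describing density along the ray."""
    segments = []
    for cluster in cluster_depths(depths, k):
        # Assumption: every fragment contributes the same amount of density.
        segments.append((min(cluster), max(cluster), len(cluster) * opacity_per_fragment))
    return segments


def visibility(segments, z):
    """Fraction of light that reaches depth z, in [0, 1]."""
    tau = 0.0
    for z_start, z_end, seg_tau in segments:
        if z >= z_end:
            tau += seg_tau  # the whole cluster lies in front of z
        elif z > z_start:
            # z is inside the cluster: density is assumed uniform across its extent
            tau += seg_tau * (z - z_start) / (z_end - z_start)
    return math.exp(-tau)


if __name__ == "__main__":
    # Hypothetical fragment depths in light space for one shadow-map pixel.
    fragment_depths = [0.10, 0.12, 0.11, 0.50, 0.52, 0.90]
    segments = build_visibility(fragment_depths, k=3)
    for z in (0.0, 0.3, 0.6, 1.0):
        print(z, visibility(segments, z))
```

The point of the sketch is the contrast with a depth map: a depth map would store only the nearest depth and return either fully lit or fully shadowed, whereas the per-ray segments give a value between 0 and 1 that decreases gradually with depth into the hair.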

flymagic01 (2012-11-19): A fairly useful reference for understanding hair rendering methods.

聊逍 (2012-10-17): A 2004 paper on hair rendering that uses a self-shadowing algorithm for dynamic hair... not worth 8 points.
